Zhenwei Shi

Beihang University

Remodeling Semantic Relationships in Vision-Language Fine-Tuning

Nov 13, 2025

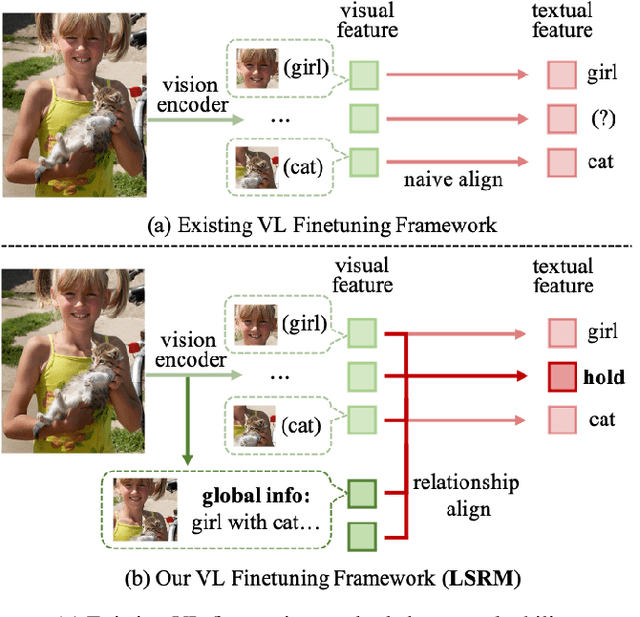

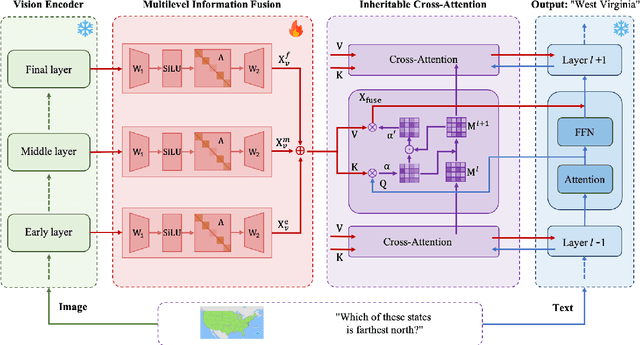

Abstract: Vision-language fine-tuning has emerged as an efficient paradigm for constructing multimodal foundation models. While textual context often highlights semantic relationships within an image, existing fine-tuning methods typically overlook this information when aligning vision and language, leading to suboptimal performance. To address this problem, we propose a method that improves multimodal alignment and fusion based on both semantics and relationships. Specifically, we first extract multilevel semantic features from different vision encoders to capture more visual cues about the relationships. Then, we learn to project the vision features so that related semantics, which are more likely to share relationships, are grouped together. Finally, we fuse the visual features with the textual features using inheritable cross-attention, where we globally remove redundant visual relationships by discarding vision-language feature pairs with low correlation. We evaluate the proposed method on eight foundation models and two downstream tasks, visual question answering and image captioning, and show that it outperforms all existing methods.
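The last step of the abstract above, discarding vision-language feature pairs with low correlation, can be illustrated with a small sketch. The abstract does not say how correlation is measured or how many pairs are kept, so the cosine similarity, the global ranking, and the `keep_ratio` parameter below are all assumptions made for illustration; the function names are hypothetical and this is not the authors' implementation.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0


def prune_pairs(visual_feats, text_feats, keep_ratio=0.5):
    """Rank every (visual, text) pair globally and keep the top fraction.

    Assumption: "correlation" is approximated by cosine similarity, and
    "globally" means one ranking over all pairs rather than per-token pruning.
    Returns a list of (visual_index, text_index, score) tuples.
    """
    scored = [
        (i, j, cosine(v, t))
        for i, v in enumerate(visual_feats)
        for j, t in enumerate(text_feats)
    ]
    # Highest-scoring pairs first; the low-correlation tail is discarded.
    scored.sort(key=lambda pair: pair[2], reverse=True)
    n_keep = max(1, int(len(scored) * keep_ratio))
    return scored[:n_keep]


# Toy features: three visual tokens and two text tokens in a 2-D space.
visual = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
text = [[1.0, 0.0], [0.0, 1.0]]
kept = prune_pairs(visual, text, keep_ratio=0.5)
```

In this toy case the orthogonal pairs score zero and fall out of the kept set, so only aligned or partially aligned pairs would be passed on to a fusion step.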

FoBa: A Foreground-Background co-Guided Method and New Benchmark for Remote Sensing Semantic Change Detection

Sep 19, 2025

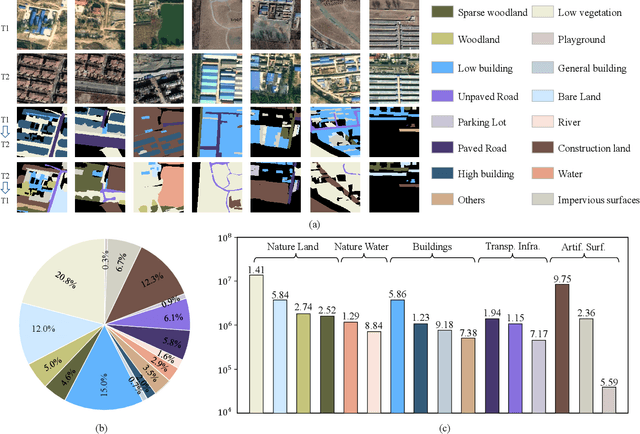

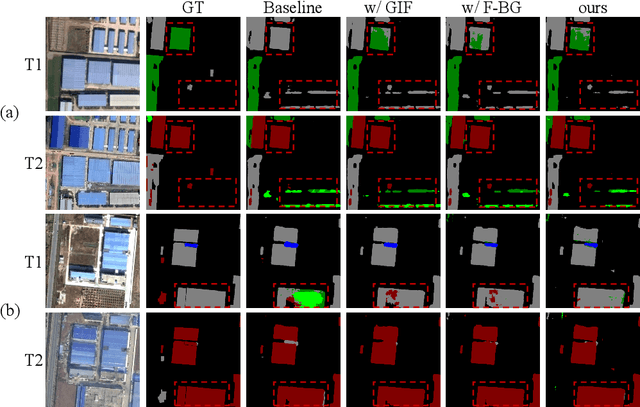

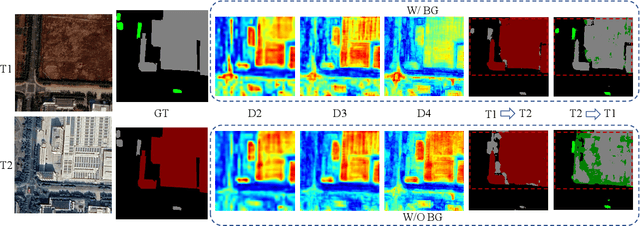

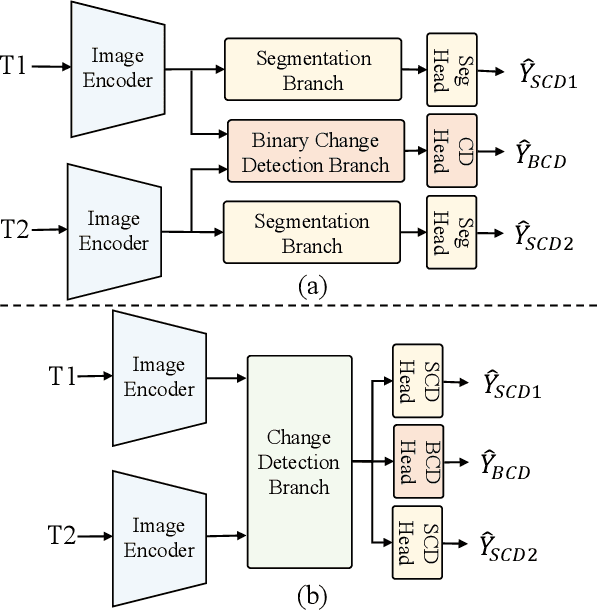

Abstract: Despite the remarkable progress achieved in remote sensing semantic change detection (SCD), two major challenges remain. At the data level, existing SCD datasets suffer from limited change categories, insufficient change types, and a lack of fine-grained class definitions, making them inadequate to fully support practical applications. At the methodological level, most current approaches underutilize change information, typically treating it as a post-processing step to enhance spatial consistency, which constrains further improvements in model performance. To address these issues, we construct a new benchmark for remote sensing SCD, LevirSCD. Focused on the Beijing area, the dataset covers 16 change categories and 210 specific change types, with more fine-grained class definitions (e.g., roads are divided into unpaved and paved roads). Furthermore, we propose a foreground-background co-guided SCD (FoBa) method, which leverages foregrounds that focus on regions of interest and backgrounds enriched with contextual information to guide the model collaboratively, thereby alleviating semantic ambiguity while enhancing its ability to detect subtle changes. Considering the requirements of bi-temporal interaction and spatial consistency in SCD, we introduce a Gated Interaction Fusion (GIF) module along with a simple consistency loss to further enhance the model's detection performance. Extensive experiments on three datasets (SECOND, JL1, and the proposed LevirSCD) demonstrate that FoBa achieves competitive results compared to current SOTA methods, with improvements of 1.48%, 3.61%, and 2.81% in the SeK metric, respectively. Our code and dataset are available at https://github.com/zmoka-zht/FoBa.

Preserving Domain Generalization in Fine-Tuning via Joint Parameter Selection

Aug 23, 2025

Abstract: Domain generalization seeks to develop models trained on a limited set of source domains that are capable of generalizing effectively to unseen target domains. While the predominant approach leverages large-scale pre-trained vision models as initialization, recent studies have highlighted that full fine-tuning can compromise the intrinsic generalization capabilities of these models. To address this limitation, parameter-efficient adaptation strategies have emerged, wherein only a subset of model parameters is selectively fine-tuned, thereby balancing task adaptation with the preservation of generalization. Motivated by this paradigm, we introduce Joint Parameter Selection (JPS), a novel method that restricts updates to a small, sparse subset of parameters, thereby retaining and harnessing the generalization strength of pre-trained models. Theoretically, we establish a generalization error bound that explicitly accounts for the sparsity of parameter updates, thereby providing a principled justification for selective fine-tuning. Practically, we design a selection mechanism employing dual operators to identify and update parameters exhibiting consistent and significant gradients across all source domains. Extensive benchmark experiments demonstrate that JPS achieves superior performance compared to state-of-the-art domain generalization methods, substantiating both the efficiency and efficacy of the proposed approach.
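The selection idea in the abstract above, updating only parameters whose gradients are consistent and significant across all source domains, can be sketched as follows. The abstract does not define the two operators, so this sketch assumes that "consistent" means the gradient has the same sign in every domain and "significant" means its smallest absolute value exceeds a threshold. The function names and `magnitude_threshold` are hypothetical, and this should not be read as the JPS algorithm itself.

```python
def select_parameters(domain_grads, magnitude_threshold=0.1):
    """Pick parameter indices with sign-consistent, large gradients in every domain.

    domain_grads: one gradient vector per source domain, all the same length.
    Assumption: consistency = identical sign across domains; significance =
    minimum absolute gradient above magnitude_threshold.
    """
    n_params = len(domain_grads[0])
    selected = []
    for k in range(n_params):
        values = [grad[k] for grad in domain_grads]
        consistent = all(v > 0 for v in values) or all(v < 0 for v in values)
        significant = min(abs(v) for v in values) > magnitude_threshold
        if consistent and significant:
            selected.append(k)
    return selected


def masked_sgd_step(params, domain_grads, selected, lr=0.01):
    """Apply a plain SGD step with the domain-averaged gradient to selected indices only."""
    n_domains = len(domain_grads)
    mean_grad = [
        sum(grad[k] for grad in domain_grads) / n_domains
        for k in range(len(params))
    ]
    chosen = set(selected)
    return [
        p - lr * mean_grad[k] if k in chosen else p  # unselected parameters stay frozen
        for k, p in enumerate(params)
    ]


# Toy gradients for four parameters from three source domains.
grads = [
    [0.5, -0.2, 0.05, 0.3],
    [0.4, 0.3, 0.04, 0.6],
    [0.6, -0.1, 0.06, 0.2],
]
selected = select_parameters(grads)
params = [1.0, 1.0, 1.0, 1.0]
updated = masked_sgd_step(params, grads, selected, lr=0.1)
```

Here the second parameter is rejected because its gradient changes sign between domains, and the third because its gradient is small everywhere, so both keep their pre-trained values after the step.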

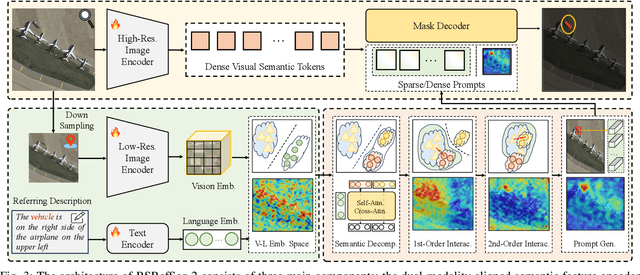

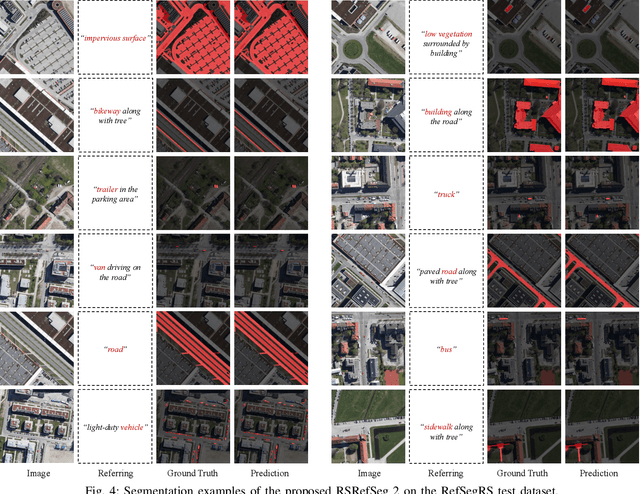

RSRefSeg 2: Decoupling Referring Remote Sensing Image Segmentation with Foundation Models

Jul 08, 2025

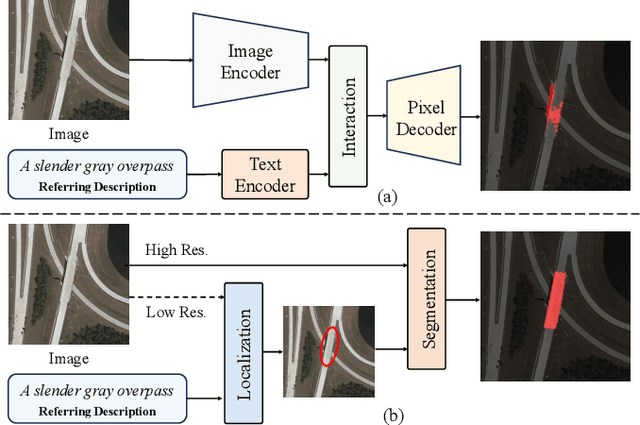

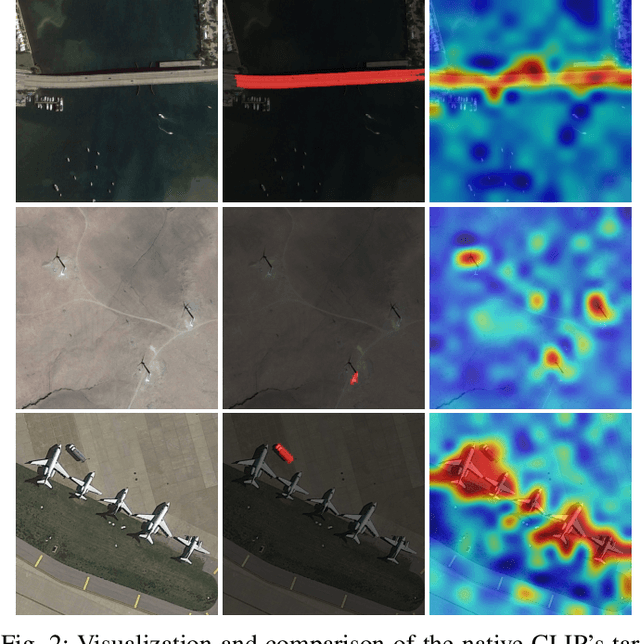

Abstract: Referring Remote Sensing Image Segmentation provides a flexible and fine-grained framework for remote sensing scene analysis via vision-language collaborative interpretation. Current approaches predominantly utilize a three-stage pipeline encompassing dual-modal encoding, cross-modal interaction, and pixel decoding. These methods demonstrate significant limitations in managing complex semantic relationships and achieving precise cross-modal alignment, largely due to their coupled processing mechanism that conflates target localization with boundary delineation. This architectural coupling amplifies error propagation under semantic ambiguity while restricting model generalizability and interpretability. To address these issues, we propose RSRefSeg 2, a decoupling paradigm that reformulates the conventional workflow into a collaborative dual-stage framework: coarse localization followed by fine segmentation. RSRefSeg 2 integrates CLIP's cross-modal alignment strength with SAM's segmentation generalizability through strategic foundation model collaboration. Specifically, CLIP is employed as the dual-modal encoder to activate target features within its pre-aligned semantic space and generate localization prompts. To mitigate CLIP's misactivation in multi-entity scenarios described by referring texts, a cascaded second-order prompter is devised, which enhances precision through implicit reasoning by decomposing text embeddings into complementary semantic subspaces. These optimized semantic prompts subsequently direct SAM to generate pixel-level refined masks, thereby completing the semantic transmission pipeline. Extensive experiments on RefSegRS, RRSIS-D, and RISBench demonstrate that RSRefSeg 2 surpasses contemporary methods in segmentation accuracy (roughly +3% gIoU) and complex semantic interpretation. Code is available at: https://github.com/KyanChen/RSRefSeg2.

Fine-grained Hierarchical Crop Type Classification from Integrated Hyperspectral EnMAP Data and Multispectral Sentinel-2 Time Series: A Large-scale Dataset and Dual-stream Transformer Method

Jun 09, 2025

Abstract: Fine-grained crop type classification serves as the fundamental basis for large-scale crop mapping and plays a vital role in ensuring food security. It requires simultaneous capture of both phenological dynamics (obtained from multi-temporal satellite data like Sentinel-2) and subtle spectral variations (demanding nanometer-scale spectral resolution from hyperspectral imagery). Research combining these two modalities remains scarce because hyperspectral data are difficult to acquire and crop type annotation is costly. To address these issues, we construct a hierarchical hyperspectral crop dataset (H2Crop) by integrating 30m-resolution EnMAP hyperspectral data with Sentinel-2 time series. With over one million annotated field parcels organized in a four-tier crop taxonomy, H2Crop establishes a vital benchmark for fine-grained agricultural crop classification and hyperspectral image processing. We propose a dual-stream Transformer architecture that synergistically processes these modalities. It coordinates two specialized pathways: a spectral-spatial Transformer extracts fine-grained signatures from hyperspectral EnMAP data, while a temporal Swin Transformer extracts crop growth patterns from Sentinel-2 time series. The designed hierarchical classification head with hierarchical fusion then simultaneously delivers multi-level crop type classification across all taxonomic tiers. Experiments demonstrate that adding hyperspectral EnMAP data to Sentinel-2 time series yields an average F1-score improvement of 4.2% (peaking at 6.3%). Extensive comparisons also confirm our method's higher accuracy over existing deep learning approaches for crop type classification and the consistent benefits of hyperspectral data across varying temporal windows and crop change scenarios. Codes and dataset are available at https://github.com/flyakon/H2Crop.

A Novel Large-scale Crop Dataset and Dual-stream Transformer Method for Fine-grained Hierarchical Crop Classification from Integrated Hyperspectral EnMAP Data and Multispectral Sentinel-2 Time Series

Jun 06, 2025

Abstract: Fine-grained crop classification is crucial for precision agriculture and food security monitoring. It requires simultaneous capture of both phenological dynamics (obtained from multi-temporal satellite data like Sentinel-2) and subtle spectral variations (demanding nanometer-scale spectral resolution from hyperspectral imagery). Research combining these two modalities remains scarce because hyperspectral data are difficult to acquire and crop type annotation is costly. To address these issues, we construct a hierarchical hyperspectral crop dataset (H2Crop) by integrating 30m-resolution EnMAP hyperspectral data with Sentinel-2 time series. With over one million annotated field parcels organized in a four-tier crop taxonomy, H2Crop establishes a vital benchmark for fine-grained agricultural crop classification and hyperspectral image processing. We propose a dual-stream Transformer architecture that synergistically processes these modalities. It coordinates two specialized pathways: a spectral-spatial Transformer extracts fine-grained signatures from hyperspectral EnMAP data, while a temporal Swin Transformer extracts crop growth patterns from Sentinel-2 time series. The designed hierarchical classification heads with hierarchical fusion then simultaneously deliver multi-level classification across all taxonomic tiers. Experiments demonstrate that adding hyperspectral EnMAP data to Sentinel-2 time series yields an average F1-score improvement of 4.2% (peaking at 6.3%). Extensive comparisons also confirm our method's higher accuracy over existing deep learning approaches for crop type classification and the consistent benefits of hyperspectral data across varying temporal windows and crop change scenarios. Codes and dataset will be available at https://github.com/flyakon/H2Crop and www.glass.hku.hk

Keywords: Crop type classification, precision agriculture, remote sensing, deep learning, hyperspectral data, Sentinel-2 time series, fine-grained crops

SeG-SR: Integrating Semantic Knowledge into Remote Sensing Image Super-Resolution via Vision-Language Model

May 29, 2025

Abstract: High-resolution (HR) remote sensing imagery plays a vital role in a wide range of applications, including urban planning and environmental monitoring. However, due to limitations in sensors and data transmission links, the images acquired in practice often suffer from resolution degradation. Remote Sensing Image Super-Resolution (RSISR) aims to reconstruct HR images from low-resolution (LR) inputs, providing a cost-effective and efficient alternative to direct HR image acquisition. Existing RSISR methods primarily focus on low-level characteristics in pixel space, while neglecting the high-level understanding of remote sensing scenes. This may lead to semantically inconsistent artifacts in the reconstructed results. Motivated by this observation, our work aims to explore the role of high-level semantic knowledge in improving RSISR performance. We propose a Semantic-Guided Super-Resolution framework, SeG-SR, which leverages Vision-Language Models (VLMs) to extract semantic knowledge from input images and uses it to guide the super-resolution (SR) process. Specifically, we first design a Semantic Feature Extraction Module (SFEM) that utilizes a pretrained VLM to extract semantic knowledge from remote sensing images. Next, we propose a Semantic Localization Module (SLM), which derives a series of semantic guidance from the extracted semantic knowledge. Finally, we develop a Learnable Modulation Module (LMM) that uses semantic guidance to modulate the features extracted by the SR network, effectively incorporating high-level scene understanding into the SR pipeline. We validate the effectiveness and generalizability of SeG-SR through extensive experiments: SeG-SR achieves state-of-the-art performance on two datasets and consistently delivers performance improvements across various SR architectures. Codes can be found at https://github.com/Mr-Bamboo/SeG-SR.

AgriFM: A Multi-source Temporal Remote Sensing Foundation Model for Crop Mapping

May 28, 2025

Abstract: Accurate crop mapping fundamentally relies on modeling multi-scale spatiotemporal patterns, where spatial scales range from individual field textures to landscape-level context, and temporal scales capture both short-term phenological transitions and full growing-season dynamics. Transformer-based remote sensing foundation models (RSFMs) offer promising potential for crop mapping due to their innate ability for unified spatiotemporal processing. However, current RSFMs remain suboptimal for crop mapping: they either employ fixed spatiotemporal windows that ignore the multi-scale nature of crop systems or completely disregard temporal information by focusing solely on spatial patterns. To bridge these gaps, we present AgriFM, a multi-source remote sensing foundation model specifically designed for agricultural crop mapping. Our approach begins by establishing the necessity of simultaneous hierarchical spatiotemporal feature extraction, leading to the development of a modified Video Swin Transformer architecture where temporal down-sampling is synchronized with spatial scaling operations. This modified backbone enables efficient unified processing of long time-series satellite inputs. AgriFM leverages temporally rich data streams from three satellite sources including MODIS, Landsat-8/9 and Sentinel-2, and is pre-trained on a global representative dataset comprising over 25 million image samples supervised by land cover products. The resulting framework incorporates a versatile decoder architecture that dynamically fuses these learned spatiotemporal representations, supporting diverse downstream tasks. Comprehensive evaluations demonstrate AgriFM's superior performance over conventional deep learning approaches and state-of-the-art general-purpose RSFMs across all downstream tasks. Codes will be available at https://github.com/flyakon/AgriFM.

Multitemporal Latent Dynamical Framework for Hyperspectral Images Unmixing

May 27, 2025

Abstract: Multitemporal hyperspectral unmixing can capture the dynamical evolution of materials. Despite this capability, current methods emphasize the variability of endmembers while neglecting the dynamics of abundances, which motivates our adoption of neural ordinary differential equations to model abundances over time. However, this approach faces two challenges: the inherent complexity of defining, modeling, and solving the problem, and the absence of theoretical support. To address these challenges, we propose a multitemporal latent dynamical (MiLD) unmixing framework that captures the dynamical evolution of materials with theoretical validation. MiLD consists of a problem definition, a mathematical model, a solution algorithm, and theoretical support. We define the multitemporal unmixing problem using ordinary differential equations and latent variables. We convert the problem into a mathematical model through dynamical discretization approaches, which describe the discreteness of the observed image sequence with mathematical expansions. We propose an algorithm that solves the problem and captures the dynamics of materials by approximating abundance evolution with neural networks. Furthermore, we provide theoretical support by validating crucial properties through consistency, convergence, and stability theorems. The major contributions of MiLD include defining the problem with ordinary differential equations, modeling it with a dynamical discretization approach, solving it with a multitemporal unmixing algorithm, and presenting theoretical support. Experiments on both synthetic and real datasets validate the utility of our work.
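To make the idea of abundances evolving under an ordinary differential equation more concrete, here is a minimal sketch that discretizes a toy abundance ODE with forward Euler steps and renders each time step through the standard linear mixing model. It is only an illustration: the abstract describes a learned neural network for the dynamics, whereas this sketch substitutes a hand-written relaxation rule `rate * (target - a)`, and the function names, endmember values, and step sizes are all hypothetical.

```python
def euler_abundances(a0, target, rate, step, n_steps):
    """Integrate da/dt = rate * (target - a) with forward Euler.

    Assumption: this fixed rule stands in for a learned dynamics function.
    If a0 and target each sum to one and step * rate <= 1, every iterate
    stays non-negative and sums to one.
    """
    trajectory = [list(a0)]
    a = list(a0)
    for _ in range(n_steps):
        a = [ai + step * rate * (ti - ai) for ai, ti in zip(a, target)]
        trajectory.append(a)
    return trajectory


def mix(endmembers, abundances):
    """Linear mixing model: pixel spectrum = sum_j abundance_j * endmember_j."""
    n_bands = len(endmembers[0])
    return [
        sum(a * e[band] for a, e in zip(abundances, endmembers))
        for band in range(n_bands)
    ]


# Two materials observed in three spectral bands (made-up reflectances).
endmembers = [[0.1, 0.4, 0.8], [0.9, 0.5, 0.2]]
traj = euler_abundances(a0=[1.0, 0.0], target=[0.25, 0.75],
                        rate=1.0, step=0.5, n_steps=10)
# One simulated pixel spectrum per time step.
pixels = [mix(endmembers, a) for a in traj]
```

Because the right-hand side sums to zero across materials, each Euler step preserves the sum-to-one constraint without an explicit projection, which is one reason this toy rule is convenient for illustration.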

MFogHub: Bridging Multi-Regional and Multi-Satellite Data for Global Marine Fog Detection and Forecasting

May 15, 2025

Abstract: Deep learning approaches for marine fog detection and forecasting have outperformed traditional methods, demonstrating significant scientific and practical importance. However, the limited availability of open-source datasets remains a major challenge. Existing datasets, often focused on a single region or satellite, restrict the ability to evaluate model performance across diverse conditions and hinder the exploration of intrinsic marine fog characteristics. To address these limitations, we introduce MFogHub, the first multi-regional and multi-satellite dataset to integrate annotated marine fog observations from 15 coastal fog-prone regions and six geostationary satellites, comprising over 68,000 high-resolution samples. By encompassing diverse regions and satellite perspectives, MFogHub facilitates rigorous evaluation of both detection and forecasting methods under varying conditions. Extensive experiments with 16 baseline models demonstrate that MFogHub can reveal generalization fluctuations due to regional and satellite discrepancy, while also serving as a valuable resource for the development of targeted and scalable fog prediction techniques. Through MFogHub, we aim to advance both the practical monitoring and scientific understanding of marine fog dynamics on a global scale. The dataset and code are at https://github.com/kaka0910/MFogHub.
