Yiwei He

RAIDX: A Retrieval-Augmented Generation and GRPO Reinforcement Learning Framework for Explainable Deepfake Detection

Aug 06, 2025

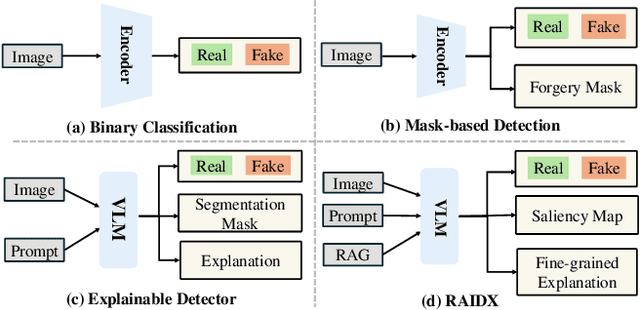

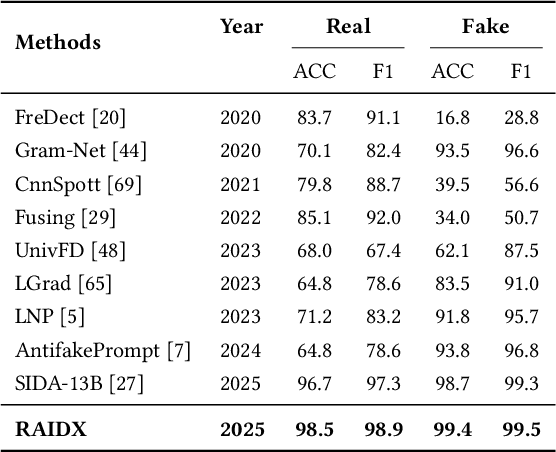

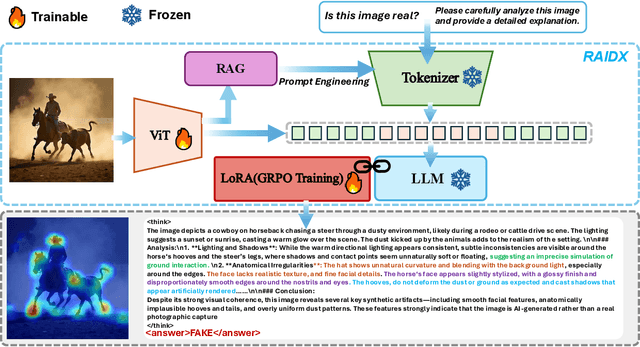

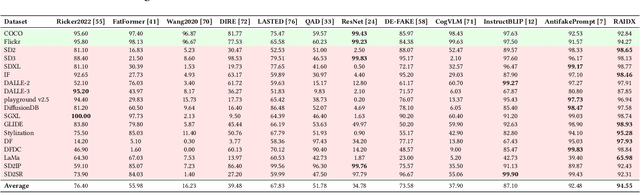

Abstract: The rapid advancement of AI-generation models has enabled the creation of hyperrealistic imagery, posing ethical risks through widespread misinformation. Current deepfake detection methods, categorized as face-specific detectors or general AI-generated detectors, lack transparency because they frame detection as a classification task without explaining decisions. While several LLM-based approaches offer explainability, they suffer from coarse-grained analyses and dependency on labor-intensive annotations. This paper introduces RAIDX (Retrieval-Augmented Image Deepfake Detection and Explainability), a novel deepfake detection framework integrating Retrieval-Augmented Generation (RAG) and Group Relative Policy Optimization (GRPO) to enhance detection accuracy and decision explainability. Specifically, RAIDX leverages RAG to incorporate external knowledge for improved detection accuracy and employs GRPO to autonomously generate fine-grained textual explanations and saliency maps, eliminating the need for extensive manual annotations. Experiments on multiple benchmarks demonstrate RAIDX's effectiveness in distinguishing real from fake images and in providing interpretable rationales as both textual descriptions and saliency maps, achieving state-of-the-art detection performance while advancing transparency in deepfake identification. RAIDX represents the first unified framework to synergize RAG and GRPO, addressing critical gaps in accuracy and explainability. Our code and models will be publicly available.
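The abstract does not spell out how GRPO is applied, so the following is only a minimal sketch of the group-relative advantage that gives GRPO its name: several candidate outputs are sampled for the same input, and each reward is normalized against the mean and standard deviation of its own group. The function name, the reward values, and the use of the population standard deviation are assumptions made for illustration and are not taken from RAIDX.

```python
import statistics

def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against the mean and std of its own group.

    `eps` guards against division by zero when all rewards are equal.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std: an assumption of this sketch
    return [(r - mean) / (std + eps) for r in rewards]

# Hypothetical rewards for four explanations sampled for the same image
# (e.g. 1.0 when the real/fake verdict is correct, 0.0 otherwise).
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
print(advantages)
```

Outputs that score above their group's average receive a positive advantage and the rest a negative one, so no separately trained value model is needed to form the baseline.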

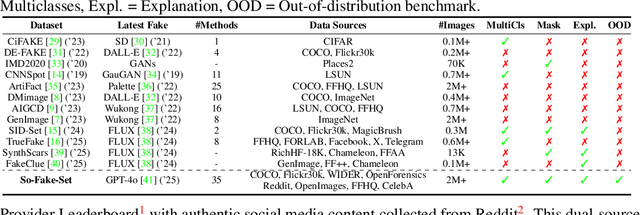

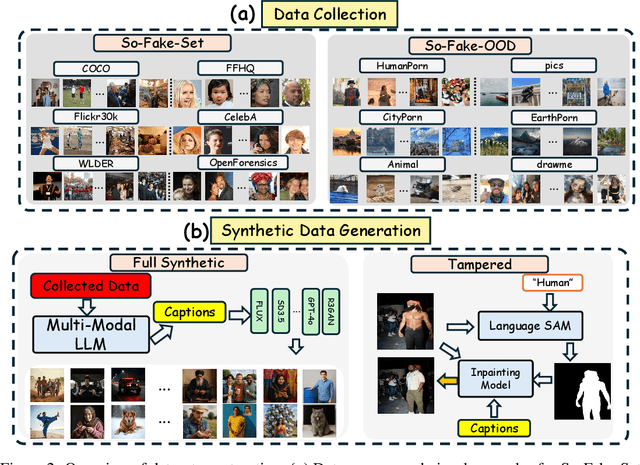

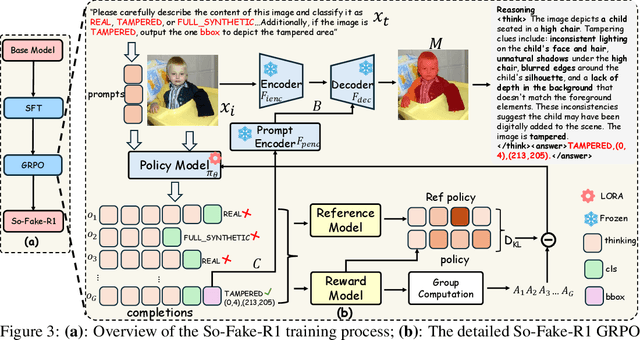

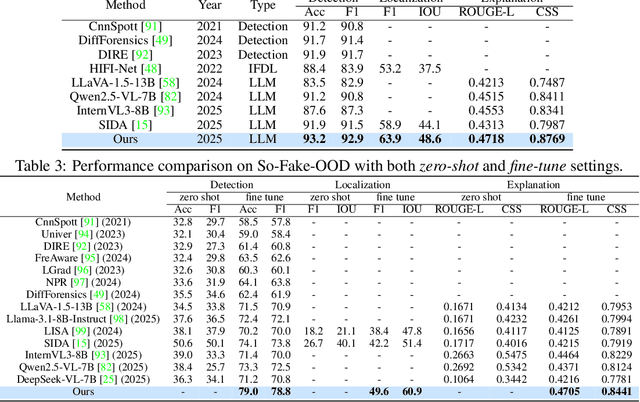

So-Fake: Benchmarking and Explaining Social Media Image Forgery Detection

May 24, 2025

Abstract: Recent advances in AI-powered generative models have enabled the creation of increasingly realistic synthetic images, posing significant risks to information integrity and public trust on social media platforms. While robust detection frameworks and diverse, large-scale datasets are essential to mitigate these risks, existing academic efforts remain limited in scope: current datasets lack the diversity, scale, and realism required for social media contexts, while detection methods struggle with generalization to unseen generative technologies. To bridge this gap, we introduce So-Fake-Set, a comprehensive social media-oriented dataset with over 2 million high-quality images, diverse generative sources, and photorealistic imagery synthesized using 35 state-of-the-art generative models. To rigorously evaluate cross-domain robustness, we establish a novel and large-scale (100K) out-of-domain benchmark (So-Fake-OOD) featuring synthetic imagery from commercial models explicitly excluded from the training distribution, creating a realistic testbed for evaluating real-world performance. Leveraging these resources, we present So-Fake-R1, an advanced vision-language framework that employs reinforcement learning for highly accurate forgery detection, precise localization, and explainable inference through interpretable visual rationales. Extensive experiments show that So-Fake-R1 outperforms the second-best method, with a 1.3% gain in detection accuracy and a 4.5% increase in localization IoU. By integrating a scalable dataset, a challenging OOD benchmark, and an advanced detection framework, this work establishes a new foundation for social media-centric forgery detection research. The code, models, and datasets will be released publicly.
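For readers unfamiliar with the localization metric cited above, intersection over union (IoU) is the number of pixels marked as forged in both the predicted and the ground-truth mask, divided by the number marked in either. The sketch below uses small hand-written masks; the function name, the mask values, and the convention of returning 1.0 for two empty masks are illustrative assumptions, not details from the paper.

```python
def mask_iou(pred, target):
    """IoU of two binary masks given as equally sized 2D lists of 0/1."""
    intersection = 0
    union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    # Two empty masks agree perfectly; returning 1.0 here is a convention.
    return intersection / union if union else 1.0

# Hypothetical 3x3 masks: 1 marks a pixel labeled as forged.
pred = [[0, 1, 1],
        [0, 1, 1],
        [0, 0, 0]]
target = [[0, 0, 1],
          [0, 1, 1],
          [0, 0, 1]]
iou = mask_iou(pred, target)
print(iou)  # 3 shared pixels out of 5 marked in either mask
```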

BusterX: MLLM-Powered AI-Generated Video Forgery Detection and Explanation

May 19, 2025

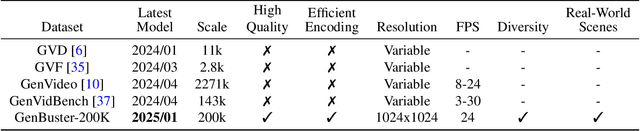

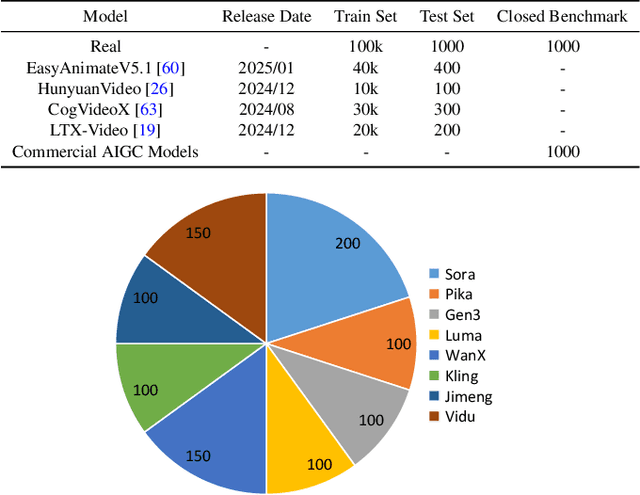

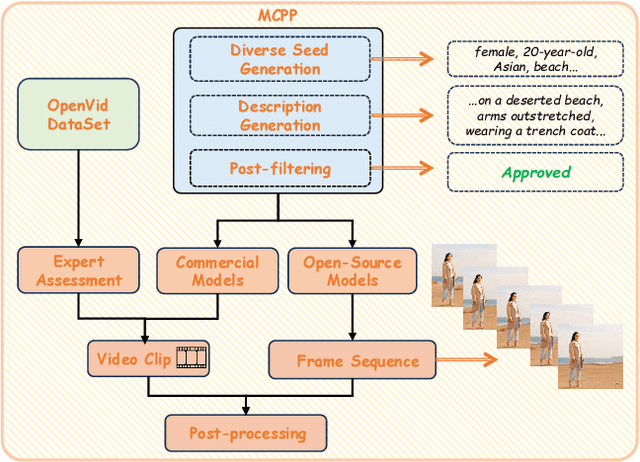

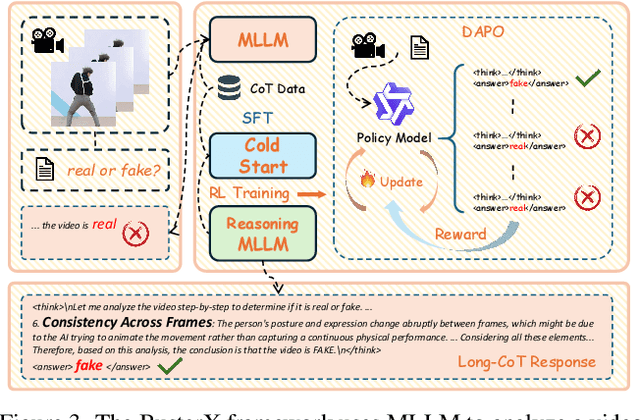

Abstract: Advances in AI generative models facilitate super-realistic video synthesis, amplifying misinformation risks via social media and eroding trust in digital content. Several research works have explored new deepfake detection methods on AI-generated images to alleviate these risks. However, with the fast development of video generation models, such as Sora and WanX, there is currently a lack of large-scale, high-quality AI-generated video datasets for forgery detection. In addition, existing detection approaches predominantly treat the task as binary classification, lacking explainability in model decision-making and failing to provide actionable insights or guidance for the public. To address these challenges, we propose GenBuster-200K, a large-scale AI-generated video dataset featuring 200K high-resolution video clips, a diverse range of the latest generative techniques, and real-world scenes. We further introduce BusterX, a novel AI-generated video detection and explanation framework leveraging a multimodal large language model (MLLM) and reinforcement learning for authenticity determination and explainable rationale. To our knowledge, GenBuster-200K is the first large-scale, high-quality AI-generated video dataset that incorporates the latest generative techniques for real-world scenarios. BusterX is the first framework to integrate MLLM with reinforcement learning for explainable AI-generated video detection. Extensive comparisons with state-of-the-art methods and ablation studies validate the effectiveness and generalizability of BusterX. The code, models, and datasets will be released.

Probabilistic Segmentation for Robust Field of View Estimation

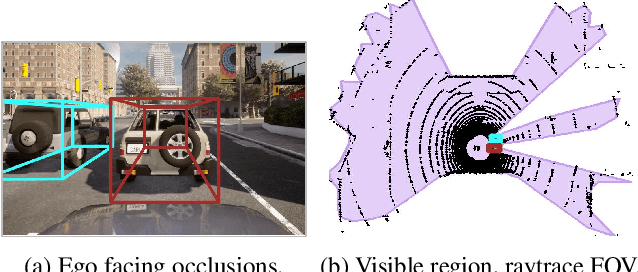

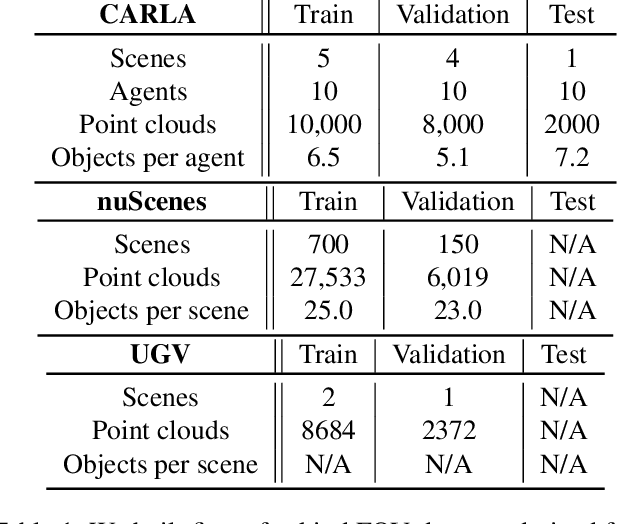

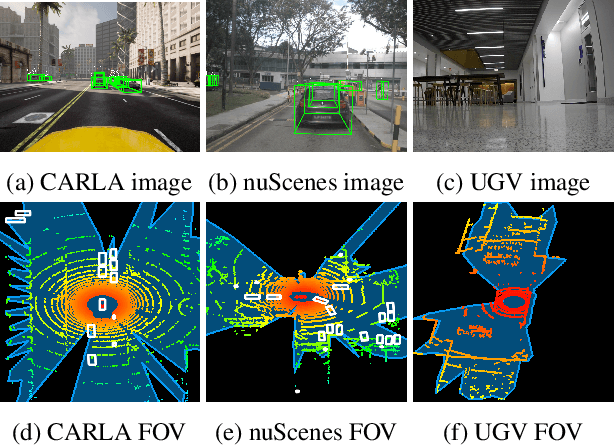

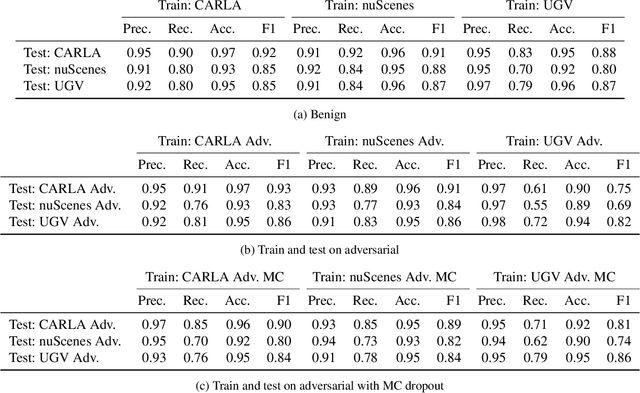

Mar 10, 2025

Abstract: Attacks on sensing and perception threaten the safe deployment of autonomous vehicles (AVs). Security-aware sensor fusion helps mitigate threats but requires accurate field of view (FOV) estimation, which has not been evaluated in autonomy. To address this gap, we adapt classical computer graphics algorithms to develop the first autonomy-relevant FOV estimators and create the first datasets with ground truth FOV labels. Unfortunately, we find that these approaches are themselves highly vulnerable to attacks on sensing. To improve robustness of FOV estimation against attacks, we propose a learning-based segmentation model that captures FOV features, integrates Monte Carlo dropout (MCD) for uncertainty quantification, and performs anomaly detection on confidence maps. Comprehensive evaluations illustrate attack resistance and strong generalization across environments. Architecture trade studies demonstrate that the model is feasible for real-time deployment in multiple applications.
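As a rough illustration of Monte Carlo dropout, the sketch below keeps dropout active at inference time, repeats the forward pass many times, and treats the spread of the resulting confidences as an uncertainty estimate. The one-layer toy "model", the feature values, and the fixed standard-deviation threshold used to flag anomalous pixels are all hypothetical stand-ins; the abstract does not describe the actual segmentation network or anomaly detector.

```python
import math
import random
import statistics

def stochastic_forward(features, weights, drop_prob, rng):
    """One forward pass with dropout left on; returns a sigmoid confidence."""
    keep = 1.0 - drop_prob
    total = 0.0
    for x, w in zip(features, weights):
        if rng.random() < keep:
            total += x * w / keep  # inverted-dropout rescaling
    return 1.0 / (1.0 + math.exp(-total))

def mc_dropout_confidence(features, weights, drop_prob=0.5, passes=200, seed=0):
    """Mean and standard deviation of the confidence over repeated passes."""
    rng = random.Random(seed)
    samples = [stochastic_forward(features, weights, drop_prob, rng)
               for _ in range(passes)]
    return statistics.fmean(samples), statistics.pstdev(samples)

# Two hypothetical pixels: one whose features agree, one whose features conflict.
weights = [1.0, 1.0, 1.0, 1.0]
pixels = [[0.5, 0.5, 0.5, 0.5], [4.0, -4.0, 4.0, -4.0]]
stats = [mc_dropout_confidence(p, weights) for p in pixels]

STD_THRESHOLD = 0.25  # hypothetical cut-off for flagging a pixel as anomalous
flags = [std > STD_THRESHOLD for _, std in stats]
print(stats, flags)
```

The pixel with conflicting features swings between very low and very high confidence from pass to pass, so its standard deviation is large and it is flagged, while the consistent pixel is not.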

SIDA: Social Media Image Deepfake Detection, Localization and Explanation with Large Multimodal Model

Dec 05, 2024

Abstract: The rapid advancement of generative models in creating highly realistic images poses substantial risks for misinformation dissemination. For instance, a synthetic image, when shared on social media, can mislead extensive audiences and erode trust in digital content, resulting in severe repercussions. Despite some progress, academia has not yet created a large and diversified deepfake detection dataset for social media, nor has it devised an effective solution to address this issue. In this paper, we introduce the Social media Image Detection dataSet (SID-Set), which offers three key advantages: (1) extensive volume, featuring 300K AI-generated/tampered and authentic images with comprehensive annotations, (2) broad diversity, encompassing fully synthetic and tampered images across various classes, and (3) elevated realism, with images that are predominantly indistinguishable from genuine ones through mere visual inspection. Furthermore, leveraging the exceptional capabilities of large multimodal models, we propose a new image deepfake detection, localization, and explanation framework, named SIDA (Social media Image Detection, localization, and explanation Assistant). SIDA not only discerns the authenticity of images, but also delineates tampered regions through mask prediction and provides textual explanations of the model's judgment criteria. Extensive experiments comparing SIDA with state-of-the-art deepfake detection models on SID-Set and other benchmarks demonstrate that it achieves superior performance across diverse settings. The code, model, and dataset will be released.

Joint-Optimized Unsupervised Adversarial Domain Adaptation in Remote Sensing Segmentation with Prompted Foundation Model

Nov 18, 2024

Abstract: Unsupervised Domain Adaptation for Remote Sensing Semantic Segmentation (UDA-RSSeg) addresses the challenge of adapting a model trained on source domain data to target domain samples, thereby minimizing the need for annotated data across diverse remote sensing scenes. This task presents two principal challenges: (1) severe inconsistencies in feature representation across different remote sensing domains, and (2) a domain gap that emerges due to the representation bias of source domain patterns when translating features to predictive logits. To tackle these issues, we propose a joint-optimized adversarial network incorporating the Segment Anything Model (SAM), termed SAM-JOANet, for UDA-RSSeg. Our approach integrates SAM to leverage its robust generalized representation capabilities, thereby alleviating feature inconsistencies. We introduce a finetuning decoder designed to convert SAM-Encoder features into predictive logits. Additionally, a feature-level adversarial-based prompted segmentor is employed to generate class-agnostic maps, which guide the finetuning decoder's feature representations. The network is optimized end-to-end, combining the prompted segmentor and the finetuning decoder. Extensive evaluations on benchmark datasets, including ISPRS (Potsdam/Vaihingen) and CITY-OSM (Paris/Chicago), demonstrate the effectiveness of our method. The results, supported by visualization and analysis, confirm the method's interpretability and robustness. The code of this paper is available at https://github.com/CV-ShuchangLyu/SAM-JOANet.

Out-of-distribution detection based on subspace projection of high-dimensional features output by the last convolutional layer

May 02, 2024

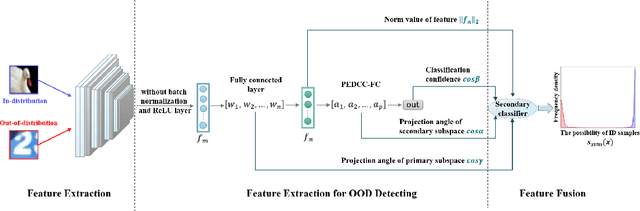

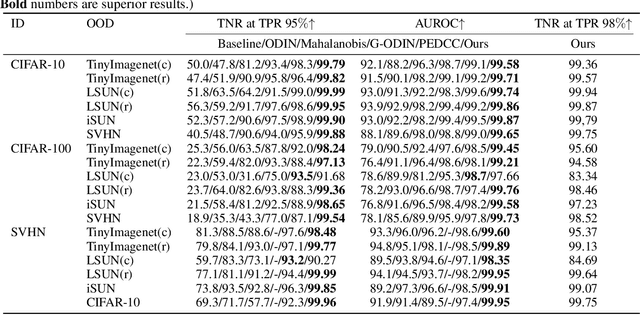

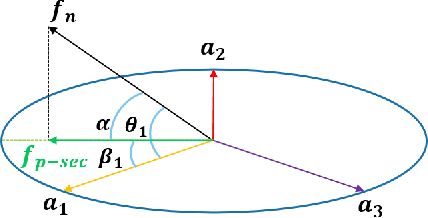

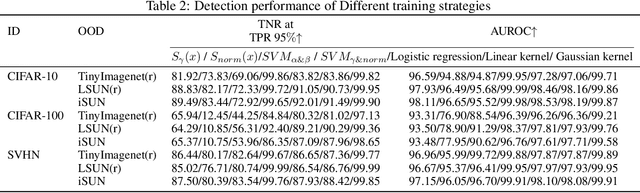

Abstract: Out-of-distribution (OOD) detection, crucial for reliable pattern classification, discerns whether a sample originates outside the training distribution. This paper concentrates on the high-dimensional features output by the final convolutional layer, which contain rich image features. Our key idea is to project these high-dimensional features into two specific feature subspaces, leveraging the dimensionality reduction capacity of the network's linear layers, trained with Predefined Evenly-Distribution Class Centroids (PEDCC)-Loss. This involves calculating the cosines of three projection angles and the norm values of features, thereby identifying distinctive information for in-distribution (ID) and OOD data, which assists in OOD detection. Building upon this, we have modified the batch normalization (BN) and ReLU layers preceding the fully connected layer, diminishing their impact on the output feature distributions and thereby widening the distribution gap between ID and OOD data features. Our method requires only the training of the classification network model, eschewing any need for input pre-processing or specific OOD data pre-tuning. Extensive experiments on several benchmark datasets demonstrate that our approach delivers state-of-the-art performance. Our code is available at https://github.com/Hewell0/ProjOOD.

GaitSet: Cross-view Gait Recognition through Utilizing Gait as a Deep Set

Feb 05, 2021

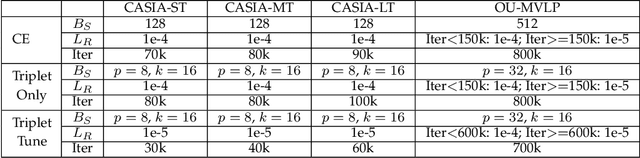

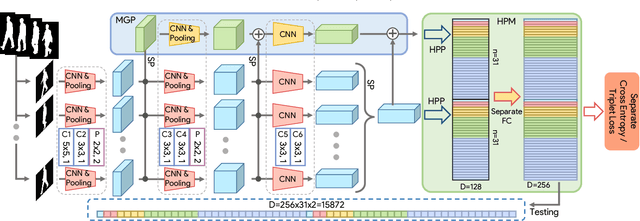

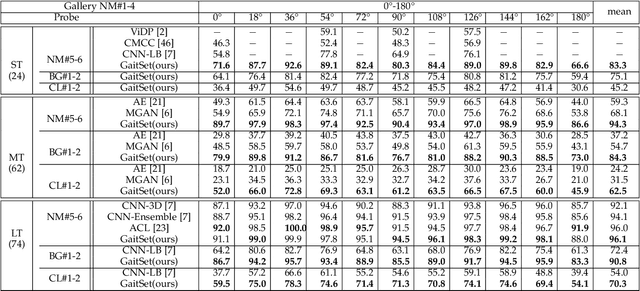

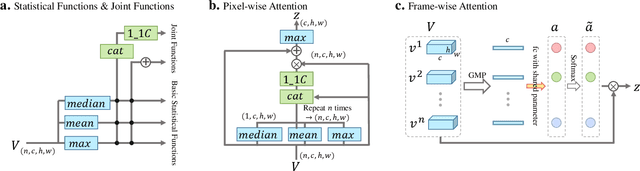

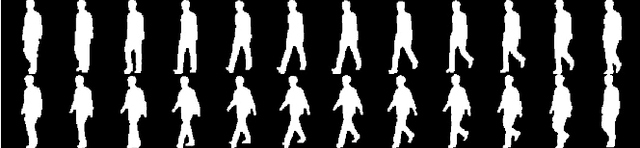

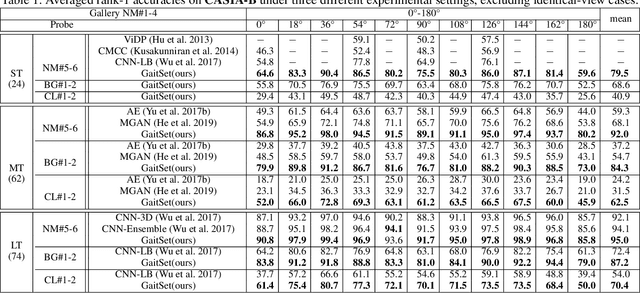

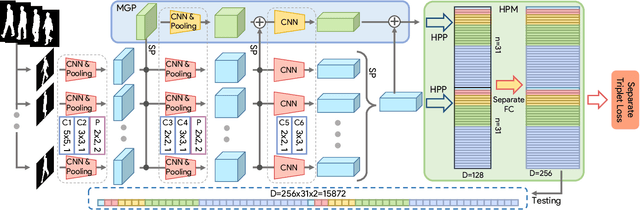

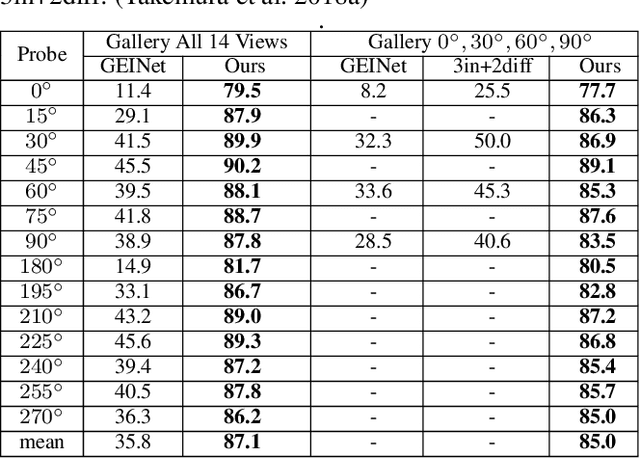

Abstract: Gait is a unique biometric feature that can be recognized at a distance; thus, it has broad applications in crime prevention, forensic identification, and social security. To portray a gait, existing gait recognition methods utilize either a gait template, which makes it difficult to preserve temporal information, or a gait sequence, which maintains unnecessary sequential constraints and thus loses the flexibility of gait recognition. In this paper, we present a novel perspective that utilizes gait as a deep set, which means that a set of gait frames is integrated by a global-local fused deep network, inspired by the way our left and right hemispheres process information, to learn information that can be used in identification. Based on this deep set perspective, our method is immune to frame permutations, and can naturally integrate frames from different videos that have been acquired under different scenarios, such as diverse viewing angles, different clothes, or different item-carrying conditions. Experiments show that under normal walking conditions, our single-model method achieves an average rank-1 accuracy of 96.1% on the CASIA-B gait dataset and an accuracy of 87.9% on the OU-MVLP gait dataset. Under various complex scenarios, our model also exhibits a high level of robustness. It achieves accuracies of 90.8% and 70.3% on CASIA-B under bag-carrying and coat-wearing walking conditions respectively, significantly outperforming the best existing methods. Moreover, the proposed method maintains a satisfactory accuracy even when only small numbers of frames are available in the test samples; for example, it achieves 85.0% on CASIA-B even when using only 7 frames. The source code has been released at https://github.com/AbnerHqC/GaitSet.

GaitSet: Regarding Gait as a Set for Cross-View Gait Recognition

Dec 12, 2018

Abstract: As a unique biometric feature that can be recognized at a distance, gait has broad applications in crime prevention, forensic identification and social security. To portray a gait, existing gait recognition methods utilize either a gait template, where temporal information is hard to preserve, or a gait sequence, which must keep unnecessary sequential constraints and thus loses the flexibility of gait recognition. In this paper we present a novel perspective, where a gait is regarded as a set consisting of independent frames. We propose a new network named GaitSet to learn identity information from the set. Based on the set perspective, our method is immune to permutation of frames, and can naturally integrate frames from different videos which have been filmed under different scenarios, such as diverse viewing angles and different clothing or carrying conditions. Experiments show that under normal walking conditions, our single-model method achieves an average rank-1 accuracy of 95.0% on the CASIA-B gait dataset and an 87.1% accuracy on the OU-MVLP gait dataset. These results represent new state-of-the-art recognition accuracy. In various complex scenarios, our model exhibits a significant level of robustness. It achieves accuracies of 87.2% and 70.4% on CASIA-B under bag-carrying and coat-wearing walking conditions, respectively. These outperform the existing best methods by a large margin. The method presented can also achieve a satisfactory accuracy with a small number of frames in a test sample, e.g., 82.5% on CASIA-B with only 7 frames. The source code has been released at https://github.com/AbnerHqC/GaitSet.
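The permutation immunity claimed above follows whenever frame-level features are aggregated with a symmetric function. The sketch below uses an element-wise maximum as one such function; it is a toy illustration with made-up feature vectors, and the choice of max and the name `set_pool` are assumptions here rather than a statement of the exact operator used in GaitSet.

```python
import random

def set_pool(frame_features):
    """Element-wise max over a set of frame-level feature vectors."""
    return [max(column) for column in zip(*frame_features)]

# Hypothetical 3-dimensional features for three silhouette frames.
frames = [[0.2, 0.9, 0.1],
          [0.7, 0.3, 0.4],
          [0.5, 0.6, 0.8]]

# Shuffling the frame order leaves the pooled feature unchanged.
shuffled = frames[:]
random.Random(0).shuffle(shuffled)
pooled = set_pool(frames)
pooled_shuffled = set_pool(shuffled)

# Frames from another (hypothetical) video can simply be added to the set.
other_video = [[0.1, 1.0, 0.2]]
merged = set_pool(frames + other_video)
print(pooled, pooled_shuffled, merged)
```

Because the pooled feature depends only on which frames are in the set, frames captured under different views or conditions can be merged without any alignment step.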
