Yichi Zhang

AI Lab, Netease

Uncovering Modality Discrepancy and Generalization Illusion for General-Purpose 3D Medical Segmentation

Feb 07, 2026

Abstract:While emerging 3D medical foundation models are envisioned as versatile tools that offer general-purpose capabilities, their validation remains largely confined to regional and structural imaging, leaving a significant modality discrepancy unexplored. To provide a rigorous and objective assessment, we curate the UMD dataset comprising 490 whole-body PET/CT and 464 whole-body PET/MRI scans ($\sim$675k 2D images, $\sim$12k 3D organ annotations) and conduct a comprehensive evaluation of representative 3D segmentation foundation models. Through intra-subject controlled comparisons of paired scans, we isolate imaging modality as the primary independent variable to evaluate model robustness in real-world applications. Our evaluation reveals a stark discrepancy between literature-reported benchmarks and real-world efficacy, particularly when transitioning from structural to functional domains. Such systemic failures underscore that current 3D foundation models are far from achieving truly general-purpose status, necessitating a paradigm shift toward multi-modal training and evaluation to bridge the gap between idealized benchmarking and comprehensive clinical utility. This dataset and analysis establish a foundation for future research to develop truly modality-agnostic medical foundation models.

Hyperparameter Transfer Laws for Non-Recurrent Multi-Path Neural Networks

Feb 07, 2026

Abstract:Deeper modern architectures are costly to train, making hyperparameter transfer preferable to expensive repeated tuning. Maximal Update Parametrization ($μ$P) helps explain why many hyperparameters transfer across width. Yet depth scaling is less understood for modern architectures, whose computation graphs contain multiple parallel paths and residual aggregation. To unify various non-recurrent multi-path neural networks such as CNNs, ResNets, and Transformers, we introduce a graph-based notion of effective depth. Under stabilizing initializations and a maximal-update criterion, we show that the optimal learning rate decays with effective depth following a universal -3/2 power law. Here, the maximal-update criterion maximizes the typical one-step representation change at initialization without causing instability, and effective depth is the minimal path length from input to output, counting layers and residual additions. Experiments across diverse architectures confirm the predicted slope and enable reliable zero-shot transfer of learning rates across depths and widths, turning depth scaling into a predictable hyperparameter-transfer problem.
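As a rough illustration of how the -3/2 power law stated in this abstract might be used for zero-shot transfer, the sketch below rescales a learning rate tuned at one effective depth to another. The function name, the example depths, and the base learning rate are hypothetical and not taken from the paper; the sketch also assumes the effective depths have already been computed.

```python
def transfer_learning_rate(
    base_lr: float,
    base_depth: int,
    target_depth: int,
    exponent: float = -1.5,
) -> float:
    """Rescale a tuned learning rate from one effective depth to another.

    Assumes lr_opt is proportional to depth ** exponent, with exponent = -3/2
    as stated in the abstract. Depths are effective depths, not raw layer counts.
    """
    if base_lr <= 0:
        raise ValueError("base_lr must be positive")
    if base_depth <= 0 or target_depth <= 0:
        raise ValueError("depths must be positive")
    return base_lr * (target_depth / base_depth) ** exponent


# Hypothetical example: a rate tuned at effective depth 4, reused at depth 16.
lr_deep = transfer_learning_rate(base_lr=1e-3, base_depth=4, target_depth=16)
```

Quadrupling the effective depth shrinks the rate by a factor of 4^(3/2) = 8 under this law, so a shallow proxy model can be tuned once and its rate rescaled for deeper variants.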

Kimi K2.5: Visual Agentic Intelligence

Feb 02, 2026

Abstract:We introduce Kimi K2.5, an open-source multimodal agentic model designed to advance general agentic intelligence. K2.5 emphasizes the joint optimization of text and vision so that the two modalities enhance each other. This includes a series of techniques such as joint text-vision pre-training, zero-vision SFT, and joint text-vision reinforcement learning. Building on this multimodal foundation, K2.5 introduces Agent Swarm, a self-directed parallel agent orchestration framework that dynamically decomposes complex tasks into heterogeneous sub-problems and executes them concurrently. Extensive evaluations show that Kimi K2.5 achieves state-of-the-art results across various domains including coding, vision, reasoning, and agentic tasks. Agent Swarm also reduces latency by up to $4.5\times$ over single-agent baselines. We release the post-trained Kimi K2.5 model checkpoint to facilitate future research and real-world applications of agentic intelligence.

Small-Margin Preferences Still Matter-If You Train Them Right

Feb 01, 2026

Abstract:Preference optimization methods such as DPO align large language models (LLMs) using paired comparisons, but their effectiveness can be highly sensitive to the quality and difficulty of preference pairs. A common heuristic treats small-margin (ambiguous) pairs as noisy and filters them out. In this paper, we revisit this assumption and show that pair difficulty interacts strongly with the optimization objective: when trained with preference-based losses, difficult pairs can destabilize training and harm alignment, yet these same pairs still contain useful supervision signals when optimized with supervised fine-tuning (SFT). Motivated by this observation, we propose MixDPO, a simple yet effective difficulty-aware training strategy that (i) orders preference data from easy to hard (a curriculum over margin-defined difficulty), and (ii) routes difficult pairs to an SFT objective while applying a preference loss to easy pairs. This hybrid design provides a practical mechanism to leverage ambiguous pairs without incurring the optimization failures often associated with preference losses on low-margin data. Across three LLM-judge benchmarks, MixDPO consistently improves alignment over DPO and a range of widely used variants, with particularly strong gains on AlpacaEval 2 length-controlled (LC) win rate.
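The two-part strategy described in this abstract (an easy-to-hard ordering plus objective routing) could be sketched roughly as follows. This is not the authors' implementation: the `margin` field, the `margin_threshold` value, the dictionary keys, and the choice to apply SFT to the chosen response only are all assumptions made for illustration. Log-probabilities are taken to be sequence-level sums, and the preference loss is the standard DPO loss.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(pol_chosen, pol_rejected, ref_chosen, ref_rejected, beta=0.1):
    """Standard DPO loss: -log sigmoid(beta * (policy gap - reference gap))."""
    logit = beta * ((pol_chosen - ref_chosen) - (pol_rejected - ref_rejected))
    return softplus(-logit)


def sft_loss(pol_chosen):
    """Negative log-likelihood of the chosen response (assumed SFT target)."""
    return -pol_chosen


def mixed_objective(pairs, margin_threshold):
    """Order pairs easy-to-hard and route each one to a loss by its margin.

    A large margin is treated as "easy" and gets the preference loss;
    a small margin is treated as "hard" and gets the SFT loss.
    The threshold is a hypothetical hyperparameter.
    """
    ordered = sorted(pairs, key=lambda p: p["margin"], reverse=True)
    routed = []
    for p in ordered:
        if p["margin"] >= margin_threshold:
            loss = dpo_loss(
                p["pol_chosen"], p["pol_rejected"],
                p["ref_chosen"], p["ref_rejected"],
            )
            routed.append(("preference", loss))
        else:
            routed.append(("sft", sft_loss(p["pol_chosen"])))
    return routed
```

In a real training loop the routing would more likely happen per batch on tensors; the scalar version here only shows the control flow.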

Reasoning as State Transition: A Representational Analysis of Reasoning Evolution in Large Language Models

Jan 31, 2026

Abstract:Large Language Models have achieved remarkable performance on reasoning tasks, motivating research into how this ability evolves during training. Prior work has primarily analyzed this evolution via explicit generation outcomes, treating the reasoning process as a black box and obscuring internal changes. To address this opacity, we introduce a representational perspective to investigate the dynamics of the model's internal states. Through comprehensive experiments across models at various training stages, we discover that post-training yields only limited improvement in static initial representation quality. Furthermore, we reveal that, distinct from non-reasoning tasks, reasoning involves a significant continuous distributional shift in representations during generation. Comparative analysis indicates that post-training empowers models to drive this transition toward a better distribution for task solving. To clarify the relationship between internal states and external outputs, statistical analysis confirms a high correlation between generation correctness and the final representations, while counterfactual experiments identify the semantics of the generated tokens, rather than additional computation during inference or intrinsic parameter differences, as the dominant driver of the transition. Collectively, we offer a novel understanding of the reasoning process and the effect of training on reasoning enhancement, providing valuable insights for future model analysis and optimization.

Leveraging Second-Order Curvature for Efficient Learned Image Compression: Theory and Empirical Evidence

Jan 29, 2026

Abstract:Training learned image compression (LIC) models entails navigating a challenging optimization landscape defined by the fundamental trade-off between rate and distortion. Standard first-order optimizers, such as SGD and Adam, struggle with gradient conflicts arising from competing objectives, leading to slow convergence and suboptimal rate-distortion performance. In this work, we demonstrate that a simple utilization of a second-order quasi-Newton optimizer, SOAP, dramatically improves both training efficiency and final performance across diverse LICs. Our theoretical and empirical analyses reveal that Newton preconditioning inherently resolves the intra-step and inter-step update conflicts intrinsic to the R-D objective, facilitating faster, more stable convergence. Beyond acceleration, we uncover a critical deployability benefit: second-order trained models exhibit significantly fewer activation and latent outliers. This substantially enhances robustness to post-training quantization. Together, these results establish second-order optimization, achievable as a seamless drop-in replacement of the imported optimizer, as a powerful, practical tool for advancing the efficiency and real-world readiness of LICs.

RLPO: Residual Listwise Preference Optimization for Long-Context Review Ranking

Jan 12, 2026

Abstract:Review ranking is pivotal in e-commerce for prioritizing diagnostic and authentic feedback from the deluge of user-generated content. While large language models have improved semantic assessment, existing ranking paradigms face a persistent trade-off in long-context settings. Pointwise scoring is efficient but often fails to account for list-level interactions, leading to miscalibrated top-$k$ rankings. Listwise approaches can leverage global context, yet they are computationally expensive and become unstable as candidate lists grow. To address this, we propose Residual Listwise Preference Optimization (RLPO), which formulates ranking as listwise representation-level residual correction over a strong pointwise LLM scorer. RLPO first produces calibrated pointwise scores and item representations, then applies a lightweight encoder over the representations to predict listwise score residuals, avoiding full token-level listwise processing. We also introduce a large-scale benchmark for long-context review ranking with human verification. Experiments show RLPO improves NDCG@k over strong pointwise and listwise baselines and remains robust as list length increases.
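To make the residual-correction idea and the NDCG@k metric in this abstract concrete, here is a minimal sketch. It assumes the residuals have already been predicted by some listwise encoder (not modeled here), and it uses the linear-gain, log2-discount variant of NDCG; the paper may use a different gain. All function names and example numbers are hypothetical.

```python
import math


def rerank_with_residuals(pointwise_scores, residuals):
    """Add a listwise residual to each pointwise score and return item
    indices sorted by the corrected score, best first."""
    final = [s + r for s, r in zip(pointwise_scores, residuals)]
    return sorted(range(len(final)), key=lambda i: final[i], reverse=True)


def dcg_at_k(relevances, k):
    """Discounted cumulative gain with linear gain and log2 discount."""
    return sum(rel / math.log2(rank + 2) for rank, rel in enumerate(relevances[:k]))


def ndcg_at_k(ranked_relevances, k):
    """NDCG@k: DCG of the given ranking divided by DCG of the ideal ranking."""
    ideal = dcg_at_k(sorted(ranked_relevances, reverse=True), k)
    if ideal == 0:
        return 0.0  # convention chosen here for lists with no relevant items
    return dcg_at_k(ranked_relevances, k) / ideal


# Hypothetical example: the residuals promote item 1 above item 0.
order = rerank_with_residuals([0.9, 0.8, 0.1], [-0.3, 0.2, 0.0])
true_relevance = [2, 3, 1]
score = ndcg_at_k([true_relevance[i] for i in order], k=3)
```

Because the correction is additive on scores, the pointwise scorer's ranking is recovered whenever all residuals are zero.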

6D Movable Antenna Enhanced Cell-free MIMO: Two-timescale Decentralized Beamforming and Antenna Movement Optimization

Jan 08, 2026

Abstract:This paper investigates a six-dimensional movable antenna (6DMA)-aided cell-free multi-user multiple-input multiple-output (MIMO) communication system. In this system, each distributed access point (AP) can flexibly adjust its array orientation and antenna positions to adapt to spatial channel variations and enhance communication performance. However, frequent antenna movements and centralized beamforming based on global instantaneous channel state information (CSI) sharing among APs entail extremely high signal processing delay and system overhead, which are difficult to accommodate in high-mobility scenarios with short channel coherence time. To address these practical implementation challenges and improve scalability, a two-timescale decentralized optimization framework is proposed in this paper to jointly design the beamformer, antenna positions, and array orientations. In the short timescale, each AP updates its receive beamformer based on local instantaneous CSI and global statistical CSI. In the long timescale, the central processing unit optimizes the antenna positions and array orientations at all APs based on global statistical CSI to maximize the ergodic sum rate of all users. The resulting optimization problem is non-convex and involves highly coupled variables, thus posing significant challenges for obtaining efficient solutions. To address this problem, a constrained stochastic successive convex approximation algorithm is developed. Numerical results demonstrate that the proposed 6DMA-aided cell-free system with decentralized beamforming significantly outperforms other antenna movement schemes with less flexibility and even achieves a performance comparable to that of the centralized beamforming benchmark.

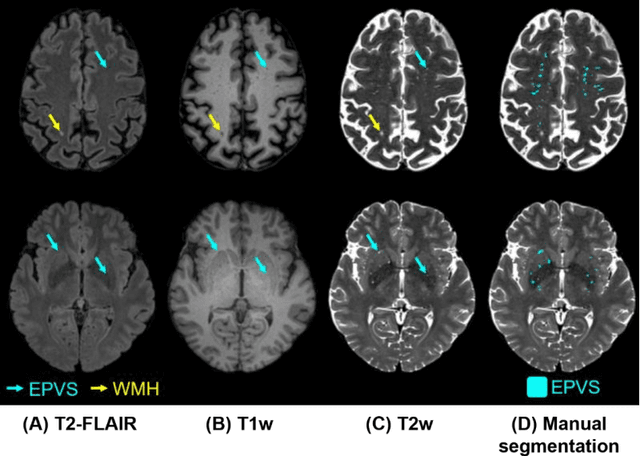

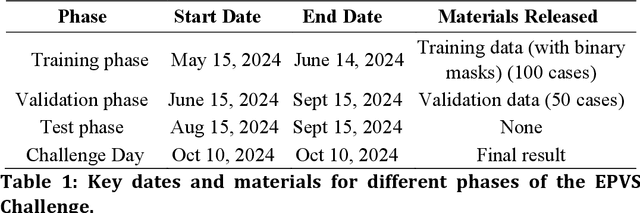

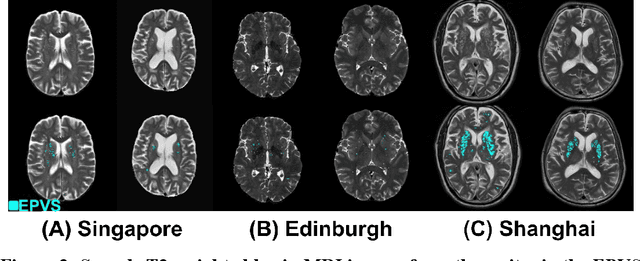

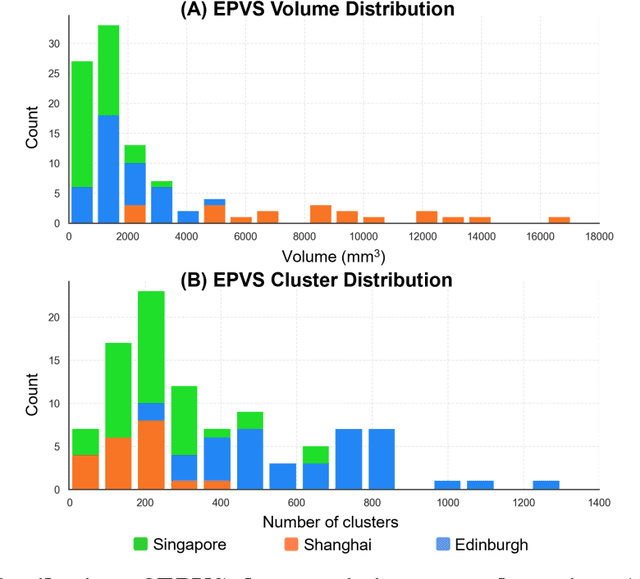

Standardized Evaluation of Automatic Methods for Perivascular Spaces Segmentation in MRI -- MICCAI 2024 Challenge Results

Dec 20, 2025

Abstract:Perivascular spaces (PVS), when abnormally enlarged and visible in magnetic resonance imaging (MRI) structural sequences, are important imaging markers of cerebral small vessel disease and potential indicators of neurodegenerative conditions. Despite their clinical significance, automatic enlarged PVS (EPVS) segmentation remains challenging due to their small size, variable morphology, similarity with other pathological features, and limited annotated datasets. This paper presents the EPVS Challenge organized at MICCAI 2024, which aims to advance the development of automated algorithms for EPVS segmentation across multi-site data. We provided a diverse dataset comprising 100 training, 50 validation, and 50 testing scans collected from multiple international sites (UK, Singapore, and China) with varying MRI protocols and demographics. All annotations followed the STRIVE protocol to ensure standardized ground truth and covered the full brain parenchyma. Seven teams completed the full challenge, implementing various deep learning approaches primarily based on U-Net architectures with innovations in multi-modal processing, ensemble strategies, and transformer-based components. Performance was evaluated using dice similarity coefficient, absolute volume difference, recall, and precision metrics. The winning method employed MedNeXt architecture with a dual 2D/3D strategy for handling varying slice thicknesses. The top solutions showed relatively good performance on test data from seen datasets, but significant degradation of performance was observed on the previously unseen Shanghai cohort, highlighting cross-site generalization challenges due to domain shift. This challenge establishes an important benchmark for EPVS segmentation methods and underscores the need for the continued development of robust algorithms that can generalize in diverse clinical settings.
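The four evaluation metrics named in this abstract can be sketched for binary masks as follows. This is an illustrative implementation, not the challenge's official scoring code: masks are assumed to be flattened sequences of 0/1 values, absolute volume difference is assumed to be measured in voxels (the challenge may instead report it in physical units or as a percentage), and the conventions for empty masks are choices made here.

```python
def segmentation_metrics(pred, truth):
    """Compute Dice, precision, recall, and absolute volume difference
    for two flattened binary masks of equal length."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of voxels")

    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if not p and t)

    pred_volume = tp + fp
    true_volume = tp + fn

    # Convention chosen here: two empty masks agree perfectly.
    dice = 2 * tp / (pred_volume + true_volume) if (pred_volume + true_volume) else 1.0
    # Convention chosen here: undefined ratios are reported as 0.0.
    precision = tp / pred_volume if pred_volume else 0.0
    recall = tp / true_volume if true_volume else 0.0

    return {
        "dice": dice,
        "precision": precision,
        "recall": recall,
        "abs_volume_diff": abs(pred_volume - true_volume),  # in voxels
    }


# Hypothetical 5-voxel example.
metrics = segmentation_metrics([1, 1, 0, 0, 1], [1, 0, 0, 1, 1])
```

For small structures such as EPVS, Dice can swing sharply on a few voxels, which is one reason to report it alongside volume difference, precision, and recall.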

A Conditional Generative Framework for Synthetic Data Augmentation in Segmenting Thin and Elongated Structures in Biological Images

Dec 11, 2025

Abstract:Thin and elongated filamentous structures, such as microtubules and actin filaments, often play important roles in biological systems. Segmenting these filaments in biological images is a fundamental step for quantitative analysis. Recent advances in deep learning have significantly improved the performance of filament segmentation. However, acquiring high-quality pixel-level annotated datasets for filamentous structures remains a major challenge, as the dense distribution and geometric properties of filaments make manual annotation extremely laborious and time-consuming. To address the data shortage problem, we propose a conditional generative framework based on the Pix2Pix architecture to generate realistic filaments in microscopy images from binary masks. We also propose a filament-aware structural loss to improve structural similarity when generating synthetic images. Our experiments demonstrate the effectiveness of our approach, which outperforms existing models trained without synthetic data.
