Fengqing Zhu

Food Portion Estimation: From Pixels to Calories

Feb 04, 2026

Abstract:Reliance on images for dietary assessment is an important strategy to accurately and conveniently monitor an individual's health, making it a vital mechanism in the prevention and care of chronic diseases and obesity. However, image-based dietary assessment is limited by the difficulty of estimating the three-dimensional size of food from 2D image inputs. Many strategies have been devised to overcome this critical limitation, such as the use of auxiliary inputs like depth maps, multi-view inputs, or model-based approaches such as template matching. Deep learning also helps bridge the gap by using either monocular images or combinations of the image and auxiliary inputs to precisely predict the portion from the image input. In this paper, we explore the different strategies employed for accurate portion estimation.

Leveraging Second-Order Curvature for Efficient Learned Image Compression: Theory and Empirical Evidence

Jan 29, 2026

Abstract:Training learned image compression (LIC) models entails navigating a challenging optimization landscape defined by the fundamental trade-off between rate and distortion. Standard first-order optimizers, such as SGD and Adam, struggle with gradient conflicts arising from competing objectives, leading to slow convergence and suboptimal rate-distortion performance. In this work, we demonstrate that a simple utilization of a second-order quasi-Newton optimizer, SOAP, dramatically improves both training efficiency and final performance across diverse LICs. Our theoretical and empirical analyses reveal that Newton preconditioning inherently resolves the intra-step and inter-step update conflicts intrinsic to the R-D objective, facilitating faster, more stable convergence. Beyond acceleration, we uncover a critical deployability benefit: second-order trained models exhibit significantly fewer activation and latent outliers. This substantially enhances robustness to post-training quantization. Together, these results establish second-order optimization, achievable as a seamless drop-in replacement of the imported optimizer, as a powerful, practical tool for advancing the efficiency and real-world readiness of LICs.

Size Matters: Reconstructing Real-Scale 3D Models from Monocular Images for Food Portion Estimation

Jan 27, 2026

Abstract:The rise of chronic diseases related to diet, such as obesity and diabetes, emphasizes the need for accurate monitoring of food intake. While AI-driven dietary assessment has made strides in recent years, the ill-posed nature of recovering size (portion) information from monocular images for accurate estimation of "how much did you eat?" is a pressing challenge. Some 3D reconstruction methods have achieved impressive geometric reconstruction but fail to recover the crucial real-world scale of the reconstructed object, limiting its usage in precision nutrition. In this paper, we bridge the gap between 3D computer vision and digital health by proposing a method that recovers a true-to-scale 3D reconstructed object from a monocular image. Our approach leverages rich visual features extracted from models trained on large-scale datasets to estimate the scale of the reconstructed object. This learned scale enables us to convert single-view 3D reconstructions into true-to-life, physically meaningful models. Extensive experiments and ablation studies on two publicly available datasets show that our method consistently outperforms existing techniques, achieving nearly a 30% reduction in mean absolute volume-estimation error, showcasing its potential to enhance the domain of precision nutrition. Code: https://gitlab.com/viper-purdue/size-matters
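To illustrate why recovering scale matters for portion estimation, the minimal sketch below converts a volume measured on an unscaled reconstruction into a physical volume once a scale factor is known. It is not the paper's method: the function name `rescale_volume` and the example numbers are hypothetical, and the only fact relied on is that volume grows with the cube of a uniform length scale.

```python
def rescale_volume(unit_volume: float, scale: float) -> float:
    """Convert a volume measured in reconstruction units to physical units.

    `scale` is the (hypothetical) number of physical length units per
    reconstruction length unit; volume scales with its cube.
    """
    if scale <= 0:
        raise ValueError("scale must be positive")
    return unit_volume * scale ** 3


# Hypothetical example: a mesh with volume 2.0 in model units and an
# estimated scale of 3.0 cm per model unit.
physical_volume_cm3 = rescale_volume(2.0, 3.0)

# Relative volume error caused by a 10% overestimate of the scale.
relative_error = rescale_volume(1.0, 1.1) - 1.0
```

Because of the cubic relationship, a 10% error in the estimated scale becomes roughly a 33% error in volume, which is why scale recovery dominates the accuracy of volume-based portion estimates.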

Training-Free Text-to-Image Compositional Food Generation via Prompt Grafting

Jan 25, 2026

Abstract:Real-world meal images often contain multiple food items, making reliable compositional food image generation important for applications such as image-based dietary assessment, where multi-food data augmentation is needed, and recipe visualization. However, modern text-to-image diffusion models struggle to generate accurate multi-food images due to object entanglement, where adjacent foods (e.g., rice and soup) fuse together because many foods do not have clear boundaries. To address this challenge, we introduce Prompt Grafting (PG), a training-free framework that combines explicit spatial cues in text with implicit layout guidance during sampling. PG runs a two-stage process where a layout prompt first establishes distinct regions and the target prompt is grafted once layout formation stabilizes. The framework enables food entanglement control: users can specify which food items should remain separated or be intentionally mixed by editing the arrangement of layouts. Across two food datasets, our method significantly improves the presence of target objects and provides qualitative evidence of controllable separation.

Instance camera focus prediction for crystal agglomeration classification

Jan 13, 2026

Abstract:Agglomeration refers to the process of crystal clustering due to interparticle forces. Crystal agglomeration analysis from microscopic images is challenging due to the inherent limitations of two-dimensional imaging. Overlapping crystals may appear connected even when located at different depth layers. Because optical microscopes have a shallow depth of field, crystals that are in-focus and out-of-focus in the same image typically reside on different depth layers and do not constitute true agglomeration. To address this, we first quantify camera focus with an instance camera focus prediction network that predicts a two-class focus level, which aligns better with visual observations than traditional image-processing focus measures. Then an instance segmentation model is combined with the predicted focus level for agglomeration classification. Our proposed method achieves higher agglomeration classification and segmentation accuracy than the baseline models on ammonium perchlorate crystal and sugar crystal datasets.

Food Image Generation on Multi-Noun Categories

Dec 09, 2025

Abstract:Generating realistic food images for categories with multiple nouns is surprisingly challenging. For instance, the prompt "egg noodle" may result in images that incorrectly contain both eggs and noodles as separate entities. Multi-noun food categories are common in real-world datasets and account for a large portion of entries in benchmarks such as UEC-256. These compound names often cause generative models to misinterpret the semantics, producing unintended ingredients or objects. This is due to insufficient knowledge of multi-noun categories in the text encoder and misinterpretation of multi-noun relationships, leading to incorrect spatial layouts. To overcome these challenges, we propose FoCULR (Food Category Understanding and Layout Refinement), which incorporates food domain knowledge and introduces core concepts early in the generation process. Experimental results demonstrate that the integration of these techniques improves image generation performance in the food domain.

PANDA - Patch And Distribution-Aware Augmentation for Long-Tailed Exemplar-Free Continual Learning

Nov 12, 2025

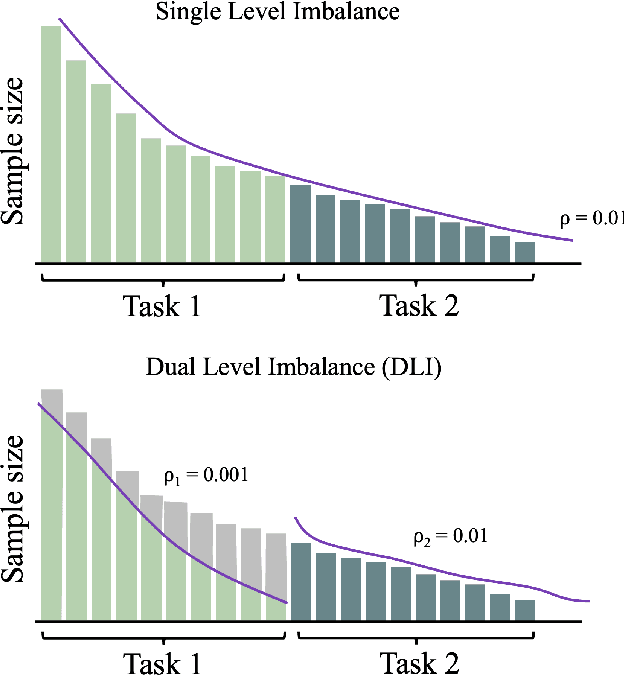

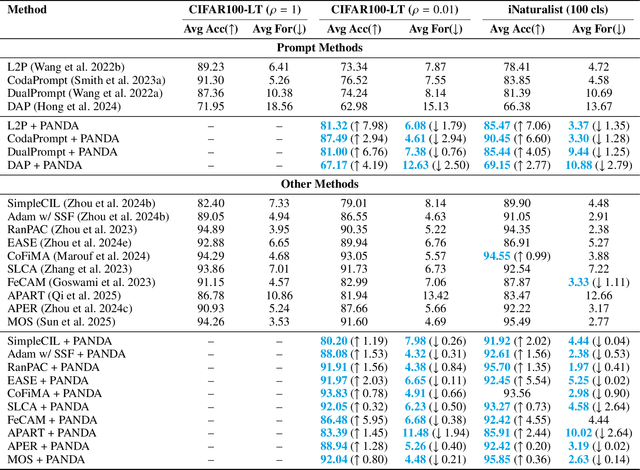

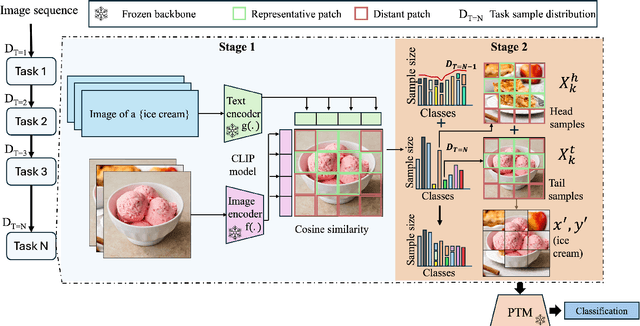

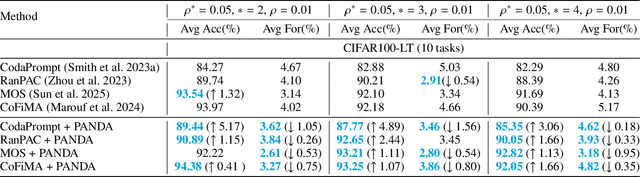

Abstract:Exemplar-Free Continual Learning (EFCL) restricts the storage of previous task data and is highly susceptible to catastrophic forgetting. While pre-trained models (PTMs) are increasingly leveraged for EFCL, existing methods often overlook the inherent imbalance of real-world data distributions. We discovered that real-world data streams commonly exhibit dual-level imbalances: dataset-level distributions combined with extreme or reversed skews within individual tasks. These create both intra-task and inter-task disparities that hinder effective learning and generalization. To address these challenges, we propose PANDA, a Patch-and-Distribution-Aware Augmentation framework that integrates seamlessly with existing PTM-based EFCL methods. PANDA amplifies low-frequency classes by using a CLIP encoder to identify representative regions and transplanting those into frequent-class samples within each task. Furthermore, PANDA incorporates an adaptive balancing strategy that leverages prior task distributions to smooth inter-task imbalances, reducing the overall gap between average samples across tasks and enabling fairer learning with frozen PTMs. Extensive experiments and ablation studies demonstrate PANDA's capability to work with existing PTM-based CL methods, improving accuracy and reducing catastrophic forgetting.

EntropyGS: An Efficient Entropy Coding on 3D Gaussian Splatting

Aug 13, 2025

Abstract:As an emerging novel view synthesis approach, 3D Gaussian Splatting (3DGS) demonstrates fast training/rendering with superior visual quality. The two tasks of 3DGS, Gaussian creation and view rendering, are typically separated over time or devices, and thus storage/transmission and finally compression of 3DGS Gaussians become necessary. We begin with a correlation and statistical analysis of 3DGS Gaussian attributes. An inspiring finding in this work reveals that spherical harmonic AC attributes precisely follow Laplace distributions, while mixtures of Gaussian distributions can approximate rotation, scaling, and opacity. Additionally, harmonic AC attributes manifest weak correlations with other attributes except for inherited correlations from a color space. A factorized and parameterized entropy coding method, EntropyGS, is then proposed. During encoding, distribution parameters of each Gaussian attribute are estimated to assist their entropy coding. The quantization for entropy coding is adaptively performed according to Gaussian attribute types. EntropyGS demonstrates about 30x rate reduction on benchmark datasets while maintaining similar rendering quality compared to input 3DGS data, with a fast encoding and decoding time.
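The idea of estimating distribution parameters to assist entropy coding can be sketched generically as follows. This is an illustrative example only, not the EntropyGS implementation: the function names, the uniform quantizer, and the sample values are assumptions. It fits a Laplace distribution by maximum likelihood (location = median, scale = mean absolute deviation from the median) and estimates the ideal code length of uniformly quantized samples under that model.

```python
import math
from statistics import median


def fit_laplace(xs):
    """Maximum-likelihood Laplace fit: location is the median,
    scale is the mean absolute deviation from the median."""
    mu = median(xs)
    b = sum(abs(x - mu) for x in xs) / len(xs)
    if b == 0:
        raise ValueError("all samples are identical; scale is zero")
    return mu, b


def laplace_cdf(x, mu, b):
    """Cumulative distribution function of Laplace(mu, b)."""
    if x < mu:
        return 0.5 * math.exp((x - mu) / b)
    return 1.0 - 0.5 * math.exp(-(x - mu) / b)


def estimated_bits(xs, step):
    """Ideal code length (in bits) of the samples after uniform
    quantization with bin width `step`, under the fitted Laplace model."""
    mu, b = fit_laplace(xs)
    total = 0.0
    for x in xs:
        q = round(x / step) * step  # center of the quantization bin
        p = laplace_cdf(q + step / 2, mu, b) - laplace_cdf(q - step / 2, mu, b)
        total += -math.log2(max(p, 1e-12))  # clamp to avoid log(0)
    return total


# Hypothetical attribute values; a coarser step costs fewer bits.
samples = [-1.0, 0.0, 1.0, 0.5, -0.5]
bits_fine = estimated_bits(samples, 0.1)
bits_coarse = estimated_bits(samples, 1.0)
```

An actual codec would feed the bin probabilities to an arithmetic or range coder; the sum of negative log-probabilities here is only the code length such a coder would approach.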

OpenDCVCs: A PyTorch Open Source Implementation and Performance Evaluation of the DCVC series Video Codecs

Aug 06, 2025

Abstract:We present OpenDCVCs, an open-source PyTorch implementation designed to advance reproducible research in learned video compression. OpenDCVCs provides unified and training-ready implementations of four representative Deep Contextual Video Compression (DCVC) models--DCVC, DCVC with Temporal Context Modeling (DCVC-TCM), DCVC with Hybrid Entropy Modeling (DCVC-HEM), and DCVC with Diverse Contexts (DCVC-DC). While the DCVC series achieves substantial bitrate reductions over both classical codecs and advanced learned models, previous public code releases have been limited to evaluation code, presenting significant barriers to reproducibility, benchmarking, and further development. OpenDCVCs bridges this gap by offering a comprehensive, self-contained framework that supports both end-to-end training and evaluation for all included algorithms. The implementation includes detailed documentation, evaluation protocols, and extensive benchmarking results across diverse datasets, providing a transparent and consistent foundation for comparison and extension. All code and experimental tools are publicly available at https://gitlab.com/viper-purdue/opendcvcs, empowering the community to accelerate research and foster collaboration.

Evaluating Large Multimodal Models for Nutrition Analysis: A Benchmark Enriched with Contextual Metadata

Jul 09, 2025

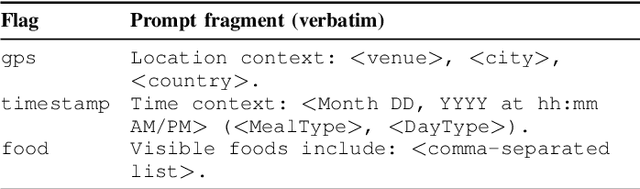

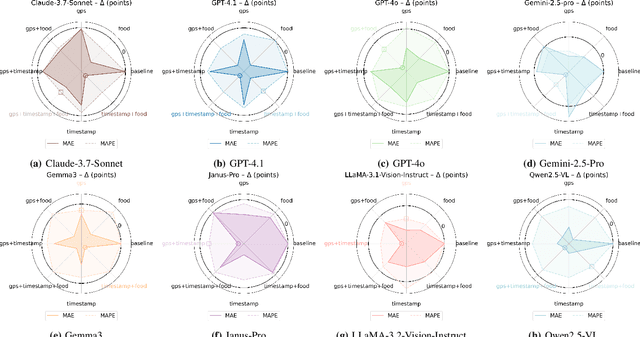

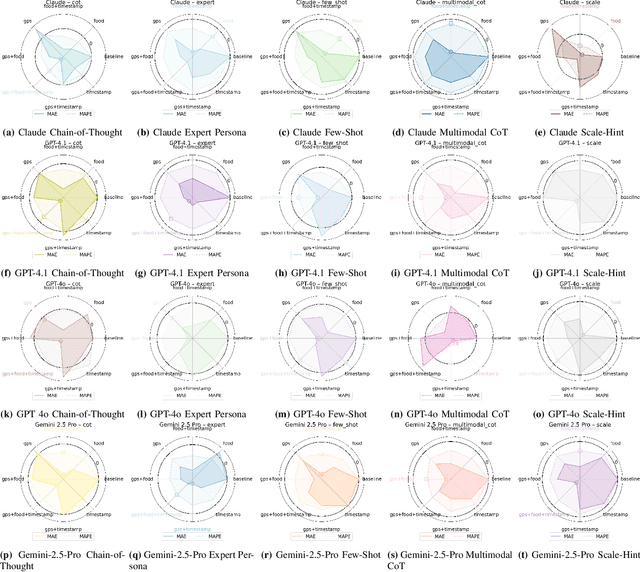

Abstract:Large Multimodal Models (LMMs) are increasingly applied to meal images for nutrition analysis. However, existing work primarily evaluates proprietary models, such as GPT-4. This leaves the broad range of LMMs underexplored. Additionally, the influence of integrating contextual metadata and its interaction with various reasoning modifiers remains largely uncharted. This work investigates how interpreting contextual metadata derived from GPS coordinates (converted to location/venue type), timestamps (transformed into meal/day type), and the food items present can enhance LMM performance in estimating key nutritional values. These values include calories, macronutrients (protein, carbohydrates, fat), and portion sizes. We also introduce ACETADA, a new food-image dataset slated for public release. This open dataset provides nutrition information verified by a dietitian and serves as the foundation for our analysis. Our evaluation across eight LMMs (four open-weight and four closed-weight) first establishes the benefit of contextual metadata integration over straightforward prompting with images alone. We then demonstrate how this incorporation of contextual information enhances the efficacy of reasoning modifiers, such as Chain-of-Thought, Multimodal Chain-of-Thought, Scale Hint, Few-Shot, and Expert Persona. Empirical results show that integrating metadata intelligently, when applied through straightforward prompting strategies, can significantly reduce the Mean Absolute Error (MAE) and Mean Absolute Percentage Error (MAPE) in predicted nutritional values. This work highlights the potential of context-aware LMMs for improved nutrition analysis.
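For readers unfamiliar with the two metrics named above, the short sketch below shows the standard definitions of MAE and MAPE. The function names and the calorie values are hypothetical and are not taken from the paper or the ACETADA dataset.

```python
def mae(y_true, y_pred):
    """Mean Absolute Error: average of |truth - prediction|."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mape(y_true, y_pred):
    """Mean Absolute Percentage Error, in percent.

    Undefined when a ground-truth value is zero, so that case is rejected.
    """
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    if any(t == 0 for t in y_true):
        raise ValueError("MAPE is undefined for zero ground-truth values")
    ratios = [abs(t - p) / abs(t) for t, p in zip(y_true, y_pred)]
    return 100.0 * sum(ratios) / len(ratios)


# Hypothetical ground-truth and predicted calories for two meals.
truth = [100.0, 200.0]
predicted = [110.0, 180.0]
calorie_mae = mae(truth, predicted)
calorie_mape = mape(truth, predicted)
```

MAE is reported in the unit of the quantity (for example, kilocalories), while MAPE normalizes each error by the true value, which makes results comparable across nutrients with different magnitudes.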
