Siddeshwar Raghavan

Size Matters: Reconstructing Real-Scale 3D Models from Monocular Images for Food Portion Estimation

Jan 27, 2026

Abstract:The rise of chronic diseases related to diet, such as obesity and diabetes, emphasizes the need for accurate monitoring of food intake. While AI-driven dietary assessment has made strides in recent years, the ill-posed nature of recovering size (portion) information from monocular images for accurate estimation of "how much did you eat?" is a pressing challenge. Some 3D reconstruction methods have achieved impressive geometric reconstruction but fail to recover the crucial real-world scale of the reconstructed object, limiting their usage in precision nutrition. In this paper, we bridge the gap between 3D computer vision and digital health by proposing a method that recovers a true-to-scale 3D reconstructed object from a monocular image. Our approach leverages rich visual features extracted from models trained on large-scale datasets to estimate the scale of the reconstructed object. This learned scale enables us to convert single-view 3D reconstructions into true-to-life, physically meaningful models. Extensive experiments and ablation studies on two publicly available datasets show that our method consistently outperforms existing techniques, achieving nearly a 30% reduction in mean absolute volume-estimation error, showcasing its potential to enhance the domain of precision nutrition. Code: https://gitlab.com/viper-purdue/size-matters

PANDA - Patch And Distribution-Aware Augmentation for Long-Tailed Exemplar-Free Continual Learning

Nov 12, 2025

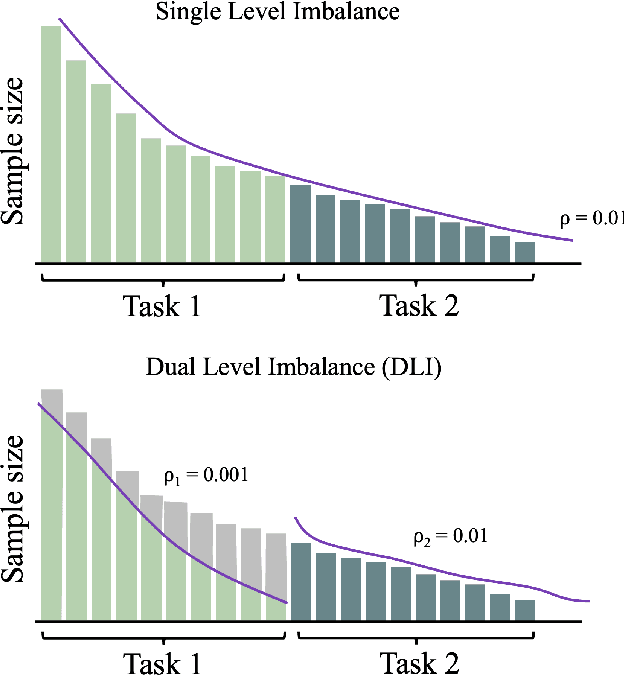

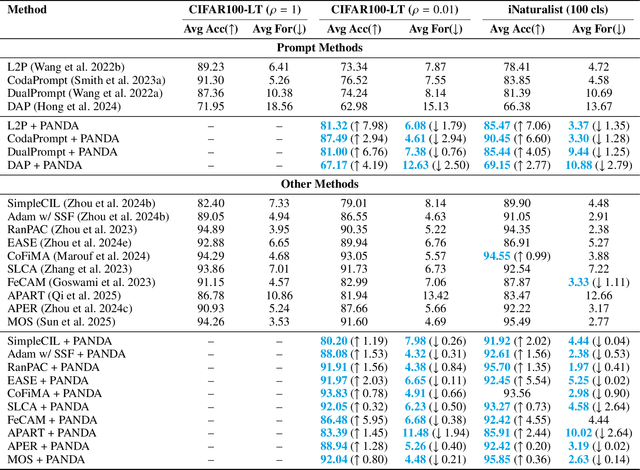

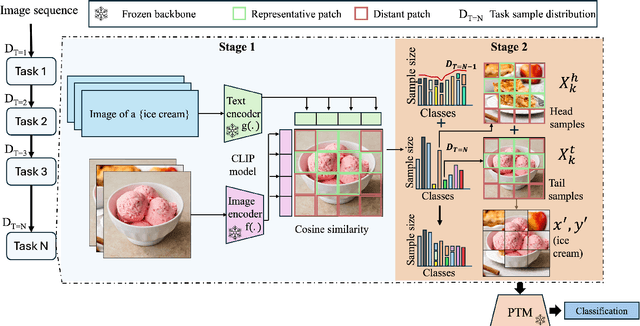

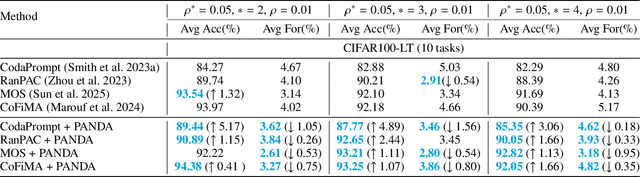

Abstract:Exemplar-Free Continual Learning (EFCL) restricts the storage of previous task data and is highly susceptible to catastrophic forgetting. While pre-trained models (PTMs) are increasingly leveraged for EFCL, existing methods often overlook the inherent imbalance of real-world data distributions. We discovered that real-world data streams commonly exhibit dual-level imbalances: dataset-level distributions combined with extreme or reversed skews within individual tasks, creating both intra-task and inter-task disparities that hinder effective learning and generalization. To address these challenges, we propose PANDA, a Patch-and-Distribution-Aware Augmentation framework that integrates seamlessly with existing PTM-based EFCL methods. PANDA amplifies low-frequency classes by using a CLIP encoder to identify representative regions and transplanting those into frequent-class samples within each task. Furthermore, PANDA incorporates an adaptive balancing strategy that leverages prior task distributions to smooth inter-task imbalances, reducing the overall gap between average samples across tasks and enabling fairer learning with frozen PTMs. Extensive experiments and ablation studies demonstrate PANDA's capability to work with existing PTM-based CL methods, improving accuracy and reducing catastrophic forgetting.

MFP3D: Monocular Food Portion Estimation Leveraging 3D Point Clouds

Nov 14, 2024

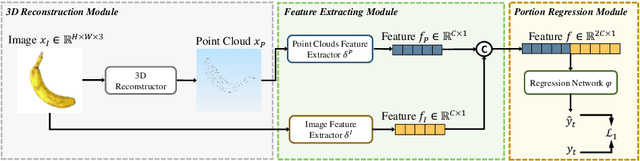

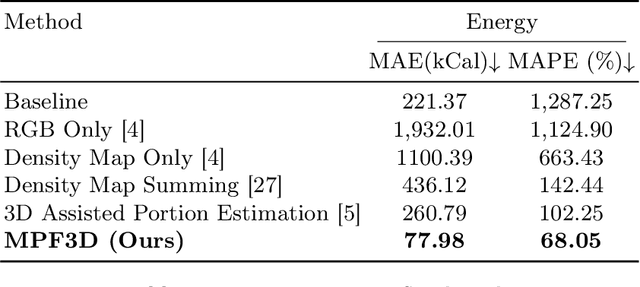

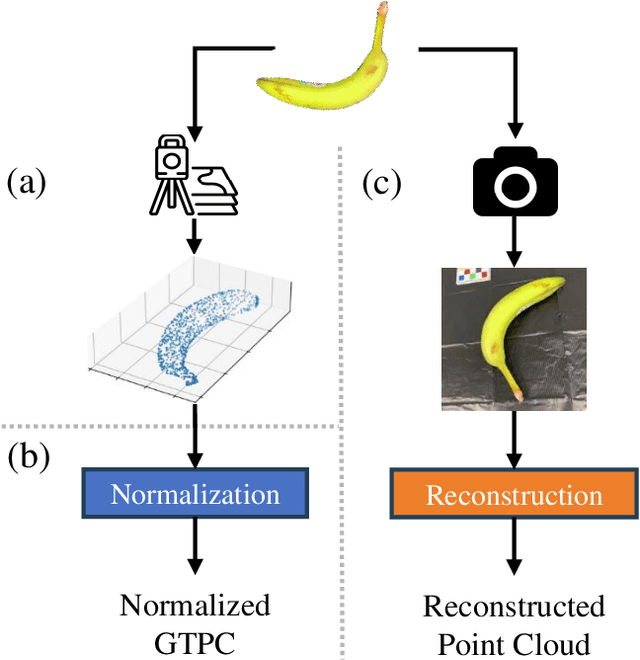

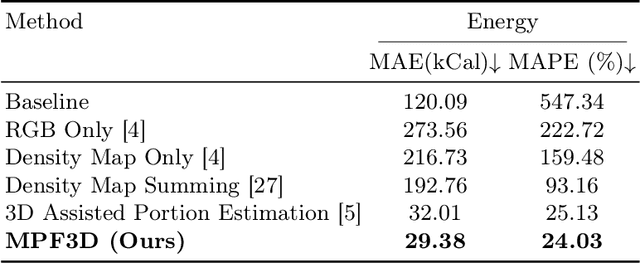

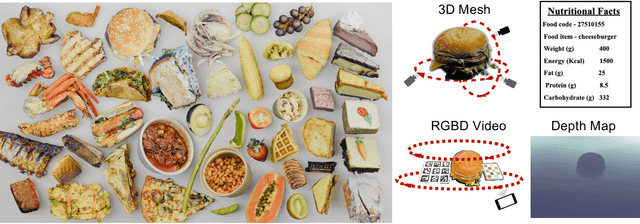

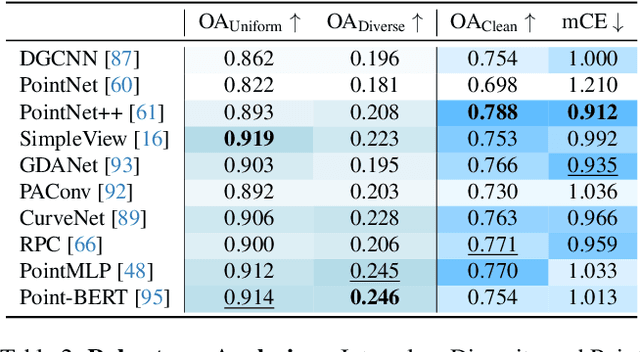

Abstract:Food portion estimation is crucial for monitoring health and tracking dietary intake. Image-based dietary assessment, which involves analyzing eating occasion images using computer vision techniques, is increasingly replacing traditional methods such as 24-hour recalls. However, accurately estimating the nutritional content from images remains challenging due to the loss of 3D information when projecting to the 2D image plane. Existing portion estimation methods are challenging to deploy in real-world scenarios due to their reliance on specific requirements, such as physical reference objects, high-quality depth information, or multi-view images and videos. In this paper, we introduce MFP3D, a new framework for accurate food portion estimation using only a single monocular image. Specifically, MFP3D consists of three key modules: (1) a 3D Reconstruction Module that generates a 3D point cloud representation of the food from the 2D image, (2) a Feature Extraction Module that extracts and concatenates features from both the 3D point cloud and the 2D RGB image, and (3) a Portion Regression Module that employs a deep regression model to estimate the food's volume and energy content based on the extracted features. MFP3D is evaluated on the MetaFood3D dataset, demonstrating a significant improvement in portion estimation accuracy over existing methods.

MetaFood3D: Large 3D Food Object Dataset with Nutrition Values

Sep 03, 2024

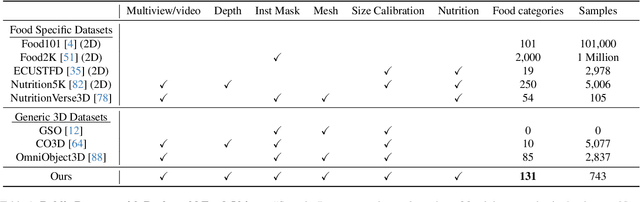

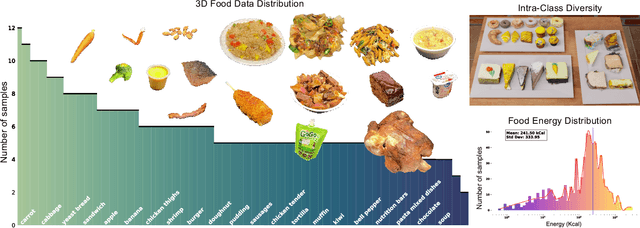

Abstract:Food computing is both important and challenging in computer vision (CV). It significantly contributes to the development of CV algorithms due to its frequent presence in datasets across various applications, ranging from classification and instance segmentation to 3D reconstruction. The polymorphic shapes and textures of food, coupled with high variation in forms and vast multimodal information, including language descriptions and nutritional data, make food computing a complex and demanding task for modern CV algorithms. 3D food modeling is a new frontier for addressing food-related problems, due to its inherent capability to deal with random camera views and its straightforward representation for calculating food portion size. However, the primary hurdle in the development of algorithms for food object analysis is the lack of nutrition values in existing 3D datasets. Moreover, in the broader field of 3D research, there is a critical need for domain-specific test datasets. To bridge the gap between general 3D vision and food computing research, we propose MetaFood3D. This dataset consists of 637 meticulously labeled 3D food objects across 108 categories, featuring detailed nutrition information, weight, and food codes linked to a comprehensive nutrition database. The dataset emphasizes intra-class diversity and includes rich modalities such as textured mesh files, RGB-D videos, and segmentation masks. Experimental results demonstrate our dataset's significant potential for improving algorithm performance, highlight the challenging gap between video captures and 3D scanned data, and show the strength of the MetaFood3D dataset in high-quality data generation, simulation, and augmentation.

DELTA: Decoupling Long-Tailed Online Continual Learning

Apr 06, 2024

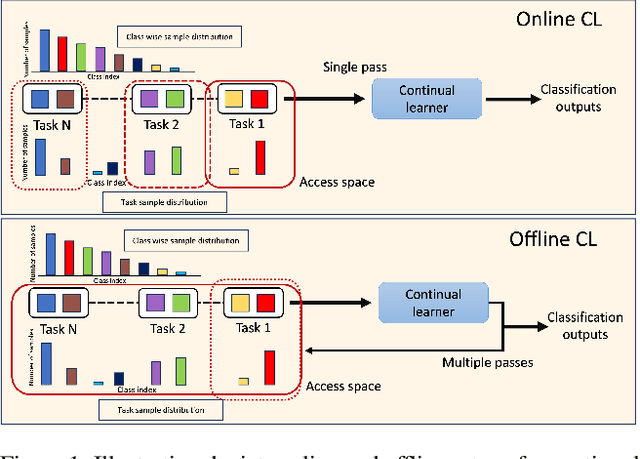

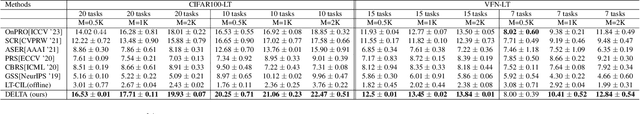

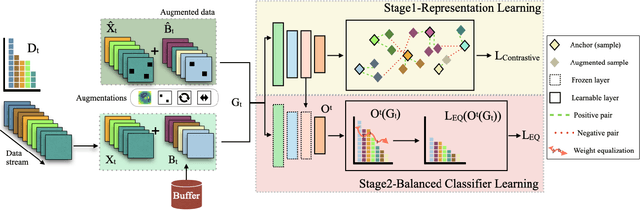

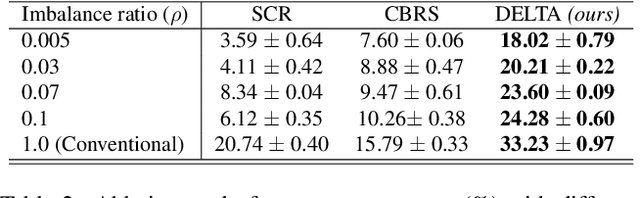

Abstract:A significant challenge in achieving ubiquitous Artificial Intelligence is the limited ability of models to rapidly learn new information in real-world scenarios where data follows long-tailed distributions, all while avoiding forgetting previously acquired knowledge. In this work, we study the under-explored problem of Long-Tailed Online Continual Learning (LTOCL), which aims to learn new tasks from sequentially arriving class-imbalanced data streams. Each sample is observed only once for training, without knowledge of the task data distribution. We present DELTA, a decoupled learning approach designed to enhance learning representations and address the substantial imbalance in LTOCL. We enhance the learning process by adapting supervised contrastive learning to attract similar samples and repel dissimilar (out-of-class) samples. Further, by balancing gradients during training using an equalization loss, DELTA significantly enhances learning outcomes and successfully mitigates catastrophic forgetting. Through extensive evaluation, we demonstrate that DELTA improves the capacity for incremental learning, surpassing existing OCL methods. Our results suggest considerable promise for applying OCL in real-world applications.

Online Class-Incremental Learning For Real-World Food Classification

Jan 12, 2023

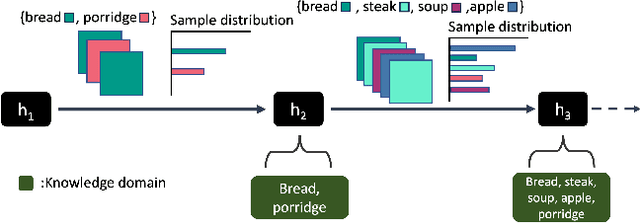

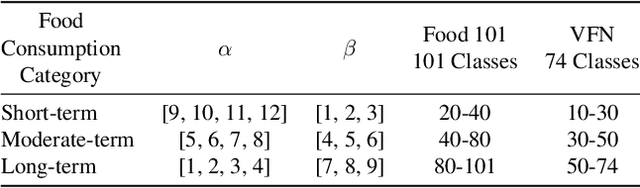

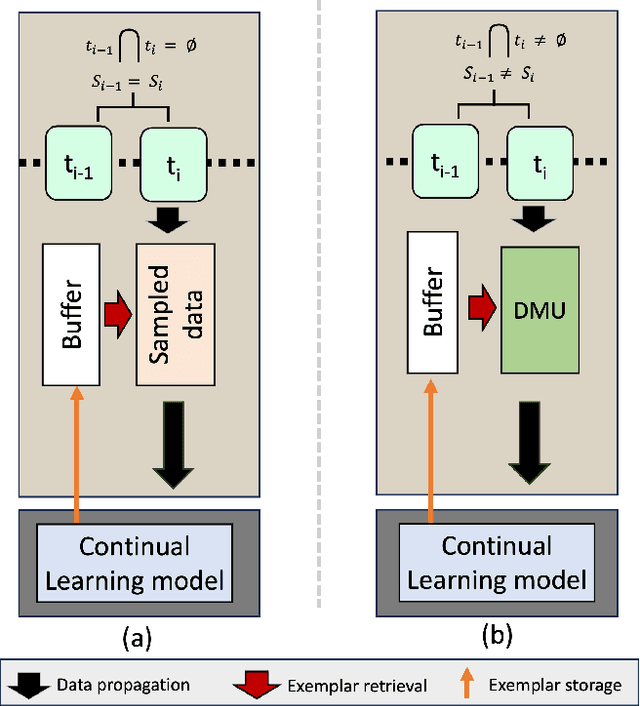

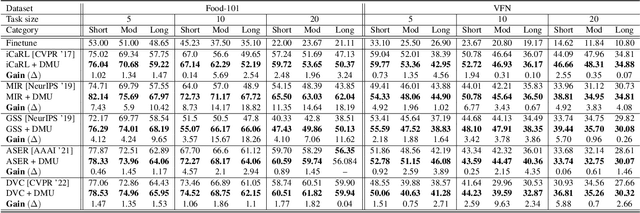

Abstract:Online Class-Incremental Learning (OCIL) aims to continuously learn new information from single-pass data streams to update the model and mitigate catastrophic forgetting. However, most existing OCIL methods make several assumptions, including non-overlapped classes across phases and an equal number of classes in each learning phase. This is a highly simplified view of typical real-world scenarios. In this paper, we extend OCIL to the real-world food image classification task by removing these assumptions and significantly improving the performance of existing OCIL methods. We first introduce a novel probabilistic framework to simulate realistic food data sequences in different scenarios, including strict, moderate, and open diets, as a new benchmark experiment protocol. Next, we propose a novel plug-and-play module to dynamically select relevant images during training for the model update to improve learning and forgetting performance. Our proposed module can be incorporated into existing Experience Replay (ER) methods, which store representative samples from each class into an episodic memory buffer for knowledge rehearsal. We evaluate our method on the challenging Food-101 dataset and show substantial improvements over the current OCIL methods, demonstrating great potential for lifelong learning of real-world food image classification.

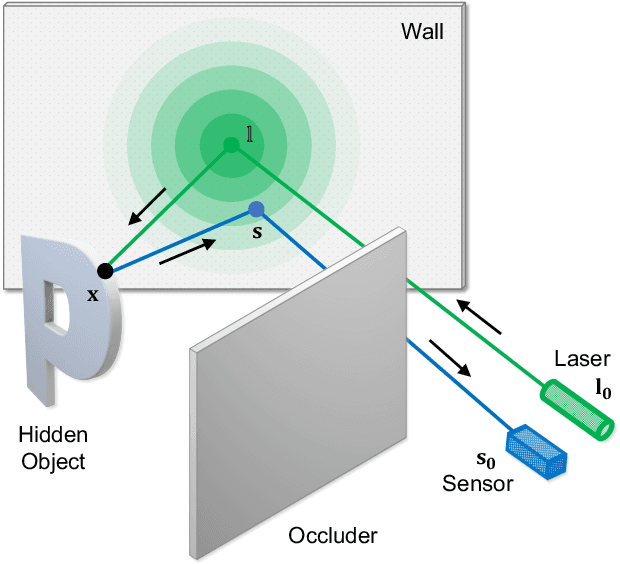

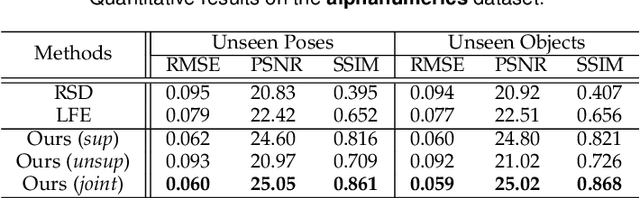

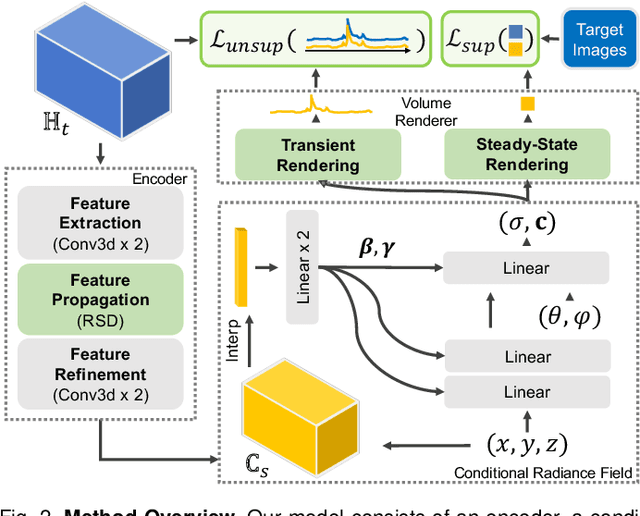

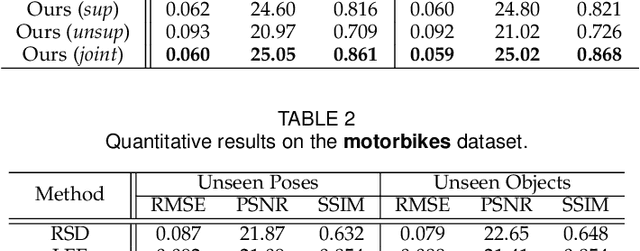

Physics to the Rescue: Deep Non-line-of-sight Reconstruction for High-speed Imaging

May 03, 2022

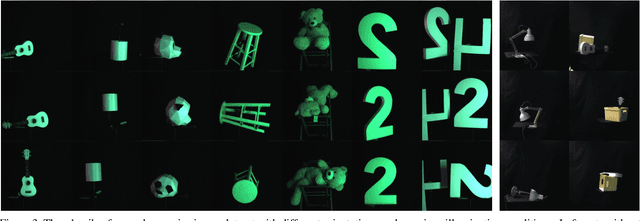

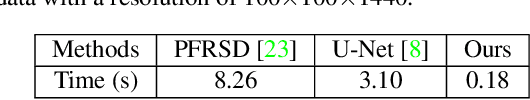

Abstract:The computational approach to imaging around the corner, or non-line-of-sight (NLOS) imaging, is becoming a reality thanks to major advances in imaging hardware and reconstruction algorithms. In a recent development towards practical NLOS imaging, Nam et al. demonstrated a high-speed non-confocal imaging system that operates at 5Hz, 100x faster than the prior art. This enormous gain in acquisition rate, however, necessitates numerous approximations in light transport, breaking many existing NLOS reconstruction methods that assume an idealized image formation model. To bridge the gap, we present a novel deep model that incorporates the complementary physics priors of wave propagation and volume rendering into a neural network for high-quality and robust NLOS reconstruction. This orchestrated design regularizes the solution space by relaxing the image formation model, resulting in a deep model that generalizes well on real captures despite being exclusively trained on synthetic data. Further, we devise a unified learning framework that enables our model to be flexibly trained using diverse supervision signals, including target intensity images or even raw NLOS transient measurements. Once trained, our model renders both intensity and depth images at inference time in a single forward pass, capable of processing more than 5 captures per second on a high-end GPU. Through extensive qualitative and quantitative experiments, we show that our method outperforms prior physics-based and learning-based approaches on both synthetic and real measurements. We anticipate that our method, along with the fast capturing system, will accelerate future development of NLOS imaging for real-world applications that require high-speed imaging.

Towards Non-Line-of-Sight Photography

Sep 16, 2021

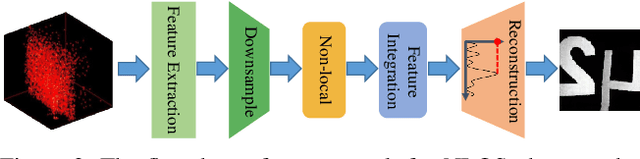

Abstract:Non-line-of-sight (NLOS) imaging is based on capturing the multi-bounce indirect reflections from the hidden objects. Active NLOS imaging systems rely on the capture of the time of flight of light through the scene, and have shown great promise for the accurate and robust reconstruction of hidden scenes without the need for specialized scene setups and prior assumptions. Although existing methods can reconstruct 3D geometries of the hidden scene with excellent depth resolution, accurately recovering object textures and appearance with high lateral resolution remains a challenging problem. In this work, we propose a new problem formulation, called NLOS photography, to specifically address this deficiency. Rather than performing an intermediate estimate of the 3D scene geometry, our method follows a data-driven approach and directly reconstructs 2D images of an NLOS scene that closely resemble the pictures taken with a conventional camera from the location of the relay wall. This formulation largely simplifies the challenging reconstruction problem by bypassing the explicit modeling of 3D geometry, and enables the learning of a deep model with a relatively small training dataset. The results are NLOS reconstructions of unprecedented lateral resolution and image quality.
