Ji Hyun Nam

Physics to the Rescue: Deep Non-line-of-sight Reconstruction for High-speed Imaging

May 03, 2022

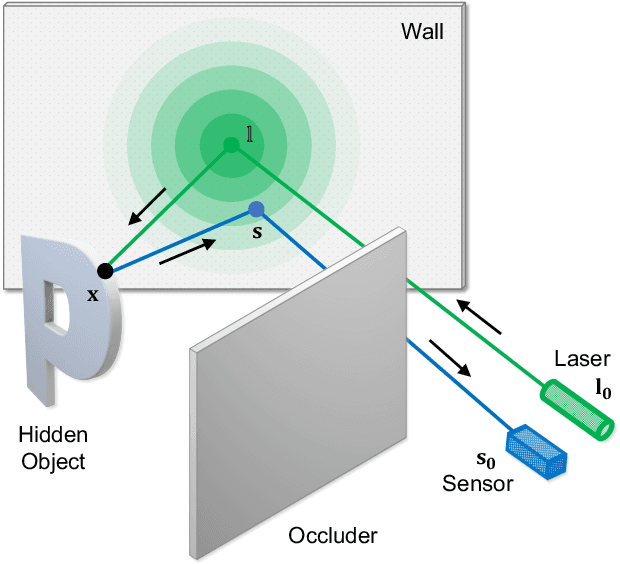

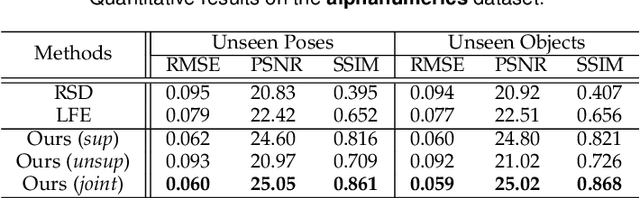

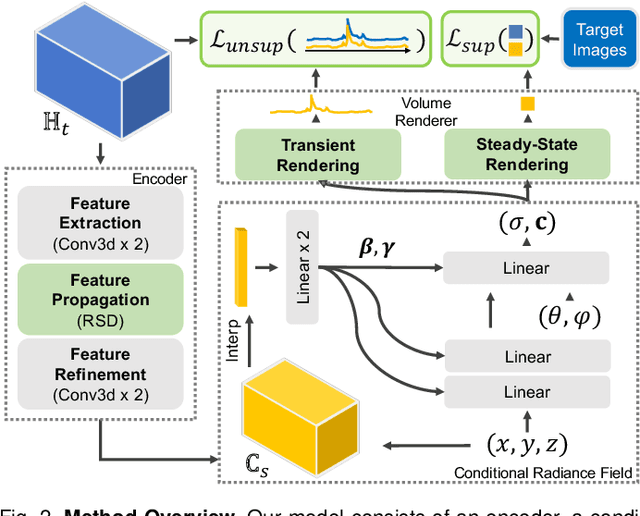

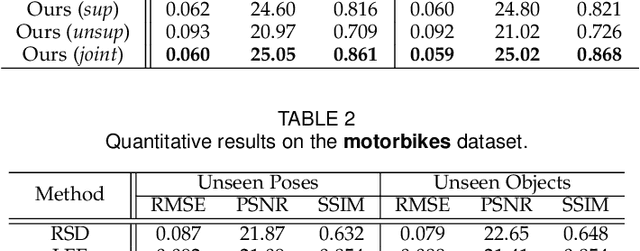

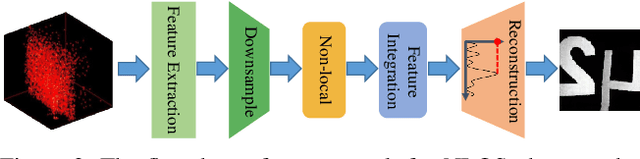

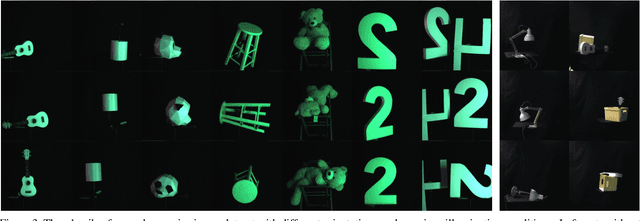

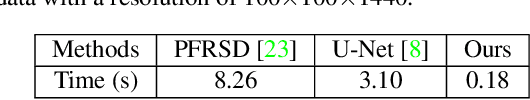

Abstract: Computational approaches to imaging around the corner, or non-line-of-sight (NLOS) imaging, are becoming a reality thanks to major advances in imaging hardware and reconstruction algorithms. In a recent development towards practical NLOS imaging, Nam et al. demonstrated a high-speed non-confocal imaging system that operates at 5 Hz, 100x faster than the prior art. This enormous gain in acquisition rate, however, necessitates numerous approximations in light transport, breaking many existing NLOS reconstruction methods that assume an idealized image formation model. To bridge the gap, we present a novel deep model that incorporates the complementary physics priors of wave propagation and volume rendering into a neural network for high-quality and robust NLOS reconstruction. This orchestrated design regularizes the solution space by relaxing the image formation model, resulting in a deep model that generalizes well on real captures despite being exclusively trained on synthetic data. Further, we devise a unified learning framework that enables our model to be flexibly trained using diverse supervision signals, including target intensity images or even raw NLOS transient measurements. Once trained, our model renders both intensity and depth images at inference time in a single forward pass and can process more than 5 captures per second on a high-end GPU. Through extensive qualitative and quantitative experiments, we show that our method outperforms prior physics-based and learning-based approaches on both synthetic and real measurements. We anticipate that our method, along with the fast capturing system, will accelerate future development of NLOS imaging for real-world applications that require high-speed imaging.

Towards Non-Line-of-Sight Photography

Sep 16, 2021

Abstract: Non-line-of-sight (NLOS) imaging is based on capturing the multi-bounce indirect reflections from hidden objects. Active NLOS imaging systems rely on capturing the time of flight of light through the scene, and have shown great promise for the accurate and robust reconstruction of hidden scenes without the need for specialized scene setups and prior assumptions. Although existing methods can reconstruct 3D geometries of the hidden scene with excellent depth resolution, accurately recovering object textures and appearance with high lateral resolution remains a challenging problem. In this work, we propose a new problem formulation, called NLOS photography, to specifically address this deficiency. Rather than performing an intermediate estimate of the 3D scene geometry, our method follows a data-driven approach and directly reconstructs 2D images of an NLOS scene that closely resemble the pictures taken with a conventional camera from the location of the relay wall. This formulation largely simplifies the challenging reconstruction problem by bypassing the explicit modeling of 3D geometry, and enables the learning of a deep model with a relatively small training dataset. The results are NLOS reconstructions of unprecedented lateral resolution and image quality.

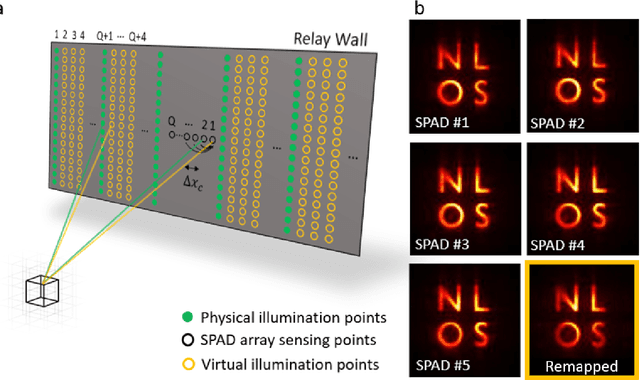

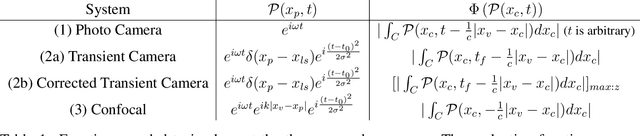

Virtual light transport matrices for non-line-of-sight imaging

Mar 23, 2021

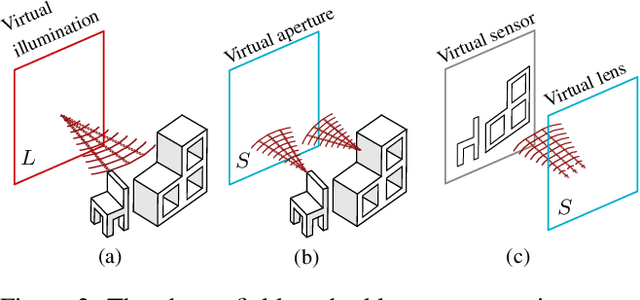

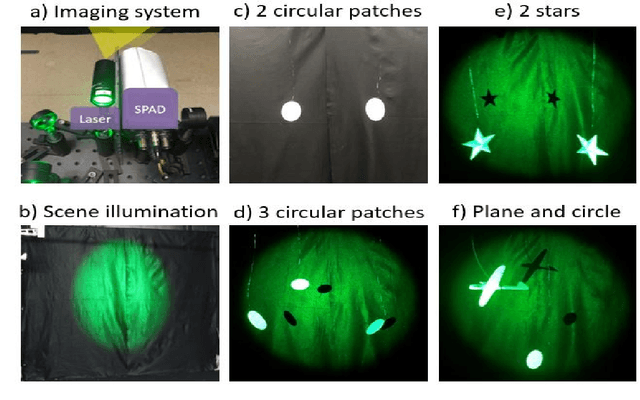

Abstract: The light transport matrix (LTM) is an instrumental tool in line-of-sight (LOS) imaging, describing how light interacts with the scene and enabling applications such as relighting or separation of illumination components. We introduce a framework to estimate the LTM of non-line-of-sight (NLOS) scenarios, coupling recent virtual forward light propagation models for NLOS imaging with the LOS light transport equation. We design computational projector-camera setups, and use these virtual imaging systems to estimate the transport matrix of hidden scenes. We introduce the specific illumination functions to compute the different elements of the matrix, overcoming the challenging wide-aperture conditions of NLOS setups. Our NLOS light transport matrix allows us to (re)illuminate specific locations of a hidden scene, and separate direct, first-order indirect, and higher-order indirect illumination of complex cluttered hidden scenes, similar to existing LOS techniques.
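As a rough illustration of the LOS light transport equation the abstract refers to, the sketch below treats a camera image `c` as a linear function of the illumination pattern `p`, that is `c = T p`. It estimates `T` by lighting one projector pixel at a time and then relights the scene with a new pattern. This is a generic brute-force probing scheme, not the paper's virtual NLOS pipeline. The function names (`capture`, `estimate_ltm`) and the random toy matrix are assumptions made for this example.

```python
import numpy as np

def capture(T_true, p):
    """Hypothetical stand-in for one projector-camera measurement: c = T p."""
    return T_true @ p

def estimate_ltm(capture_fn, n_proj):
    """Probe with one-hot illumination; each capture is one column of T."""
    columns = []
    for j in range(n_proj):
        p = np.zeros(n_proj)
        p[j] = 1.0                      # light only projector pixel j
        columns.append(capture_fn(p))   # resulting image is column j of T
    return np.stack(columns, axis=1)

# Toy scene (assumed): 6 camera pixels, 4 projector pixels.
rng = np.random.default_rng(0)
n_cam, n_proj = 6, 4
T_true = rng.random((n_cam, n_proj))

T_est = estimate_ltm(lambda p: capture(T_true, p), n_proj)

# Relighting: predict the image under a new illumination pattern.
p_new = np.array([0.0, 1.0, 0.0, 0.5])
relit = T_est @ p_new
```

Once `T_est` is available, relighting is a single matrix-vector product, which is why the transport matrix is convenient for the applications listed in the abstract.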

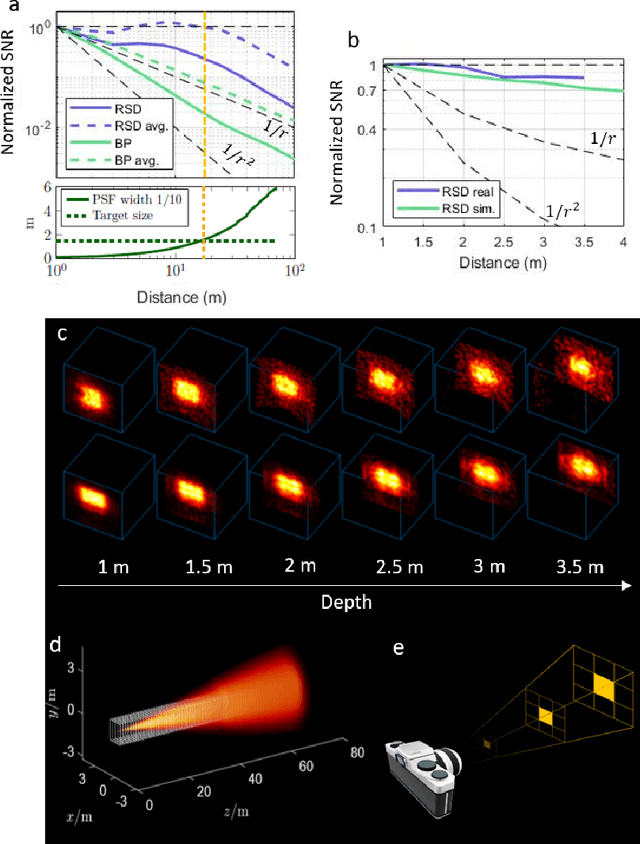

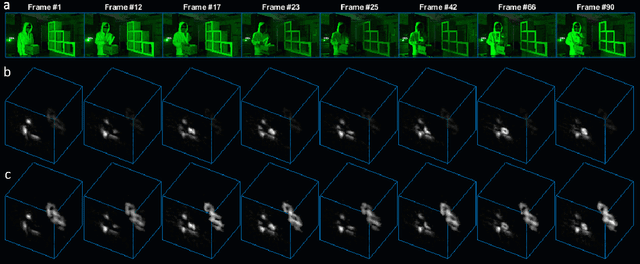

Real-time Non-line-of-Sight imaging of dynamic scenes

Oct 24, 2020

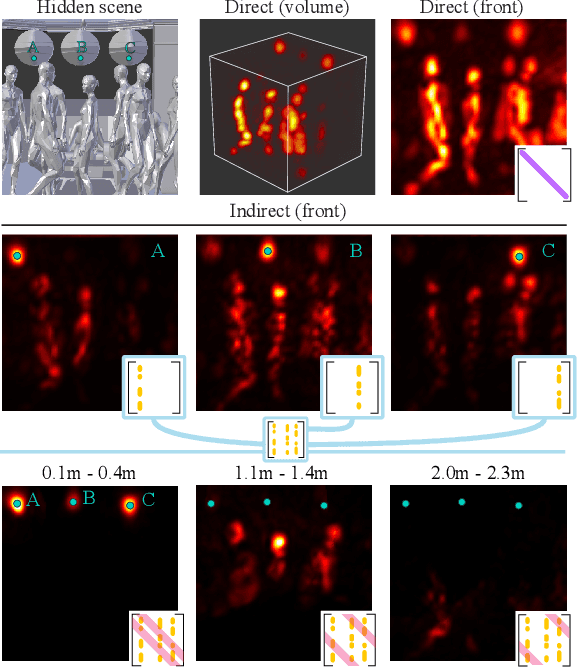

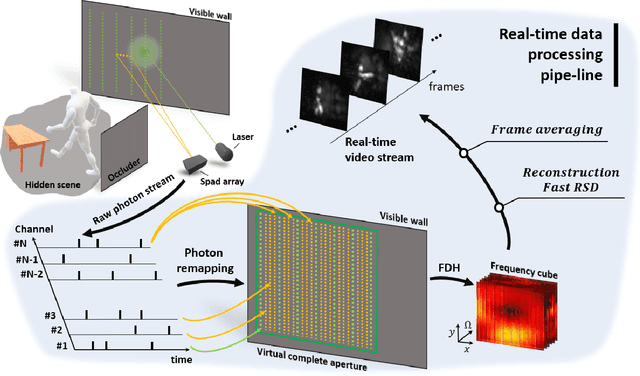

Abstract: Non-Line-of-Sight (NLOS) imaging aims at recovering the 3D geometry of objects that are hidden from the direct line of sight. In the past, this method has suffered from the weak available multibounce signal, which limits scene size, capture speed, and reconstruction quality. While algorithms capable of reconstructing scenes at several frames per second have been demonstrated, real-time NLOS video has only been demonstrated for retro-reflective objects where the NLOS signal strength is enhanced by 4 orders of magnitude or more. Furthermore, it has also been noted that the signal-to-noise ratio of reconstructions in NLOS methods drops quickly with distance, and past reconstructions have therefore been limited to small scenes with depths of a few meters. Actual models of noise and resolution in the scene have been simplistic, ignoring many of the complexities of the problem. We show that SPAD (Single-Photon Avalanche Diode) array detectors with a total of just 28 pixels, combined with a specifically extended Phasor Field reconstruction algorithm, can reconstruct live real-time videos of non-retro-reflective NLOS scenes. We provide an analysis of the Signal-to-Noise-Ratio (SNR) of our reconstructions and show that for our method it is possible to reconstruct the scene such that SNR, motion blur, angular resolution, and depth resolution are all independent of scene size, suggesting that reconstruction of very large scenes may be possible. In the future, the light efficiency for NLOS imaging systems can be improved further by adding more pixels to the sensor array.

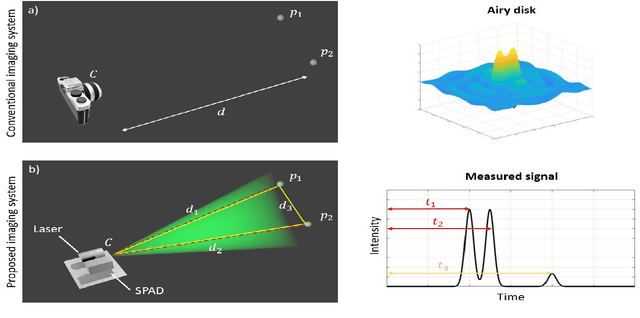

Super-Resolution Remote Imaging using Time Encoded Remote Apertures

Jul 16, 2020

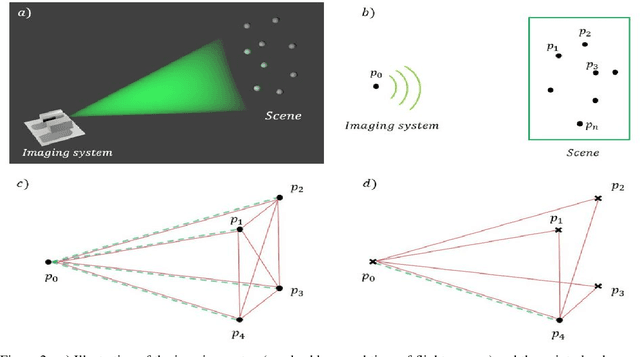

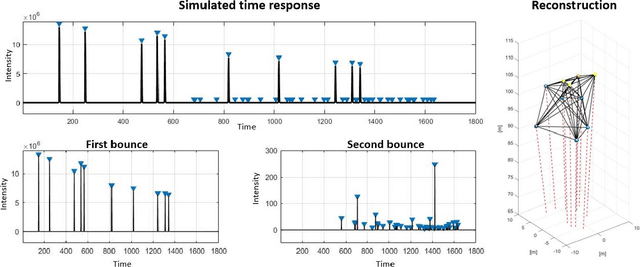

Abstract: Imaging of scenes using light or other wave phenomena is subject to the diffraction limit. The spatial profile of a wave propagating between a scene and the imaging system is distorted by diffraction, resulting in a loss of resolution that is proportional to the distance traveled. We show here that it is possible to reconstruct sparse scenes from the temporal profile of the wave-front using only one spatial pixel or a spatial average. The temporal profile of the wave is not affected by diffraction, yielding an imaging method that can in theory achieve wavelength-scale resolution independent of distance from the scene.

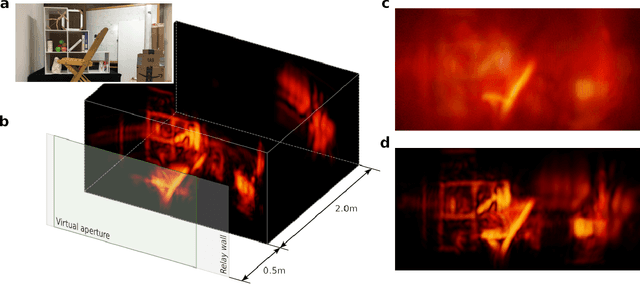

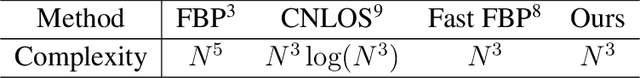

Virtual Wave Optics for Non-Line-of-Sight Imaging

Oct 17, 2018

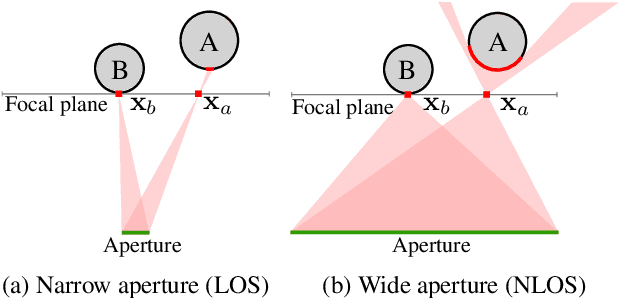

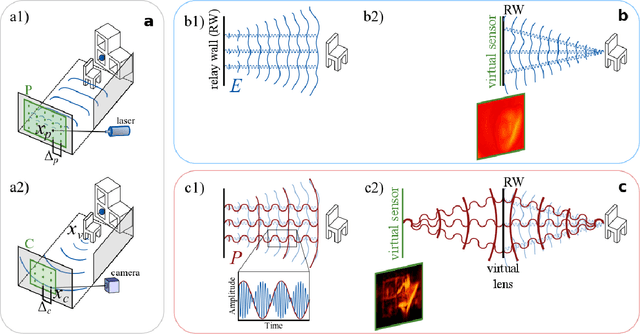

Abstract: Non-Line-of-Sight (NLOS) imaging makes it possible to observe objects partially or fully occluded from direct view by analyzing indirect diffuse reflections off a secondary relay surface. Despite its many potential applications, existing methods lack practical usability due to several shared limitations, including the assumption of single scattering only, lack of occlusions, and Lambertian reflectance. We lift these limitations by transforming the NLOS problem into a virtual Line-Of-Sight (LOS) one. Since imaging information cannot be recovered from the irradiance arriving at the relay surface, we introduce the concept of the phasor field, a mathematical construct representing a fast variation in irradiance. We show that NLOS light transport can be modeled as the propagation of a phasor field wave, which can be solved accurately by the Rayleigh-Sommerfeld diffraction integral. We demonstrate for the first time NLOS reconstruction of complex scenes with strong multiply scattered and ambient light, arbitrary materials, large depth range, and occlusions. Our method handles these challenging cases without explicitly developing a light transport model. By leveraging existing fast algorithms, we outperform existing methods in terms of execution speed, computational complexity, and memory use. We believe that our approach will help unlock the potential of NLOS imaging, and the development of novel applications not restricted to lab conditions. For example, we demonstrate both refocusing and transient NLOS videos of real-world, complex scenes with large depth.
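To make the Rayleigh-Sommerfeld propagation step more concrete, here is a minimal sketch of a discretized, monochromatic version of the integral: each destination point sums the source field weighted by the spherical-wave kernel `exp(i k r) / r`. It is a simplified illustration only. Constant prefactors, the area element, and the obliquity term are omitted, and the function name `rsd_propagate`, the sample points, and the wavelength are assumptions for this example rather than values from the paper.

```python
import numpy as np

def rsd_propagate(src_pts, src_field, dst_pts, wavelength):
    """Sum spherical waves exp(i k r) / r from every source point (simplified RSD)."""
    k = 2.0 * np.pi / wavelength
    # Pairwise distances between destination and source points: (n_dst, n_src).
    r = np.linalg.norm(dst_pts[:, None, :] - src_pts[None, :, :], axis=-1)
    kernel = np.exp(1j * k * r) / r
    return kernel @ src_field

# Sanity check with one unit-amplitude source at the origin (assumed values):
# the propagated magnitude should fall off as 1 / r.
src = np.array([[0.0, 0.0, 0.0]])
field = np.array([1.0 + 0.0j])
dst = np.array([[0.0, 0.0, 2.0]])
out = rsd_propagate(src, field, dst, wavelength=0.04)
```

The dense kernel costs memory proportional to the number of source points times the number of destination points, so a direct sum like this is only practical for small grids.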
