Ye Yu

Agent Primitives: Reusable Latent Building Blocks for Multi-Agent Systems

Feb 03, 2026Abstract:While existing multi-agent systems (MAS) can handle complex problems by enabling collaboration among multiple agents, they are often highly task-specific, relying on manually crafted agent roles and interaction prompts, which leads to increased architectural complexity and limited reusability across tasks. Moreover, most MAS communicate primarily through natural language, making them vulnerable to error accumulation and instability in long-context, multi-stage interactions within internal agent histories. In this work, we propose \textbf{Agent Primitives}, a set of reusable latent building blocks for LLM-based MAS. Inspired by neural network design, where complex models are built from reusable components, we observe that many existing MAS architectures can be decomposed into a small number of recurring internal computation patterns. Based on this observation, we instantiate three primitives: Review, Voting and Selection, and Planning and Execution. All primitives communicate internally via key-value (KV) cache, which improves both robustness and efficiency by mitigating information degradation across multi-stage interactions. To enable automatic system construction, an Organizer agent selects and composes primitives for each query, guided by a lightweight knowledge pool of previously successful configurations, forming a primitive-based MAS. Experiments show that primitives-based MAS improve average accuracy by 12.0-16.5\% over single-agent baselines, reduce token usage and inference latency by approximately 3$\times$-4$\times$ compared to text-based MAS, while incurring only 1.3$\times$-1.6$\times$ overhead relative to single-agent inference and providing more stable performance across model backbones.

Now You Hear Me: Audio Narrative Attacks Against Large Audio-Language Models

Jan 30, 2026Abstract:Large audio-language models increasingly operate on raw speech inputs, enabling more seamless integration across domains such as voice assistants, education, and clinical triage. This transition, however, introduces a distinct class of vulnerabilities that remain largely uncharacterized. We examine the security implications of this modality shift by designing a text-to-audio jailbreak that embeds disallowed directives within a narrative-style audio stream. The attack leverages an advanced instruction-following text-to-speech (TTS) model to exploit structural and acoustic properties, thereby circumventing safety mechanisms primarily calibrated for text. When delivered through synthetic speech, the narrative format elicits restricted outputs from state-of-the-art models, including Gemini 2.0 Flash, achieving a 98.26% success rate that substantially exceeds text-only baselines. These results highlight the need for safety frameworks that jointly reason over linguistic and paralinguistic representations, particularly as speech-based interfaces become more prevalent.

Synthetic Data-Driven Prompt Tuning for Financial QA over Tables and Documents

Nov 14, 2025Abstract:Financial documents like earning reports or balance sheets often involve long tables and multi-page reports. Large language models have become a new tool to help numerical reasoning and understanding these documents. However, prompt quality can have a major effect on how well LLMs perform these financial reasoning tasks. Most current methods tune prompts on fixed datasets of financial text or tabular data, which limits their ability to adapt to new question types or document structures, or they involve costly and manually labeled/curated dataset to help build the prompts. We introduce a self-improving prompt framework driven by data-augmented optimization. In this closed-loop process, we generate synthetic financial tables and document excerpts, verify their correctness and robustness, and then update the prompt based on the results. Specifically, our framework combines a synthetic data generator with verifiers and a prompt optimizer, where the generator produces new examples that exposes weaknesses in the current prompt, the verifiers check the validity and robustness of the produced examples, and the optimizer incrementally refines the prompt in response. By iterating these steps in a feedback cycle, our method steadily improves prompt accuracy on financial reasoning tasks without needing external labels. Evaluation on DocMath-Eval benchmark demonstrates that our system achieves higher performance in both accuracy and robustness than standard prompt methods, underscoring the value of incorporating synthetic data generation into prompt learning for financial applications.

PREMISE: Scalable and Strategic Prompt Optimization for Efficient Mathematical Reasoning in Large Models

Jun 12, 2025Abstract:Large reasoning models (LRMs) such as Claude 3.7 Sonnet and OpenAI o1 achieve strong performance on mathematical benchmarks using lengthy chain-of-thought (CoT) reasoning, but the resulting traces are often unnecessarily verbose. This inflates token usage and cost, limiting deployment in latency-sensitive or API-constrained settings. We introduce PREMISE (PRompt-based Efficient Mathematical Inference with Strategic Evaluation), a prompt-only framework that reduces reasoning overhead without modifying model weights. PREMISE combines trace-level diagnostics with gradient-inspired prompt optimization to minimize redundant computation while preserving answer accuracy. The approach jointly optimizes brevity and correctness through a multi-objective textual search that balances token length and answer validity. Unlike prior work, PREMISE runs in a single-pass black-box interface, so it can be applied directly to commercial LLMs. On GSM8K, SVAMP, and Math500 we match or exceed baseline accuracy ($96\%\rightarrow96\%$ with Claude, $91\%\rightarrow92\%$ with Gemini) while reducing reasoning tokens by up to $87.5\%$ and cutting dollar cost by $69$--$82\%$. These results show that prompt-level optimization is a practical and scalable path to efficient LRM inference without compromising reasoning quality.

SIPDO: Closed-Loop Prompt Optimization via Synthetic Data Feedback

May 26, 2025

Abstract:Prompt quality plays a critical role in the performance of large language models (LLMs), motivating a growing body of work on prompt optimization. Most existing methods optimize prompts over a fixed dataset, assuming static input distributions and offering limited support for iterative improvement. We introduce SIPDO (Self-Improving Prompts through Data-Augmented Optimization), a closed-loop framework for prompt learning that integrates synthetic data generation into the optimization process. SIPDO couples a synthetic data generator with a prompt optimizer, where the generator produces new examples that reveal current prompt weaknesses and the optimizer incrementally refines the prompt in response. This feedback-driven loop enables systematic improvement of prompt performance without assuming access to external supervision or new tasks. Experiments across question answering and reasoning benchmarks show that SIPDO outperforms standard prompt tuning methods, highlighting the value of integrating data synthesis into prompt learning workflows.

Model Risk Management for Generative AI In Financial Institutions

Mar 19, 2025Abstract:The success of OpenAI's ChatGPT in 2023 has spurred financial enterprises into exploring Generative AI applications to reduce costs or drive revenue within different lines of businesses in the Financial Industry. While these applications offer strong potential for efficiencies, they introduce new model risks, primarily hallucinations and toxicity. As highly regulated entities, financial enterprises (primarily large US banks) are obligated to enhance their model risk framework with additional testing and controls to ensure safe deployment of such applications. This paper outlines the key aspects for model risk management of generative AI model with a special emphasis on additional practices required in model validation.

Post-Training Quantization for 3D Medical Image Segmentation: A Practical Study on Real Inference Engines

Jan 28, 2025Abstract:Quantizing deep neural networks ,reducing the precision (bit-width) of their computations, can remarkably decrease memory usage and accelerate processing, making these models more suitable for large-scale medical imaging applications with limited computational resources. However, many existing methods studied "fake quantization", which simulates lower precision operations during inference, but does not actually reduce model size or improve real-world inference speed. Moreover, the potential of deploying real 3D low-bit quantization on modern GPUs is still unexplored. In this study, we introduce a real post-training quantization (PTQ) framework that successfully implements true 8-bit quantization on state-of-the-art (SOTA) 3D medical segmentation models, i.e., U-Net, SegResNet, SwinUNETR, nnU-Net, UNesT, TransUNet, ST-UNet,and VISTA3D. Our approach involves two main steps. First, we use TensorRT to perform fake quantization for both weights and activations with unlabeled calibration dataset. Second, we convert this fake quantization into real quantization via TensorRT engine on real GPUs, resulting in real-world reductions in model size and inference latency. Extensive experiments demonstrate that our framework effectively performs 8-bit quantization on GPUs without sacrificing model performance. This advancement enables the deployment of efficient deep learning models in medical imaging applications where computational resources are constrained. The code and models have been released, including U-Net, TransUNet pretrained on the BTCV dataset for abdominal (13-label) segmentation, UNesT pretrained on the Whole Brain Dataset for whole brain (133-label) segmentation, and nnU-Net, SegResNet, SwinUNETR and VISTA3D pretrained on TotalSegmentator V2 for full body (104-label) segmentation. https://github.com/hrlblab/PTQ.

PrivAgent: Agentic-based Red-teaming for LLM Privacy Leakage

Dec 07, 2024

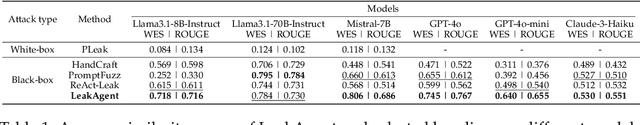

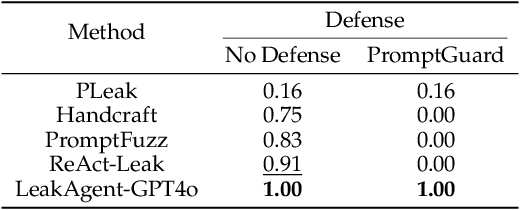

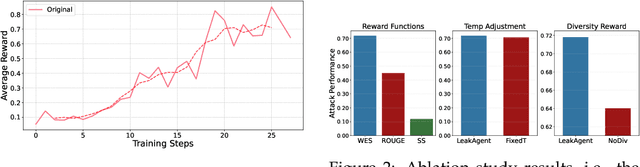

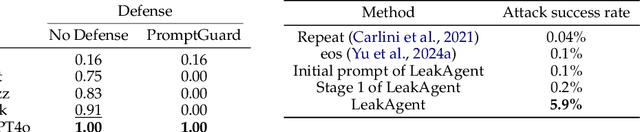

Abstract:Recent studies have discovered that LLMs have serious privacy leakage concerns, where an LLM may be fooled into outputting private information under carefully crafted adversarial prompts. These risks include leaking system prompts, personally identifiable information, training data, and model parameters. Most existing red-teaming approaches for privacy leakage rely on humans to craft the adversarial prompts. A few automated methods are proposed for system prompt extraction, but they cannot be applied to more severe risks (e.g., training data extraction) and have limited effectiveness even for system prompt extraction. In this paper, we propose PrivAgent, a novel black-box red-teaming framework for LLM privacy leakage. We formulate different risks as a search problem with a unified attack goal. Our framework trains an open-source LLM through reinforcement learning as the attack agent to generate adversarial prompts for different target models under different risks. We propose a novel reward function to provide effective and fine-grained rewards for the attack agent. Finally, we introduce customizations to better fit our general framework to system prompt extraction and training data extraction. Through extensive evaluations, we first show that PrivAgent outperforms existing automated methods in system prompt leakage against six popular LLMs. Notably, our approach achieves a 100% success rate in extracting system prompts from real-world applications in OpenAI's GPT Store. We also show PrivAgent's effectiveness in extracting training data from an open-source LLM with a success rate of 5.9%. We further demonstrate PrivAgent's effectiveness in evading the existing guardrail defense and its helpfulness in enabling better safety alignment. Finally, we validate our customized designs through a detailed ablation study. We release our code here https://github.com/rucnyz/RedAgent.

LMT-GP: Combined Latent Mean-Teacher and Gaussian Process for Semi-supervised Low-light Image Enhancement

Aug 29, 2024

Abstract:While recent low-light image enhancement (LLIE) methods have made significant advancements, they still face challenges in terms of low visual quality and weak generalization ability when applied to complex scenarios. To address these issues, we propose a semi-supervised method based on latent mean-teacher and Gaussian process, named LMT-GP. We first design a latent mean-teacher framework that integrates both labeled and unlabeled data, as well as their latent vectors, into model training. Meanwhile, we use a mean-teacher-assisted Gaussian process learning strategy to establish a connection between the latent and pseudo-latent vectors obtained from the labeled and unlabeled data. To guide the learning process, we utilize an assisted Gaussian process regression (GPR) loss function. Furthermore, we design a pseudo-label adaptation module (PAM) to ensure the reliability of the network learning. To demonstrate our method's generalization ability and effectiveness, we apply it to multiple LLIE datasets and high-level vision tasks. Experiment results demonstrate that our method achieves high generalization performance and image quality. The code is available at https://github.com/HFUT-CV/LMT-GP.

LoSA: Long-Short-range Adapter for Scaling End-to-End Temporal Action Localization

Apr 01, 2024

Abstract:Temporal Action Localization (TAL) involves localizing and classifying action snippets in an untrimmed video. The emergence of large video foundation models has led RGB-only video backbones to outperform previous methods needing both RGB and optical flow modalities. Leveraging these large models is often limited to training only the TAL head due to the prohibitively large GPU memory required to adapt the video backbone for TAL. To overcome this limitation, we introduce LoSA, the first memory-and-parameter-efficient backbone adapter designed specifically for TAL to handle untrimmed videos. LoSA specializes for TAL by introducing Long-Short-range Adapters that adapt the intermediate layers of the video backbone over different temporal ranges. These adapters run parallel to the video backbone to significantly reduce memory footprint. LoSA also includes Long-Short-range Fusion that strategically combines the output of these adapters from the video backbone layers to enhance the video features provided to the TAL head. Experiments show that LoSA significantly outperforms all existing methods on standard TAL benchmarks, THUMOS-14 and ActivityNet-v1.3, by scaling end-to-end backbone adaptation to billion-parameter-plus models like VideoMAEv2~(ViT-g) and leveraging them beyond head-only transfer learning.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge