Jie Chen

Shanghai Research Institute for Intelligent Autonomous Systems, Tongji University, Shanghai, China

ApET: Approximation-Error Guided Token Compression for Efficient VLMs

Feb 23, 2026

Abstract: Recent Vision-Language Models (VLMs) have demonstrated remarkable multimodal understanding capabilities, yet redundant visual tokens incur prohibitive computational overhead and degrade inference efficiency. Prior studies typically rely on [CLS] attention or text-vision cross-attention to identify and discard redundant visual tokens. Despite promising results, such solutions are prone to introducing positional bias and, more critically, are incompatible with efficient attention kernels such as FlashAttention, limiting their practical deployment for VLM acceleration. In this paper, we step away from attention dependencies and revisit visual token compression from an information-theoretic perspective, aiming to maximally preserve visual information without any attention involvement. We present ApET, an Approximation-Error guided Token compression framework. ApET first reconstructs the original visual tokens with a small set of basis tokens via linear approximation, then leverages the approximation error to identify and drop the least informative tokens. Extensive experiments across multiple VLMs and benchmarks demonstrate that ApET retains 95.2% of the original performance on image-understanding tasks and even attains 100.4% on video-understanding tasks, while compressing the token budgets by 88.9% and 87.5%, respectively. Thanks to its attention-free design, ApET seamlessly integrates with FlashAttention, enabling further inference acceleration and making VLM deployment more practical. Code is available at https://github.com/MaQianKun0/ApET.
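The reconstruct-then-score idea in the ApET abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function name `compress_tokens`, the uniform-stride choice of basis tokens, and the rule that tokens with the smallest reconstruction error are the ones dropped are all assumptions made here for illustration.

```python
import numpy as np

def compress_tokens(tokens, num_basis, keep):
    """Score tokens by linear-approximation error and keep the top `keep`.

    tokens: (n, d) array of visual token embeddings.
    Returns (sorted indices of kept tokens, per-token error).
    """
    n, _ = tokens.shape
    # Assumption: basis tokens are picked by uniform striding over the sequence.
    basis_idx = np.linspace(0, n - 1, num_basis).round().astype(int)
    basis = tokens[basis_idx]                          # (k, d)
    # Least squares: coeffs (k, n) such that basis.T @ coeffs approximates tokens.T
    coeffs, *_ = np.linalg.lstsq(basis.T, tokens.T, rcond=None)
    recon = (basis.T @ coeffs).T                       # (n, d)
    err = np.linalg.norm(tokens - recon, axis=1)       # per-token error
    # Assumption: a small error means the token is well explained by the
    # basis and therefore redundant, so the largest-error tokens are kept.
    kept = np.sort(np.argsort(err)[-keep:])
    return kept, err
```

Because the score depends only on the token embeddings, nothing in this sketch needs attention weights, which is the property the abstract credits for FlashAttention compatibility.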

Multiview Self-Representation Learning across Heterogeneous Views

Feb 04, 2026

Abstract: Features of the same sample generated by different pretrained models often exhibit inherently distinct feature distributions because of discrepancies in the model pretraining objectives or architectures. Learning invariant representations from large-scale unlabeled visual data with various pretrained models in a fully unsupervised transfer manner remains a significant challenge. In this paper, we propose a multiview self-representation learning (MSRL) method in which invariant representations are learned by exploiting the self-representation property of features across heterogeneous views. The features are derived from large-scale unlabeled visual data through transfer learning with various pretrained models and are referred to as heterogeneous multiview data. An individual linear model is stacked on top of its corresponding frozen pretrained backbone. We introduce an information-passing mechanism that relies on self-representation learning to support feature aggregation over the outputs of the linear model. Moreover, an assignment probability distribution consistency scheme is presented to guide multiview self-representation learning by exploiting complementary information across different views. Consequently, representation invariance across different linear models is enforced through this scheme. In addition, we provide a theoretical analysis of the information-passing mechanism, the assignment probability distribution consistency and the incremental views. Extensive experiments with multiple benchmark visual datasets demonstrate that the proposed MSRL method consistently outperforms several state-of-the-art approaches.

DELTA: Deliberative Multi-Agent Reasoning with Reinforcement Learning for Multimodal Psychological Counseling

Feb 04, 2026

Abstract: Psychological counseling is a fundamentally multimodal cognitive process in which clinicians integrate verbal content with visual and vocal cues to infer clients' mental states and respond empathically. However, most existing language-model-based counseling systems operate on text alone and rely on implicit mental state inference. We introduce DELTA, a deliberative multi-agent framework that models counseling as a structured reasoning process over multimodal signals, separating evidence grounding, mental state abstraction, and response generation. DELTA further incorporates reinforcement learning guided by a distribution-level Emotion Attunement Score to encourage emotionally attuned responses. Experiments on a multimodal counseling benchmark show that DELTA improves both counseling quality and emotion attunement across models. Ablation and qualitative analyses suggest that explicit multimodal reasoning and structured mental state representations play complementary roles in supporting empathic human-AI interaction.

Mixture-of-World Models: Scaling Multi-Task Reinforcement Learning with Modular Latent Dynamics

Feb 01, 2026

Abstract: A fundamental challenge in multi-task reinforcement learning (MTRL) is achieving sample efficiency in visual domains where tasks exhibit substantial heterogeneity in both observations and dynamics. Model-based reinforcement learning offers a promising path to improved sample efficiency through world models, but standard monolithic architectures struggle to capture diverse task dynamics, resulting in poor reconstruction and prediction accuracy. We introduce Mixture-of-World Models (MoW), a scalable architecture that combines modular variational autoencoders for task-adaptive visual compression, a hybrid Transformer-based dynamics model with task-conditioned experts and a shared backbone, and a gradient-based task clustering strategy for efficient parameter allocation. On the Atari 100k benchmark, a single MoW agent trained once on 26 Atari games achieves a mean human-normalized score of 110.4%, competitive with the score of 114.2% achieved by STORM, an ensemble of 26 task-specific models, while using 50% fewer parameters. On Meta-World, MoW achieves a 74.5% average success rate within 300 thousand environment steps, establishing a new state of the art. These results demonstrate that MoW provides a scalable and parameter-efficient foundation for generalist world models.

Tune-Your-Style: Intensity-tunable 3D Style Transfer with Gaussian Splatting

Jan 31, 2026

Abstract: 3D style transfer refers to the artistic stylization of 3D assets based on reference style images. Recently, 3DGS-based stylization methods have drawn considerable attention, primarily due to their markedly enhanced training and rendering speeds. However, a vital challenge for 3D style transfer is to strike a balance between the content and the patterns and colors of the style. Although the existing methods strive to achieve relatively balanced outcomes, the fixed-output paradigm struggles to adapt to the diverse content-style balance requirements from different users. In this work, we introduce a creative intensity-tunable 3D style transfer paradigm, dubbed Tune-Your-Style, which allows users to flexibly adjust the style intensity injected into the scene to match their desired content-style balance, thus enhancing the customizability of 3D style transfer. To achieve this goal, we first introduce Gaussian neurons to explicitly model the style intensity and parameterize a learnable style tuner to achieve intensity-tunable style injection. To facilitate the learning of tunable stylization, we further propose the tunable stylization guidance, which obtains multi-view consistent stylized views from diffusion models through cross-view style alignment, and then employs a two-stage optimization strategy to provide stable and efficient guidance by modulating the balance between full-style guidance from the stylized views and zero-style guidance from the initial rendering. Extensive experiments demonstrate that our method not only delivers visually appealing results, but also exhibits flexible customizability for 3D style transfer. Project page is available at https://zhao-yian.github.io/TuneStyle.

PCL-Reasoner-V1.5: Advancing Math Reasoning with Offline Reinforcement Learning

Jan 21, 2026

Abstract: We present PCL-Reasoner-V1.5, a 32-billion-parameter large language model (LLM) for mathematical reasoning. The model is built upon Qwen2.5-32B and refined via supervised fine-tuning (SFT) followed by reinforcement learning (RL). A central innovation is our proposed offline RL method, which provides superior training stability and efficiency over standard online RL methods such as GRPO. Our model achieves state-of-the-art performance among models post-trained on Qwen2.5-32B, attaining average accuracies of 90.9% on AIME 2024 and 85.6% on AIME 2025. Our work demonstrates offline RL as a stable and efficient paradigm for advancing reasoning in LLMs. All experiments were conducted on Huawei Ascend 910C NPUs.

WaveFormer: Frequency-Time Decoupled Vision Modeling with Wave Equation

Jan 13, 2026

Abstract: Vision modeling has advanced rapidly with Transformers, whose attention mechanisms capture visual dependencies but lack a principled account of how semantic information propagates spatially. We revisit this problem from a wave-based perspective: feature maps are treated as spatial signals whose evolution over an internal propagation time (aligned with network depth) is governed by an underdamped wave equation. In this formulation, spatial frequency, from low-frequency global layout to high-frequency edges and textures, is modeled explicitly, and its interaction with propagation time is controlled rather than implicitly fixed. We derive a closed-form, frequency-time decoupled solution and implement it as the Wave Propagation Operator (WPO), a lightweight module that models global interactions in O(N log N) time, far lower than attention. Building on WPO, we propose a family of WaveFormer models as drop-in replacements for standard ViTs and CNNs, achieving competitive accuracy across image classification, object detection, and semantic segmentation, while delivering up to 1.6x higher throughput and 30% fewer FLOPs than attention-based alternatives. Furthermore, our results demonstrate that wave propagation introduces a complementary modeling bias to heat-based methods, effectively capturing both global coherence and high-frequency details essential for rich visual semantics. Code is available at: https://github.com/ZishanShu/WaveFormer.
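To make the frequency-domain idea concrete, here is a small NumPy sketch of damped wave propagation computed with FFTs, which is where an O(N log N) cost would come from. It is not the WPO from the paper: the textbook equation form u_tt + 2*gamma*u_t = c^2 * laplacian(u), the zero initial velocity, the periodic boundary, and the names `wave_propagate`, `c`, and `gamma` are all assumptions chosen for illustration.

```python
import numpy as np

def wave_propagate(u0, t, c=1.0, gamma=0.1):
    """Evolve a 2D map under an assumed damped wave equation for time t.

    Each spatial frequency k evolves independently with the closed-form gain
        exp(-gamma*t) * (cos(w*t) + gamma*sin(w*t)/w),  w = sqrt(c^2|k|^2 - gamma^2),
    assuming zero initial velocity and periodic boundaries.
    """
    h, w = u0.shape
    ky = 2 * np.pi * np.fft.fftfreq(h)[:, None]
    kx = 2 * np.pi * np.fft.fftfreq(w)[None, :]
    k2 = kx**2 + ky**2
    # Complex sqrt also covers the few modes with c|k| < gamma (cos -> cosh).
    omega = np.sqrt((c**2 * k2 - gamma**2).astype(complex))
    omega = np.where(omega == 0, 1e-12, omega)   # avoid 0/0 at exact criticality
    gain = np.exp(-gamma * t) * (np.cos(omega * t) + gamma * np.sin(omega * t) / omega)
    # One forward FFT, one pointwise multiply, one inverse FFT.
    return np.fft.ifft2(np.fft.fft2(u0) * gain).real
```

In this sketch the time variable `t` and the spatial frequency enter only through the per-mode gain, so changing the propagation time does not require re-running any spatial operation other than the two FFTs.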

MANGO: Natural Multi-speaker 3D Talking Head Generation via 2D-Lifted Enhancement

Jan 05, 2026

Abstract: Current audio-driven 3D head generation methods mainly focus on single-speaker scenarios, lacking natural, bidirectional listen-and-speak interaction. Achieving seamless conversational behavior, where speaking and listening states transition fluidly, remains a key challenge. Existing 3D conversational avatar approaches rely on error-prone pseudo-3D labels that fail to capture fine-grained facial dynamics. To address these limitations, we introduce a novel two-stage framework, MANGO, which leverages pure image-level supervision through alternate training to mitigate the noise introduced by pseudo-3D labels, thereby achieving better alignment with real-world conversational behaviors. Specifically, in the first stage, a diffusion-based transformer with a dual-audio interaction module models natural 3D motion from multi-speaker audio. In the second stage, we use a fast 3D Gaussian Renderer to generate high-fidelity images and provide 2D-level photometric supervision for the 3D motions through alternate training. Additionally, we introduce MANGO-Dialog, a high-quality dataset with over 50 hours of aligned 2D-3D conversational data across 500+ identities. Extensive experiments demonstrate that our method achieves exceptional accuracy and realism in modeling two-person 3D dialogue motion, significantly advancing the fidelity and controllability of audio-driven talking heads.

A Language-Agnostic Hierarchical LoRA-MoE Architecture for CTC-based Multilingual ASR

Jan 02, 2026

Abstract: Large-scale multilingual ASR (mASR) models such as Whisper achieve strong performance but incur high computational and latency costs, limiting their deployment on resource-constrained edge devices. In this study, we propose a lightweight and language-agnostic multilingual ASR system based on a CTC architecture with domain adaptation. Specifically, we introduce a Language-agnostic Hierarchical LoRA-MoE (HLoRA) framework integrated into an mHuBERT-CTC model, enabling end-to-end decoding via LID-posterior-driven LoRA routing. The hierarchical design consists of a multilingual shared LoRA for learning language-invariant acoustic representations and language-specific LoRA experts for modeling language-dependent characteristics. The proposed routing mechanism removes the need for prior language identity information or explicit language labels during inference, achieving true language-agnostic decoding. Experiments on MSR-86K and the MLC-SLM 2025 Challenge datasets demonstrate that HLoRA achieves competitive performance with state-of-the-art two-stage inference methods using only single-pass decoding, significantly improving decoding efficiency for low-resource mASR applications.
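One way to picture LID-posterior-driven routing is as a soft mixture of low-rank updates weighted by the language-identification posterior. The NumPy sketch below is a hedged illustration, not the paper's architecture: combining the shared LoRA and all experts in a single layer, the soft (rather than top-1) weighting, and the names `lora_delta` and `hlora_layer` are assumptions.

```python
import numpy as np

def lora_delta(x, a, b):
    """Low-rank update b @ a @ x, with a: (r, d_in) and b: (d_out, r)."""
    return b @ (a @ x)

def hlora_layer(x, w, shared, experts, lid_posterior):
    """Frozen weight + shared LoRA + posterior-weighted expert LoRAs.

    x: (d_in,) input frame; w: (d_out, d_in) frozen weight.
    shared: (a, b) pair for the language-shared LoRA.
    experts: list of (a, b) pairs, one per language.
    lid_posterior: per-language probabilities (assumed to come from an LID head).
    """
    out = w @ x + lora_delta(x, *shared)
    # Assumption: experts are mixed softly by the LID posterior, so no
    # language label has to be supplied at inference time.
    for p, (a, b) in zip(lid_posterior, experts):
        out = out + p * lora_delta(x, a, b)
    return out
```

With a one-hot posterior this reduces to applying a single language expert; with a spread-out posterior the output interpolates between experts in one forward pass.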

Learning-Enabled Elastic Network Topology for Distributed ISAC Service Provisioning

Dec 23, 2025

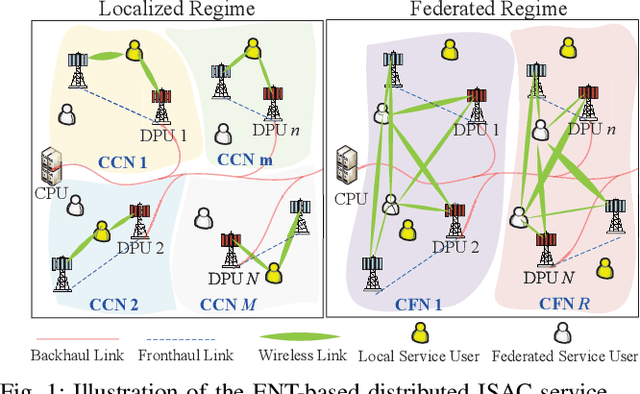

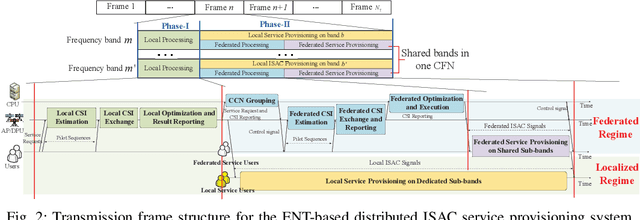

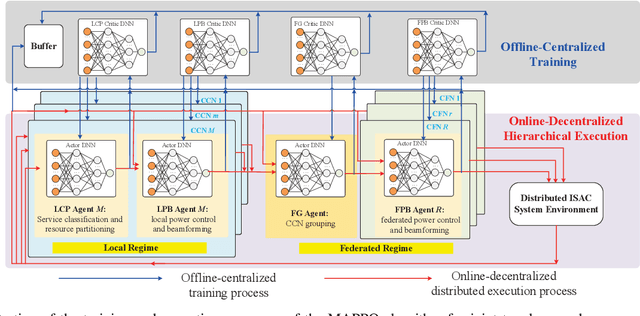

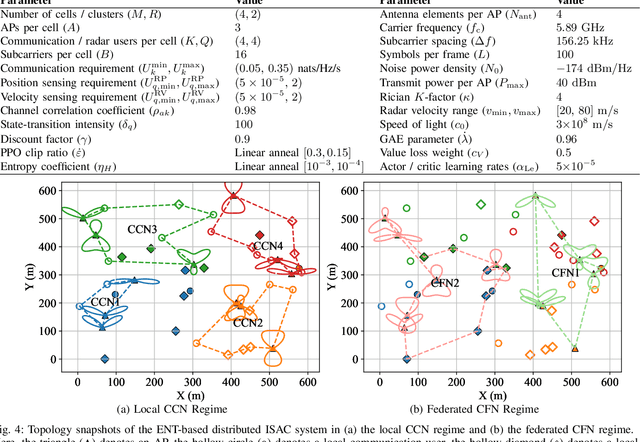

Abstract:Conventional mobile networks, including both localized cell-centric and cooperative cell-free networks (CCN/CFN), are built upon rigid network topologies. However, neither architecture is adequate to flexibly support distributed integrated sensing and communication (ISAC) services, due to the increasing difficulty of aligning spatiotemporally distributed heterogeneous service demands with available radio resources. In this paper, we propose an elastic network topology (ENT) for distributed ISAC service provisioning, where multiple co-existing localized CCNs can be dynamically aggregated into CFNs with expanded boundaries for federated network operation. This topology elastically orchestrates localized CCN and federated CFN boundaries to balance signaling overhead and distributed resource utilization, thereby enabling efficient ISAC service provisioning. A two-phase operation protocol is then developed. In Phase I, each CCN autonomously classifies ISAC services as either local or federated and partitions its resources into dedicated and shared segments. In Phase II, each CCN employs its dedicated resources for local ISAC services, while the aggregated CFN consolidates shared resources from its constituent CCNs to cooperatively deliver federated services. Furthermore, we design a utility-to-signaling ratio (USR) to quantify the tradeoff between sensing/communication utility and signaling overhead. Consequently, a USR maximization problem is formulated by jointly optimizing the network topology (i.e., service classification and CCN aggregation) and the allocation of dedicated and shared resources. However, this problem is challenging due to its distributed optimization nature and the absence of complete channel state information. To address this problem efficiently, we propose a multi-agent deep reinforcement learning (MADRL) framework with centralized training and decentralized execution.
