Yan Han

Beyond Believability: Accurate Human Behavior Simulation with Fine-Tuned LLMs

Mar 27, 2025

Abstract:Recent research shows that LLMs can simulate "believable" human behaviors to power LLM agents via prompt-only methods. In this work, we focus on evaluating and improving LLMs' objective "accuracy" rather than their subjective "believability" in the web action generation task, leveraging a large-scale, real-world dataset of human actions collected from online shopping. We present the first comprehensive quantitative evaluation of state-of-the-art LLMs (e.g., DeepSeek-R1, Llama, and Claude) on the task of web action generation. Our results show that fine-tuning LLMs on real-world behavioral data substantially improves their ability to generate actions compared to prompt-only methods. Furthermore, incorporating synthesized reasoning traces into model training leads to additional performance gains, demonstrating the value of explicit rationale in behavior modeling. This work establishes a new benchmark for evaluating LLMs in behavior simulation and offers actionable insights into how real-world action data and reasoning augmentation can enhance the fidelity of LLM agents.

Learning from Noisy Labels with Contrastive Co-Transformer

Mar 04, 2025

Abstract:Deep learning with noisy labels is an interesting challenge in weakly supervised learning. Despite their significant learning capacity, CNNs tend to overfit in the presence of samples with noisy labels. To alleviate this issue, we use the well-known Co-Training framework as the basis for our work. In this paper, we introduce a Contrastive Co-Transformer framework, which is simple and fast, yet improves performance by a large margin compared to state-of-the-art approaches. We argue that transformers are robust when dealing with label noise. Our Contrastive Co-Transformer approach is able to utilize all samples in the dataset, irrespective of whether they are clean or noisy. The transformers are trained with a combination of contrastive loss and classification loss. Extensive experimental results on corrupted data from six standard benchmark datasets, including Clothing1M, demonstrate that our Contrastive Co-Transformer is superior to existing state-of-the-art methods.

Stepwise Perplexity-Guided Refinement for Efficient Chain-of-Thought Reasoning in Large Language Models

Feb 18, 2025

Abstract:Chain-of-Thought (CoT) reasoning, which breaks down complex tasks into intermediate reasoning steps, has significantly enhanced the performance of large language models (LLMs) on challenging tasks. However, the detailed reasoning process in CoT often incurs long generation times and high computational costs, partly due to the inclusion of unnecessary steps. To address this, we propose a method to identify critical reasoning steps using perplexity as a measure of their importance: a step is deemed critical if its removal causes a significant increase in perplexity. Our method enables models to focus solely on generating these critical steps. This can be achieved through two approaches: refining demonstration examples in few-shot CoT or fine-tuning the model using selected examples that include only critical steps. Comprehensive experiments validate the effectiveness of our method, which achieves a better balance between the reasoning accuracy and efficiency of CoT.
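The selection criterion described in this abstract (a step is critical if removing it noticeably raises perplexity) can be illustrated with a minimal Python sketch. This is not the authors' implementation: the function names, the relative threshold `rel_threshold`, and the `toy_score` stand-in for a real language-model scoring call are all assumptions made for illustration.

```python
import math
from typing import Callable, List, Sequence


def perplexity(token_logprobs: Sequence[float]) -> float:
    """Perplexity is the exponential of the negative mean token log-probability."""
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def critical_steps(
    steps: List[str],
    score: Callable[[List[str]], float],
    rel_threshold: float = 0.1,  # assumed cutoff; the abstract only says "significant"
) -> List[str]:
    """Keep the steps whose removal raises perplexity by more than rel_threshold."""
    base = score(steps)
    kept = []
    for i, step in enumerate(steps):
        ablated = steps[:i] + steps[i + 1:]  # the chain with step i removed
        if score(ablated) > base * (1 + rel_threshold):
            kept.append(step)
    return kept


# Hypothetical stand-in for an LLM call that would return the perplexity of the
# final answer given a list of reasoning steps.
ESSENTIAL = {"12 * 3 = 36", "36 + 4 = 40"}


def toy_score(steps: List[str]) -> float:
    missing = sum(1 for s in ESSENTIAL if s not in steps)
    return 2.0 + 3.0 * missing


chain = [
    "Restate the question.",
    "12 * 3 = 36",
    "36 + 4 = 40",
    "Double-check the wording.",
]
selected = critical_steps(chain, toy_score)
```

In practice, `score` would wrap a model call that returns token log-probabilities, which `perplexity` converts into a single number; the retained steps could then serve as refined few-shot demonstrations or fine-tuning targets.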

Extracting and Understanding the Superficial Knowledge in Alignment

Feb 07, 2025

Abstract:Alignment of large language models (LLMs) with human values and preferences, often achieved through fine-tuning based on human feedback, is essential for ensuring safe and responsible AI behaviors. However, the process typically requires substantial data and computational resources. Recent studies have revealed that alignment might be attainable at lower costs through simpler methods, such as in-context learning. This leads to the question: Is alignment predominantly superficial? In this paper, we delve into this question and provide a quantitative analysis. We formalize the concept of superficial knowledge, defining it as knowledge that can be acquired through easy token restyling, without affecting the model's ability to capture underlying causal relationships between tokens. We propose a method to extract and isolate superficial knowledge from aligned models, focusing on the shallow modifications to the final token selection process. By comparing models augmented only with superficial knowledge to fully aligned models, we quantify the superficial portion of alignment. Our findings reveal that while superficial knowledge constitutes a significant portion of alignment, particularly in safety and detoxification tasks, it is not the whole story. Tasks requiring reasoning and contextual understanding still rely on deeper knowledge. Additionally, we demonstrate two practical advantages of isolated superficial knowledge: (1) it can be transferred between models, enabling efficient offsite alignment of larger models using superficial knowledge extracted from smaller models, and (2) it is recoverable, allowing for the restoration of alignment in compromised models without sacrificing performance.

A Survey of Calibration Process for Black-Box LLMs

Dec 17, 2024

Abstract:Large Language Models (LLMs) demonstrate remarkable performance in semantic understanding and generation, yet accurately assessing their output reliability remains a significant challenge. While numerous studies have explored calibration techniques, they primarily focus on White-Box LLMs with accessible parameters. Black-Box LLMs, despite their superior performance, pose heightened requirements for calibration techniques due to their API-only interaction constraints. Although recent research has achieved breakthroughs in black-box LLM calibration, a systematic survey of these methodologies is still lacking. To bridge this gap, we present the first comprehensive survey on calibration techniques for black-box LLMs. We first define the Calibration Process of LLMs as comprising two interrelated key steps: Confidence Estimation and Calibration. Second, we conduct a systematic review of applicable methods within black-box settings, and provide insights on the unique challenges and connections in implementing these key steps. Furthermore, we explore typical applications of the Calibration Process in black-box LLMs and outline promising future research directions, providing new perspectives for enhancing reliability and human-machine alignment. GitHub: https://github.com/LiangruXie/Calibration-Process-in-Black-Box-LLMs
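As a rough illustration of how confidence estimation and calibration can be approached with API-only access, the Python sketch below estimates confidence from agreement among sampled answers and then measures miscalibration with expected calibration error (ECE). The abstract does not name these particular techniques; they are common choices assumed here for illustration, and the function names and sample data are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Sequence, Tuple


def agreement_confidence(samples: Sequence[str]) -> Tuple[str, float]:
    """Return the most frequent sampled answer and the fraction of samples agreeing with it."""
    answer, count = Counter(samples).most_common(1)[0]
    return answer, count / len(samples)


def expected_calibration_error(
    confidences: Sequence[float],
    correct: Sequence[bool],
    n_bins: int = 10,
) -> float:
    """Weighted average gap between mean confidence and accuracy over equal-width bins."""
    bins = defaultdict(list)
    for conf, ok in zip(confidences, correct):
        index = min(int(conf * n_bins), n_bins - 1)  # a confidence of 1.0 goes in the last bin
        bins[index].append((conf, ok))
    total = len(confidences)
    ece = 0.0
    for members in bins.values():
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        ece += len(members) / total * abs(avg_conf - accuracy)
    return ece


# Hypothetical answers sampled from a black-box model for one question.
answer, confidence = agreement_confidence(["A", "A", "B", "A"])

# Hypothetical confidences and correctness labels over four questions.
ece = expected_calibration_error([0.9, 0.9, 0.6, 0.6], [True, True, True, False])
```

Only sampled text outputs are needed for the first function, which is why agreement-based estimates suit API-only models; a lower ECE indicates that stated confidence tracks observed accuracy more closely.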

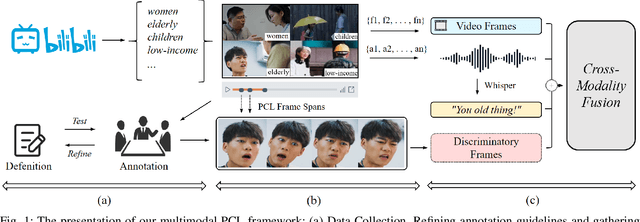

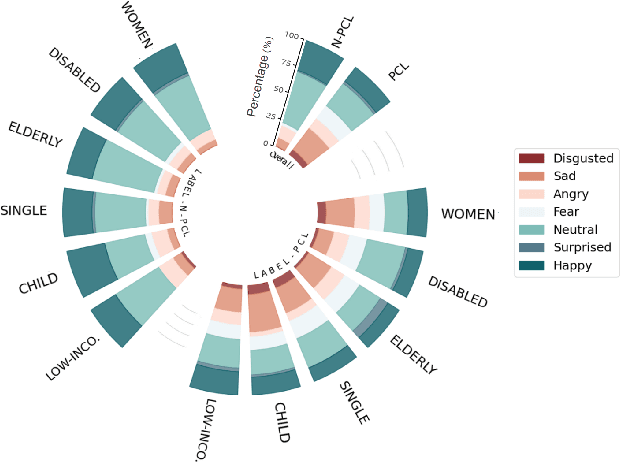

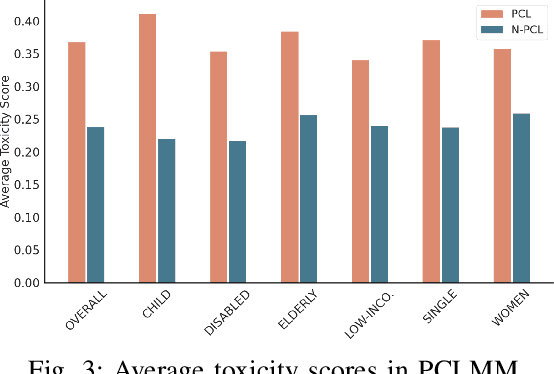

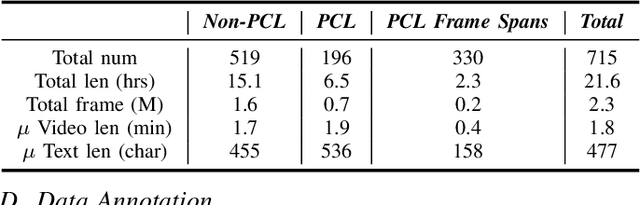

Towards Patronizing and Condescending Language in Chinese Videos: A Multimodal Dataset and Detector

Sep 10, 2024

Abstract:Patronizing and Condescending Language (PCL) is a form of discriminatory toxic speech targeting vulnerable groups, threatening both online and offline safety. While toxic speech research has mainly focused on overt toxicity, such as hate speech, microaggressions in the form of PCL remain underexplored. Additionally, dominant groups' discriminatory facial expressions and attitudes toward vulnerable communities can be more impactful than verbal cues, yet these frame features are often overlooked. In this paper, we introduce the PCLMM dataset, the first Chinese multimodal dataset for PCL, consisting of 715 annotated videos from Bilibili, with high-quality PCL facial frame spans. We also propose the MultiPCL detector, featuring a facial expression detection module for PCL recognition, demonstrating the effectiveness of modality complementarity in this challenging task. Our work makes an important contribution to advancing microaggression detection within the domain of toxic speech.

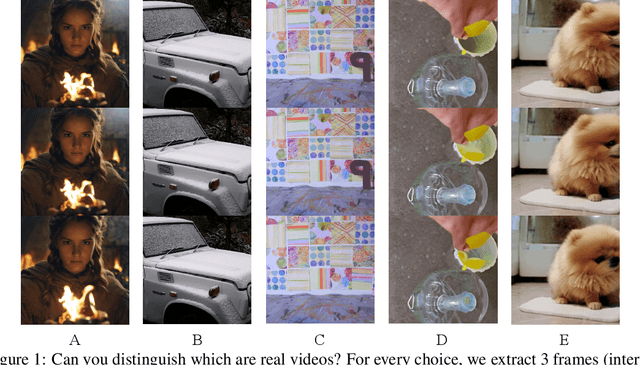

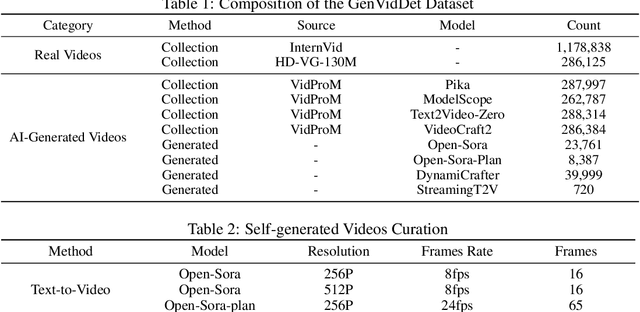

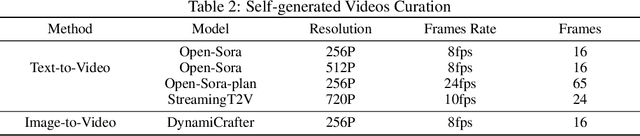

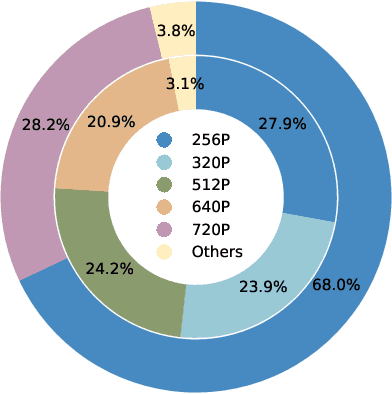

Distinguish Any Fake Videos: Unleashing the Power of Large-scale Data and Motion Features

May 24, 2024

Abstract:The development of AI-Generated Content (AIGC) has empowered the creation of remarkably realistic AI-generated videos, such as those involving Sora. However, the widespread adoption of these models raises concerns regarding potential misuse, including face video scams and copyright disputes. Addressing these concerns requires the development of robust tools capable of accurately determining video authenticity. The main challenges lie in the dataset and neural classifier for training. Current datasets lack a varied and comprehensive repository of real and generated content for effective discrimination. In this paper, we first introduce an extensive video dataset designed specifically for AI-Generated Video Detection (GenVidDet). It includes over 2.66M instances of both real and generated videos, varying in categories, frames per second, resolutions, and lengths. The comprehensiveness of GenVidDet enables the training of a generalizable video detector. We also present the Dual-Branch 3D Transformer (DuB3D), an innovative and effective method for distinguishing between real and generated videos, enhanced by incorporating motion information alongside visual appearance. DuB3D utilizes a dual-branch architecture that adaptively leverages and fuses raw spatio-temporal data and optical flow. We systematically explore the critical factors affecting detection performance, achieving the optimal configuration for DuB3D. Trained on GenVidDet, DuB3D distinguishes between real and generated video content with 96.77% accuracy and shows strong generalization capability even for unseen types.

Geometric-Aware Low-Light Image and Video Enhancement via Depth Guidance

Dec 26, 2023

Abstract:Low-Light Enhancement (LLE) is aimed at improving the quality of photos/videos captured under low-light conditions. It is worth noting that most existing LLE methods do not take advantage of geometric modeling. We believe that incorporating geometric information can enhance LLE performance, as it provides insights into the physical structure of the scene that influences illumination conditions. To address this, we propose a Geometry-Guided Low-Light Enhancement Refine Framework (GG-LLERF) designed to assist low-light enhancement models in learning improved features for LLE by integrating geometric priors into the feature representation space. In this paper, we employ depth priors as the geometric representation. Our approach focuses on the integration of depth priors into various LLE frameworks using a unified methodology. This methodology comprises two key novel modules. First, a depth-aware feature extraction module is designed to inject depth priors into the image representation. Then, a Hierarchical Depth-Guided Feature Fusion Module (HDGFFM) is formulated with a cross-domain attention mechanism, which combines depth-aware features with the original image features within the LLE model. We conducted extensive experiments on public low-light image and video enhancement benchmarks. The results illustrate that our designed framework significantly enhances existing LLE methods.

Video Frame Interpolation with Region-Distinguishable Priors from SAM

Dec 26, 2023

Abstract:In existing Video Frame Interpolation (VFI) approaches, the motion estimation between neighboring frames plays a crucial role. However, the estimation accuracy in existing methods remains a challenge, primarily due to the inherent ambiguity in identifying corresponding areas in adjacent frames for interpolation. Therefore, enhancing accuracy by distinguishing different regions before motion estimation is of utmost importance. In this paper, we introduce a novel solution that uses open-world segmentation models, e.g., SAM (Segment Anything Model), to derive Region-Distinguishable Priors (RDPs) in different frames. These RDPs are represented as spatially varying Gaussian mixtures, distinguishing an arbitrary number of areas with a unified modality. RDPs can be integrated into existing motion-based VFI methods to enhance features for motion estimation, facilitated by our plug-and-play Hierarchical Region-aware Feature Fusion Module (HRFFM). HRFFM incorporates RDP into various hierarchical stages of VFI's encoder, using RDP-guided Feature Normalization (RDPFN) in a residual learning manner. With HRFFM and RDP, the features within VFI's encoder exhibit similar representations for matched regions in neighboring frames, thus improving the synthesis of intermediate frames. Extensive experiments demonstrate that HRFFM consistently enhances VFI performance across various scenes.

Large-scale data extraction from the UNOS organ donor documents

Aug 30, 2023

Abstract:The scope of our study is all UNOS data on U.S. organ donors since 2008. In the past, the data could not be analyzed at large scale because it was captured in PDF documents known as "Attachments", whereby every donor is represented by dozens of PDF documents in heterogeneous formats. To make the data analyzable, one needs to convert the content inside these PDFs to an analyzable data format, such as a standard SQL database. In this paper, we focus on the 2022 UNOS data, comprising approximately 400,000 PDF documents spanning millions of pages. The totality of UNOS data covers 15 years (2008-2022), and our results will be quickly extended to the entire dataset. Our method captures a portion of the data in DCD flowsheets, kidney perfusion data, and data captured during the patient's hospital stay (e.g., vital signs, ventilator settings, etc.). The current paper assumes that the reader is familiar with the content of the UNOS data. The overview of the types of data and the challenges they present is the subject of another paper. Here we focus on demonstrating that the goal of building a comprehensive, analyzable database from UNOS documents is an attainable task, and we provide an overview of our methodology. Even in this preliminary phase, the project resulted in datasets far larger than those previously available.
