Hongbo Wang

Propagating Similarity, Mitigating Uncertainty: Similarity Propagation-enhanced Uncertainty for Multimodal Recommendation

Jan 27, 2026

Abstract:Multimodal Recommendation (MMR) systems are crucial for modern platforms but are often hampered by inherent noise and uncertainty in modal features, such as blurry images, diverse visual appearances, or ambiguous text. Existing methods often overlook this modality-specific uncertainty, leading to ineffective feature fusion. Furthermore, they fail to leverage rich similarity patterns among users and items to refine representations and their corresponding uncertainty estimates. To address these challenges, we propose a novel framework, Similarity Propagation-enhanced Uncertainty for Multimodal Recommendation (SPUMR). SPUMR explicitly models and mitigates uncertainty by first constructing the Modality Similarity Graph and the Collaborative Similarity Graph to refine representations from both content and behavioral perspectives. The Uncertainty-aware Preference Aggregation module then adaptively fuses the refined multimodal features, assigning greater weight to more reliable modalities. Extensive experiments on three benchmark datasets demonstrate that SPUMR achieves significant improvements over existing leading methods.

SGM: Safety Glasses for Multimodal Large Language Models via Neuron-Level Detoxification

Dec 17, 2025

Abstract:Disclaimer: Samples in this paper may be harmful and cause discomfort. Multimodal large language models (MLLMs) enable multimodal generation but inherit toxic, biased, and NSFW signals from weakly curated pretraining corpora, causing safety risks, especially under adversarial triggers that late, opaque training-free detoxification methods struggle to handle. We propose SGM, a white-box neuron-level multimodal intervention that acts like safety glasses for toxic neurons: it selectively recalibrates a small set of toxic expert neurons via expertise-weighted soft suppression, neutralizing harmful cross-modal activations without any parameter updates. We establish MM-TOXIC-QA, a multimodal toxicity evaluation framework, and compare SGM with existing detoxification techniques. Experiments on open-source MLLMs show that SGM mitigates toxicity in standard and adversarial conditions, cutting harmful rates from 48.2% to 2.5% while preserving fluency and multimodal reasoning. SGM is extensible, and its combined defenses, denoted as SGM*, integrate with existing detoxification methods for stronger safety performance, providing an interpretable, low-cost solution for toxicity-controlled multimodal generation.

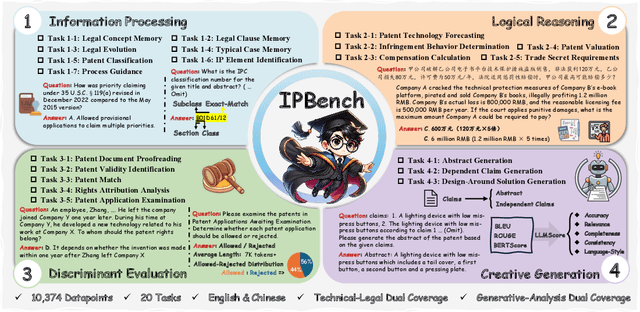

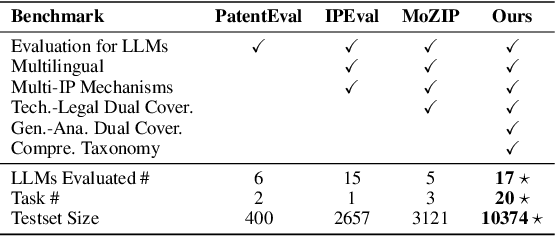

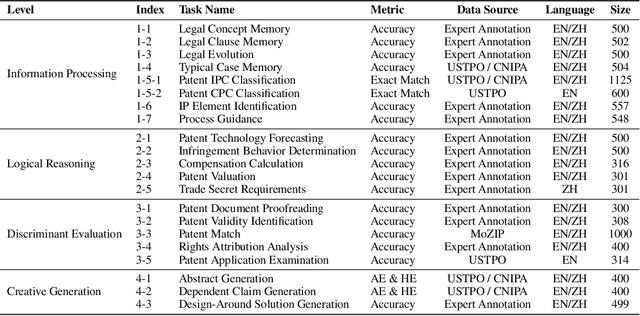

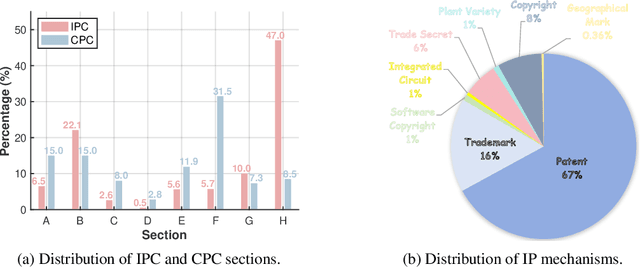

IPBench: Benchmarking the Knowledge of Large Language Models in Intellectual Property

Apr 22, 2025

Abstract:Intellectual Property (IP) is a unique domain that integrates technical and legal knowledge, making it inherently complex and knowledge-intensive. As large language models (LLMs) continue to advance, they show great potential for processing IP tasks, enabling more efficient analysis, understanding, and generation of IP-related content. However, existing datasets and benchmarks either focus narrowly on patents or cover limited aspects of the IP field, lacking alignment with real-world scenarios. To bridge this gap, we introduce the first comprehensive IP task taxonomy and a large, diverse bilingual benchmark, IPBench, covering 8 IP mechanisms and 20 tasks. This benchmark is designed to evaluate LLMs in real-world intellectual property applications, encompassing both understanding and generation. We benchmark 16 LLMs, ranging from general-purpose to domain-specific models, and find that even the best-performing model achieves only 75.8% accuracy, revealing substantial room for improvement. Notably, open-source IP and law-oriented models lag behind closed-source general-purpose models. We publicly release all data and code of IPBench and will continue to update it with additional IP-related tasks to better reflect real-world challenges in the intellectual property domain.

Task Adaptive Feature Distribution Based Network for Few-shot Fine-grained Target Classification

Oct 13, 2024

Abstract:Metric-based few-shot fine-grained classification has shown promise due to its simplicity and efficiency. However, existing methods often overlook task-level special cases and struggle with accurate category description and irrelevant sample information. To tackle these, we propose TAFD-Net: a task adaptive feature distribution network. It features a task-adaptive component for embedding to capture task-level nuances, an asymmetric metric for calculating feature distribution similarities between query samples and support categories, and a contrastive measure strategy to boost performance. Extensive experiments have been conducted on three datasets and the experimental results show that our proposed algorithm outperforms recent incremental learning algorithms.

Towards Comprehensive Detection of Chinese Harmful Memes

Oct 03, 2024

Abstract:This paper has been accepted in the NeurIPS 2024 D & B Track. Harmful memes have proliferated on the Chinese Internet, while research on detecting Chinese harmful memes significantly lags behind due to the absence of reliable datasets and effective detectors. To this end, we focus on the comprehensive detection of Chinese harmful memes. We construct ToxiCN MM, the first Chinese harmful meme dataset, which consists of 12,000 samples with fine-grained annotations for various meme types. Additionally, we propose a baseline detector, Multimodal Knowledge Enhancement (MKE), incorporating contextual information of meme content generated by the LLM to enhance the understanding of Chinese memes. During the evaluation phase, we conduct extensive quantitative experiments and qualitative analyses on multiple baselines, including LLMs and our MKE. The experimental results indicate that detecting Chinese harmful memes is challenging for existing models while demonstrating the effectiveness of MKE. The resources for this paper are available at https://github.com/DUT-lujunyu/ToxiCN_MM.

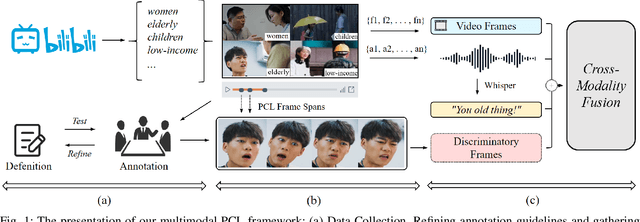

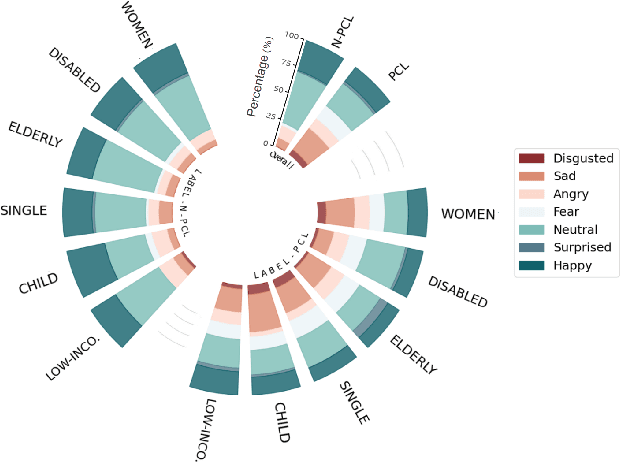

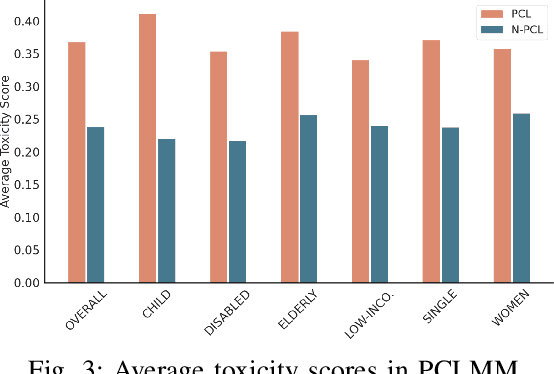

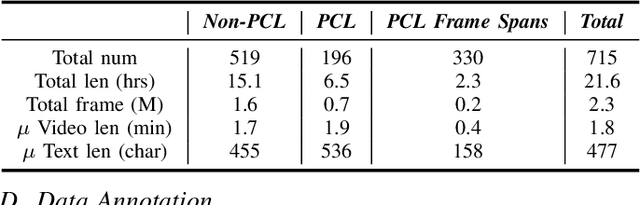

Towards Patronizing and Condescending Language in Chinese Videos: A Multimodal Dataset and Detector

Sep 10, 2024

Abstract:Patronizing and Condescending Language (PCL) is a form of discriminatory toxic speech targeting vulnerable groups, threatening both online and offline safety. While toxic speech research has mainly focused on overt toxicity, such as hate speech, microaggressions in the form of PCL remain underexplored. Additionally, dominant groups' discriminatory facial expressions and attitudes toward vulnerable communities can be more impactful than verbal cues, yet these frame features are often overlooked. In this paper, we introduce the PCLMM dataset, the first Chinese multimodal dataset for PCL, consisting of 715 annotated videos from Bilibili, with high-quality PCL facial frame spans. We also propose the MultiPCL detector, featuring a facial expression detection module for PCL recognition, demonstrating the effectiveness of modality complementarity in this challenging task. Our work makes an important contribution to advancing microaggression detection within the domain of toxic speech.

Rethinking Mutual Information for Language Conditioned Skill Discovery on Imitation Learning

Feb 27, 2024

Abstract:Language-conditioned robot behavior plays a vital role in executing complex tasks by associating human commands or instructions with perception and actions. The ability to compose long-horizon tasks based on unconstrained language instructions necessitates the acquisition of a diverse set of general-purpose skills. However, acquiring inherent primitive skills in a coupled and long-horizon environment without external rewards or human supervision presents significant challenges. In this paper, we evaluate the relationship between skills and language instructions from a mathematical perspective, employing two forms of mutual information within the framework of language-conditioned policy learning. To maximize the mutual information between language and skills in an unsupervised manner, we propose an end-to-end imitation learning approach known as Language Conditioned Skill Discovery (LCSD). Specifically, we utilize vector quantization to learn discrete latent skills and leverage skill sequences of trajectories to reconstruct high-level semantic instructions. Through extensive experiments on language-conditioned robotic navigation and manipulation tasks, encompassing BabyAI, LORel, and CALVIN, we demonstrate the superiority of our method over prior works. Our approach exhibits enhanced generalization capabilities towards unseen tasks, improved skill interpretability, and notably higher rates of task completion success.
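The vector quantization step mentioned in this abstract can be illustrated generically. The sketch below is not the LCSD implementation; it only shows the standard nearest-codebook lookup that maps a continuous latent to a discrete code. The codebook, the latents, and the function name `quantize` are hypothetical and chosen purely for illustration.

```python
from math import dist

def quantize(vector, codebook):
    """Return (index, code) of the codebook entry nearest to `vector` (Euclidean)."""
    index = min(range(len(codebook)), key=lambda i: dist(vector, codebook[i]))
    return index, codebook[index]

# Hypothetical 2-D codebook with three discrete "skill" codes (illustrative values).
codebook = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]

# Hypothetical continuous latents, one per trajectory segment.
latents = [(0.9, 0.1), (0.1, 0.8), (0.2, 0.1)]

# Each latent is replaced by the index of its nearest code, giving a skill sequence.
skill_ids = [quantize(z, codebook)[0] for z in latents]
print(skill_ids)
```

In a learned model the codebook entries would be trained jointly with the encoder; here they are fixed so the lookup itself is easy to follow.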

Enhancing Cloud-Based Large Language Model Processing with Elasticsearch and Transformer Models

Feb 24, 2024

Abstract:Large Language Models (LLMs) are a class of generative AI models built using the Transformer network, capable of leveraging vast datasets to identify, summarize, translate, predict, and generate language. LLMs promise to revolutionize society, yet training these foundational models poses immense challenges. Semantic vector search within large language models is a potent technique that can significantly enhance search result accuracy and relevance. Unlike traditional keyword-based search methods, semantic search utilizes the meaning and context of words to grasp the intent behind queries and deliver more precise outcomes. Elasticsearch emerges as one of the most popular tools for implementing semantic search: it is an exceptionally scalable and robust search engine designed for indexing and searching extensive datasets. In this article, we delve into the fundamentals of semantic search and explore how to harness Elasticsearch and Transformer models to bolster large language model processing paradigms. We gain a comprehensive understanding of semantic search principles and acquire practical skills for implementing semantic search in real-world model application scenarios.
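The contrast between keyword search and semantic search described in this abstract can be sketched as ranking documents by the cosine similarity of embedding vectors. This is a minimal illustration, not the article's code: the three-dimensional vectors stand in for Transformer embeddings, and the document IDs and the helper names `cosine` and `search` are hypothetical. In an Elasticsearch deployment such vectors would typically be stored in a `dense_vector` field rather than a Python dictionary.

```python
from math import sqrt

def cosine(a, b):
    """Cosine similarity of two equal-length vectors; 0.0 if either has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def search(query_vec, index, top_k=2):
    """Return the IDs of the `top_k` documents most similar to the query vector."""
    scored = [(cosine(query_vec, vec), doc_id) for doc_id, vec in index.items()]
    return [doc_id for _, doc_id in sorted(scored, reverse=True)[:top_k]]

# Hypothetical toy embeddings; a real system would obtain them from a Transformer encoder.
index = {
    "doc_cats": (0.9, 0.1, 0.0),
    "doc_dogs": (0.8, 0.3, 0.1),
    "doc_tax": (0.0, 0.1, 0.9),
}

query = (1.0, 0.2, 0.0)  # hypothetical embedding of a pet-related query
results = search(query, index)
print(results)
```

Documents are ranked by how close their vectors point to the query vector, so a document can match without sharing any literal keyword with the query.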

Enhanced User Interaction in Operating Systems through Machine Learning Language Models

Feb 24, 2024

Abstract:As large language models show human-like logical reasoning and understanding abilities, a pressing question of both research and economic value is whether agents based on such models can simulate the interaction behavior of real users, and thereby support a reliable virtual A/B testing environment for recommendation research. The combination of interaction design and machine learning can provide a more efficient and personalized user experience for products and services. First, this personalized service can meet the specific needs of users and improve user satisfaction and loyalty. Second, the interactive system can understand the user's views and needs for the product by providing a good user interface and interactive experience, and then use machine learning algorithms to improve and optimize the product. This iterative optimization process can continuously improve the quality and performance of the product to meet the changing needs of users. At the same time, designers need to consider how these algorithms and tools can be combined with interactive systems to provide a good user experience. This paper explores the potential applications of large language models, machine learning and interaction design for user interaction in recommendation systems and operating systems. By integrating these technologies, more intelligent and personalized services can be provided to meet user needs and promote continuous improvement and optimization of products. This is of great value for both recommendation research and user experience applications.

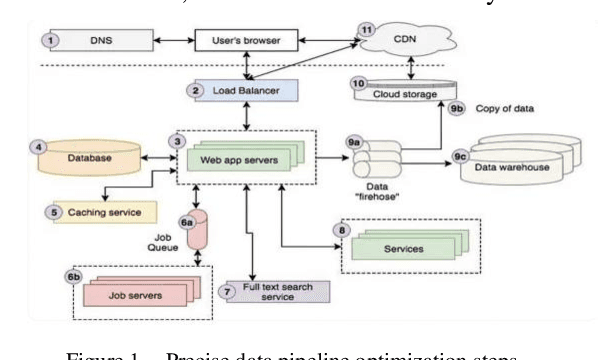

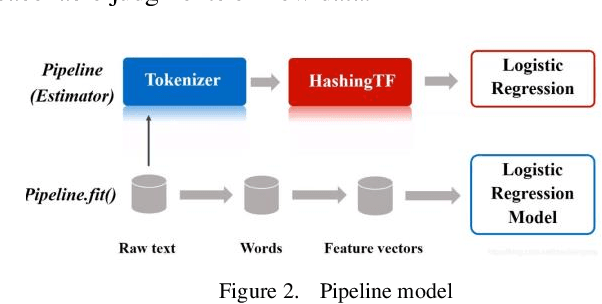

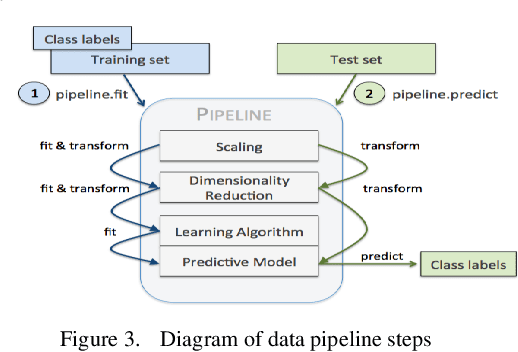

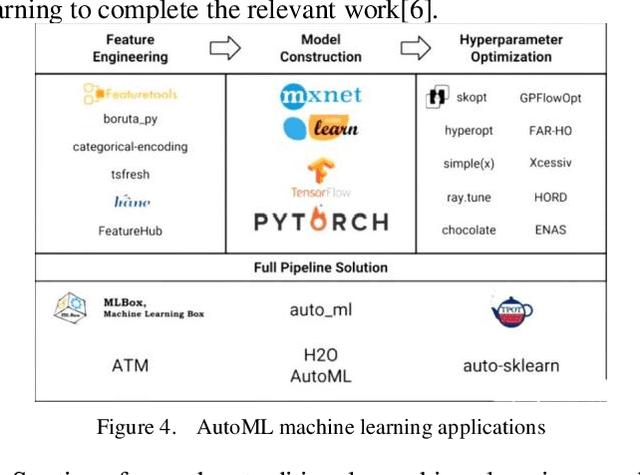

Data Pipeline Training: Integrating AutoML to Optimize the Data Flow of Machine Learning Models

Feb 20, 2024

Abstract:Data pipelines play an indispensable role in tasks such as machine learning modeling and the development of data products. With the increasing diversification and complexity of data sources, as well as the rapid growth of data volumes, building an efficient data pipeline has become crucial for improving work efficiency and solving complex problems. This paper focuses on exploring how to optimize data flow through automated machine learning methods by integrating AutoML with the data pipeline. We discuss how to leverage AutoML technology to enhance the intelligence of the data pipeline, thereby achieving better results in machine learning tasks. By delving into the automation and optimization of data flows, we uncover key strategies for constructing efficient data pipelines that can adapt to the ever-changing data landscape. This not only accelerates the modeling process but also provides innovative solutions to complex problems, enabling more significant outcomes in increasingly intricate data domains.

Keywords: Data Pipeline Training; AutoML; Data environment; Machine learning
