Xinyue Liu

Fence off Anomaly Interference: Cross-Domain Distillation for Fully Unsupervised Anomaly Detection

Aug 25, 2025

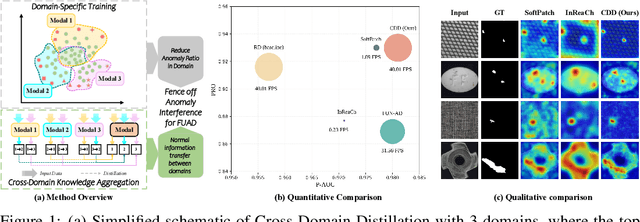

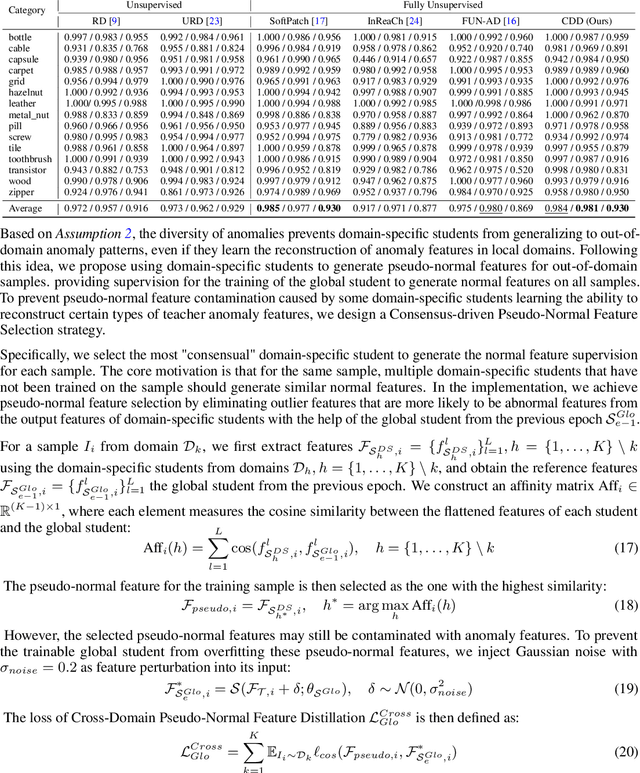

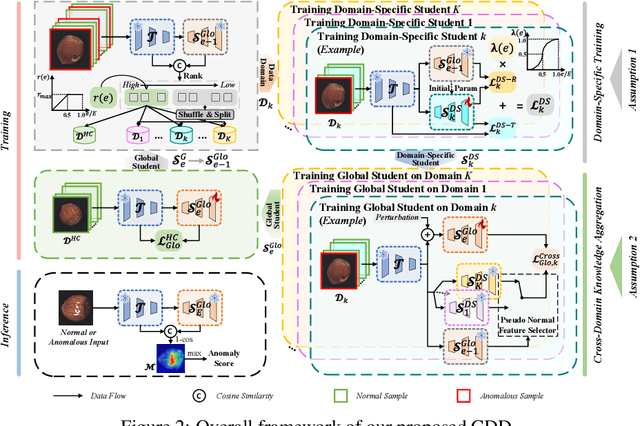

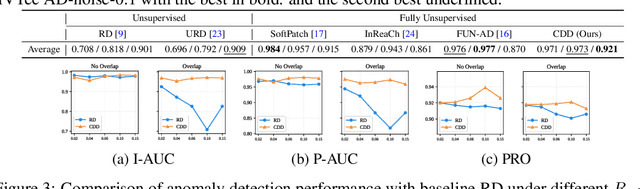

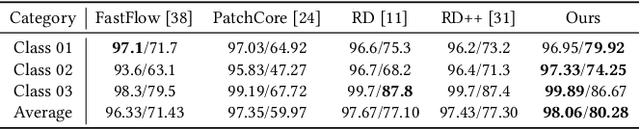

Abstract: Fully Unsupervised Anomaly Detection (FUAD) is a practical extension of Unsupervised Anomaly Detection (UAD), aiming to detect anomalies without any labels even when the training set may contain anomalous samples. To achieve FUAD, we pioneer the introduction of the Knowledge Distillation (KD) paradigm, based on the teacher-student framework, into the FUAD setting. However, because the training data contains anomalies, traditional KD methods risk letting the student learn the teacher's representation of anomalies under the FUAD setting, resulting in poor anomaly detection performance. To address this issue, we propose a novel Cross-Domain Distillation (CDD) framework based on the widely studied reverse distillation (RD) paradigm. Specifically, we design Domain-Specific Training, which divides the training set into multiple domains with lower anomaly ratios and trains a domain-specific student for each. Cross-Domain Knowledge Aggregation is then performed, where pseudo-normal features generated by the domain-specific students collaboratively guide a global student to learn generalized normal representations across all samples. Experimental results on noisy versions of the MVTec AD and VisA datasets demonstrate that our method achieves significant performance improvements over the baseline, validating its effectiveness under the FUAD setting.

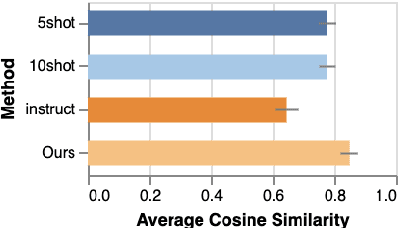

NoveltyBench: Evaluating Language Models for Humanlike Diversity

Apr 08, 2025

Abstract: Language models have demonstrated remarkable capabilities on standard benchmarks, yet they increasingly suffer from mode collapse, the inability to generate diverse and novel outputs. Our work introduces NoveltyBench, a benchmark specifically designed to evaluate the ability of language models to produce multiple distinct and high-quality outputs. NoveltyBench utilizes prompts curated to elicit diverse answers and filtered real-world user queries. Evaluating 20 leading language models, we find that current state-of-the-art systems generate significantly less diversity than human writers. Notably, larger models within a family often exhibit less diversity than their smaller counterparts, challenging the notion that capability on standard benchmarks translates directly to generative utility. While prompting strategies like in-context regeneration can elicit diversity, our findings highlight a fundamental lack of distributional diversity in current models, reducing their utility for users seeking varied responses and suggesting the need for new training and evaluation paradigms that prioritize diversity alongside quality.
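One simple way to make "multiple distinct outputs" concrete is to count how many equivalence classes a set of generations falls into. The sketch below is an illustration only, not the benchmark's scoring code: `count_distinct` and `same_after_normalizing` are hypothetical names, and the string-normalization check is a stand-in for whatever equivalence judgment an evaluator would actually use.

```python
from typing import Callable, List


def count_distinct(outputs: List[str], equivalent: Callable[[str, str], bool]) -> int:
    """Greedily group outputs into classes and return the number of classes."""
    representatives: List[str] = []
    for text in outputs:
        # Open a new class only if no existing representative matches.
        if not any(equivalent(text, rep) for rep in representatives):
            representatives.append(text)
    return len(representatives)


def same_after_normalizing(a: str, b: str) -> bool:
    # Hypothetical stand-in for a learned or human equivalence judgment.
    return a.strip().lower() == b.strip().lower()


samples = ["Paris", "paris ", "Lyon", "Marseille", "lyon"]
distinct = count_distinct(samples, same_after_normalizing)
print(distinct)
```

A model that returns the same answer on every regeneration scores 1 under this count no matter how many samples are drawn, which is the mode-collapse behavior the abstract describes.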

Hawkes based Representation Learning for Reasoning over Scale-free Community-structured Temporal Knowledge Graphs

Dec 28, 2024

Abstract: Temporal knowledge graph (TKG) reasoning has become a hot topic due to its great value in many practical tasks. The key to TKG reasoning is modeling the structural information and evolutional patterns of TKGs. While great efforts have been devoted to TKG reasoning, the structural and evolutional characteristics of real-world networks have not been considered. In terms of structure, real-world networks usually exhibit clear community structure and scale-free (long-tailed distribution) properties. In terms of evolution, the impact of an event decays as time elapses. In this paper, we propose a novel TKG reasoning model called Hawkes process-based Evolutional Representation Learning Network (HERLN), which learns the structural information and evolutional patterns of a TKG simultaneously while accounting for three characteristics of real-world networks: community structure, scale-free distribution, and temporal decay. First, we find communities in the input TKG so that the encoder produces more similar embeddings within each community. Second, we design a Hawkes process-based relational graph convolutional network to cope with the event impact-decaying phenomenon. Third, we design a conditional decoding method to alleviate biases towards frequent entities caused by the long-tailed distribution. Experimental results show that HERLN achieves significant improvements over the state-of-the-art models.
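For context, event impact that decays over time is commonly modeled with a Hawkes process using an exponential kernel. The abstract does not give HERLN's exact formulation, so the form below is the textbook univariate version, with the symbols $\mu$ (base rate), $\alpha$ (excitation), and $\delta$ (decay rate) chosen here for illustration:

```latex
\lambda(t) = \mu + \sum_{t_i < t} \alpha \, e^{-\delta (t - t_i)},
\qquad \mu \ge 0,\ \alpha \ge 0,\ \delta > 0
```

Each past event at time $t_i$ raises the intensity by $\alpha$, and that contribution shrinks exponentially as $t - t_i$ grows.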

Unlocking the Potential of Reverse Distillation for Anomaly Detection

Dec 10, 2024

Abstract: Knowledge Distillation (KD) is a promising approach for unsupervised Anomaly Detection (AD). However, the student network's over-generalization often diminishes the crucial representation differences between teacher and student in anomalous regions, leading to detection failures. To address this problem, the widely accepted Reverse Distillation (RD) paradigm designs an asymmetric teacher and student, using an encoder as the teacher and a decoder as the student. Yet, the design of RD does not ensure that the teacher encoder effectively distinguishes between normal and abnormal features or that the student decoder generates anomaly-free features. Additionally, the absence of skip connections results in a loss of fine details during feature reconstruction. To address these issues, we propose RD with Expert, which introduces a novel Expert-Teacher-Student network for simultaneous distillation of both the teacher encoder and student decoder. The added expert network enhances the student's ability to generate normal features and optimizes the teacher's differentiation between normal and abnormal features, reducing missed detections. Additionally, Guided Information Injection is designed to filter and transfer features from teacher to student, improving detail reconstruction and minimizing false positives. Experiments on several benchmarks show that our method outperforms existing unsupervised AD methods under the RD paradigm, fully unlocking RD's potential.
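The teacher-student idea can be illustrated by scoring each spatial location with the cosine distance between the teacher's and the student's feature vectors, so that a large distance flags a likely anomaly. This is a minimal sketch under that assumption, using nested lists in place of feature tensors. The names `cosine_distance` and `anomaly_map` are illustrative, and the abstract does not specify the scoring function the paper actually uses.

```python
import math
from typing import List, Sequence

FeatureGrid = List[List[Sequence[float]]]  # height x width x channels


def cosine_distance(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 1.0  # treat an all-zero feature as orthogonal to everything
    return 1.0 - dot / (norm_u * norm_v)


def anomaly_map(teacher: FeatureGrid, student: FeatureGrid) -> List[List[float]]:
    """Per-location teacher/student disagreement; higher means more anomalous."""
    return [
        [cosine_distance(t, s) for t, s in zip(t_row, s_row)]
        for t_row, s_row in zip(teacher, student)
    ]


# Toy 2x2 grid: the student matches the teacher everywhere except bottom-right.
teacher = [[[1, 0, 0], [0, 1, 0]], [[0, 0, 1], [1, 1, 0]]]
student = [[[1, 0, 0], [0, 1, 0]], [[0, 0, 1], [1, -1, 0]]]
scores = anomaly_map(teacher, student)
print(scores)
```

Over-generalization, as described in the abstract, corresponds to the student matching the teacher even at anomalous locations, which would drive these scores toward zero everywhere.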

ONER: Online Experience Replay for Incremental Anomaly Detection

Dec 05, 2024

Abstract: Incremental anomaly detection sequentially recognizes abnormal regions in novel categories for dynamic industrial scenarios. This remains highly challenging due to knowledge overwriting and feature conflicts, leading to catastrophic forgetting. In this work, we propose ONER, an end-to-end ONline Experience Replay method, which efficiently mitigates catastrophic forgetting while adapting to new tasks at minimal cost. Specifically, our framework utilizes two types of experiences from past tasks: decomposed prompts and semantic prototypes, addressing both model parameter updates and feature optimization. The decomposed prompts consist of learnable components that assemble to produce attention-conditioned prompts. These prompts reuse previously learned knowledge, enabling the model to learn novel tasks effectively. The semantic prototypes operate at both pixel and image levels, performing regularization in the latent feature space to prevent forgetting across various tasks. Extensive experiments demonstrate that our method achieves state-of-the-art performance in incremental anomaly detection with significantly reduced forgetting, while efficiently adapting to new categories at minimal cost. These results confirm the efficiency and stability of ONER, making it a powerful solution for real-world applications.

Improve Meta-learning for Few-Shot Text Classification with All You Can Acquire from the Tasks

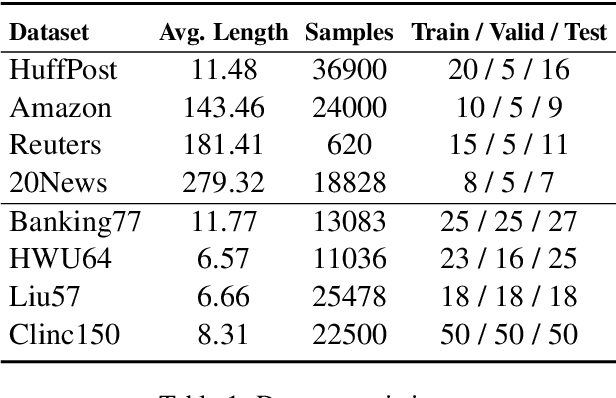

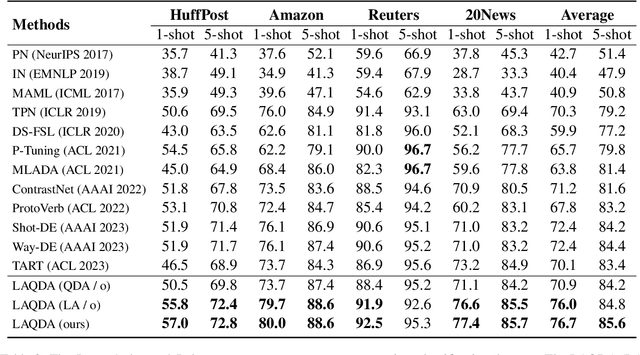

Oct 14, 2024

Abstract: Meta-learning has emerged as a prominent technology for few-shot text classification and has achieved promising performance. However, existing methods often encounter difficulties in drawing accurate class prototypes from support set samples, primarily due to potentially large intra-class differences and small inter-class differences within the task. Recent approaches attempt to incorporate external knowledge or pre-trained language models to augment data, but this requires additional resources and thus does not suit many few-shot scenarios. In this paper, we propose a novel solution to address this issue by adequately leveraging the information within the task itself. Specifically, we utilize label information to construct a task-adaptive metric space, thereby adaptively reducing the intra-class differences and magnifying the inter-class differences. We further employ the optimal transport technique to estimate class prototypes jointly with query set samples, mitigating the problem of inaccurate and ambiguous support set samples caused by large intra-class differences. We conduct extensive experiments on eight benchmark datasets, and our approach shows clear advantages over state-of-the-art models across all the tasks on all the datasets. For reproducibility, all the datasets and code are available at https://github.com/YvoGao/LAQDA.
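To illustrate how optimal transport can bring query samples into prototype estimation, the sketch below softly assigns queries to classes with Sinkhorn iterations and then blends each support prototype with its transported query mean. It is an assumption-laden illustration rather than the released LAQDA code: uniform marginals, squared Euclidean cost, the 50/50 blend, and the names `sinkhorn` and `refine_prototypes` are all choices made here.

```python
import math
from typing import List

Vector = List[float]


def sq_dist(a: Vector, b: Vector) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def sinkhorn(cost: List[List[float]], eps: float = 0.5, iters: int = 200) -> List[List[float]]:
    """Entropic OT plan with uniform marginals over rows (queries) and columns (classes)."""
    n, k = len(cost), len(cost[0])
    # Very large cost / eps ratios can underflow to zero; fine for this toy scale.
    kernel = [[math.exp(-c / eps) for c in row] for row in cost]
    u, v = [1.0] * n, [1.0] * k
    for _ in range(iters):
        u = [(1.0 / n) / sum(kernel[i][j] * v[j] for j in range(k)) for i in range(n)]
        v = [(1.0 / k) / sum(kernel[i][j] * u[i] for i in range(n)) for j in range(k)]
    return [[u[i] * kernel[i][j] * v[j] for j in range(k)] for i in range(n)]


def refine_prototypes(prototypes: List[Vector], queries: List[Vector]) -> List[Vector]:
    cost = [[sq_dist(q, p) for p in prototypes] for q in queries]
    plan = sinkhorn(cost)
    refined = []
    for j, proto in enumerate(prototypes):
        mass = sum(plan[i][j] for i in range(len(queries)))
        query_mean = [
            sum(plan[i][j] * queries[i][d] for i in range(len(queries))) / mass
            for d in range(len(proto))
        ]
        # Equal-weight blend of the support prototype and the transported query mean.
        refined.append([(p + q) / 2.0 for p, q in zip(proto, query_mean)])
    return refined


support_prototypes = [[0.0, 0.0], [4.0, 4.0]]
queries = [[0.5, 0.5], [-0.5, 0.5], [4.5, 3.5], [3.5, 4.5]]
refined = refine_prototypes(support_prototypes, queries)
print(refined)
```

The uniform column marginal forces every class to receive the same total query mass, which is a strong assumption that only suits balanced query sets.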

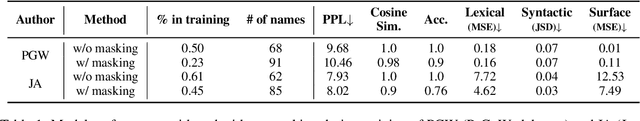

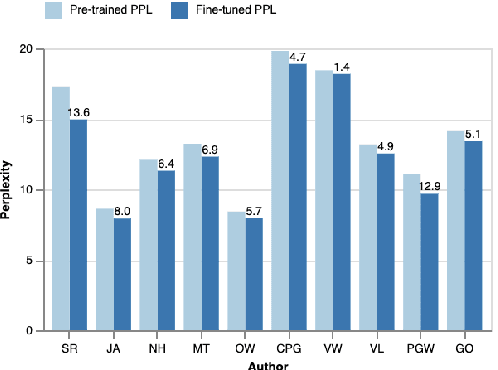

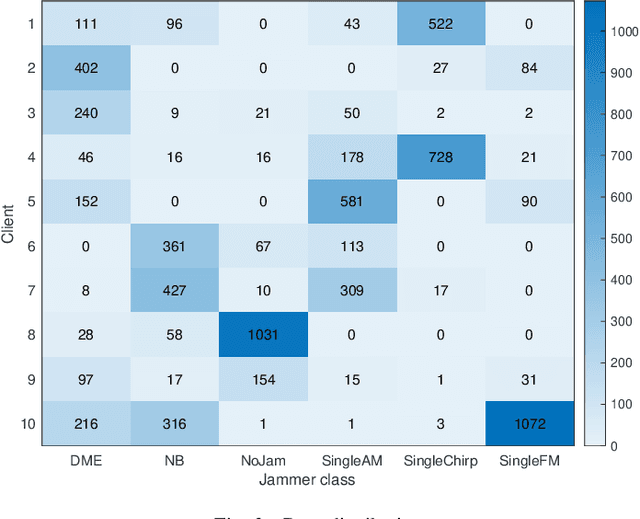

Customizing Large Language Model Generation Style using Parameter-Efficient Finetuning

Sep 06, 2024

Abstract: One-size-fits-all large language models (LLMs) are increasingly being used to help people with their writing. However, the style these models are trained to write in may not suit all users or use cases. LLMs would be more useful as writing assistants if their idiolect could be customized to match each user. In this paper, we explore whether parameter-efficient finetuning (PEFT) with Low-Rank Adaptation can effectively guide the style of LLM generations. We use this method to customize LLaMA-2 to ten different authors and show that the generated text has lexical, syntactic, and surface alignment with the target author but struggles with content memorization. Our findings highlight the potential of PEFT to support efficient, user-level customization of LLMs.
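Low-Rank Adaptation keeps the pretrained weight matrix frozen and learns a low-rank update that is added to its output. The following is a minimal sketch of that forward pass, with plain lists standing in for tensors. The names `lora_forward`, `w_frozen`, `lora_a`, and `lora_b` are chosen here for illustration, and this is not the paper's training code.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, x: Vector) -> Vector:
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


def lora_forward(x: Vector, w_frozen: Matrix, lora_a: Matrix, lora_b: Matrix, alpha: float) -> Vector:
    """Compute W x + (alpha / r) * B (A x), where only A and B would be trained."""
    rank = len(lora_a)  # A has shape (r, d_in); B has shape (d_out, r)
    base = matvec(w_frozen, x)
    update = matvec(lora_b, matvec(lora_a, x))
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]


x = [1.0, 2.0, 3.0]
w_frozen = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # d_out = 2, d_in = 3
lora_a = [[1.0, 1.0, 1.0]]                     # rank r = 1

# B is initialized to zero, so the adapted layer starts out identical to the frozen one.
at_init = lora_forward(x, w_frozen, lora_a, [[0.0], [0.0]], alpha=2.0)

# After some hypothetical training step, B is non-zero and shifts the output.
adapted = lora_forward(x, w_frozen, lora_a, [[0.5], [0.0]], alpha=2.0)
print(at_init, adapted)
```

Per-author customization then amounts to storing one small `(lora_a, lora_b)` pair per author while sharing the frozen base weights.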

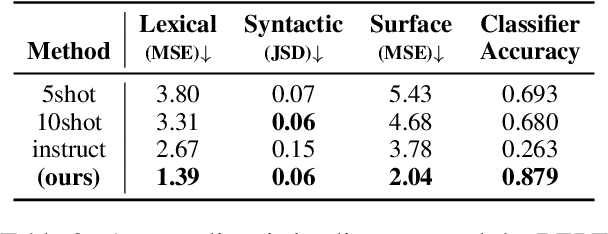

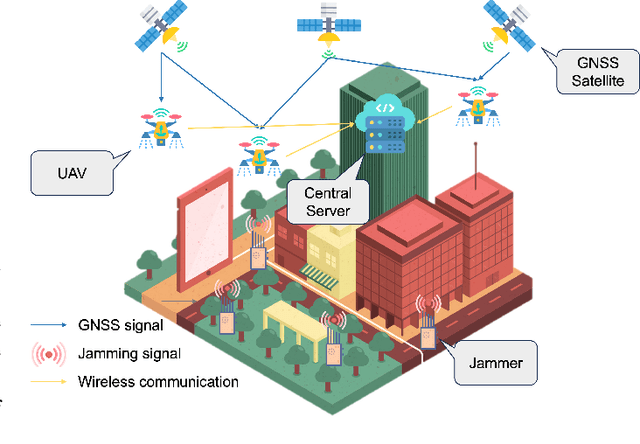

GNSS Interference Classification Using Federated Reservoir Computing

Aug 23, 2024

Abstract: The expanding use of Unmanned Aerial Vehicles (UAVs) in vital areas like traffic management, surveillance, and environmental monitoring highlights the need for robust communication and navigation systems. Particularly vulnerable are Global Navigation Satellite Systems (GNSS), which face a spectrum of interference and jamming threats that can significantly undermine their performance. While traditional deep learning approaches are adept at mitigating these issues, they often fall short for UAV applications due to significant computational demands and the complexities of managing large, centralized datasets. In response, this paper introduces Federated Reservoir Computing (FedRC) as a potent and efficient solution tailored to enhance interference classification for the GNSS used by UAVs. Our experimental results demonstrate that FedRC not only achieves faster convergence but also sustains lower loss levels than traditional models, highlighting its exceptional adaptability and operational efficiency.
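In reservoir computing, a fixed random recurrent network turns an input signal into a state vector and only a linear readout on that state is trained, which keeps on-device cost low. The sketch below pairs such a reservoir with sample-weighted averaging of client readouts. The abstract does not describe FedRC's aggregation rule, so the FedAvg-style step and every name here (`make_reservoir`, `run_reservoir`, `federated_average`) are assumptions for illustration.

```python
import math
import random
from typing import List, Tuple

Matrix = List[List[float]]


def make_reservoir(n_in: int, n_units: int, seed: int) -> Tuple[Matrix, Matrix]:
    rng = random.Random(seed)
    w_in = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_units)]
    w_rec = []
    for _ in range(n_units):
        row = [rng.uniform(-1, 1) for _ in range(n_units)]
        total = sum(abs(v) for v in row)
        # Absolute row sums of 0.9 make the recurrent map a contraction,
        # a simple sufficient condition for fading memory.
        w_rec.append([0.9 * v / total for v in row])
    return w_in, w_rec


def run_reservoir(signal: List[List[float]], w_in: Matrix, w_rec: Matrix) -> List[float]:
    """Feed the signal through the fixed reservoir and return the final state."""
    state = [0.0] * len(w_rec)
    for sample in signal:
        state = [
            math.tanh(
                sum(a * b for a, b in zip(w_in[i], sample))
                + sum(a * b for a, b in zip(w_rec[i], state))
            )
            for i in range(len(w_rec))
        ]
    return state


def federated_average(readouts: List[List[float]], sizes: List[int]) -> List[float]:
    """Average client readout weights, weighted by local sample counts."""
    total = sum(sizes)
    return [
        sum(w[i] * n for w, n in zip(readouts, sizes)) / total
        for i in range(len(readouts[0]))
    ]


# All clients share the same seed so their reservoirs are identical.
w_in, w_rec = make_reservoir(n_in=2, n_units=5, seed=0)
state = run_reservoir([[0.1, -0.2], [0.3, 0.0], [-0.1, 0.4]], w_in, w_rec)
global_readout = federated_average([[1.0, 2.0], [3.0, 4.0]], sizes=[1, 3])
print(len(state), global_readout)
```

Because only the readout vectors travel between clients and server, the communication payload is one weight per reservoir unit per class rather than a full network.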

Dual-Modeling Decouple Distillation for Unsupervised Anomaly Detection

Aug 07, 2024

Abstract: Knowledge distillation based on a student-teacher network is one of the mainstream solution paradigms for the challenging unsupervised anomaly detection task, using the difference in representation capabilities between the teacher and student networks to localize anomalies. However, over-generalization of the student network to the teacher network may leave negligible differences in how the two represent anomalies, which hurts detection effectiveness. Existing methods address this possible over-generalization by using structurally differentiated students and teachers or by explicitly expanding the distilled information from the content perspective, which inevitably increases the likelihood of underfitting in the student network and leads to poor anomaly detection at the center or edge of an anomaly. In this paper, we propose Dual-Modeling Decouple Distillation (DMDD) for unsupervised anomaly detection. In DMDD, a Decouple Student-Teacher Network is proposed to decouple the initial student features into normality and abnormality features. We further introduce Dual-Modeling Distillation based on normal-anomaly image pairs, fitting the normality features of the anomalous image to the teacher features of the corresponding normal image while widening the distance between the abnormality features and the teacher features in anomalous regions. Combining these two distillation ideas, we achieve anomaly detection that focuses on both the edge and the center of an anomaly. Finally, a Multi-perception Segmentation Network is proposed to achieve focused anomaly map fusion based on multiple attention. Experimental results on MVTec AD show that DMDD surpasses the SOTA localization performance of previous knowledge distillation-based methods, reaching 98.85% pixel-level AUC and 96.13% PRO.

Leveraging Foundation Models for Multi-modal Federated Learning with Incomplete Modality

Jun 16, 2024

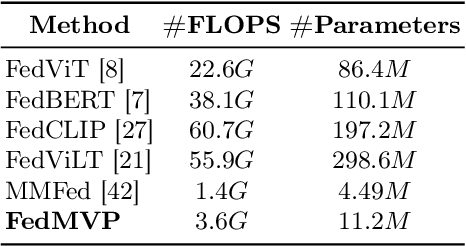

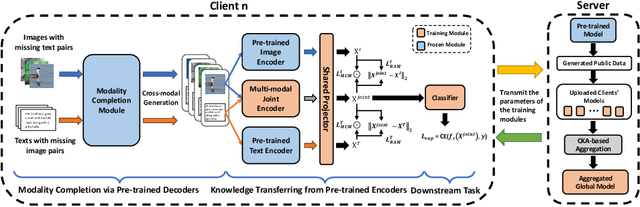

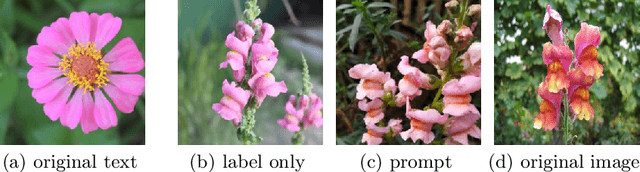

Abstract: Federated learning (FL) has made tremendous progress in providing collaborative training solutions for distributed data silos with privacy guarantees. However, few existing works explore a more realistic scenario where the clients hold multiple data modalities. In this paper, we aim to solve a novel challenge in multi-modal federated learning (MFL), modality missing: the clients may lose part of the modalities in their local data sets. To tackle this problem, we propose a novel multi-modal federated learning method, Federated Multi-modal contrastiVe training with Pre-trained completion (FedMVP), which integrates large-scale pre-trained models to enhance federated training. In the proposed FedMVP framework, each client deploys a large-scale pre-trained model with frozen parameters for modality completion and representation knowledge transfer, enabling efficient and robust local training. On the server side, we utilize generated data to uniformly measure the representation similarity among the uploaded client models and construct a graph perspective to aggregate them according to their importance in the system. We demonstrate that the model achieves superior performance on two real-world image-text classification datasets and is robust to the performance degradation caused by missing modalities.
