Wen Yang

AIMS: An Adaptive Integration of Multi-Sensor Measurements for Quadrupedal Robot Localization

Jan 04, 2026

Abstract:This paper addresses the problem of accurate localization for quadrupedal robots operating in narrow tunnel-like environments. Due to the long and homogeneous characteristics of such scenarios, LiDAR measurements often provide weak geometric constraints, making traditional sensor fusion methods susceptible to accumulated motion estimation errors. To address these challenges, we propose AIMS, an adaptive LiDAR-IMU-leg odometry fusion method for robust quadrupedal robot localization in degenerate environments. The proposed method is formulated within an error-state Kalman filtering framework, where LiDAR and leg odometry measurements are integrated with IMU-based state prediction, and measurement noise covariance matrices are adaptively adjusted based on online degeneracy-aware reliability assessment. Experimental results obtained in narrow corridor environments demonstrate that the proposed method improves localization accuracy and robustness compared with state-of-the-art approaches.
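To make the idea of adaptively adjusted measurement noise concrete, here is a minimal scalar sketch. It is not the AIMS formulation: the abstract describes an error-state Kalman filter with covariance matrices, while this toy uses a single scalar state. The `reliability` score and the rule `r = base_r / reliability` are assumptions introduced only for illustration.

```python
def adaptive_scalar_update(x, p, z, base_r, reliability):
    """One scalar Kalman measurement update with reliability-scaled noise.

    x, p        : prior state estimate and its variance
    z           : direct measurement of the same quantity
    base_r      : nominal measurement noise variance
    reliability : hypothetical score in (0, 1]; lower means more degenerate
    """
    if not 0.0 < reliability <= 1.0:
        raise ValueError("reliability must be in (0, 1]")
    r = base_r / reliability   # assumed rule: inflate noise for unreliable sensors
    k = p / (p + r)            # Kalman gain
    x_new = x + k * (z - x)    # corrected estimate
    p_new = (1.0 - k) * p      # corrected variance
    return x_new, p_new


# Same prior and measurement, two different reliability scores.
trusted = adaptive_scalar_update(0.0, 1.0, 2.0, base_r=1.0, reliability=1.0)
degenerate = adaptive_scalar_update(0.0, 1.0, 2.0, base_r=1.0, reliability=0.1)
```

With the lower reliability score the gain shrinks, so the estimate stays closer to the prediction and the degenerate measurement has less influence.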

Parallel Scaling Law: Unveiling Reasoning Generalization through A Cross-Linguistic Perspective

Oct 02, 2025

Abstract:Recent advancements in Reinforcement Post-Training (RPT) have significantly enhanced the capabilities of Large Reasoning Models (LRMs), sparking increased interest in the generalization of RL-based reasoning. While existing work has primarily focused on investigating its generalization across tasks or modalities, this study proposes a novel cross-linguistic perspective to investigate reasoning generalization. This raises a crucial question: $\textit{Does the reasoning capability achieved from English RPT effectively transfer to other languages?}$ We address this by systematically evaluating English-centric LRMs on multilingual reasoning benchmarks and introducing a metric to quantify cross-lingual transferability. Our findings reveal that cross-lingual transferability varies significantly across initial model, target language, and training paradigm. Through interventional studies, we find that models with stronger initial English capabilities tend to over-rely on English-specific patterns, leading to diminished cross-lingual generalization. To address this, we conduct a thorough parallel training study. Experimental results yield three key findings: $\textbf{First-Parallel Leap}$, a substantial leap in performance when transitioning from monolingual to just a single parallel language, and a predictable $\textbf{Parallel Scaling Law}$, revealing that cross-lingual reasoning transfer follows a power-law with the number of training parallel languages. Moreover, we identify the discrepancy between actual monolingual performance and the power-law prediction as $\textbf{Monolingual Generalization Gap}$, indicating that English-centric LRMs fail to fully generalize across languages. Our study challenges the assumption that LRM reasoning mirrors human cognition, providing critical insights for the development of more language-agnostic LRMs.
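As an illustration of what fitting a power law to such a trend might look like, the sketch below fits $y = a \cdot n^{b}$ by least squares in log-log space. The functional form, the function name `fit_power_law`, and the data points are assumptions for illustration; the data are synthetic and are not results from the paper.

```python
import math


def fit_power_law(ns, ys):
    """Least-squares fit of y = a * n**b, done as a line fit in log-log space."""
    if len(ns) != len(ys) or len(ns) < 2:
        raise ValueError("need at least two (n, y) pairs of equal length")
    log_n = [math.log(n) for n in ns]
    log_y = [math.log(y) for y in ys]
    mean_n = sum(log_n) / len(log_n)
    mean_y = sum(log_y) / len(log_y)
    sxx = sum((u - mean_n) ** 2 for u in log_n)
    sxy = sum((u - mean_n) * (v - mean_y) for u, v in zip(log_n, log_y))
    b = sxy / sxx                       # slope in log-log space is the exponent
    a = math.exp(mean_y - b * mean_n)   # intercept gives the scale factor
    return a, b


# Synthetic scores generated from y = 2 * n**0.5 (NOT data from the paper).
ns = [1, 2, 4, 8]
ys = [2.0 * n ** 0.5 for n in ns]
a, b = fit_power_law(ns, ys)
```

Under this assumed form, a gap of the kind the abstract describes would be the difference between the fitted prediction at `n = 1` and the score actually measured for the monolingual model.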

GUI-ARP: Enhancing Grounding with Adaptive Region Perception for GUI Agents

Sep 19, 2025

Abstract:Existing GUI grounding methods often struggle with fine-grained localization in high-resolution screenshots. To address this, we propose GUI-ARP, a novel framework that enables adaptive multi-stage inference. Equipped with the proposed Adaptive Region Perception (ARP) and Adaptive Stage Controlling (ASC), GUI-ARP dynamically exploits visual attention for cropping task-relevant regions and adapts its inference strategy, performing a single-stage inference for simple cases and a multi-stage analysis for more complex scenarios. This is achieved through a two-phase training pipeline that integrates supervised fine-tuning with reinforcement fine-tuning based on Group Relative Policy Optimization (GRPO). Extensive experiments demonstrate that the proposed GUI-ARP achieves state-of-the-art performance on challenging GUI grounding benchmarks, with a 7B model reaching 60.8% accuracy on ScreenSpot-Pro and 30.9% on the UI-Vision benchmark. Notably, GUI-ARP-7B demonstrates strong competitiveness against open-source 72B models (UI-TARS-72B at 38.1%) and proprietary models.

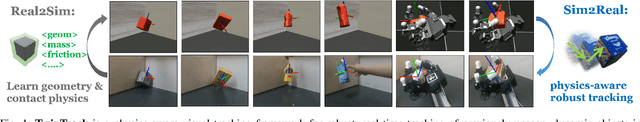

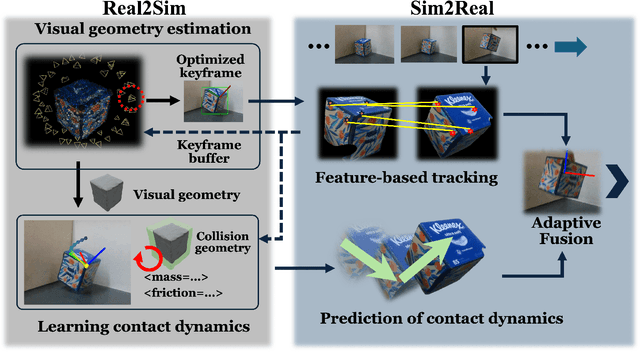

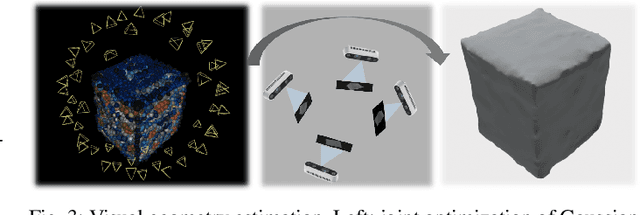

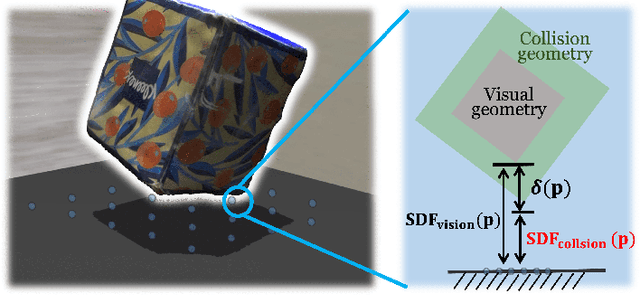

TwinTrack: Bridging Vision and Contact Physics for Real-Time Tracking of Unknown Dynamic Objects

May 28, 2025

Abstract:Real-time tracking of previously unseen, highly dynamic objects in contact-rich environments -- such as during dexterous in-hand manipulation -- remains a significant challenge. Purely vision-based tracking often suffers from heavy occlusions due to the frequent contact interactions and motion blur caused by abrupt motion during contact impacts. We propose TwinTrack, a physics-aware visual tracking framework that enables robust and real-time 6-DoF pose tracking of unknown dynamic objects in a contact-rich scene by leveraging the contact physics of the observed scene. At the core of TwinTrack is an integration of Real2Sim and Sim2Real. In Real2Sim, we combine the complementary strengths of vision and contact physics to estimate the object's collision geometry and physical properties: the object's geometry is first reconstructed from vision, then updated along with other physical parameters from contact dynamics for physical accuracy. In Sim2Real, robust pose estimation of the object is achieved by adaptive fusion between visual tracking and prediction of the learned contact physics. TwinTrack is built on a GPU-accelerated, deeply customized physics engine to ensure real-time performance. We evaluate our method on two contact-rich scenarios: object falling with rich contact impacts against the environment, and contact-rich in-hand manipulation. Experimental results demonstrate that, compared to baseline methods, TwinTrack achieves significantly more robust, accurate, and real-time 6-DoF tracking in these challenging scenarios, with tracking speed exceeding 20 Hz. Project page: https://irislab.tech/TwinTrack-webpage/

KTAE: A Model-Free Algorithm to Key-Tokens Advantage Estimation in Mathematical Reasoning

May 22, 2025

Abstract:Recent advances have demonstrated that integrating reinforcement learning with rule-based rewards can significantly enhance the reasoning capabilities of large language models, even without supervised fine-tuning. However, prevalent reinforcement learning algorithms, such as GRPO and its variants like DAPO, suffer from a coarse granularity issue when computing the advantage. Specifically, they compute rollout-level advantages that assign identical values to every token within a sequence, failing to capture token-specific contributions and hindering effective learning. To address this limitation, we propose Key-token Advantage Estimation (KTAE) - a novel algorithm that estimates fine-grained, token-level advantages without introducing additional models. KTAE leverages the correctness of sampled rollouts and applies statistical analysis to quantify the importance of individual tokens within a sequence to the final outcome. This quantified token-level importance is then combined with the rollout-level advantage to obtain a more fine-grained token-level advantage estimation. Empirical results show that models trained with GRPO+KTAE and DAPO+KTAE outperform baseline methods across five mathematical reasoning benchmarks. Notably, they achieve higher accuracy with shorter responses and even surpass R1-Distill-Qwen-1.5B using the same base model.
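The coarse-granularity issue can be seen in a small sketch of group-relative, rollout-level advantages. This illustrates only the baseline behaviour the abstract criticizes, not KTAE itself, whose statistical analysis is not specified here. The function names, the use of the population standard deviation, and the `eps` constant are assumptions.

```python
from statistics import mean, pstdev


def rollout_advantages(rewards, eps=1e-6):
    """Normalize each rollout's reward against its sampling group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # assumption: population std; implementations vary
    return [(r - mu) / (sigma + eps) for r in rewards]


def broadcast_to_tokens(advantages, lengths):
    """Copy each rollout-level advantage onto every token of that rollout."""
    return [[a] * n for a, n in zip(advantages, lengths)]


# Four rollouts for one prompt, rule-based reward 1 (correct) or 0 (incorrect).
rewards = [1.0, 0.0, 0.0, 1.0]
lengths = [3, 5, 2, 4]  # hypothetical token counts per rollout
advantages = rollout_advantages(rewards)
token_advantages = broadcast_to_tokens(advantages, lengths)
```

Every token in a rollout receives the same value, so a decisive reasoning step and a filler token are credited identically. A token-level scheme would replace the uniform copy in `broadcast_to_tokens` with per-token weights.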

DF-Calib: Targetless LiDAR-Camera Calibration via Depth Flow

Apr 02, 2025

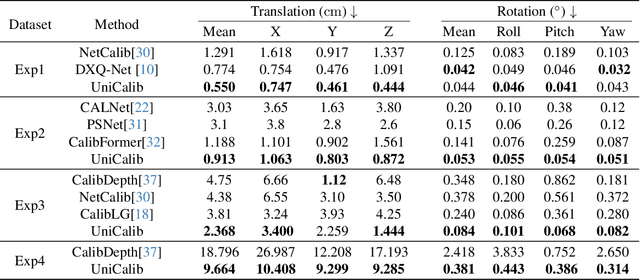

Abstract:Precise LiDAR-camera calibration is crucial for integrating these two sensors into robotic systems to achieve robust perception. In applications like autonomous driving, online targetless calibration enables a prompt sensor misalignment correction from mechanical vibrations without extra targets. However, existing methods exhibit limitations in effectively extracting consistent features from LiDAR and camera data and fail to prioritize salient regions, compromising cross-modal alignment robustness. To address these issues, we propose DF-Calib, a LiDAR-camera calibration method that reformulates calibration as an intra-modality depth flow estimation problem. DF-Calib estimates a dense depth map from the camera image and completes the sparse LiDAR projected depth map, using a shared feature encoder to extract consistent depth-to-depth features, effectively bridging the 2D-3D cross-modal gap. Additionally, we introduce a reliability map to prioritize valid pixels and propose a perceptually weighted sparse flow loss to enhance depth flow estimation. Experimental results across multiple datasets validate its accuracy and generalization, with DF-Calib achieving a mean translation error of 0.635 cm and rotation error of 0.045 degrees on the KITTI dataset.

LiDAR-enhanced 3D Gaussian Splatting Mapping

Mar 07, 2025

Abstract:This paper introduces LiGSM, a novel LiDAR-enhanced 3D Gaussian Splatting (3DGS) mapping framework that improves the accuracy and robustness of 3D scene mapping by integrating LiDAR data. LiGSM constructs a joint loss from images and LiDAR point clouds to estimate the poses and optimize their extrinsic parameters, enabling dynamic adaptation to variations in sensor alignment. Furthermore, it leverages LiDAR point clouds to initialize 3DGS, providing denser and more reliable starting points compared to sparse SfM points. In scene rendering, the framework augments standard image-based supervision with depth maps generated from LiDAR projections, ensuring an accurate scene representation in both geometry and photometry. Experiments on public and self-collected datasets demonstrate that LiGSM outperforms comparative methods in pose tracking and scene rendering.

Implicit Cross-Lingual Rewarding for Efficient Multilingual Preference Alignment

Mar 06, 2025

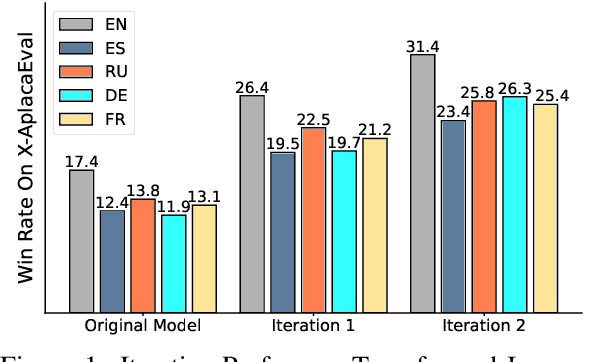

Abstract:Direct Preference Optimization (DPO) has become a prominent method for aligning Large Language Models (LLMs) with human preferences. While DPO has enabled significant progress in aligning English LLMs, multilingual preference alignment is hampered by data scarcity. To address this, we propose a novel approach that $\textit{captures}$ learned preferences from well-aligned English models by implicit rewards and $\textit{transfers}$ them to other languages through iterative training. Specifically, we derive an implicit reward model from the logits of an English DPO-aligned model and its corresponding reference model. This reward model is then leveraged to annotate preference relations in cross-lingual instruction-following pairs, using English instructions to evaluate multilingual responses. The annotated data is subsequently used for multilingual DPO fine-tuning, facilitating preference knowledge transfer from English to other languages. Fine-tuning Llama3 for two iterations resulted in a 12.72% average improvement in Win Rate and a 5.97% increase in Length Control Win Rate across all training languages on the X-AlpacaEval leaderboard. Our findings demonstrate that leveraging existing English-aligned models can enable efficient and effective multilingual preference alignment, significantly reducing the need for extensive multilingual preference data. The code is available at https://github.com/ZNLP/Implicit-Cross-Lingual-Rewarding
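A rough sketch of how an implicit reward can rank two responses is given below. It uses the standard DPO identity that, up to a prompt-only term, the reward is $\beta \left( \log \pi_\theta(y \mid x) - \log \pi_{\text{ref}}(y \mid x) \right)$. The function names, the value of `beta`, and the per-token log-probabilities are assumptions for illustration and are not taken from the released code.

```python
def implicit_reward(policy_logprobs, ref_logprobs, beta=0.1):
    """beta * (log pi_policy(y|x) - log pi_ref(y|x)) from per-token log-probs."""
    if len(policy_logprobs) != len(ref_logprobs):
        raise ValueError("both models must score the same response tokens")
    return beta * (sum(policy_logprobs) - sum(ref_logprobs))


def annotate_pair(response_a, response_b, beta=0.1):
    """Return (chosen, rejected) labels for two responses to one instruction.

    Each response is a (policy_logprobs, ref_logprobs) tuple, scored with the
    English instruction as the prompt.
    """
    reward_a = implicit_reward(*response_a, beta=beta)
    reward_b = implicit_reward(*response_b, beta=beta)
    return ("a", "b") if reward_a >= reward_b else ("b", "a")


# Hypothetical per-token log-probabilities for two multilingual responses.
resp_a = ([-1.0, -0.5], [-1.5, -1.0])  # aligned model prefers this more than ref
resp_b = ([-2.0, -1.0], [-1.5, -1.0])  # aligned model prefers this less than ref
chosen, rejected = annotate_pair(resp_a, resp_b)
```

The prompt-only term cancels when two responses to the same instruction are compared, which is why the log-ratio alone is enough to produce a preference label.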

Mitigating the Impact of Prominent Position Shift in Drone-based RGBT Object Detection

Feb 13, 2025

Abstract:Drone-based RGBT object detection plays a crucial role in many around-the-clock applications. However, real-world drone-viewed RGBT data suffers from the prominent position shift problem, i.e., the position of a tiny object differs greatly in different modalities. For instance, a slight deviation of a tiny object in the thermal modality will cause it to drift away from its own main body in the RGB modality. Considering RGBT data are usually labeled on one modality (reference), this will cause the unlabeled modality (sensed) to lack accurate supervision signals and prevent the detector from learning a good representation. Moreover, the mismatch of the corresponding feature point between the modalities will make the fused features confusing for the detection head. In this paper, we propose to cast the cross-modality box shift issue as the label noise problem and address it on the fly via a novel Mean Teacher-based Cross-modality Box Correction head ensemble (CBC). In this way, the network can learn more informative representations for both modalities. Furthermore, to alleviate the feature map mismatch problem in RGBT fusion, we devise a Shifted Window-Based Cascaded Alignment (SWCA) module. SWCA mines long-range dependencies between the spatially unaligned features inside shifted windows and aligns the sensed features with the reference ones in a cascaded manner. Extensive experiments on two drone-based RGBT object detection datasets demonstrate that the correction results are both visually and quantitatively favorable, thereby improving the detection performance. In particular, our CBC module boosts the precision of the sensed modality ground truth by 25.52 aSim points. Overall, the proposed detector achieves an mAP50 of 43.55 points on RGBTDronePerson and surpasses a state-of-the-art method by 8.6 mAP50 on a shift subset of the DroneVehicle dataset. The code and data will be made publicly available.

Oriented Tiny Object Detection: A Dataset, Benchmark, and Dynamic Unbiased Learning

Dec 16, 2024

Abstract:Detecting oriented tiny objects, which are limited in appearance information yet prevalent in real-world applications, remains an intricate and under-explored problem. To address this, we systematically introduce a new dataset, benchmark, and a dynamic coarse-to-fine learning scheme in this study. Our proposed dataset, AI-TOD-R, features the smallest object sizes among all oriented object detection datasets. Based on AI-TOD-R, we present a benchmark spanning a broad range of detection paradigms, including both fully-supervised and label-efficient approaches. Through investigation, we identify a learning bias present across various learning pipelines: confident objects become increasingly confident, while vulnerable oriented tiny objects are further marginalized, hindering their detection performance. To mitigate this issue, we propose a Dynamic Coarse-to-Fine Learning (DCFL) scheme to achieve unbiased learning. DCFL dynamically updates prior positions to better align with the limited areas of oriented tiny objects, and it assigns samples in a way that balances both quantity and quality across different object shapes, thus mitigating biases in prior settings and sample selection. Extensive experiments across eight challenging object detection datasets demonstrate that DCFL achieves state-of-the-art accuracy, high efficiency, and remarkable versatility. The dataset, benchmark, and code are available at https://chasel-tsui.github.io/AI-TOD-R/.
