Weiming Li

MoSE: Skill-by-Skill Mixture-of-Expert Learning for Autonomous Driving

Jul 10, 2025

Abstract:Recent studies show that large language models (LLMs) and vision language models (VLMs) trained on web-scale data can empower end-to-end autonomous driving systems with better generalization and interpretability. Specifically, by dynamically routing inputs to specialized subsets of parameters, the Mixture-of-Experts (MoE) technique enables general LLMs or VLMs to achieve substantial performance improvements while maintaining computational efficiency. However, general MoE models usually demand extensive training data and complex optimization. In this work, inspired by the learning process of human drivers, we propose a skill-oriented MoE, called MoSE, which mimics human drivers' learning and reasoning processes, skill-by-skill and step-by-step. We propose a skill-oriented routing mechanism that begins with defining and annotating specific skills, enabling experts to identify the necessary driving competencies for various scenarios and reasoning tasks, thereby facilitating skill-by-skill learning. To further align the driving process with multi-step planning in human reasoning and end-to-end driving models, we build a hierarchical skill dataset and pretrain the router to encourage the model to think step-by-step. Unlike multi-round dialogs, MoSE integrates valuable auxiliary tasks (e.g., description, reasoning, planning) in a single forward process without introducing any extra computational cost. With fewer than 3B sparsely activated parameters, our model outperforms several models with 8B+ parameters on the CODA AD corner case reasoning task. Compared to existing methods based on open-source models and data, our approach achieves state-of-the-art performance with a significantly reduced activated model size (by at least 62.5%) in a single-turn conversation.

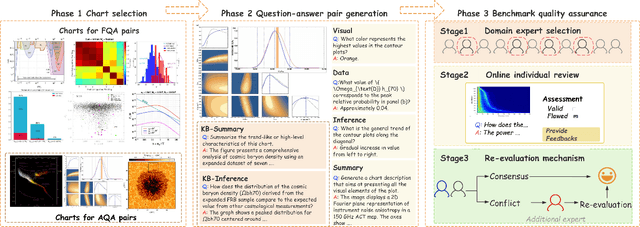

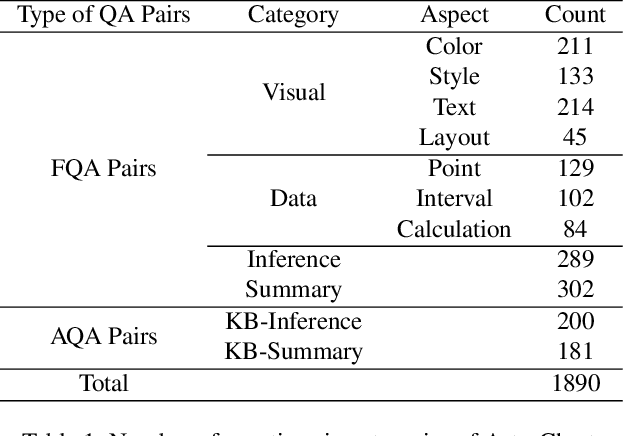

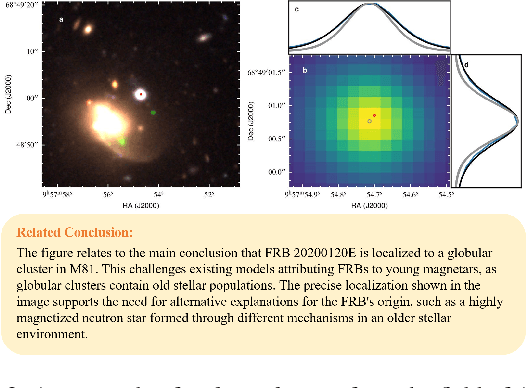

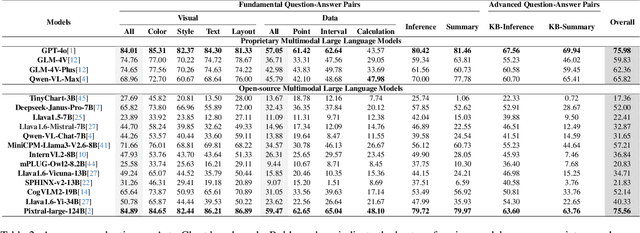

DomainCQA: Crafting Expert-Level QA from Domain-Specific Charts

Mar 25, 2025

Abstract:Chart Question Answering (CQA) benchmarks are essential for evaluating the capability of Multimodal Large Language Models (MLLMs) to interpret visual data. However, current benchmarks focus primarily on the evaluation of general-purpose CQA but fail to adequately capture domain-specific challenges. We introduce DomainCQA, a systematic methodology for constructing domain-specific CQA benchmarks, and demonstrate its effectiveness by developing AstroChart, a CQA benchmark in the field of astronomy. Our evaluation shows that chart reasoning and combining chart information with domain knowledge for deeper analysis and summarization, rather than domain-specific knowledge, pose the primary challenge for existing MLLMs, highlighting a critical gap in current benchmarks. By providing a scalable and rigorous framework, DomainCQA enables more precise assessment and improvement of MLLMs for domain-specific applications.

MapFusion: A Novel BEV Feature Fusion Network for Multi-modal Map Construction

Feb 05, 2025

Abstract:The map construction task plays a vital role in providing the precise and comprehensive static environmental information essential for autonomous driving systems. Primary sensors include cameras and LiDAR, with configurations varying between camera-only, LiDAR-only, or camera-LiDAR fusion, based on cost-performance considerations. While fusion-based methods typically perform best, existing approaches often neglect modality interaction and rely on simple fusion strategies, which suffer from misalignment and information loss. To address these issues, we propose MapFusion, a novel multi-modal Bird's-Eye View (BEV) feature fusion method for map construction. Specifically, to solve the semantic misalignment problem between camera and LiDAR BEV features, we introduce the Cross-modal Interaction Transform (CIT) module, enabling interaction between the two BEV feature spaces and enhancing feature representation through a self-attention mechanism. Additionally, we propose an effective Dual Dynamic Fusion (DDF) module to adaptively select valuable information from different modalities, which can take full advantage of the inherent information between different modalities. Moreover, MapFusion is designed to be simple and plug-and-play, easily integrated into existing pipelines. We evaluate MapFusion on two map construction tasks, High-definition (HD) map construction and BEV map segmentation, to show its versatility and effectiveness. Compared with the state-of-the-art methods, MapFusion achieves 3.6% and 6.2% absolute improvements on the HD map construction and BEV map segmentation tasks on the nuScenes dataset, respectively, demonstrating the superiority of our approach.

Terahertz Channels in Atmospheric Conditions: Propagation Characteristics and Security Performance

Aug 28, 2024

Abstract:With the growing demand for higher wireless data rates, the interest in extending the carrier frequency of wireless links to the terahertz (THz) range has significantly increased. For long-distance outdoor wireless communications, THz channels may suffer substantial power loss and security issues due to atmospheric weather effects. It is crucial to assess the impact of weather on high-capacity data transmission to evaluate wireless system link budgets and performance accurately. In this article, we provide an insight into the propagation characteristics of THz channels under atmospheric conditions and the security aspects of THz communication systems in future applications. We conduct a comprehensive survey of our recent research and experimental findings on THz channel transmission and physical layer security, synthesizing and categorizing the state-of-the-art research in this domain. Our analysis encompasses various atmospheric phenomena, including molecular absorption, scattering effects, and turbulence, elucidating their intricate interactions with THz waves and the resultant implications for channel modeling and system design. Furthermore, we investigate the unique security challenges posed by THz communications, examining potential vulnerabilities and proposing novel countermeasures to enhance the resilience of these high-frequency systems against eavesdropping and other security threats. Finally, we discuss the challenges and limitations of such high-frequency wireless communications and provide insights into future research prospects for realizing the 6G vision, emphasizing the need for innovative solutions to overcome the atmospheric hurdles and security concerns in THz communications.

Large Language Models Understand Layouts

Jul 08, 2024

Abstract:Large language models (LLMs) demonstrate extraordinary abilities in a wide range of natural language processing (NLP) tasks. In this paper, we show that, beyond text understanding capability, LLMs are capable of processing text layouts that are denoted by spatial markers. They are able to answer questions that require explicit spatial perceiving and reasoning, while a drastic performance drop is observed when the spatial markers from the original data are excluded. We perform a series of experiments with the GPT-3.5, Baichuan2, Llama2 and ChatGLM3 models on various types of layout-sensitive datasets for further analysis. The experimental results reveal that the layout understanding ability of LLMs is mainly introduced by the coding data for pretraining, which is further enhanced at the instruction-tuning stage. In addition, layout understanding can be enhanced by integrating low-cost, auto-generated data approached by a novel text game. Finally, we show that layout understanding ability is beneficial for building efficient visual question-answering (VQA) systems.

Is Your HD Map Constructor Reliable under Sensor Corruptions?

Jun 18, 2024

Abstract:Driving systems often rely on high-definition (HD) maps for precise environmental information, which is crucial for planning and navigation. While current HD map constructors perform well under ideal conditions, their resilience to real-world challenges, e.g., adverse weather and sensor failures, is not well understood, raising safety concerns. This work introduces MapBench, the first comprehensive benchmark designed to evaluate the robustness of HD map construction methods against various sensor corruptions. Our benchmark encompasses a total of 29 types of corruptions that occur from cameras and LiDAR sensors. Extensive evaluations across 31 HD map constructors reveal significant performance degradation of existing methods under adverse weather conditions and sensor failures, underscoring critical safety concerns. We identify effective strategies for enhancing robustness, including innovative approaches that leverage multi-modal fusion, advanced data augmentation, and architectural techniques. These insights provide a pathway for developing more reliable HD map construction methods, which are essential for the advancement of autonomous driving technology. The benchmark toolkit and affiliated code and model checkpoints have been made publicly accessible.

Lightweight Change Detection in Heterogeneous Remote Sensing Images with Online All-Integer Pruning Training

May 03, 2024

Abstract:Detection of changes in heterogeneous remote sensing images is vital, especially in response to emergencies like earthquakes and floods. Current homogeneous transformation-based change detection (CD) methods often suffer from high computation and memory costs, which are not friendly to edge-computation devices like onboard CD devices on satellites. To address this issue, this paper proposes a new lightweight CD method for heterogeneous remote sensing images that employs the online all-integer pruning (OAIP) training strategy to efficiently fine-tune the CD network using the current test data. The proposed CD network consists of two visual geometry group (VGG) subnetworks as the backbone architecture. First, in the OAIP-based training process, all the weights, gradients, and intermediate data are quantized to integers to speed up training and reduce memory usage, where the per-layer block exponentiation scaling scheme is utilized to reduce the computation errors of network parameters caused by quantization. Second, an adaptive filter-level pruning method based on the L1-norm criterion is employed to further lighten the fine-tuning process of the CD network. Experimental results show that the proposed OAIP-based method attains similar detection performance (but with significantly reduced computation complexity and memory usage) in comparison with state-of-the-art CD methods.

DOCTR: Disentangled Object-Centric Transformer for Point Scene Understanding

Mar 25, 2024

Abstract:Point scene understanding is a challenging task that processes real-world scene point clouds, aiming to segment each object, estimate its pose, and reconstruct its mesh simultaneously. The recent state-of-the-art method first segments each object and then processes them independently with multiple stages for the different sub-tasks. This leads to a pipeline that is complex to optimize and makes it hard to leverage the relationship constraints between multiple objects. In this work, we propose a novel Disentangled Object-Centric TRansformer (DOCTR) that explores object-centric representation to facilitate learning with multiple objects for the multiple sub-tasks in a unified manner. Each object is represented as a query, and a Transformer decoder is adapted to iteratively optimize all the queries involving their relationship. In particular, we introduce a semantic-geometry disentangled query (SGDQ) design that enables the query features to attend separately to semantic information and geometric information relevant to the corresponding sub-tasks. A hybrid bipartite matching module is employed to make good use of the supervision from all the sub-tasks during training. Qualitative and quantitative experimental results demonstrate that our method achieves state-of-the-art performance on the challenging ScanNet dataset. Code is available at https://github.com/SAITPublic/DOCTR.

DOR3D-Net: Dense Ordinal Regression Network for 3D Hand Pose Estimation

Mar 20, 2024

Abstract:Depth-based 3D hand pose estimation is an important but challenging research task in the human-machine interaction community. Recently, dense regression methods have attracted increasing attention in 3D hand pose estimation, as they offer a low computational burden and high accuracy by densely regressing hand joint offset maps. However, large-scale regression offset values are often affected by noise and outliers, leading to a significant drop in accuracy. To tackle this, we re-formulate 3D hand pose estimation as a dense ordinal regression problem and propose a novel Dense Ordinal Regression 3D Pose Network (DOR3D-Net). Specifically, we first decompose offset value regression into sub-tasks of binary classifications with ordinal constraints. Then, each binary classifier predicts the probability of a binary spatial relationship relative to the joint, which is easier to train and yields a much lower level of noise. The estimated hand joint positions are inferred by aggregating the ordinal regression results at local positions with a weighted sum. Furthermore, both joint regression loss and ordinal regression loss are used to train our DOR3D-Net in an end-to-end manner. Extensive experiments on public datasets (ICVL, MSRA, NYU and HANDS2017) show that our design provides significant improvements over SOTA methods.

DVI-SLAM: A Dual Visual Inertial SLAM Network

Sep 25, 2023

Abstract:Recent deep learning based visual simultaneous localization and mapping (SLAM) methods have made significant progress. However, how to make full use of visual information and better integrate it with an inertial measurement unit (IMU) in visual SLAM remains worth investigating. This paper proposes a novel deep SLAM network with dual visual factors. The basic idea is to integrate both the photometric factor and the re-projection factor into the end-to-end differentiable structure through a multi-factor data association module. We show that the proposed network dynamically learns and adjusts the confidence maps of both visual factors and that it can be further extended to include the IMU factors as well. Extensive experiments validate that our proposed method significantly outperforms the state-of-the-art methods on several public datasets, including TartanAir, EuRoC and ETH3D-SLAM. Specifically, when dynamically fusing the three factors together, the absolute trajectory error for the monocular and stereo configurations on the EuRoC dataset is reduced by 45.3% and 36.2%, respectively.
