Xiongfeng Peng

MoSE: Skill-by-Skill Mixture-of-Expert Learning for Autonomous Driving

Jul 10, 2025

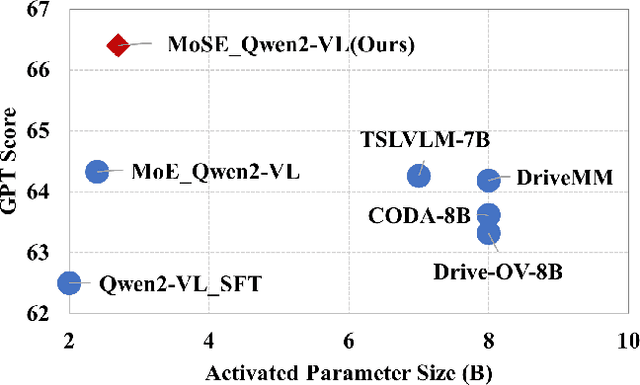

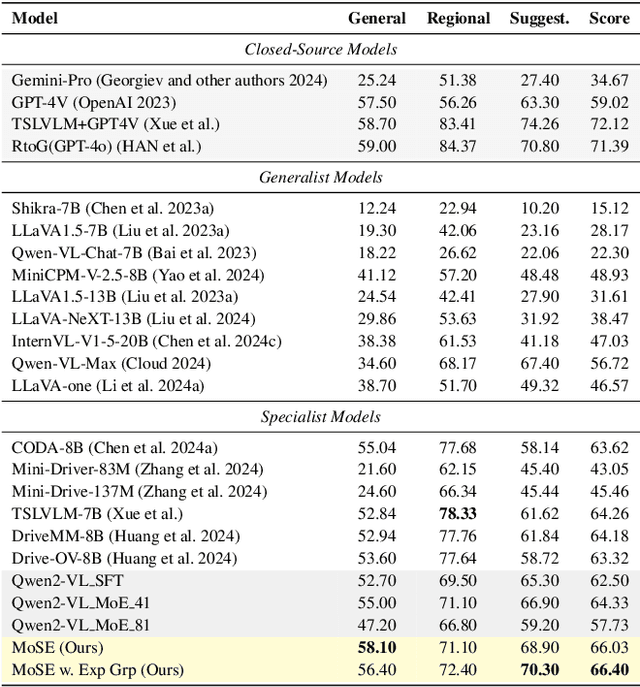

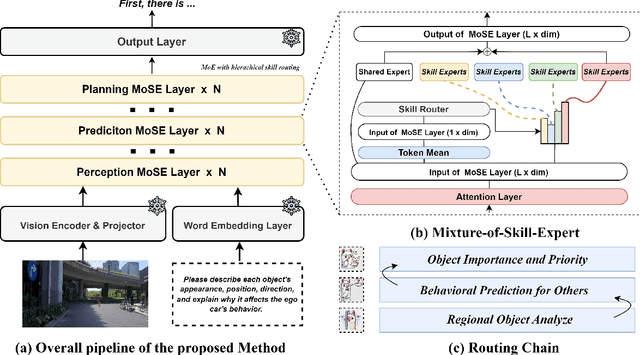

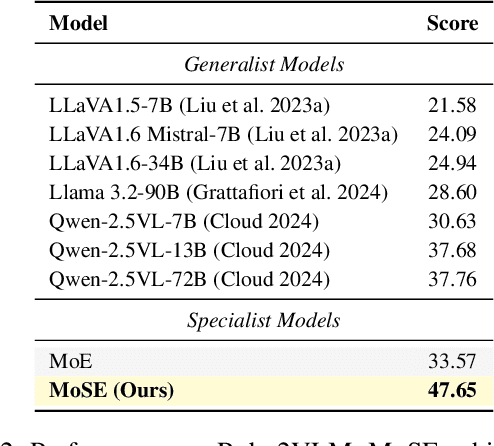

Abstract: Recent studies show that large language models (LLMs) and vision language models (VLMs) trained on web-scale data can give end-to-end autonomous driving systems better generalization and interpretability. Specifically, by dynamically routing inputs to specialized subsets of parameters, the Mixture-of-Experts (MoE) technique enables general LLMs or VLMs to achieve substantial performance improvements while maintaining computational efficiency. However, general MoE models usually demand extensive training data and complex optimization. In this work, inspired by the learning process of human drivers, we propose a skill-oriented MoE, called MoSE, which mimics human drivers' learning process and reasoning process, skill-by-skill and step-by-step. We propose a skill-oriented routing mechanism that begins with defining and annotating specific skills, enabling experts to identify the necessary driving competencies for various scenarios and reasoning tasks, thereby facilitating skill-by-skill learning. To further align the driving process with multi-step planning in human reasoning and end-to-end driving models, we build a hierarchical skill dataset and pretrain the router to encourage the model to think step-by-step. Unlike multi-round dialogs, MoSE integrates valuable auxiliary tasks (e.g., description, reasoning, planning) in one single forward process without introducing any extra computational cost. With less than 3B sparsely activated parameters, our model outperforms several models with 8B+ parameters on the CODA AD corner case reasoning task. Compared to existing methods based on open-source models and data, our approach achieves state-of-the-art performance with a significantly reduced activated model size (by at least $62.5\%$) in a single-turn conversation.

DVI-SLAM: A Dual Visual Inertial SLAM Network

Sep 25, 2023

Abstract: Recent deep learning based visual simultaneous localization and mapping (SLAM) methods have made significant progress. However, how to make full use of visual information and better integrate it with an inertial measurement unit (IMU) in visual SLAM still has research value. This paper proposes a novel deep SLAM network with dual visual factors. The basic idea is to integrate both a photometric factor and a re-projection factor into the end-to-end differentiable structure through a multi-factor data association module. We show that the proposed network dynamically learns and adjusts the confidence maps of both visual factors, and that it can be further extended to include IMU factors as well. Extensive experiments validate that our proposed method significantly outperforms the state-of-the-art methods on several public datasets, including TartanAir, EuRoC and ETH3D-SLAM. Specifically, when dynamically fusing the three factors together, the absolute trajectory error for the monocular and stereo configurations on the EuRoC dataset is reduced by 45.3% and 36.2%, respectively.

Accurate Visual-Inertial SLAM by Feature Re-identification

Feb 26, 2021

Abstract: We propose a novel feature re-identification method for real-time visual-inertial SLAM. The front-end module of state-of-the-art visual-inertial SLAM methods (e.g., visual feature extraction and matching schemes) relies on feature tracks across image frames, which are easily broken in challenging scenarios, resulting in insufficient visual measurements and accumulated error in pose estimation. In this paper, we propose an efficient drift-less SLAM method that re-identifies existing features from a spatial-temporal sensitive sub-global map. The features re-identified over a long time span serve as augmented visual measurements and are incorporated into the optimization module, which gradually decreases the accumulated error in the long run and builds a drift-less global map in the system. Extensive experiments show that our feature re-identification method is both effective and efficient. Specifically, when combining feature re-identification with the state-of-the-art SLAM method [11], our method achieves 67.3% and 87.5% absolute translation error reduction, with only a small additional computational cost, on two public SLAM benchmark datasets, EuRoC and TUM-VI, respectively.
