Tianrui Li

Senior Member, IEEE

Kernel Alignment-based Multi-view Unsupervised Feature Selection with Sample-level Adaptive Graph Learning

Jan 12, 2026

Abstract:Although multi-view unsupervised feature selection (MUFS) has demonstrated success in dimensionality reduction for unlabeled multi-view data, most existing methods reduce feature redundancy by focusing on linear correlations among features but often overlook complex nonlinear dependencies. This limits the effectiveness of feature selection. In addition, existing methods fuse similarity graphs from multiple views by employing sample-invariant weights to preserve local structure. However, this process fails to account for differences in local neighborhood clarity among samples within each view, thereby hindering accurate characterization of the intrinsic local structure of the data. In this paper, we propose a Kernel Alignment-based multi-view unsupervised FeatUre selection with Sample-level adaptive graph lEarning method (KAFUSE) to address these issues. Specifically, we first employ kernel alignment with an orthogonal constraint to reduce feature redundancy in both linear and nonlinear relationships. Then, a cross-view consistent similarity graph is learned by applying sample-level fusion to each slice of a tensor formed by stacking similarity graphs from different views, which automatically adjusts the view weights for each sample during fusion. These two steps are integrated into a unified model for feature selection, enabling mutual enhancement between them. Extensive experiments on real multi-view datasets demonstrate the superiority of KAFUSE over state-of-the-art methods.
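For readers unfamiliar with kernel alignment, the following is a minimal sketch of the standard alignment score between two Gram matrices, i.e. their Frobenius inner product normalized by their Frobenius norms. It is not the KAFUSE objective: the orthogonal constraint and the feature selection model are not reproduced here, and the function names, the RBF kernel choice, and the `gamma` parameter are assumptions made for illustration.

```python
import math

def linear_kernel(X):
    """Gram matrix K[i][j] = <x_i, x_j> over the rows of X."""
    return [[sum(a * b for a, b in zip(xi, xj)) for xj in X] for xi in X]

def rbf_kernel(X, gamma=1.0):
    """Gaussian (RBF) Gram matrix; gamma is an illustrative bandwidth."""
    return [
        [math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(xi, xj))) for xj in X]
        for xi in X
    ]

def center(K):
    """Double-center a square matrix: K - row means - column means + grand mean."""
    n = len(K)
    row_means = [sum(row) / n for row in K]
    col_means = [sum(K[i][j] for i in range(n)) / n for j in range(n)]
    grand_mean = sum(row_means) / n
    return [
        [K[i][j] - row_means[i] - col_means[j] + grand_mean for j in range(n)]
        for i in range(n)
    ]

def frobenius_inner(A, B):
    """Sum of element-wise products of two equally sized matrices."""
    return sum(a * b for ra, rb in zip(A, B) for a, b in zip(ra, rb))

def kernel_alignment(K1, K2):
    """Alignment <K1, K2>_F / (||K1||_F * ||K2||_F); returns 0.0 if a norm is zero."""
    denom = math.sqrt(frobenius_inner(K1, K1) * frobenius_inner(K2, K2))
    return frobenius_inner(K1, K2) / denom if denom > 0 else 0.0

# Toy data: three samples with two features each (hypothetical values).
X = [[0.0, 1.0], [1.0, 0.0], [2.0, 2.0]]
score = kernel_alignment(center(linear_kernel(X)), center(rbf_kernel(X)))
```

The score is 1 when the two matrices are identical up to a positive scale factor, and by the Cauchy-Schwarz inequality it always lies in [-1, 1]. Using a nonlinear kernel such as the RBF kernel is what lets an alignment-based criterion respond to nonlinear as well as linear relationships.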

Joint Learning of Unsupervised Multi-view Feature and Instance Co-selection with Cross-view Imputation

Dec 17, 2025

Abstract:Feature and instance co-selection, which aims to reduce both feature dimensionality and sample size by identifying the most informative features and instances, has attracted considerable attention in recent years. However, when dealing with unlabeled incomplete multi-view data, where some samples are missing in certain views, existing methods typically first impute the missing data and then concatenate all views into a single dataset for subsequent co-selection. Such a strategy treats co-selection and missing data imputation as two independent processes, overlooking potential interactions between them. The inter-sample relationships gleaned from co-selection can aid imputation, which in turn enhances co-selection performance. Additionally, simply merging multi-view data fails to capture the complementary information among views, ultimately limiting co-selection effectiveness. To address these issues, we propose a novel co-selection method, termed Joint learning of Unsupervised multI-view feature and instance Co-selection with cross-viEw imputation (JUICE). JUICE first reconstructs incomplete multi-view data using available observations, bringing missing data recovery and feature and instance co-selection together in a unified framework. Then, JUICE leverages cross-view neighborhood information to learn inter-sample relationships and further refine the imputation of missing values during reconstruction. This enables the selection of more representative features and instances. Extensive experiments demonstrate that JUICE outperforms state-of-the-art methods.

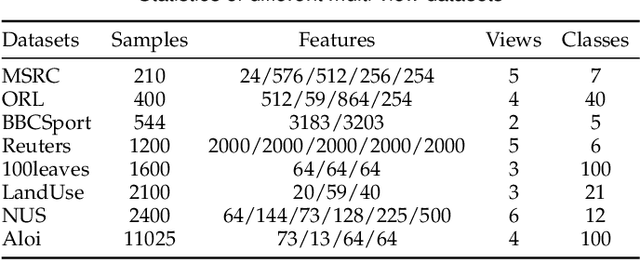

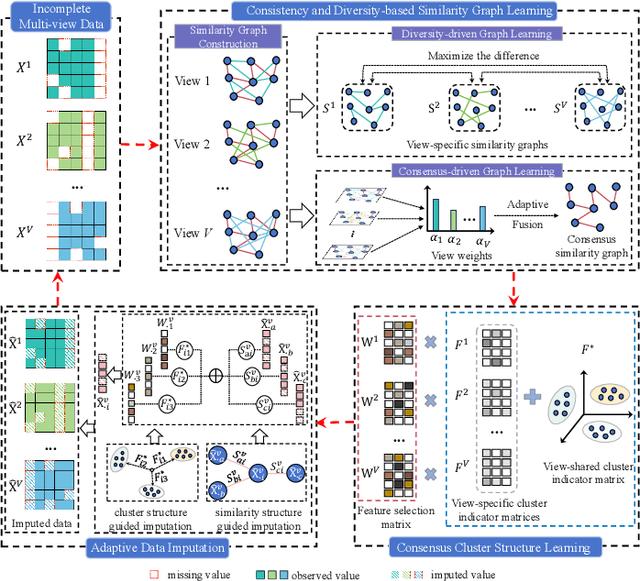

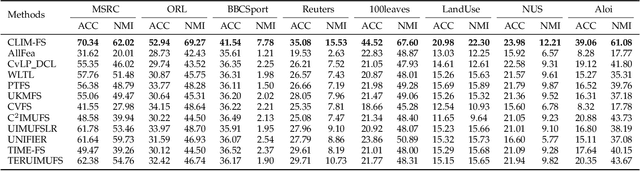

Cross-view Joint Learning for Mixed-Missing Multi-view Unsupervised Feature Selection

Nov 15, 2025

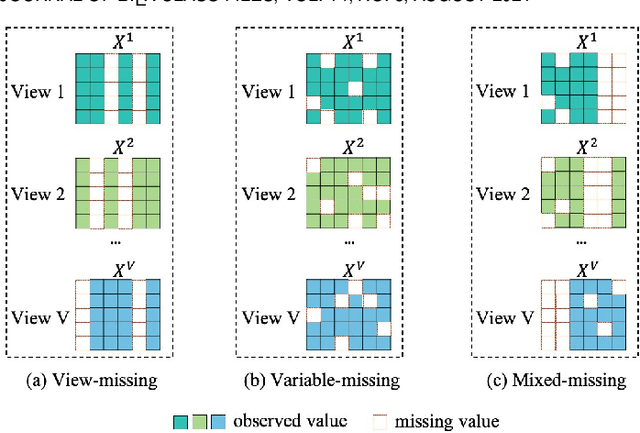

Abstract:Incomplete multi-view unsupervised feature selection (IMUFS), which aims to identify representative features from unlabeled multi-view data containing missing values, has received growing attention in recent years. Despite their promising performance, existing methods face three key challenges: 1) by focusing solely on the view-missing problem, they are not well-suited to the more prevalent mixed-missing scenario in practice, where some samples lack entire views while others lack only some features within a view; 2) insufficient utilization of consistency and diversity across views limits the effectiveness of feature selection; and 3) the lack of theoretical analysis makes it unclear how feature selection and data imputation interact during the joint learning process. To address these challenges, we propose CLIM-FS, a novel IMUFS method designed for the mixed-missing problem. Specifically, we integrate the imputation of both missing views and variables into a feature selection model based on nonnegative orthogonal matrix factorization, enabling the joint learning of feature selection and adaptive data imputation. Furthermore, we fully leverage consensus cluster structure and cross-view local geometrical structure to enhance the synergistic learning process. We also provide a theoretical analysis to clarify the underlying collaborative mechanism of CLIM-FS. Experimental results on eight real-world multi-view datasets demonstrate that CLIM-FS outperforms state-of-the-art methods.

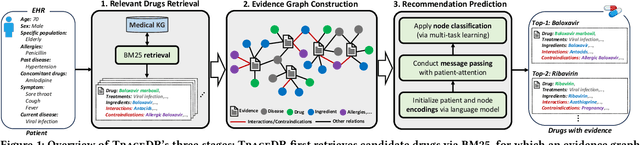

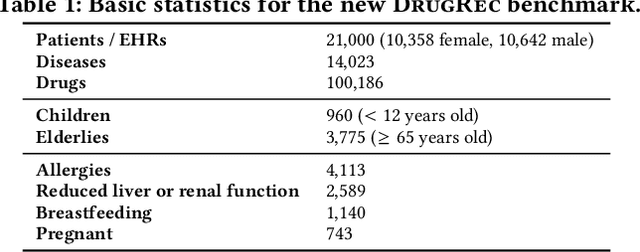

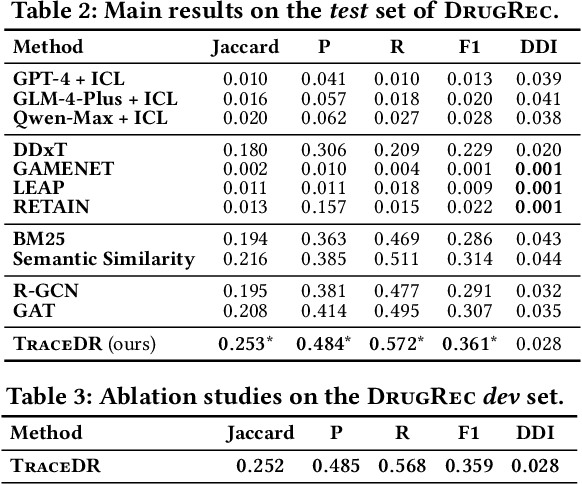

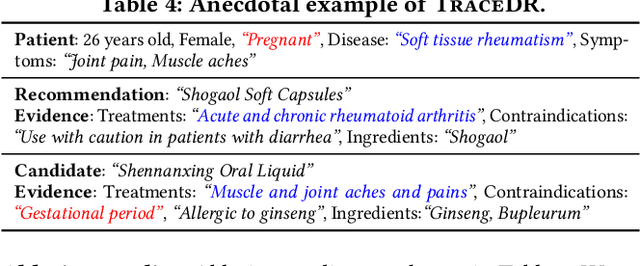

Traceable Drug Recommendation over Medical Knowledge Graphs

Oct 31, 2025

Abstract:Drug recommendation (DR) systems aim to support healthcare professionals in selecting appropriate medications based on patients' medical conditions. State-of-the-art approaches utilize deep learning techniques for improving DR, but fall short in providing any insight into the derivation process of recommendations -- a critical limitation in such high-stakes applications. We propose TraceDR, a novel DR system operating over a medical knowledge graph (MKG), which ensures access to large-scale and high-quality information. TraceDR simultaneously predicts drug recommendations and related evidence within a multi-task learning framework, enabling traceability of medication recommendations. To cover a more diverse set of diseases and drugs than existing works, we devise a framework for automatically constructing patient health records and release DrugRec, a new large-scale testbed for DR.

TRUST-FS: Tensorized Reliable Unsupervised Multi-View Feature Selection for Incomplete Data

Sep 16, 2025

Abstract:Multi-view unsupervised feature selection (MUFS), which selects informative features from multi-view unlabeled data, has attracted increasing research interest in recent years. Although great efforts have been devoted to MUFS, several challenges remain: 1) existing methods for incomplete multi-view data are limited to handling missing views and are unable to address the more general scenario of missing variables, where some features have missing values in certain views; 2) most methods address incomplete data by first imputing missing values and then performing feature selection, treating these two processes independently and overlooking their interactions; 3) missing data can result in an inaccurate similarity graph, which reduces the performance of feature selection. To address these challenges, we propose a novel MUFS method for incomplete multi-view data with missing variables, termed Tensorized Reliable UnSupervised mulTi-view Feature Selection (TRUST-FS). TRUST-FS introduces a new adaptive-weighted CP decomposition that simultaneously performs feature selection, missing-variable imputation, and view weight learning within a unified tensor factorization framework. By utilizing Subjective Logic to acquire trustworthy cross-view similarity information, TRUST-FS facilitates learning a reliable similarity graph, which subsequently guides feature selection and imputation. Comprehensive experimental results demonstrate the effectiveness and superiority of our method over state-of-the-art methods.

Harnessing Meta-Learning for Controllable Full-Frame Video Stabilization

Aug 26, 2025

Abstract:Video stabilization remains a fundamental problem in computer vision, and pixel-level synthesis solutions, which synthesize full-frame outputs, add to the complexity of this task. These methods aim to enhance stability while synthesizing full-frame videos, but the inherent diversity in motion profiles and visual content present in each video sequence makes robust generalization with fixed parameters difficult. To address this, we present a novel method that improves pixel-level synthesis video stabilization methods by rapidly adapting models to each input video at test time. The proposed approach takes advantage of low-level visual cues available during inference to improve both the stability and visual quality of the output. Notably, the proposed rapid adaptation achieves significant performance gains even with a single adaptation pass. We further propose a jerk localization module and a targeted adaptation strategy, which focuses the adaptation on high-jerk segments to maximize stability with fewer adaptation steps. The proposed methodology enables modern stabilizers to surpass long-standing SOTA approaches while retaining the full-frame nature of modern methods and offering users control mechanisms akin to those of classical approaches. Extensive experiments on diverse real-world datasets demonstrate the versatility of the proposed method. Our approach consistently improves the performance of various full-frame synthesis models in both qualitative and quantitative terms, including results on downstream applications.

CoIFNet: A Unified Framework for Multivariate Time Series Forecasting with Missing Values

Jun 16, 2025

Abstract:Multivariate time series forecasting (MTSF) is a critical task with broad applications in domains such as meteorology, transportation, and economics. Nevertheless, pervasive missing values caused by sensor failures or human errors significantly degrade forecasting accuracy. Prior efforts usually employ an impute-then-forecast paradigm, leading to suboptimal predictions due to error accumulation and misaligned objectives between the two stages. To address this challenge, we propose the Collaborative Imputation-Forecasting Network (CoIFNet), a novel framework that unifies imputation and forecasting to achieve robust MTSF in the presence of missing values. Specifically, CoIFNet takes the observed values, mask matrix and timestamp embeddings as input, processing them sequentially through the Cross-Timestep Fusion (CTF) and Cross-Variate Fusion (CVF) modules to capture temporal dependencies that are robust to missing values. We provide theoretical justifications for how our CoIFNet learning objective improves the performance bound of MTSF with missing values. Through extensive experiments on challenging MTSF benchmarks, we demonstrate the effectiveness and computational efficiency of our proposed approach across diverse missing-data scenarios. For example, CoIFNet outperforms the state-of-the-art method by 24.40% (23.81%) at a point (block) missing rate of 0.6, while improving memory and time efficiency by 4.3× and 2.1×, respectively.

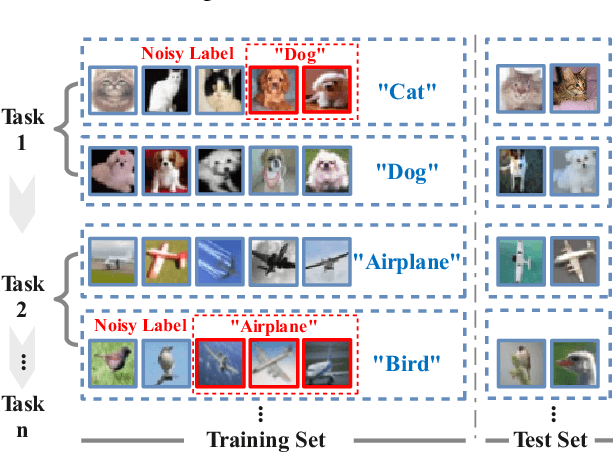

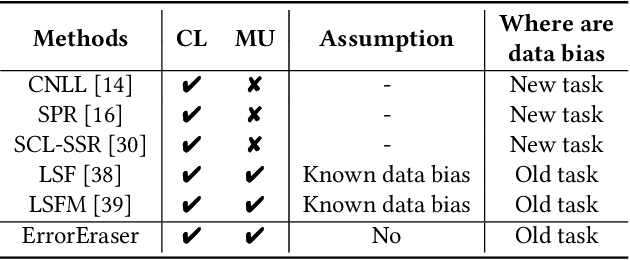

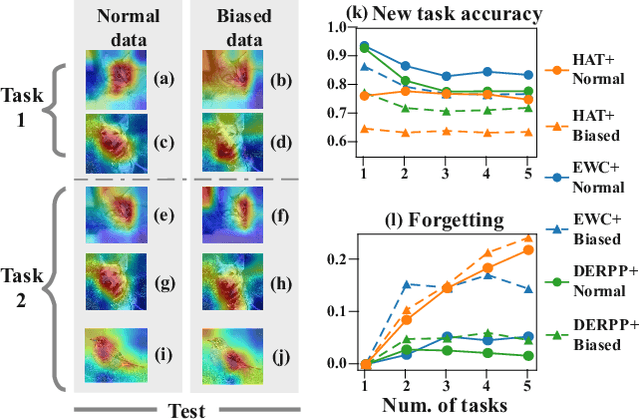

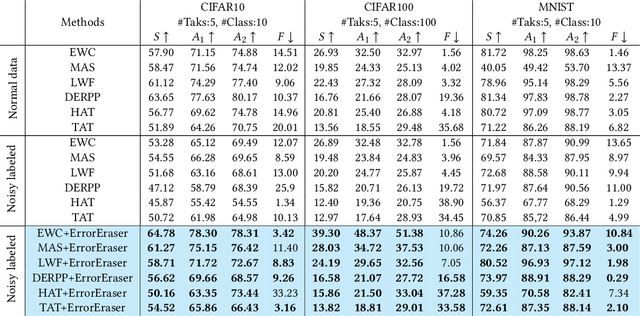

ErrorEraser: Unlearning Data Bias for Improved Continual Learning

Jun 11, 2025

Abstract:Continual Learning (CL) primarily aims to retain knowledge to prevent catastrophic forgetting and transfer knowledge to facilitate learning new tasks. Unlike traditional methods, we propose a novel perspective: CL not only needs to prevent forgetting, but also requires intentional forgetting. This arises from existing CL methods ignoring biases in real-world data, leading the model to learn spurious correlations that transfer and amplify across tasks. From feature extraction and prediction results, we find that data biases simultaneously reduce CL's ability to retain and transfer knowledge. To address this, we propose ErrorEraser, a universal plugin that removes erroneous memories caused by biases in CL, enhancing performance in both new and old tasks. ErrorEraser consists of two modules: Error Identification and Error Erasure. The former learns the probability density distribution of task data in the feature space without prior knowledge, enabling accurate identification of potentially biased samples. The latter ensures only erroneous knowledge is erased by shifting the decision space of representative outlier samples. Additionally, an incremental feature distribution learning strategy is designed to reduce the resource overhead during error identification in downstream tasks. Extensive experimental results show that ErrorEraser significantly mitigates the negative impact of data biases, achieving higher accuracy and lower forgetting rates across three types of CL methods. The code is available at https://github.com/diadai/ErrorEraser.

Rethinking Gradient-based Adversarial Attacks on Point Cloud Classification

May 28, 2025

Abstract:Gradient-based adversarial attacks have become a dominant approach for evaluating the robustness of point cloud classification models. However, existing methods often rely on uniform update rules that fail to consider the heterogeneous nature of point clouds, resulting in excessive and perceptible perturbations. In this paper, we rethink the design of gradient-based attacks by analyzing the limitations of conventional gradient update mechanisms and propose two new strategies to improve both attack effectiveness and imperceptibility. First, we introduce WAAttack, a novel framework that incorporates weighted gradients and an adaptive step-size strategy to account for the non-uniform contribution of points during optimization. This approach enables more targeted and subtle perturbations by dynamically adjusting updates according to the local structure and sensitivity of each point. Second, we propose SubAttack, a complementary strategy that decomposes the point cloud into subsets and focuses perturbation efforts on structurally critical regions. Together, these methods represent a principled rethinking of gradient-based adversarial attacks for 3D point cloud classification. Extensive experiments demonstrate that our approach outperforms state-of-the-art baselines in generating highly imperceptible adversarial examples. Code will be released upon paper acceptance.

Less is More: Efficient Point Cloud Reconstruction via Multi-Head Decoders

May 25, 2025

Abstract:We challenge the common assumption that deeper decoder architectures always yield better performance in point cloud reconstruction. Our analysis reveals that, beyond a certain depth, increasing decoder complexity leads to overfitting and degraded generalization. Additionally, we propose a novel multi-head decoder architecture that exploits the inherent redundancy in point clouds by reconstructing complete shapes from multiple independent heads, each operating on a distinct subset of points. The final output is obtained by concatenating the predictions from all heads, enhancing both diversity and fidelity. Extensive experiments on ModelNet40 and ShapeNetPart demonstrate that our approach achieves consistent improvements across key metrics--including Chamfer Distance (CD), Hausdorff Distance (HD), Earth Mover's Distance (EMD), and F1-score--outperforming standard single-head baselines. Our findings highlight that output diversity and architectural design can be more critical than depth alone for effective and efficient point cloud reconstruction.
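As a reference for the first metric named above, here is a minimal brute-force sketch of the Chamfer Distance between two point sets. Conventions differ between papers (squared or unsquared distances, sums or means), and the abstract does not say which one the authors use, so the variant below (mean of squared nearest-neighbour distances, summed over both directions) is an assumption, as is the function name `chamfer_distance`.

```python
def chamfer_distance(P, Q):
    """Symmetric Chamfer Distance between two non-empty point sets.

    Assumed convention: for each point, take the squared Euclidean distance
    to its nearest neighbour in the other set, average over the set, and
    add the two directions. Brute force, O(len(P) * len(Q)).
    """
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    def one_sided(A, B):
        return sum(min(sq_dist(a, b) for b in B) for a in A) / len(A)

    return one_sided(P, Q) + one_sided(Q, P)

# Toy example: Q is P shifted by one unit along z (hypothetical values).
P = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
Q = [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0)]
print(chamfer_distance(P, Q))  # 2.0
```

In the toy example every point has a nearest neighbour at squared distance 1, so each direction averages to 1 and the total is 2. For clouds with thousands of points, a spatial index or a batched GPU implementation would normally replace the nested loops.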
