Yujie Li

Nighttime Hazy Image Enhancement via Progressively and Mutually Reinforcing Night-Haze Priors

Jan 05, 2026

Abstract:Enhancing the visibility of nighttime hazy images is challenging due to the complex degradation distributions. Existing methods mainly address a single type of degradation (e.g., haze or low-light) at a time, ignoring the interplay of different degradation types and resulting in limited visibility improvement. We observe that the domain knowledge shared between low-light and haze priors can be reinforced mutually for better visibility. Based on this key insight, in this paper, we propose a novel framework that enhances visibility in nighttime hazy images by reinforcing the intrinsic consistency between haze and low-light priors mutually and progressively. In particular, our model utilizes image-, patch-, and pixel-level experts that operate across visual and frequency domains to recover global scene structure, regional patterns, and fine-grained details progressively. A frequency-aware router is further introduced to adaptively guide the contribution of each expert, ensuring robust image restoration. Extensive experiments demonstrate the superior performance of our model on nighttime dehazing benchmarks both quantitatively and qualitatively. Moreover, we showcase the generalizability of our model in daytime dehazing and low-light enhancement tasks.

API: Empowering Generalizable Real-World Image Dehazing via Adaptive Patch Importance Learning

Jan 05, 2026

Abstract:Real-world image dehazing is a fundamental yet challenging task in low-level vision. Existing learning-based methods often suffer from significant performance degradation when applied to complex real-world hazy scenes, primarily due to limited training data and the intrinsic complexity of haze density distributions. To address these challenges, we introduce a novel Adaptive Patch Importance-aware (API) framework for generalizable real-world image dehazing. Specifically, our framework consists of an Automatic Haze Generation (AHG) module and a Density-aware Haze Removal (DHR) module. AHG provides a hybrid data augmentation strategy by generating realistic and diverse hazy images as additional high-quality training data. DHR considers hazy regions with varying haze density distributions for generalizable real-world image dehazing in an adaptive patch importance-aware manner. To alleviate the ambiguity of the dehazed image details, we further introduce a new Multi-Negative Contrastive Dehazing (MNCD) loss, which fully utilizes information from multiple negative samples across both spatial and frequency domains. Extensive experiments demonstrate that our framework achieves state-of-the-art performance across multiple real-world benchmarks, delivering strong results in both quantitative metrics and qualitative visual quality, and exhibiting robust generalization across diverse haze distributions.

APT: Affine Prototype-Timestamp For Time Series Forecasting Under Distribution Shift

Nov 17, 2025

Abstract:Time series forecasting under distribution shift remains challenging, as existing deep learning models often rely on local statistical normalization (e.g., mean and variance) that fails to capture global distribution shift. Methods like RevIN and its variants attempt to decouple distribution and pattern but still struggle with missing values, noisy observations, and invalid channel-wise affine transformation. To address these limitations, we propose Affine Prototype Timestamp (APT), a lightweight and flexible plug-in module that injects global distribution features into the normalization-forecasting pipeline. By leveraging timestamp-conditioned prototype learning, APT dynamically generates affine parameters that modulate both input and output series, enabling the backbone to learn from self-supervised, distribution-aware clustered instances. APT is compatible with arbitrary forecasting backbones and normalization strategies while introducing minimal computational overhead. Extensive experiments across six benchmark datasets and multiple backbone-normalization combinations demonstrate that APT significantly improves forecasting performance under distribution shift.
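For readers unfamiliar with the "local statistical normalization" this abstract refers to, the following is a minimal sketch of a reversible, RevIN-style instance normalization with an affine step. It is not the APT module: the function names, the scalar `gamma`/`beta`, and the `eps` constant are illustrative assumptions, and here the affine parameters are fixed inputs, whereas the abstract describes generating them from timestamp-conditioned prototypes.

```python
import statistics


def normalize(series, gamma=1.0, beta=0.0, eps=1e-5):
    """Standardize one input window with its own mean/std, then apply an affine map.

    gamma and beta are hypothetical scalar affine parameters (assumption).
    """
    mean = statistics.fmean(series)
    std = (statistics.pvariance(series) + eps) ** 0.5
    normed = [gamma * (x - mean) / std + beta for x in series]
    return normed, (mean, std)


def denormalize(forecast, stats, gamma=1.0, beta=0.0):
    """Undo the affine map and restore the original scale (gamma must be nonzero)."""
    mean, std = stats
    return [((y - beta) / gamma) * std + mean for y in forecast]


series = [10.0, 12.0, 14.0, 16.0]
normed, stats = normalize(series, gamma=2.0, beta=0.5)
restored = denormalize(normed, stats, gamma=2.0, beta=0.5)
```

In a forecasting pipeline, `normalize` would be applied to the input window and `denormalize` to the backbone's output, so the backbone only sees standardized values. Because the statistics come from a single window, they are local, which is the limitation the abstract points to.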

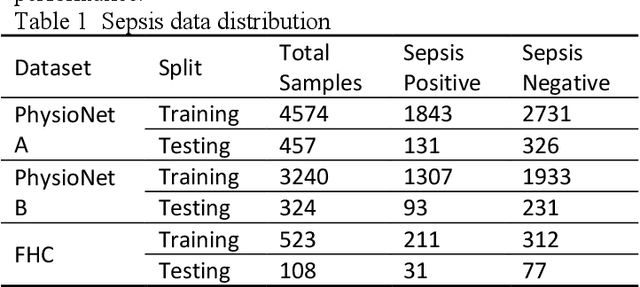

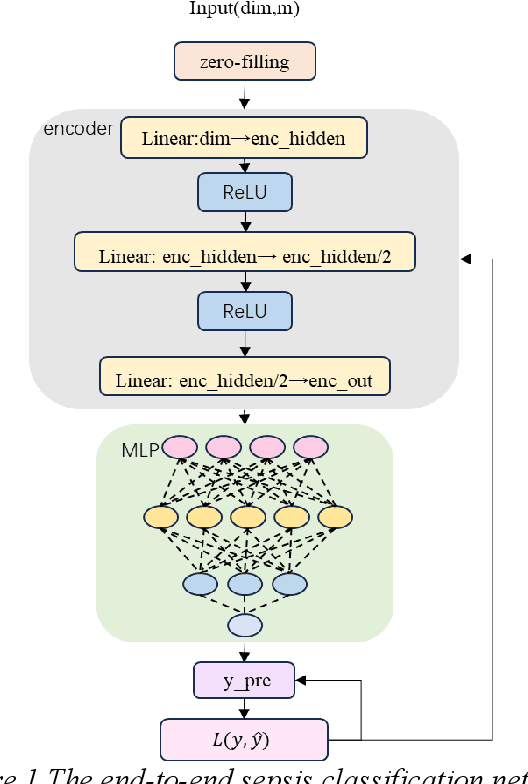

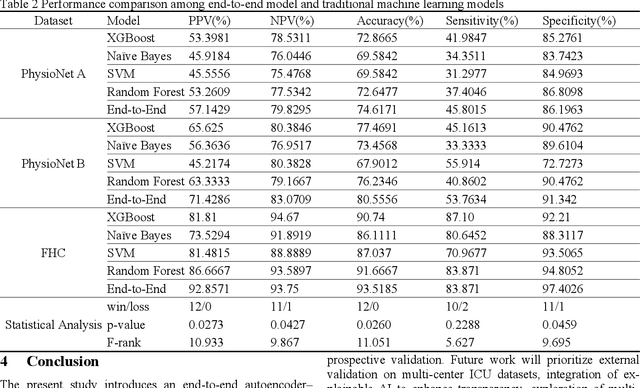

End to End Autoencoder MLP Framework for Sepsis Prediction

Aug 26, 2025

Abstract:Sepsis is a life-threatening condition that requires timely detection in intensive care settings. Traditional machine learning approaches, including Naive Bayes, Support Vector Machine (SVM), Random Forest, and XGBoost, often rely on manual feature engineering and struggle with the irregular, incomplete time-series data commonly present in electronic health records. We introduce an end-to-end deep learning framework integrating an unsupervised autoencoder for automatic feature extraction with a multilayer perceptron classifier for binary sepsis risk prediction. To enhance clinical applicability, we implement a customized downsampling strategy that extracts segments of high information density during training and a non-overlapping dynamic sliding window mechanism for real-time inference. Preprocessed time-series data are represented as fixed-dimension vectors with explicit missingness indicators, mitigating bias and noise. We validate our approach on three ICU cohorts. Our end-to-end model achieves accuracies of 74.6 percent, 80.6 percent, and 93.5 percent, respectively, consistently outperforming traditional machine learning baselines. These results demonstrate the framework's superior robustness, generalizability, and clinical utility for early sepsis detection across heterogeneous ICU environments.
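The representation described above (fixed-dimension vectors with explicit missingness indicators, cut from non-overlapping windows) can be illustrated with a small sketch. Everything here is an assumption for illustration: the function names, the zero fill value, the use of `None` for a missing measurement, and the fixed window size. The "dynamic" aspect of the paper's sliding window is not modeled.

```python
def non_overlapping_windows(records, size):
    """Yield consecutive windows of `size` rows that share no rows with each other."""
    for start in range(0, len(records) - size + 1, size):
        yield records[start:start + size]


def encode_window(window, fill=0.0):
    """Flatten a window into [values..., missing-flags...] of fixed length.

    A missing measurement (None) becomes `fill` in the value half and 1.0 in the
    flag half, so a downstream model can tell "absent" apart from "measured as 0".
    """
    values, flags = [], []
    for row in window:
        for x in row:
            missing = x is None
            values.append(fill if missing else float(x))
            flags.append(1.0 if missing else 0.0)
    return values + flags


# Hypothetical hourly rows: (temperature, heart rate); None marks a missing reading.
records = [[98.6, None], [99.1, 80], [None, 85], [100.2, None], [101.0, 90]]
windows = list(non_overlapping_windows(records, size=2))
vectors = [encode_window(w) for w in windows]
```

With five rows and a window size of two, the trailing incomplete window is dropped, and each encoded vector has length `2 * size * num_features`.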

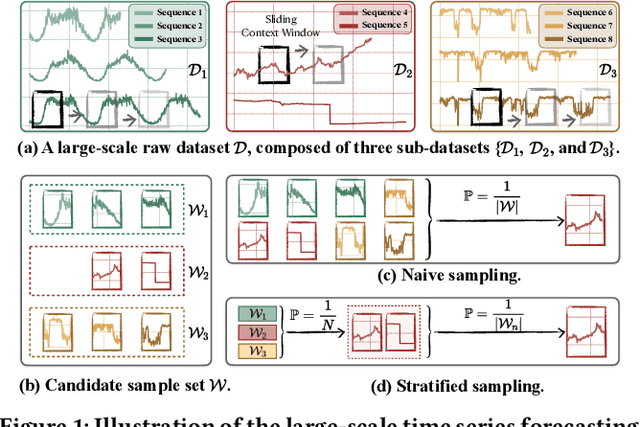

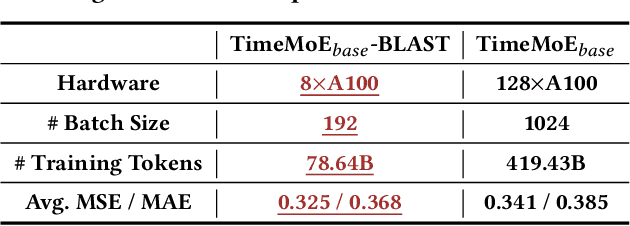

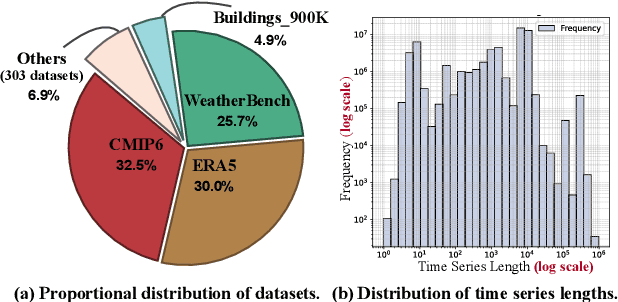

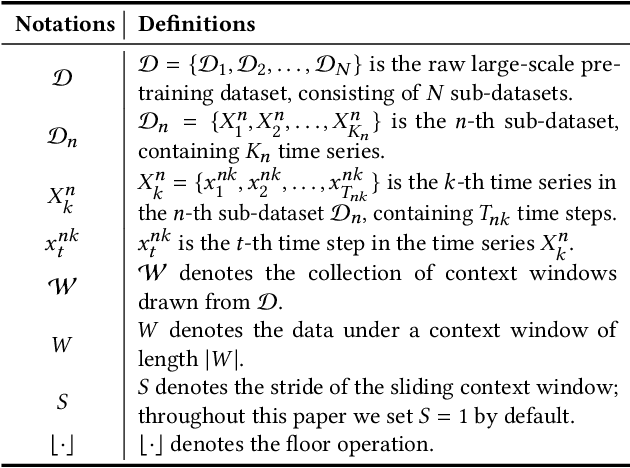

BLAST: Balanced Sampling Time Series Corpus for Universal Forecasting Models

May 23, 2025

Abstract:The advent of universal time series forecasting models has revolutionized zero-shot forecasting across diverse domains, yet the critical role of data diversity in training these models remains underexplored. Existing large-scale time series datasets often suffer from inherent biases and imbalanced distributions, leading to suboptimal model performance and generalization. To address this gap, we introduce BLAST, a novel pre-training corpus designed to enhance data diversity through a balanced sampling strategy. First, BLAST incorporates 321 billion observations from publicly available datasets and employs a comprehensive suite of statistical metrics to characterize time series patterns. Then, to facilitate pattern-oriented sampling, the data is implicitly clustered using grid-based partitioning. Furthermore, by integrating grid sampling and grid mixup techniques, BLAST ensures a balanced and representative coverage of diverse patterns. Experimental results demonstrate that models pre-trained on BLAST achieve state-of-the-art performance with a fraction of the computational resources and training tokens required by existing methods. Our findings highlight the pivotal role of data diversity in improving both training efficiency and model performance for the universal forecasting task.
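The idea of grid-based partitioning followed by balanced sampling can be sketched as follows. This is an illustrative sketch only, not the BLAST pipeline: the two-dimensional feature vectors, the `bin_width` parameter, and both function names are assumptions, and grid mixup is not shown.

```python
import random
from collections import defaultdict


def grid_cell(features, bin_width):
    """Map a feature vector to the integer coordinates of its grid cell."""
    return tuple(int(f // bin_width) for f in features)


def balanced_sample(feature_table, bin_width, k, seed=0):
    """Draw k series ids: pick a cell uniformly, then a member uniformly within it."""
    rng = random.Random(seed)
    cells = defaultdict(list)
    for series_id, features in feature_table.items():
        cells[grid_cell(features, bin_width)].append(series_id)
    cell_keys = sorted(cells)
    return [rng.choice(cells[rng.choice(cell_keys)]) for _ in range(k)]


# Hypothetical corpus: 98 series with a common pattern, 2 with a rare one.
feature_table = {f"common_{i}": (0.1, 0.2) for i in range(98)}
feature_table.update({"rare_0": (5.3, 7.9), "rare_1": (5.4, 7.6)})
picked = balanced_sample(feature_table, bin_width=1.0, k=1000)
rare_share = sum(name.startswith("rare") for name in picked) / len(picked)
```

Sampling uniformly over series would select the rare pattern about 2 percent of the time. Sampling uniformly over occupied cells gives each pattern region equal weight, so here the rare cell is drawn roughly half the time.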

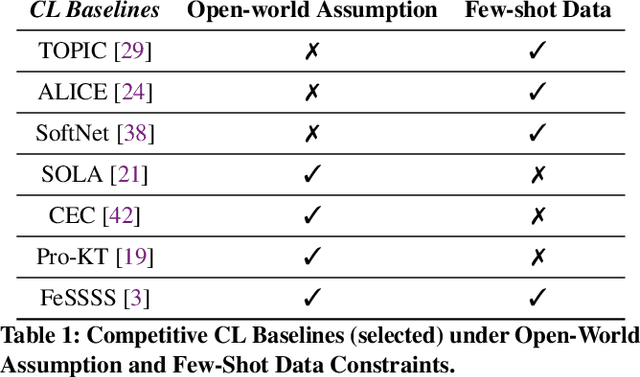

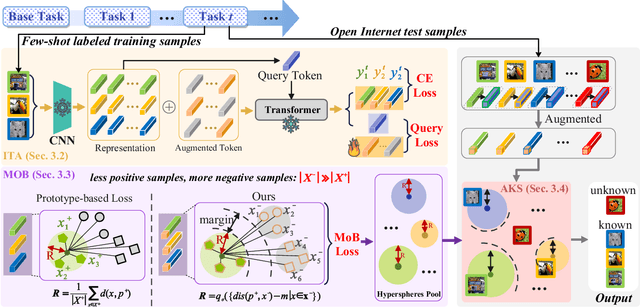

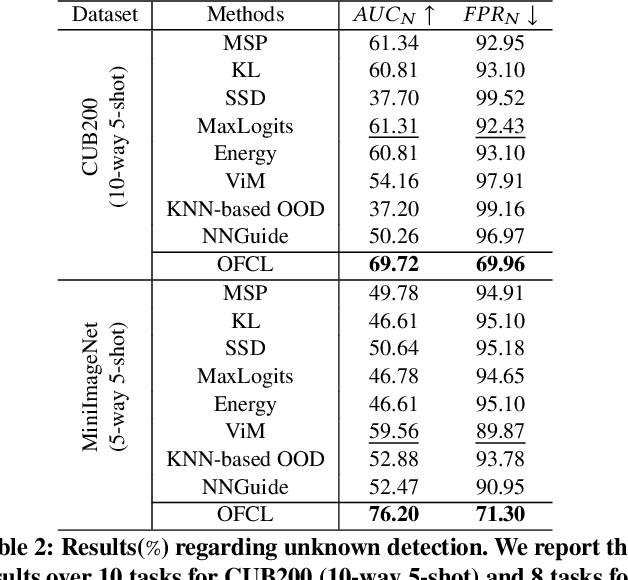

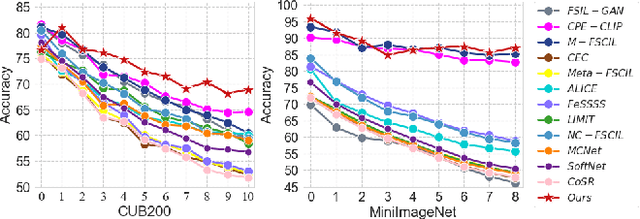

Improving Open-world Continual Learning under the Constraints of Scarce Labeled Data

Feb 28, 2025

Abstract:Open-world continual learning (OWCL) adapts to sequential tasks with open samples, learning knowledge incrementally while preventing forgetting. However, existing OWCL still requires a large amount of labeled data for training, which is often impractical in real-world applications. Given that new categories/entities typically come with limited annotations and in small quantities, a more realistic situation is OWCL with scarce labeled data, i.e., few-shot training samples. Hence, this paper investigates the problem of open-world few-shot continual learning (OFCL), which is challenging in (i) learning unbounded tasks without forgetting previous knowledge while avoiding overfitting, (ii) constructing compact decision boundaries for open detection with limited labeled data, and (iii) transferring knowledge about knowns and unknowns and even updating the unknowns to knowns once the labels of open samples are learned. In response, we propose a novel OFCL framework that integrates three key components: (1) an instance-wise token augmentation (ITA) that represents and enriches sample representations with additional knowledge, (2) a margin-based open boundary (MOB) that supports open detection as new tasks emerge over time, and (3) an adaptive knowledge space (AKS) that endows unknowns with knowledge so that they can be updated to knowns. Finally, extensive experiments show that the proposed OFCL framework outperforms all baselines by a remarkable margin, demonstrating its practical importance and reproducibility. The source code is released at https://github.com/liyj1201/OFCL.

Exploring Open-world Continual Learning with Knowns-Unknowns Knowledge Transfer

Feb 27, 2025

Abstract:Open-World Continual Learning (OWCL) is a challenging paradigm where models must incrementally learn new knowledge without forgetting while operating under an open-world assumption. This requires handling incomplete training data and recognizing unknown samples during inference. However, existing OWCL methods often treat open detection and continual learning as separate tasks, limiting their ability to integrate open-set detection and incremental classification in OWCL. Moreover, current approaches primarily focus on transferring knowledge from known samples, neglecting the insights derived from unknown/open samples. To address these limitations, we formalize four distinct OWCL scenarios and conduct comprehensive empirical experiments to explore potential challenges in OWCL. Our findings reveal a significant interplay between the open detection of unknowns and incremental classification of knowns, challenging a widely held assumption that unknown detection and known classification are orthogonal processes. Building on our insights, we propose HoliTrans (Holistic Knowns-Unknowns Knowledge Transfer), a novel OWCL framework that integrates nonlinear random projection (NRP) to create a more linearly separable embedding space and distribution-aware prototypes (DAPs) to construct an adaptive knowledge space. Particularly, our HoliTrans effectively supports knowledge transfer for both known and unknown samples while dynamically updating representations of open samples during OWCL. Extensive experiments across various OWCL scenarios demonstrate that HoliTrans outperforms 22 competitive baselines, bridging the gap between OWCL theory and practice and providing a robust, scalable framework for advancing open-world learning paradigms.

Order-Robust Class Incremental Learning: Graph-Driven Dynamic Similarity Grouping

Feb 27, 2025

Abstract:Class Incremental Learning (CIL) requires a model to continuously learn new classes without forgetting previously learned ones. While recent studies have significantly alleviated the problem of catastrophic forgetting (CF), a growing body of research reveals that the order in which classes appear has a significant influence on CIL models. Specifically, prioritizing the learning of classes with lower similarity will enhance the model's generalization performance and its ability to mitigate forgetting. Hence, it is imperative to develop an order-robust class incremental learning model that maintains stable performance even when faced with varying levels of class similarity in different orders. In response, we first provide additional theoretical analysis, which reveals that when the similarity among a group of classes is lower, the model demonstrates increased robustness to the class order. Then, we introduce a novel Graph-Driven Dynamic Similarity Grouping (GDDSG) method, which leverages a graph coloring algorithm for class-based similarity grouping. The proposed approach trains independent CIL models for each group of classes, ultimately combining these models to facilitate joint prediction. Experimental results demonstrate that our method effectively addresses the issue of class order sensitivity while achieving optimal performance in both model accuracy and anti-forgetting capability. Our code is available at https://github.com/AIGNLAI/GDDSG.
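To make the link between graph coloring and low-similarity grouping concrete, here is a minimal sketch under stated assumptions. It is not the GDDSG implementation: the similarity matrix, the fixed `threshold`, the greedy highest-degree-first coloring, and the function name are all illustrative choices. Classes that are too similar are joined by an edge, and a proper coloring then guarantees that no two such classes share a group.

```python
def group_by_coloring(similarity, threshold):
    """Greedy graph coloring: classes with similarity above `threshold`
    are connected by an edge and therefore never placed in the same group."""
    n = len(similarity)
    # Adjacency sets of the "too similar" graph.
    neighbors = [
        {j for j in range(n) if j != i and similarity[i][j] > threshold}
        for i in range(n)
    ]
    color = {}
    # Visit high-degree classes first (a common greedy heuristic).
    for node in sorted(range(n), key=lambda i: (-len(neighbors[i]), i)):
        used = {color[m] for m in neighbors[node] if m in color}
        c = 0
        while c in used:
            c += 1
        color[node] = c
    groups = {}
    for node in range(n):
        groups.setdefault(color[node], []).append(node)
    return [groups[c] for c in sorted(groups)]


# Hypothetical pairwise class similarities (symmetric, 1.0 on the diagonal).
similarity = [
    [1.0, 0.9, 0.1, 0.2],
    [0.9, 1.0, 0.8, 0.1],
    [0.1, 0.8, 1.0, 0.3],
    [0.2, 0.1, 0.3, 1.0],
]
groups = group_by_coloring(similarity, threshold=0.5)
# groups == [[1, 3], [0, 2]]
```

Each resulting group contains only classes whose pairwise similarity is at or below the threshold. Per the abstract, a separate model would then be trained per group and their predictions combined.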

UniRS: Unifying Multi-temporal Remote Sensing Tasks through Vision Language Models

Dec 30, 2024

Abstract:The domain gap between remote sensing imagery and natural images has recently received widespread attention and Vision-Language Models (VLMs) have demonstrated excellent generalization performance in remote sensing multimodal tasks. However, current research is still limited in exploring how remote sensing VLMs handle different types of visual inputs. To bridge this gap, we introduce UniRS, the first vision-language model unifying multi-temporal remote sensing tasks across various types of visual input. UniRS supports single images, dual-time image pairs, and videos as input, enabling comprehensive remote sensing temporal analysis within a unified framework. We adopt a unified visual representation approach, enabling the model to accept various visual inputs. For dual-time image pair tasks, we customize a change extraction module to further enhance the extraction of spatiotemporal features. Additionally, we design a prompt augmentation mechanism tailored to the model's reasoning process, utilizing the prior knowledge of the general-purpose VLM to provide clues for UniRS. To promote multi-task knowledge sharing, the model is jointly fine-tuned on a mixed dataset. Experimental results show that UniRS achieves state-of-the-art performance across diverse tasks, including visual question answering, change captioning, and video scene classification, highlighting its versatility and effectiveness in unifying these multi-temporal remote sensing tasks. Our code and dataset will be released soon.

Ordering-Based Causal Discovery for Linear and Nonlinear Relations

Oct 08, 2024

Abstract:Identifying causal relations from purely observational data typically requires additional assumptions on relations and/or noise. Most current methods restrict their analysis to datasets that are assumed to have pure linear or nonlinear relations, which is often not reflective of real-world datasets that contain a combination of both. This paper presents CaPS, an ordering-based causal discovery algorithm that effectively handles linear and nonlinear relations. CaPS introduces a novel identification criterion for topological ordering and incorporates the concept of "parent score" during the post-processing optimization stage. These scores quantify the strength of the average causal effect, helping to accelerate the pruning process and correct inaccurate predictions in the pruning step. Experimental results demonstrate that our proposed solutions outperform state-of-the-art baselines on synthetic data with varying ratios of linear and nonlinear relations. The results obtained from real-world data also support the competitiveness of CaPS. Code and datasets are available at https://github.com/E2real/CaPS.
