Marcello Bonsangue

Improving Open-world Continual Learning under the Constraints of Scarce Labeled Data

Feb 28, 2025

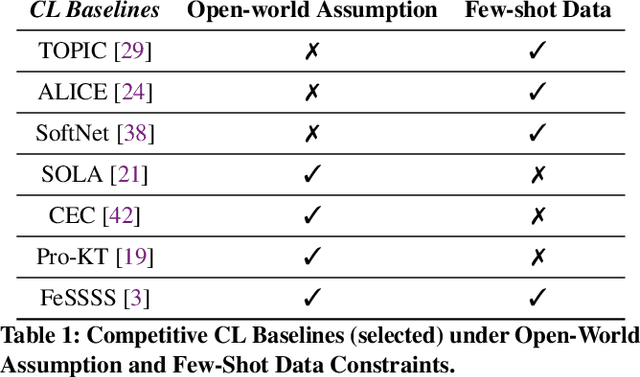

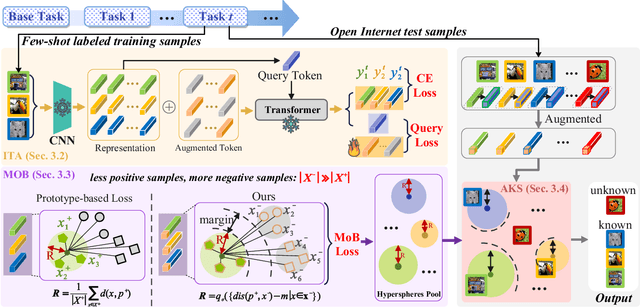

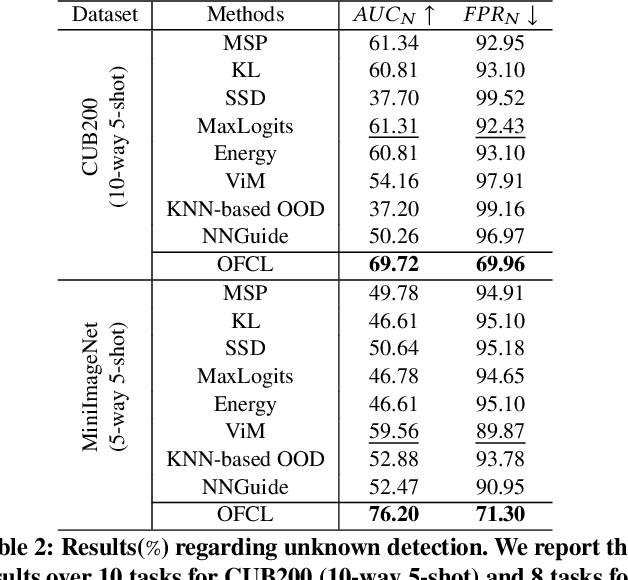

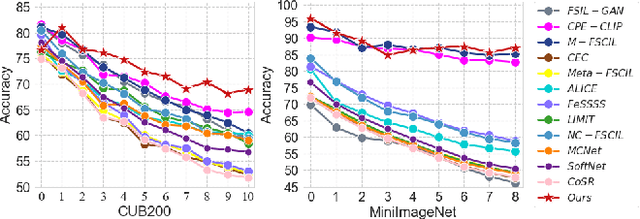

Abstract: Open-world continual learning (OWCL) adapts to sequential tasks with open samples, learning knowledge incrementally while preventing forgetting. However, existing OWCL still requires a large amount of labeled data for training, which is often impractical in real-world applications. Given that new categories/entities typically arrive in small quantities and with limited annotations, a more realistic setting is OWCL with scarce labeled data, i.e., few-shot training samples. Hence, this paper investigates the problem of open-world few-shot continual learning (OFCL), which is challenging in (i) learning unbounded tasks without forgetting previous knowledge while avoiding overfitting, (ii) constructing compact decision boundaries for open detection with limited labeled data, and (iii) transferring knowledge about knowns and unknowns, and even updating unknowns to knowns once the labels of open samples are learned. In response, we propose a novel OFCL framework that integrates three key components: (1) an instance-wise token augmentation (ITA) that represents and enriches sample representations with additional knowledge, (2) a margin-based open boundary (MOB) that supports open detection as new tasks emerge over time, and (3) an adaptive knowledge space (AKS) that endows unknowns with knowledge for updating them from unknowns to knowns. Finally, extensive experiments show that the proposed OFCL framework substantially outperforms all baselines, with practical importance and reproducibility. The source code is released at https://github.com/liyj1201/OFCL.

Exploring Open-world Continual Learning with Knowns-Unknowns Knowledge Transfer

Feb 27, 2025

Abstract: Open-World Continual Learning (OWCL) is a challenging paradigm where models must incrementally learn new knowledge without forgetting while operating under an open-world assumption. This requires handling incomplete training data and recognizing unknown samples during inference. However, existing OWCL methods often treat open detection and continual learning as separate tasks, limiting their ability to integrate open-set detection and incremental classification in OWCL. Moreover, current approaches primarily focus on transferring knowledge from known samples, neglecting the insights derived from unknown/open samples. To address these limitations, we formalize four distinct OWCL scenarios and conduct comprehensive empirical experiments to explore potential challenges in OWCL. Our findings reveal a significant interplay between the open detection of unknowns and the incremental classification of knowns, challenging the widely held assumption that unknown detection and known classification are orthogonal processes. Building on these insights, we propose HoliTrans (Holistic Knowns-Unknowns Knowledge Transfer), a novel OWCL framework that integrates nonlinear random projection (NRP) to create a more linearly separable embedding space and distribution-aware prototypes (DAPs) to construct an adaptive knowledge space. In particular, HoliTrans supports knowledge transfer for both known and unknown samples while dynamically updating representations of open samples during OWCL. Extensive experiments across various OWCL scenarios demonstrate that HoliTrans outperforms 22 competitive baselines, bridging the gap between OWCL theory and practice and providing a robust, scalable framework for advancing open-world learning paradigms.
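
To make the two ingredients named in this abstract more concrete, the following is a minimal NumPy sketch of the general idea: a fixed random projection followed by a nonlinearity, class-mean prototypes in the projected space, and rejection of samples that lie far from every prototype. It is not the HoliTrans implementation. The function names, the choice of ReLU, the plain class means (rather than distribution-aware prototypes), the Euclidean distance threshold, and the toy data are all assumptions made for illustration.

```python
import numpy as np

def make_projection(in_dim, out_dim, seed=0):
    # Fixed (untrained) random matrix; scaling keeps projected values O(1).
    rng = np.random.default_rng(seed)
    return rng.standard_normal((in_dim, out_dim)) / np.sqrt(in_dim)

def project(x, W):
    # Nonlinear random projection: linear map followed by ReLU (an assumption).
    return np.maximum(x @ W, 0.0)

def class_prototypes(z, y):
    # One prototype per known class: the mean of its projected samples.
    labels = np.unique(y)
    protos = np.stack([z[y == c].mean(axis=0) for c in labels])
    return labels, protos

def predict_open(z, labels, protos, threshold):
    # Nearest-prototype classification; samples farther than `threshold`
    # from every prototype are flagged as unknown (label -1).
    d = np.linalg.norm(z[:, None, :] - protos[None, :, :], axis=2)
    pred = labels[d.argmin(axis=1)]
    return np.where(d.min(axis=1) > threshold, -1, pred)

# Toy few-shot data: two known classes with five samples each (hypothetical).
rng = np.random.default_rng(1)
x_train = np.vstack([
    5.0 + 0.1 * rng.standard_normal((5, 8)),
    -5.0 + 0.1 * rng.standard_normal((5, 8)),
])
y_train = np.array([0] * 5 + [1] * 5)

W = make_projection(8, 64)
z_train = project(x_train, W)
labels, protos = class_prototypes(z_train, y_train)

# Heuristic threshold: twice the largest training distance to its own prototype.
own = protos[np.searchsorted(labels, y_train)]
threshold = 2.0 * np.linalg.norm(z_train - own, axis=1).max()

# One sample far from both known classes, appended to the training samples.
x_far = np.full((1, 8), 50.0)
pred = predict_open(project(np.vstack([x_train, x_far]), W), labels, protos, threshold)
```

In this sketch, promoting an unknown to a known would amount to adding a new row to `protos` once labels for the rejected samples become available; how the paper actually performs that update is not specified in the abstract.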
