Xuemei Cao

ErrorEraser: Unlearning Data Bias for Improved Continual Learning

Jun 11, 2025

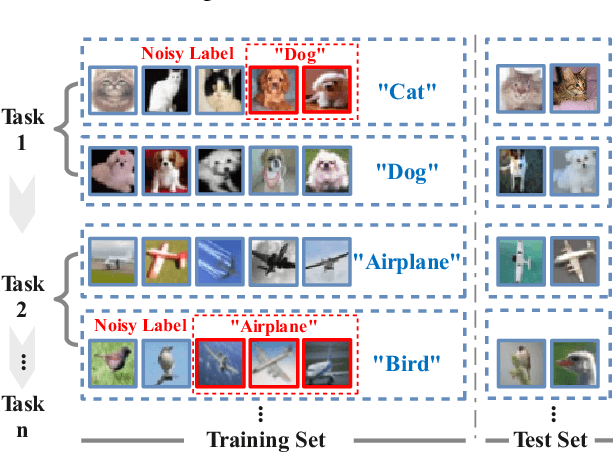

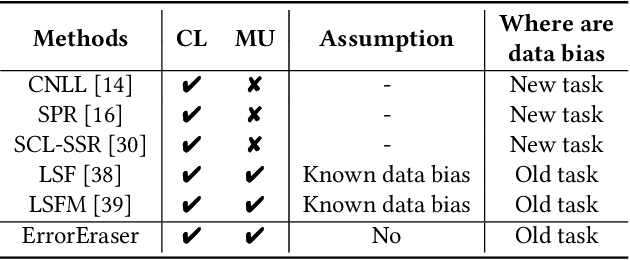

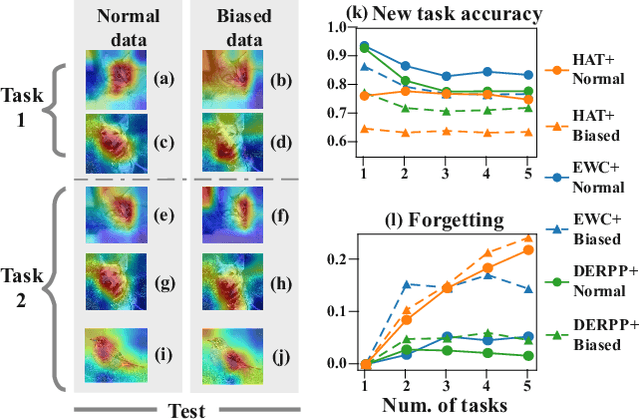

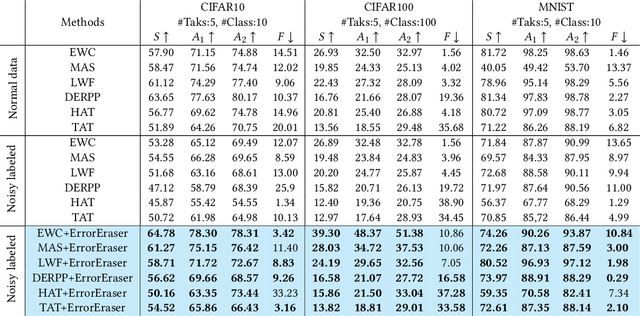

Abstract: Continual Learning (CL) primarily aims to retain knowledge to prevent catastrophic forgetting and to transfer knowledge to facilitate learning new tasks. Unlike traditional methods, we propose a novel perspective: CL not only needs to prevent forgetting, but also requires intentional forgetting. This need arises because existing CL methods ignore biases in real-world data, leading the model to learn spurious correlations that transfer and amplify across tasks. Analyzing both feature extraction and prediction results, we find that data biases simultaneously reduce CL's ability to retain and transfer knowledge. To address this, we propose ErrorEraser, a universal plugin that removes erroneous memories caused by biases in CL, enhancing performance on both new and old tasks. ErrorEraser consists of two modules: Error Identification and Error Erasure. The former learns the probability density distribution of task data in the feature space without prior knowledge, enabling accurate identification of potentially biased samples. The latter ensures that only erroneous knowledge is erased by shifting the decision space of representative outlier samples. Additionally, an incremental feature distribution learning strategy is designed to reduce the resource overhead of error identification in downstream tasks. Extensive experimental results show that ErrorEraser significantly mitigates the negative impact of data biases, achieving higher accuracy and lower forgetting rates across three types of CL methods. The code is available at https://github.com/diadai/ErrorEraser.
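The Error Identification idea (estimate the density of task data in feature space, then treat low-density samples as potentially biased) can be illustrated with a minimal sketch. This is not the ErrorEraser implementation: the abstract does not say which density estimator is used, so a plain Gaussian kernel density estimate is swapped in here, and the names `kde_density`, `flag_low_density`, `fraction`, and `bandwidth` are hypothetical.

```python
import math

def kde_density(point, data, bandwidth=1.0):
    """Unnormalized Gaussian kernel density of `point` under `data`.

    The normalizing constant is omitted because only the ranking of
    densities matters for picking out low-density samples.
    """
    total = 0.0
    for other in data:
        sq_dist = sum((a - b) ** 2 for a, b in zip(point, other))
        total += math.exp(-sq_dist / (2.0 * bandwidth ** 2))
    return total / len(data)

def flag_low_density(features, fraction=0.2, bandwidth=1.0):
    """Return sorted indices of the lowest-density `fraction` of samples.

    Assumption for illustration: a fixed fraction is flagged; the paper
    may select suspicious samples differently.
    """
    densities = [kde_density(f, features, bandwidth) for f in features]
    n_flag = max(1, round(len(features) * fraction))
    ranked = sorted(range(len(features)), key=lambda i: densities[i])
    return sorted(ranked[:n_flag])

# Toy feature vectors: four clustered samples and one far-away sample.
features = [(0.0, 0.1), (0.1, 0.0), (-0.1, 0.05), (0.05, -0.1), (5.0, 5.0)]
suspects = flag_low_density(features, fraction=0.2)
print(suspects)
```

In this toy setting the isolated sample at index 4 has the lowest estimated density and is the one flagged. In a real CL pipeline the feature vectors would come from the model's feature extractor rather than being hand-written.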

Open Continual Feature Selection via Granular-Ball Knowledge Transfer

Mar 15, 2024

Abstract: This paper presents a novel framework for continual feature selection (CFS) in data preprocessing, particularly in the context of an open and dynamic environment where unknown classes may emerge. CFS encounters two primary challenges: the discovery of unknown knowledge and the transfer of known knowledge. To this end, the proposed CFS method combines the strengths of continual learning (CL) with granular-ball computing (GBC), which focuses on constructing a granular-ball knowledge base to detect unknown classes and facilitate the transfer of previously learned knowledge for further feature selection. CFS consists of two stages: initial learning and open learning. The former aims to establish an initial knowledge base through multi-granularity representation using granular-balls. The latter utilizes prior granular-ball knowledge to identify unknowns, updates the knowledge base for granular-ball knowledge transfer, reinforces old knowledge, and integrates new knowledge. Subsequently, we devise an optimal feature subset mechanism that incorporates minimal new features into the existing optimal subset, often yielding superior results during each period. Extensive experimental results on public benchmark datasets demonstrate our method's superiority in terms of both effectiveness and efficiency compared to state-of-the-art feature selection methods.
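As a rough illustration of how a granular-ball knowledge base could be used to identify unknowns, the sketch below represents each known class by a single ball (a center and a radius) and treats a sample as unknown when it falls outside every ball. This is an assumption-laden simplification, not the paper's method: one ball per class, the centroid as center, and the maximum member distance as radius are all choices made here for illustration, and `build_ball` and `is_unknown` are hypothetical names.

```python
import math

def build_ball(points):
    """Build one ball covering `points`: (centroid, max distance to centroid)."""
    dim = len(points[0])
    center = [sum(p[d] for p in points) / len(points) for d in range(dim)]
    radius = max(math.dist(p, center) for p in points)
    return center, radius

def is_unknown(sample, balls):
    """A sample is treated as unknown if it lies outside every known ball."""
    return all(math.dist(sample, center) > radius for center, radius in balls)

# Toy knowledge base with two known classes (assumed data).
known = {
    "a": [(0, 0), (1, 0), (0, 1), (1, 1)],
    "b": [(5, 5), (6, 5), (5, 6), (6, 6)],
}
balls = [build_ball(pts) for pts in known.values()]

inside = is_unknown((0.6, 0.4), balls)   # lies within the ball of class "a"
outside = is_unknown((3.0, 3.0), balls)  # lies between the two balls
print(inside, outside)
```

A multi-granularity representation would typically split each class into several balls of different sizes; the single-ball version above only shows the membership test.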

Towards Interpreting Vulnerability of Multi-Instance Learning via Customized and Universal Adversarial Perturbations

Nov 30, 2022

Abstract: Multi-instance learning (MIL) is an effective paradigm for dealing with complex data and has achieved impressive results in a number of fields, including image classification, video anomaly detection, and more. Each data sample is referred to as a bag containing several unlabeled instances, and the supervised information is only provided at the bag level. The safety of MIL learners is concerning, though, as we can greatly fool them by introducing a few adversarial perturbations. This can be fatal in some cases, such as when users are unable to access desired images and criminals are attempting to trick surveillance cameras. In this paper, we design two adversarial perturbations to interpret the vulnerability of MIL methods. The first method can efficiently generate the bag-specific perturbation (called customized) with the aim of pushing the bag out of its original classification region. The second method builds on the first one by investigating the image-agnostic perturbation (called universal) that aims to affect all bags in a given data set and obtains some generalizability. We conduct various experiments to verify the performance of these two perturbations, and the results show that both of them can effectively fool MIL learners. We additionally propose a simple strategy to lessen the effects of adversarial perturbations. Source code is available at https://github.com/InkiInki/MI-UAP.
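The notion of a bag-specific perturbation that pushes a bag out of its original classification region can be sketched on a toy MIL learner. The example below is not the authors' algorithm: it assumes a hypothetical bag classifier that mean-pools instances and applies a linear scorer, and it uses a single sign-gradient (FGSM-style) step as a stand-in for the customized perturbation. The names `bag_score`, `perturb_bag`, and `epsilon` are illustrative.

```python
def bag_score(bag, weights, bias):
    """Mean-pool the instances of a bag, then apply a linear scorer."""
    dim = len(weights)
    pooled = [sum(inst[d] for inst in bag) / len(bag) for d in range(dim)]
    return sum(w * p for w, p in zip(weights, pooled)) + bias

def sign(x):
    """Return -1, 0, or 1 according to the sign of x."""
    return (x > 0) - (x < 0)

def perturb_bag(bag, weights, bias, epsilon):
    """Shift every instance by at most `epsilon` per feature, against the current label.

    For this linear, mean-pooled model the gradient of the score with
    respect to each instance is proportional to `weights`, so a
    sign-gradient step only needs the sign of each weight.
    """
    current = 1 if bag_score(bag, weights, bias) >= 0 else -1
    return [
        [x - current * epsilon * sign(w) for x, w in zip(inst, weights)]
        for inst in bag
    ]

# Toy scorer and bag (assumed values for illustration).
weights, bias = [1.0, -2.0], 0.0
bag = [[0.5, 0.1], [0.3, 0.0], [0.4, 0.2]]

clean = bag_score(bag, weights, bias)
adversarial_bag = perturb_bag(bag, weights, bias, epsilon=0.1)
attacked = bag_score(adversarial_bag, weights, bias)
print(clean, attacked)
```

Here the clean bag scores positive and the perturbed bag scores negative, so the predicted label flips even though no feature moved by more than `epsilon`. A universal perturbation would instead look for one shared shift that flips many bags at once.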
