Shan He

University of Birmingham

REST: Diffusion-based Real-time End-to-end Streaming Talking Head Generation via ID-Context Caching and Asynchronous Streaming Distillation

Dec 12, 2025

Abstract: Diffusion models have significantly advanced the field of talking head generation (THG). However, slow inference speeds and non-autoregressive paradigms severely constrain the application of diffusion-based THG models. In this study, we propose REST, the first diffusion-based, real-time, end-to-end streaming audio-driven talking head generation framework. To support real-time end-to-end generation, a compact video latent space is first learned through high spatiotemporal VAE compression. Additionally, to enable autoregressive streaming within the compact video latent space, we introduce an ID-Context Cache mechanism, which integrates ID-Sink and Context-Cache principles into key-value caching to maintain temporal consistency and identity coherence during long-duration streaming generation. Furthermore, an Asynchronous Streaming Distillation (ASD) training strategy is proposed to mitigate error accumulation in autoregressive generation and enhance temporal consistency; it leverages a non-streaming teacher with an asynchronous noise schedule to supervise the training of the streaming student model. REST bridges the gap between autoregressive and diffusion-based approaches, demonstrating substantial value for applications requiring real-time talking head generation. Experimental results demonstrate that REST outperforms state-of-the-art methods in both generation speed and overall performance.
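One way to picture the ID-Context Cache described above is as a key-value cache with two regions: identity ("sink") entries that are never evicted, and a rolling window of recent context entries. The sketch below is illustrative only. The class name `IDContextCache`, the `context_size` parameter, the string placeholders for keys and values, and the first-in-first-out eviction policy are all assumptions, not details taken from the paper.

```python
from collections import deque


class IDContextCache:
    """Toy KV cache: permanent identity entries plus a rolling context window.

    Hypothetical illustration; names and eviction policy are assumptions.
    """

    def __init__(self, id_entries, context_size):
        # Identity ("sink") key-value pairs are stored once and never evicted.
        self.id_entries = list(id_entries)
        # deque(maxlen=...) drops the oldest context entry once it is full.
        self.context = deque(maxlen=context_size)

    def append(self, key, value):
        """Cache the key-value pair of a newly generated chunk."""
        self.context.append((key, value))

    def entries(self):
        """Entries visible to attention: identity first, then recent context."""
        return self.id_entries + list(self.context)


cache = IDContextCache(id_entries=[("id_k", "id_v")], context_size=2)
for step in range(4):
    cache.append(f"k{step}", f"v{step}")

visible = cache.entries()
print(visible)
```

After four appended chunks with a window of two, the identity entry is still present while only the two most recent context entries remain, so the cache size stays bounded however long the stream runs.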

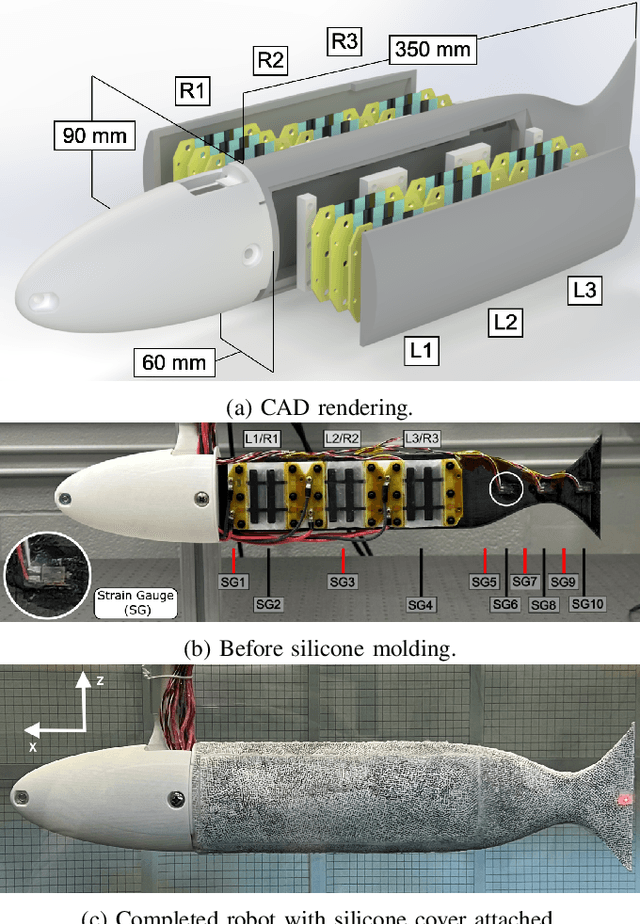

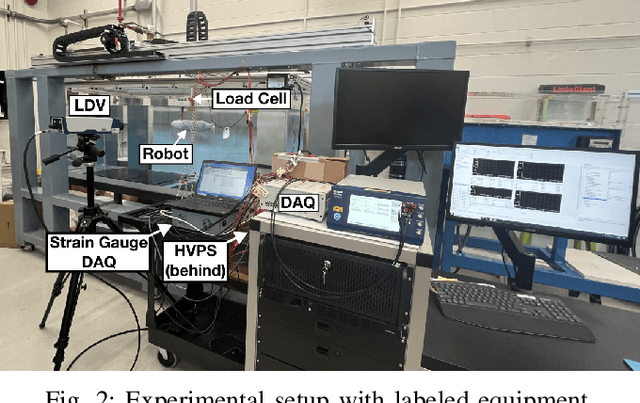

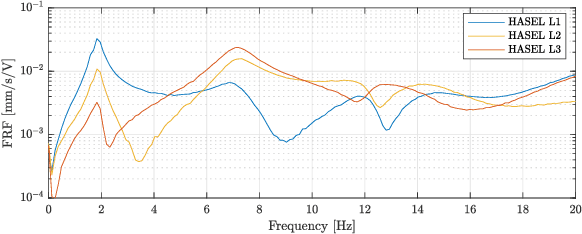

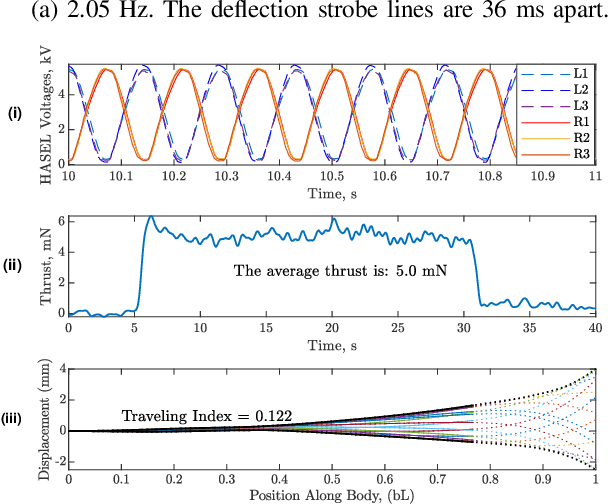

Improving Swimming Performance in Soft Robotic Fish with Distributed Muscles and Embedded Kinematic Sensing

Apr 15, 2025

Abstract: Bio-inspired underwater vehicles could yield improved efficiency, maneuverability, and environmental compatibility over conventional propeller-driven underwater vehicles. However, to realize the swimming performance of biology, there is a need for soft robotic swimmers with both distributed muscles and kinematic feedback. This study presents the design and swimming performance of a soft robotic fish with independently controllable muscles and embedded kinematic sensing distributed along the body. The soft swimming robot consists of an interior flexible spine, three axially distributed sets of HASEL artificial muscles, embedded strain gauges, a streamlined silicone body, and off-board electronics. In a fixed configuration, the soft robot generates a maximum thrust of 7.9 mN when excited near its first resonant frequency (2 Hz) with synchronized antagonistic actuation of all muscles. When excited near its second resonant frequency (8 Hz), synchronized muscle actuation generates 5.0 mN of thrust. By introducing a sequential phase offset into the muscle actuation, the thrust at the second resonant frequency increases to 7.2 mN, a 44% increase from simple antagonistic activation. The sequential muscle activation improves the thrust by increasing 1) the tail-beat velocity and 2) traveling wave content in the swimming kinematics by four times. Further, the second resonant frequency (8 Hz) generates nearly as much thrust as the first resonance (2 Hz) while requiring only $\approx25$% of the tail displacement, indicating that higher resonant frequencies have benefits for swimming in confined environments where a smaller kinematic envelope is necessary. These results demonstrate the performance benefits of independently controllable muscles and distributed kinematic sensing, and this type of soft robotic swimmer provides a platform to address the open challenge of sensorimotor control.
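The thrust figures quoted in this abstract can be checked with a few lines of arithmetic. The snippet below uses only the values stated above; the function and variable names are illustrative.

```python
def relative_increase(baseline, improved):
    """Fractional increase of `improved` over `baseline`."""
    return (improved - baseline) / baseline


# Thrust values in millinewtons, taken from the abstract.
thrust_2hz_sync = 7.9    # first resonance, synchronized actuation
thrust_8hz_sync = 5.0    # second resonance, synchronized actuation
thrust_8hz_phased = 7.2  # second resonance, sequential phase offset

# Gain from adding the phase offset at 8 Hz, as a rounded percentage.
gain_pct = round(relative_increase(thrust_8hz_sync, thrust_8hz_phased) * 100)
# Phased 8 Hz thrust as a rounded percentage of the 2 Hz maximum.
ratio_pct = round(thrust_8hz_phased / thrust_2hz_sync * 100)
print(gain_pct, ratio_pct)
```

The first number reproduces the stated 44% increase, and the second shows that the phased 8 Hz thrust is about 91% of the 2 Hz maximum, consistent with "nearly as much thrust".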

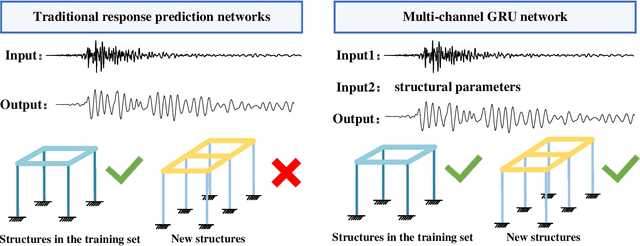

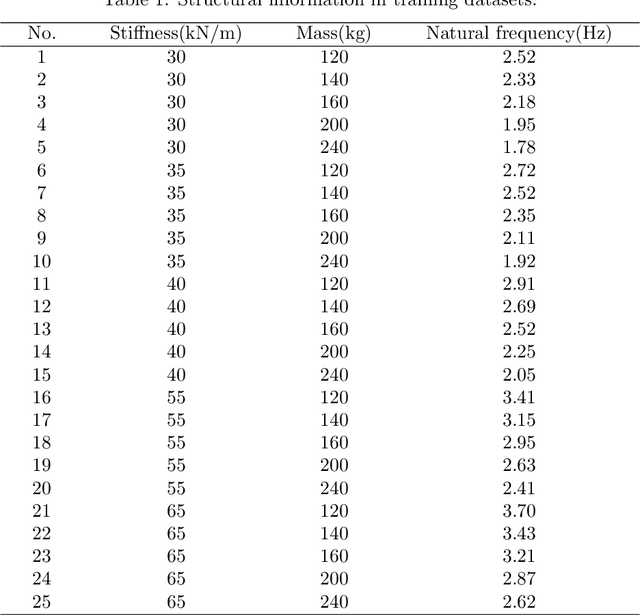

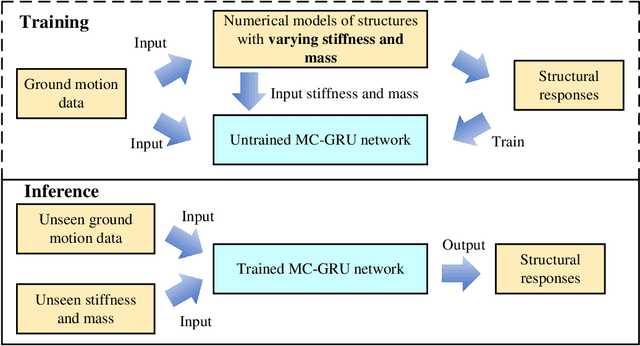

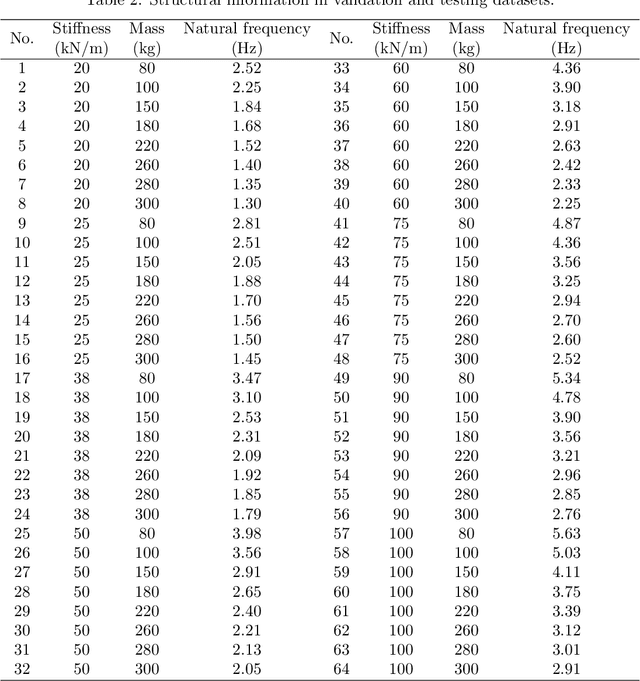

MC-GRU: a Multi-Channel GRU network for generalized nonlinear structural response prediction across structures

Mar 10, 2025

Abstract: Accurate prediction of seismic responses and quantification of structural damage are critical in civil engineering. Traditional approaches such as finite element analysis can be computationally inefficient, especially for complex structural systems under extreme hazards. Recently, artificial intelligence has provided an alternative for efficiently modeling highly nonlinear behaviors. However, existing models face challenges in generalizing across diverse structural systems. This paper proposes a novel multi-channel gated recurrent unit (MC-GRU) network aimed at achieving generalized nonlinear structural response prediction for varying structures. The key concept is a multi-channel input mechanism for the GRU that feeds structural information as an extra input to the candidate hidden state, which enables the network to learn the dynamic characteristics of diverse structures and thus improves its generalizability and adaptability to unseen structures. The performance of the proposed MC-GRU is validated through a series of case studies, including a single-degree-of-freedom linear system, a hysteretic Bouc-Wen system, and a nonlinear reinforced concrete column from experimental testing. Results indicate that the proposed MC-GRU overcomes the major generalizability issues of existing methods and can accurately infer the seismic responses of varying structures. Additionally, it demonstrates enhanced capabilities in representing nonlinear structural dynamics compared to traditional models such as GRU and LSTM.
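For orientation, the standard GRU update can be extended with a structural-information term in the candidate hidden state as sketched below. This is one plausible reading of the abstract, not the paper's confirmed formulation: the structural vector $s$ and its weight matrix $V_h$ are assumed symbols, and the gates are left in their standard form.

$$
\begin{aligned}
z_t &= \sigma\left(W_z x_t + U_z h_{t-1} + b_z\right) \\
r_t &= \sigma\left(W_r x_t + U_r h_{t-1} + b_r\right) \\
\tilde{h}_t &= \tanh\left(W_h x_t + U_h \left(r_t \odot h_{t-1}\right) + V_h s + b_h\right) \\
h_t &= \left(1 - z_t\right) \odot h_{t-1} + z_t \odot \tilde{h}_t
\end{aligned}
$$

Here $x_t$ is the excitation input at time $t$, $h_t$ the hidden state, $\sigma$ the logistic sigmoid, and $\odot$ the element-wise product. Setting $V_h = 0$ recovers the ordinary GRU, which is why a term of this kind can condition the dynamics on the structure without changing the gating mechanism.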

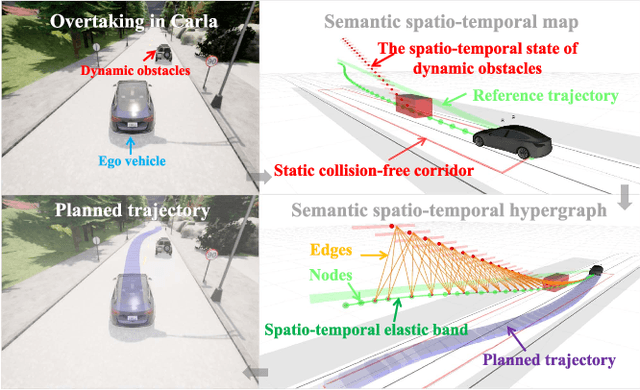

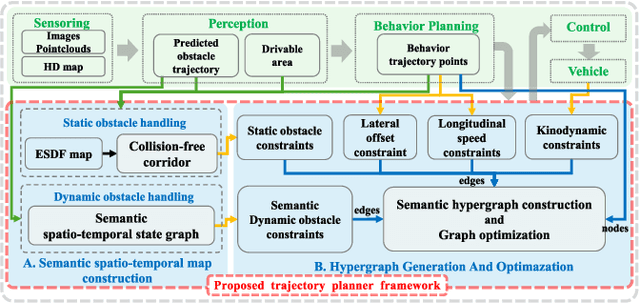

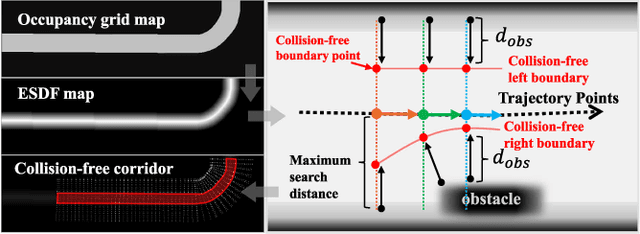

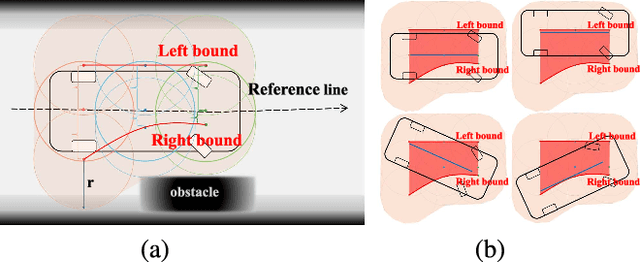

A Real-time Spatio-Temporal Trajectory Planner for Autonomous Vehicles with Semantic Graph Optimization

Feb 25, 2025

Abstract: Planning a safe and feasible trajectory for autonomous vehicles in real time by fully utilizing perceptual information in complex urban environments is challenging. In this paper, we propose a spatio-temporal trajectory planning method based on graph optimization. It efficiently extracts the multi-modal information of the perception module by constructing a semantic spatio-temporal map through separate processing of static and dynamic obstacles, and then quickly generates feasible trajectories via sparse graph optimization based on a semantic spatio-temporal hypergraph. Extensive experiments have shown that the proposed method can effectively handle complex urban public road scenarios and perform in real time. We will also release our code to facilitate benchmarking by the research community.

* This work has been accepted for publication in IEEE Robotics and Automation Letters (RA-L). The final published version is available in IEEE Xplore (DOI: 10.1109/LRA.2024.3504239)

PoAct: Policy and Action Dual-Control Agent for Generalized Applications

Jan 13, 2025

Abstract: Based on their superior comprehension and reasoning capabilities, Large Language Model (LLM) driven agent frameworks have achieved significant success in numerous complex reasoning tasks. ReAct-like agents can solve various intricate problems step-by-step through progressive planning and tool calls, iteratively optimizing new steps based on environmental feedback. However, as the planning capabilities of LLMs improve, the actions invoked by tool calls in ReAct-like frameworks often misalign with complex planning and challenging data organization. Code Action addresses these issues while also introducing the challenges of a more complex action space and more difficult action organization. To leverage Code Action and tackle the challenges of its complexity, this paper proposes Policy and Action Dual-Control Agent (PoAct) for generalized applications. The aim is to achieve higher-quality code actions and more accurate reasoning paths by dynamically switching reasoning policies and modifying the action space. Experimental results on the Agent Benchmark for both legal and generic scenarios demonstrate the superior reasoning capabilities and reduced token consumption of our approach in complex tasks. On the LegalAgentBench, our method shows a 20 percent improvement over the baseline while requiring fewer tokens. We conducted experiments and analyses on the GPT-4o and GLM-4 series models, demonstrating the significant potential and scalability of our approach to solve complex problems.

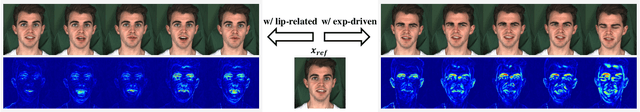

EmotiveTalk: Expressive Talking Head Generation through Audio Information Decoupling and Emotional Video Diffusion

Nov 23, 2024

Abstract: Diffusion models have revolutionized the field of talking head generation, yet still face challenges in expressiveness, controllability, and stability in long-time generation. In this research, we propose an EmotiveTalk framework to address these issues. Firstly, to realize better control over the generation of lip movement and facial expression, a Vision-guided Audio Information Decoupling (V-AID) approach is designed to generate audio-based decoupled representations aligned with lip movements and expression. Specifically, to achieve alignment between audio and facial expression representation spaces, we present a Diffusion-based Co-speech Temporal Expansion (Di-CTE) module within V-AID to generate expression-related representations under multi-source emotion condition constraints. Then we propose a well-designed Emotional Talking Head Diffusion (ETHD) backbone to efficiently generate highly expressive talking head videos, which contains an Expression Decoupling Injection (EDI) module to automatically decouple the expressions from reference portraits while integrating the target expression information, achieving more expressive generation performance. Experimental results show that EmotiveTalk can generate expressive talking head videos, ensuring the promised controllability of emotions and stability during long-time generation, yielding state-of-the-art performance compared to existing methods.

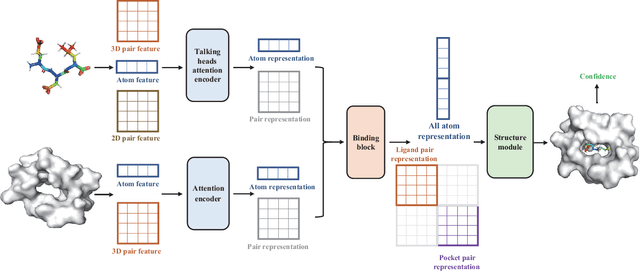

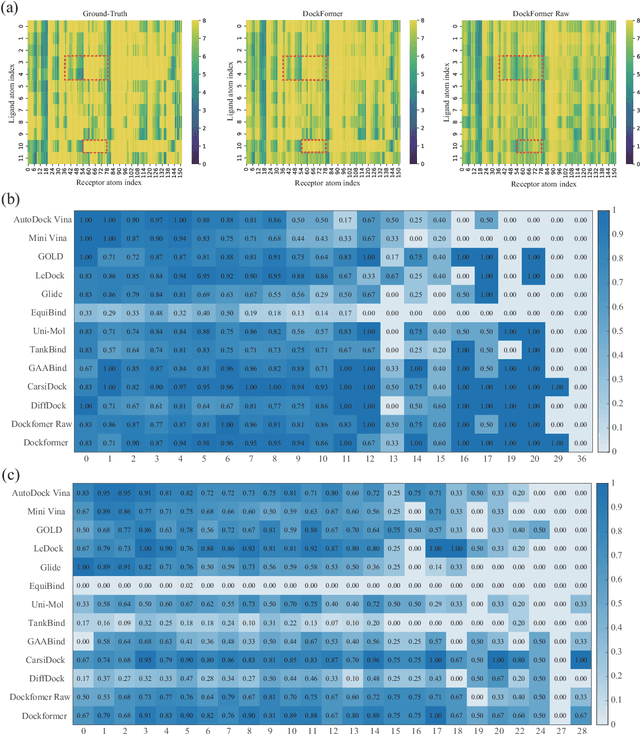

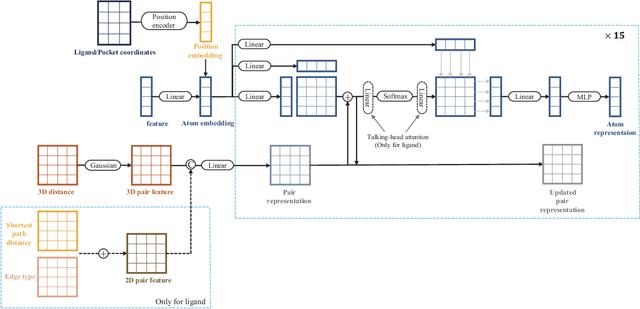

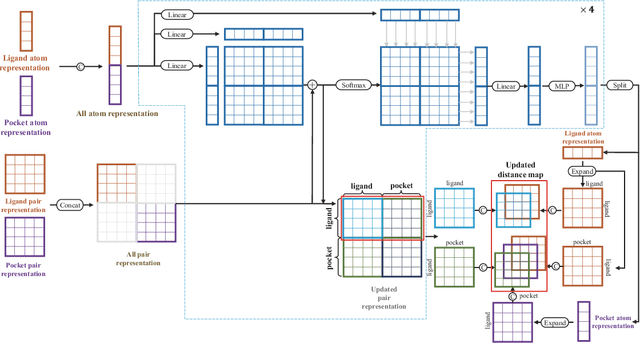

Dockformer: A transformer-based molecular docking paradigm for large-scale virtual screening

Nov 15, 2024

Abstract: Molecular docking enables virtual screening of compound libraries to identify potential ligands that target proteins of interest, a crucial step in drug development; however, as the size of the compound library increases, the computational complexity of traditional docking models increases. Deep learning algorithms can provide data-driven research and development models to increase the speed of the docking process. Unfortunately, few models can achieve superior screening performance compared to that of traditional models. Therefore, a novel deep learning-based docking approach named Dockformer is introduced in this study. Dockformer leverages multimodal information to capture the geometric topology and structural knowledge of molecules and can directly generate binding conformations with the corresponding confidence measures in an end-to-end manner. The experimental results show that Dockformer achieves success rates of 90.53% and 82.71% on the PDBbind core set and PoseBusters benchmarks, respectively, and more than a 100-fold increase in the inference process speed, outperforming almost all state-of-the-art docking methods. In addition, the ability of Dockformer to identify the main protease inhibitors of coronaviruses is demonstrated in a real-world virtual screening scenario. Considering its high docking accuracy and screening efficiency, Dockformer can be regarded as a powerful and robust tool in the field of drug design.

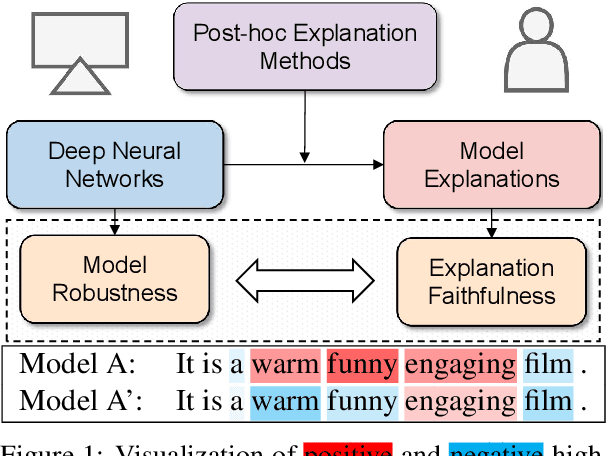

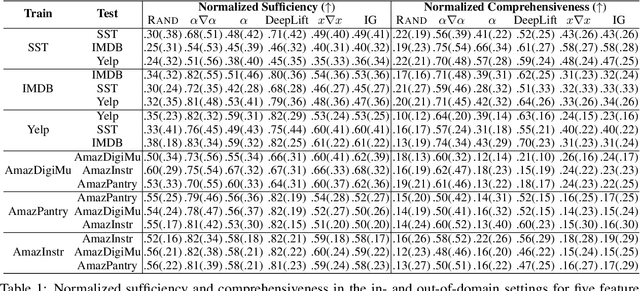

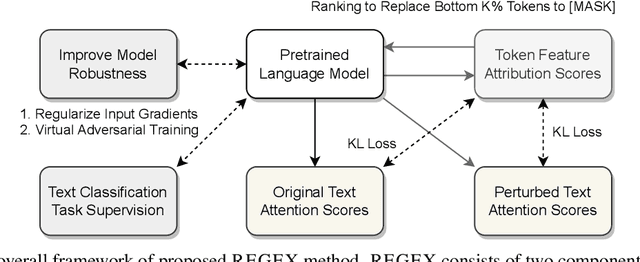

Towards Faithful Explanations for Text Classification with Robustness Improvement and Explanation Guided Training

Dec 29, 2023

Abstract: Feature attribution methods highlight the important input tokens as explanations of model predictions and have been widely applied to deep neural networks in pursuit of trustworthy AI. However, recent work shows that the explanations provided by these methods struggle to be faithful and robust. In this paper, we propose a method with Robustness improvement and Explanation Guided training towards more faithful EXplanations (REGEX) for text classification. First, we improve model robustness through input gradient regularization and virtual adversarial training. Second, we use salient ranking to mask noisy tokens and maximize the similarity between model attention and feature attribution, which can be seen as a self-training procedure that requires no external information. We conduct extensive experiments on six datasets with five attribution methods, and also evaluate faithfulness in the out-of-domain setting. The results show that REGEX improves fidelity metrics of explanations in all settings and further achieves consistent gains based on two randomization tests. Moreover, we show that using highlight explanations produced by REGEX to train select-then-predict models results in task performance comparable to the end-to-end method.

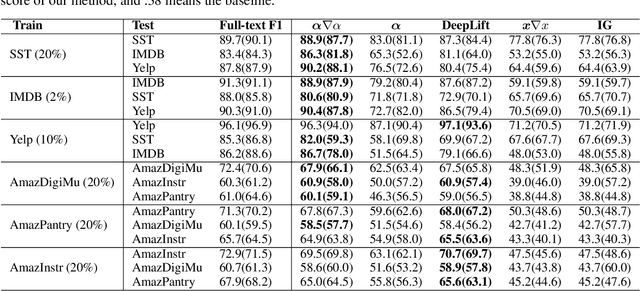

Perturbation-Based Two-Stage Multi-Domain Active Learning

Jun 19, 2023

Abstract: In multi-domain learning (MDL) scenarios, high labeling effort is required due to the complexity of collecting data from various domains. Active Learning (AL) presents an encouraging solution to this issue by annotating a smaller number of highly informative instances, thereby reducing the labeling effort. Previous research has relied on conventional AL strategies for MDL scenarios, which underutilize the domain-shared information of each instance during the selection procedure. To mitigate this issue, we propose a novel perturbation-based two-stage multi-domain active learning (P2S-MDAL) method incorporated into the well-regarded ASP-MTL model. Specifically, P2S-MDAL involves allocating budgets for domains and establishing regions for diversity selection, which are further used to select the most cross-domain influential samples in each region. A perturbation metric has been introduced to evaluate the robustness of the shared feature extractor of the model, facilitating the identification of potentially cross-domain influential samples. Experiments are conducted on three real-world datasets, encompassing both texts and images. The superior performance over conventional AL strategies shows the effectiveness of the proposed strategy. Additionally, an ablation study has been carried out to demonstrate the validity of each component. Finally, we outline several intriguing potential directions for future MDAL research, thus catalyzing the field's advancement.

Multi-Domain Learning From Insufficient Annotations

May 04, 2023

Abstract: Multi-domain learning (MDL) refers to simultaneously constructing a model or a set of models on datasets collected from different domains. Conventional approaches emphasize domain-shared information extraction and domain-private information preservation, following the shared-private framework (SP models), which offers significant advantages over single-domain learning. However, the limited availability of annotated data in each domain considerably hinders the effectiveness of conventional supervised MDL approaches in real-world applications. In this paper, we introduce a novel method called multi-domain contrastive learning (MDCL) to alleviate the impact of insufficient annotations by capturing both semantic and structural information from both labeled and unlabeled data. Specifically, MDCL comprises two modules: inter-domain semantic alignment and intra-domain contrast. The former aims to align annotated instances of the same semantic category from distinct domains within a shared hidden space, while the latter focuses on learning a cluster structure of unlabeled instances in a private hidden space for each domain. MDCL is readily compatible with many SP models, requiring no additional model parameters and allowing for end-to-end training. Experimental results across five textual and image multi-domain datasets demonstrate that MDCL brings noticeable improvement over various SP models. Furthermore, MDCL can be employed in multi-domain active learning (MDAL) to achieve a superior initialization, eventually leading to better overall performance.
