Ruiyang Zhang

SketchThinker-R1: Towards Efficient Sketch-Style Reasoning in Large Multimodal Models

Jan 06, 2026

Abstract:Despite the empirical success of extensive, step-by-step reasoning in large multimodal models, long reasoning processes inevitably incur substantial computational overhead, namely higher token costs and increased response time, which undermines inference efficiency. In contrast, humans often employ sketch-style reasoning: a concise, goal-directed cognitive process that prioritizes salient information and enables efficient problem-solving. Inspired by this cognitive efficiency, we propose SketchThinker-R1, which incentivizes sketch-style reasoning ability in large multimodal models. Our method consists of three primary stages. In the Sketch-Mode Cold Start stage, we convert standard long reasoning processes into sketch-style reasoning and finetune the base multimodal model, instilling an initial sketch-style reasoning capability. Next, we train the SketchJudge Reward Model, which explicitly evaluates the model's thinking process and assigns higher scores to sketch-style reasoning. Finally, we conduct Sketch-Thinking Reinforcement Learning under the supervision of SketchJudge to further generalize the sketch-style reasoning ability. Experimental evaluation on four benchmarks reveals that SketchThinker-R1 achieves over 64% reduction in reasoning token cost without compromising final answer accuracy. Qualitative analysis further shows that sketch-style reasoning focuses more on key cues during problem solving.

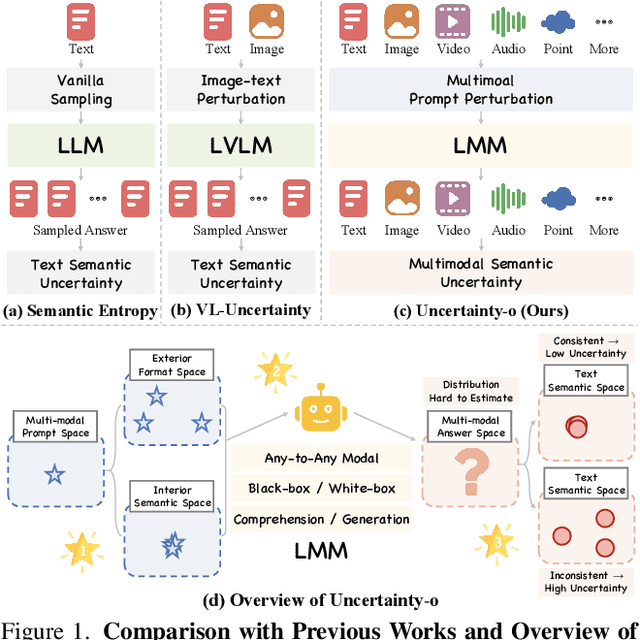

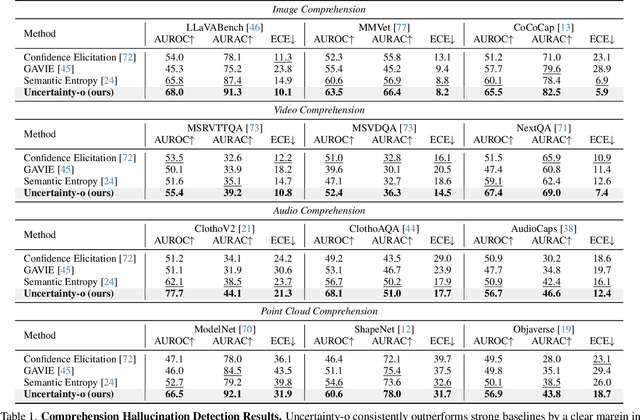

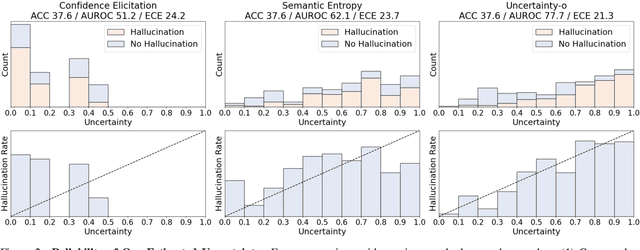

Uncertainty-o: One Model-agnostic Framework for Unveiling Uncertainty in Large Multimodal Models

Jun 09, 2025

Abstract:Large Multimodal Models (LMMs), harnessing the complementarity among diverse modalities, are often considered more robust than pure Large Language Models (LLMs); yet do LMMs know what they do not know? Three key questions remain open: (1) how to evaluate the uncertainty of diverse LMMs in a unified manner, (2) how to prompt LMMs to show their uncertainty, and (3) how to quantify uncertainty for downstream tasks. In an attempt to address these challenges, we introduce Uncertainty-o: (1) a model-agnostic framework designed to reveal uncertainty in LMMs regardless of their modalities, architectures, or capabilities, (2) an empirical exploration of multimodal prompt perturbations to uncover LMM uncertainty, offering insights and findings, and (3) a formulation of multimodal semantic uncertainty, which enables quantifying uncertainty from multimodal responses. Experiments across 18 benchmarks spanning various modalities and 10 LMMs (both open- and closed-source) demonstrate the effectiveness of Uncertainty-o in reliably estimating LMM uncertainty, thereby enhancing downstream tasks such as hallucination detection, hallucination mitigation, and uncertainty-aware Chain-of-Thought reasoning.

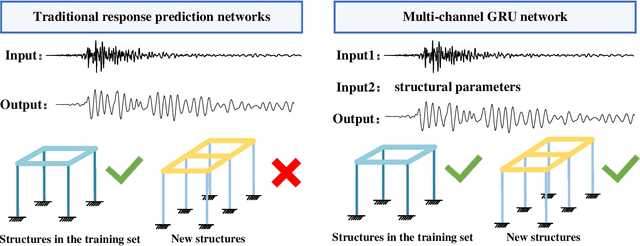

MC-GRU: a Multi-Channel GRU network for generalized nonlinear structural response prediction across structures

Mar 10, 2025

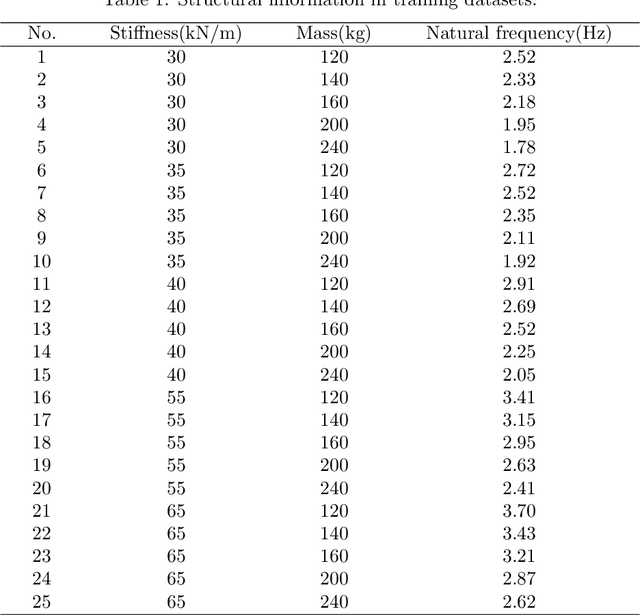

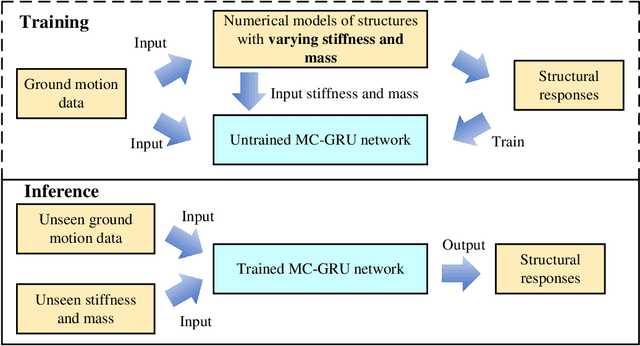

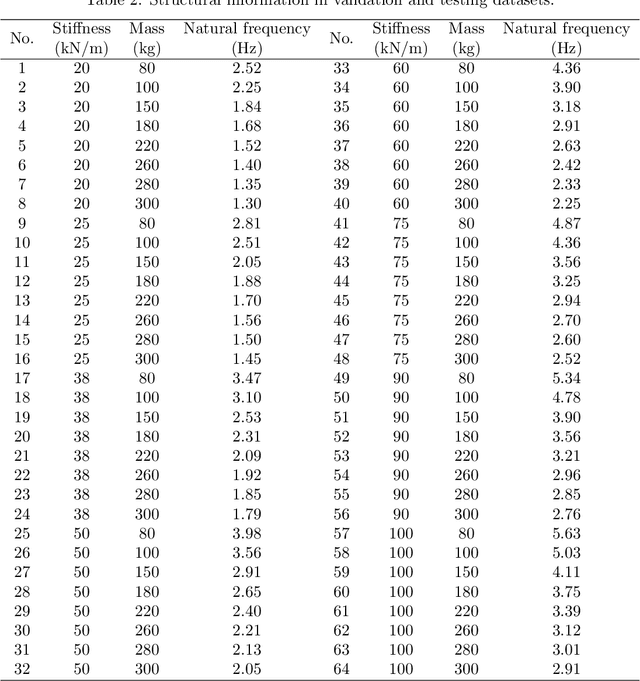

Abstract:Accurate prediction of seismic responses and quantification of structural damage are critical in civil engineering. Traditional approaches such as finite element analysis can lack computational efficiency, especially for complex structural systems under extreme hazards. Recently, artificial intelligence has provided an alternative for efficiently modeling highly nonlinear behaviors. However, existing models face challenges in generalizing across diverse structural systems. This paper proposes a novel multi-channel gated recurrent unit (MC-GRU) network aimed at achieving generalized nonlinear structural response prediction for varying structures. The key concept lies in integrating a multi-channel input mechanism into the GRU, with an extra input of structural information to the candidate hidden state, which enables the network to learn the dynamic characteristics of diverse structures and thus improves its generalizability and adaptiveness to unseen structures. The performance of the proposed MC-GRU is validated through a series of case studies, including a single-degree-of-freedom linear system, a hysteretic Bouc-Wen system, and a nonlinear reinforced concrete column from experimental testing. Results indicate that the proposed MC-GRU overcomes the major generalizability issues of existing methods and can accurately infer seismic responses of varying structures. Additionally, it demonstrates enhanced capabilities in representing nonlinear structural dynamics compared to traditional models such as GRU and LSTM.
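
The abstract states only that structural information enters the candidate hidden state as an extra input. One plausible reading, written on top of the standard GRU update, is sketched below. The structural feature vector $s$, its weight matrix $V_h$, and the additive form are assumptions made for illustration and are not taken from the paper.

$$
\begin{aligned}
z_t &= \sigma(W_z x_t + U_z h_{t-1} + b_z) \\
r_t &= \sigma(W_r x_t + U_r h_{t-1} + b_r) \\
\tilde{h}_t &= \tanh\bigl(W_h x_t + U_h (r_t \odot h_{t-1}) + V_h s + b_h\bigr) \\
h_t &= (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t
\end{aligned}
$$

Here $x_t$ is the excitation input at step $t$, $h_t$ the hidden state, and $\odot$ the element-wise product. Setting $V_h = 0$ recovers the standard GRU, so under this reading the structural term acts as a structure-dependent bias on the candidate state.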

VL-Uncertainty: Detecting Hallucination in Large Vision-Language Model via Uncertainty Estimation

Nov 18, 2024

Abstract:Given the higher information load processed by large vision-language models (LVLMs) compared to single-modal LLMs, detecting LVLM hallucinations requires more human effort and time, and thus raises wider safety concerns. In this paper, we introduce VL-Uncertainty, the first uncertainty-based framework for detecting hallucinations in LVLMs. Different from most existing methods that require ground-truth or pseudo annotations, VL-Uncertainty utilizes uncertainty as an intrinsic metric. We measure uncertainty by analyzing the prediction variance across semantically equivalent but perturbed prompts, including visual and textual data. When LVLMs are highly confident, they provide consistent responses to semantically equivalent queries. However, when uncertain, the responses of the target LVLM become more random. To account for semantically similar answers with different wordings, we cluster LVLM responses based on their semantic content and then calculate the entropy of the cluster distribution as the uncertainty measure to detect hallucination. Our extensive experiments on 10 LVLMs across four benchmarks, covering both free-form and multi-choice tasks, show that VL-Uncertainty significantly outperforms strong baseline methods in hallucination detection.
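
The cluster-then-entropy step described above can be illustrated with a minimal sketch. The function names are hypothetical, and `normalized_match` is only a stand-in for a real semantic-equivalence check (which the abstract does not specify); the entropy is the ordinary Shannon entropy over cluster proportions.

```python
import math
from typing import Callable, List


def normalized_match(a: str, b: str) -> bool:
    # Stand-in equivalence test (assumption): case- and period-insensitive match.
    # A real system would use a semantic check, e.g. an entailment model.
    def norm(s: str) -> str:
        return s.strip().lower().rstrip(".")
    return norm(a) == norm(b)


def cluster_responses(
    responses: List[str], same_meaning: Callable[[str, str], bool]
) -> List[List[str]]:
    # Greedy clustering: put each response into the first cluster whose
    # representative (first member) it matches, else open a new cluster.
    clusters: List[List[str]] = []
    for response in responses:
        for cluster in clusters:
            if same_meaning(cluster[0], response):
                cluster.append(response)
                break
        else:
            clusters.append([response])
    return clusters


def cluster_entropy(clusters: List[List[str]]) -> float:
    # Shannon entropy (in nats) of the empirical cluster distribution.
    total = sum(len(c) for c in clusters)
    if total == 0:
        return 0.0
    return -sum((len(c) / total) * math.log(len(c) / total) for c in clusters)
```

Under this sketch, responses that all fall into one cluster give zero entropy (consistent answers, low uncertainty), while responses spread evenly over many clusters give high entropy, which would flag a likely hallucination once compared against a chosen threshold.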

Harnessing Uncertainty-aware Bounding Boxes for Unsupervised 3D Object Detection

Aug 01, 2024

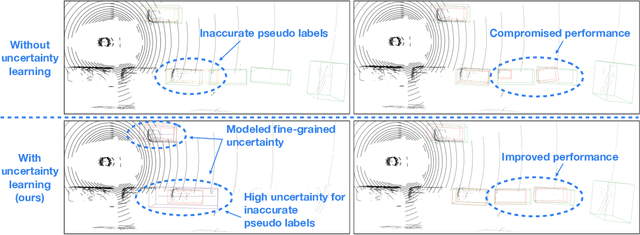

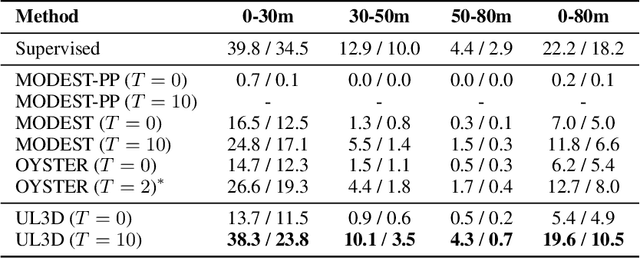

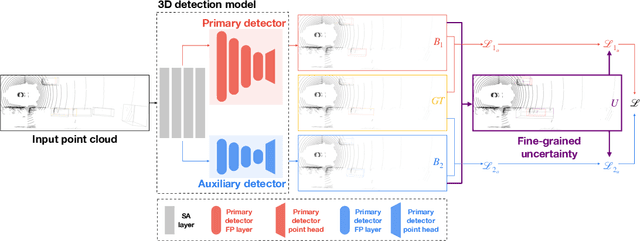

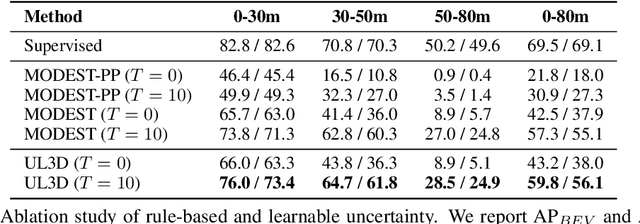

Abstract:Unsupervised 3D object detection aims to identify objects of interest from unlabeled raw data, such as LiDAR points. Recent approaches usually adopt pseudo 3D bounding boxes (3D bboxes) from a clustering algorithm to initialize model training, and then iteratively update both the pseudo labels and the trained model. However, pseudo bboxes inevitably contain noise, and such inaccurate annotations propagate to the final model, compromising performance. Therefore, in an attempt to mitigate the negative impact of pseudo bboxes, we introduce a new uncertainty-aware framework. In particular, our method consists of two primary components: uncertainty estimation and uncertainty regularization. (1) In the uncertainty estimation phase, we incorporate an extra auxiliary detection branch alongside the primary detector. The prediction disparity between the primary and auxiliary detectors is leveraged to estimate uncertainty at the box coordinate level, including position, shape, and orientation. (2) Based on the assessed uncertainty, we regularize model training by adaptively adjusting the loss on each 3D bbox coordinate. For pseudo bbox coordinates with high uncertainty, we assign a relatively low loss weight. Experiments verify that the proposed method is robust against noisy pseudo bboxes, yielding substantial improvements on nuScenes and Lyft compared to existing techniques, with increases of 6.9% in AP$_{BEV}$ and 2.5% in AP$_{3D}$ on nuScenes, and 2.2% in AP$_{BEV}$ and 1.0% in AP$_{3D}$ on Lyft.
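
As a rough illustration of the two components above, the sketch below estimates a per-coordinate uncertainty from the disagreement between two predictions and uses it to down-weight an L1 loss against the pseudo box. The function names and the `exp(-u)` weighting are assumptions chosen for illustration; the abstract does not give the actual formulation.

```python
import math
from typing import List, Sequence


def coordinate_uncertainty(
    primary: Sequence[float], auxiliary: Sequence[float]
) -> List[float]:
    # Per-coordinate uncertainty as the absolute disagreement between the
    # primary and auxiliary predictions (e.g. x, y, z, l, w, h, yaw).
    return [abs(p - a) for p, a in zip(primary, auxiliary)]


def uncertainty_weighted_l1(
    primary: Sequence[float],
    auxiliary: Sequence[float],
    pseudo_box: Sequence[float],
) -> float:
    # Assumed weighting: exp(-u), so high-uncertainty coordinates
    # contribute less to the loss against the noisy pseudo label.
    uncertainty = coordinate_uncertainty(primary, auxiliary)
    weights = [math.exp(-u) for u in uncertainty]
    weighted = [
        w * abs(p - t) for w, p, t in zip(weights, primary, pseudo_box)
    ]
    return sum(weighted) / len(weighted)
```

When the two branches agree exactly, every weight is 1 and the loss reduces to a plain mean L1 distance; any disagreement on a coordinate shrinks that coordinate's contribution.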

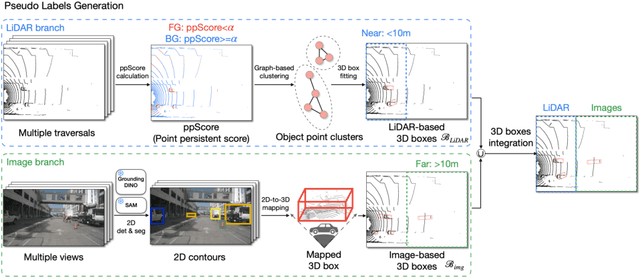

Approaching Outside: Scaling Unsupervised 3D Object Detection from 2D Scene

Jul 11, 2024

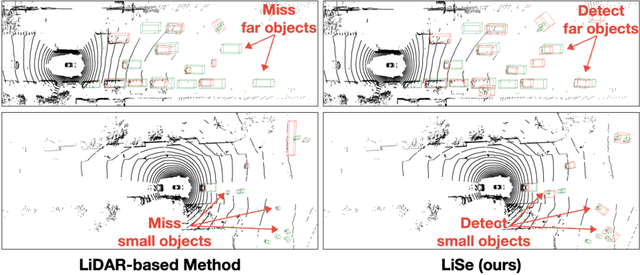

Abstract:Unsupervised 3D object detection aims to accurately detect objects in unstructured environments with no explicit supervisory signals. Given sparse LiDAR point clouds, this task often suffers from compromised performance when detecting distant or small objects, due to the inherent sparsity and limited spatial resolution. In this paper, we make an early attempt to integrate LiDAR data with 2D images for unsupervised 3D detection and introduce a new method, dubbed LiDAR-2D Self-paced Learning (LiSe). We argue that RGB images serve as a valuable complement to LiDAR data, offering precise 2D localization cues, particularly when only scarce LiDAR points are available for certain objects. Considering the unique characteristics of both modalities, our framework devises a self-paced learning pipeline that incorporates adaptive sampling and weak model aggregation strategies. The adaptive sampling strategy dynamically tunes the distribution of pseudo labels during training, countering the tendency of models to overfit easily detected samples, such as nearby and large-sized objects. By doing so, it ensures a balanced learning trajectory across varying object scales and distances. The weak model aggregation component consolidates the strengths of models trained under different pseudo label distributions, culminating in a robust and powerful final model. Experimental evaluations validate the efficacy of our proposed LiSe method, showing significant improvements of +7.1% AP$_{BEV}$ and +3.4% AP$_{3D}$ on nuScenes, and +8.3% AP$_{BEV}$ and +7.4% AP$_{3D}$ on Lyft compared to existing techniques.

Large AI Models in Health Informatics: Applications, Challenges, and the Future

Mar 21, 2023

Abstract:Large AI models, or foundation models, are recently emerging models of massive scale, both parameter-wise and data-wise, with magnitudes often reaching beyond billions. Once pretrained, large AI models demonstrate impressive performance in various downstream tasks. A concrete example is the recent debut of ChatGPT, whose capability has captured people's imagination about the far-reaching influence that large AI models can have and their potential to transform different domains of our lives. In health informatics, the advent of large AI models has brought new paradigms for the design of methodologies. The scale of multimodal data in the biomedical and health domain has been ever-expanding, especially since the community embraced the era of deep learning, which provides the ground to develop, validate, and advance large AI models for breakthroughs in health-related areas. This article presents an up-to-date comprehensive review of large AI models, from background to their applications. We identify seven key sectors in which large AI models are applicable and might have substantial influence, including 1) molecular biology and drug discovery; 2) medical diagnosis and decision-making; 3) medical imaging and vision; 4) medical informatics; 5) medical education; 6) public health; and 7) medical robotics. We examine their challenges in health informatics, followed by a critical discussion about potential future directions and pitfalls of large AI models in transforming the field of health informatics.