Pengcheng Zhao

LD-DETR: Loop Decoder DEtection TRansformer for Video Moment Retrieval and Highlight Detection

Jan 18, 2025

Abstract:Video Moment Retrieval and Highlight Detection aim to find corresponding content in the video based on a text query. Existing models usually first use contrastive learning methods to align video and text features, then fuse and extract multimodal information, and finally use a Transformer Decoder to decode multimodal information. However, existing methods face several issues: (1) Overlapping semantic information between different samples in the dataset hinders the model's multimodal aligning performance; (2) Existing models are not able to efficiently extract local features of the video; (3) The Transformer Decoder used by the existing model cannot adequately decode multimodal features. To address the above issues, we proposed the LD-DETR model for Video Moment Retrieval and Highlight Detection tasks. Specifically, we first distilled the similarity matrix into the identity matrix to mitigate the impact of overlapping semantic information. Then, we designed a method that enables convolutional layers to extract multimodal local features more efficiently. Finally, we fed the output of the Transformer Decoder back into itself to adequately decode multimodal information. We evaluated LD-DETR on four public benchmarks and conducted extensive experiments to demonstrate the superiority and effectiveness of our approach. Our model outperforms the State-Of-The-Art models on QVHighlight, Charades-STA and TACoS datasets. Our code is available at https://github.com/qingchen239/ld-detr.

OMG-HD: A High-Resolution AI Weather Model for End-to-End Forecasts from Observations

Dec 24, 2024

Abstract:In recent years, Artificial Intelligence Weather Prediction (AIWP) models have achieved performance comparable to, or even surpassing, traditional Numerical Weather Prediction (NWP) models by leveraging reanalysis data. However, a less-explored approach involves training AIWP models directly on observational data, enhancing computational efficiency and improving forecast accuracy by reducing the uncertainties introduced through data assimilation processes. In this study, we propose OMG-HD, a novel AI-based regional high-resolution weather forecasting model designed to make predictions directly from observational data sources, including surface stations, radar, and satellite, thereby removing the need for operational data assimilation. Our evaluation shows that OMG-HD outperforms both the European Centre for Medium-Range Weather Forecasts (ECMWF)'s high-resolution operational forecasting system, IFS-HRES, and the High-Resolution Rapid Refresh (HRRR) model at lead times of up to 12 hours across the contiguous United States (CONUS) region. We achieve up to a 13% improvement on RMSE for 2-meter temperature, 17% on 10-meter wind speed, 48% on 2-meter specific humidity, and 32% on surface pressure compared to HRRR. Our method shows that it is possible to use AI-driven approaches for rapid weather predictions without relying on NWP-derived weather fields as model input. This is a promising step towards using observational data directly to make operational forecasts with AIWP models.
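The percentages quoted above read as relative RMSE reductions against a baseline forecast. The following is a minimal sketch of how such a figure is commonly computed; the temperature values and the helper names `rmse` and `relative_improvement` are hypothetical and are not taken from the paper or its code.

```python
import math


def rmse(forecast, observed):
    """Root-mean-square error between two equal-length sequences."""
    if len(forecast) != len(observed):
        raise ValueError("sequences must have the same length")
    squared = [(f - o) ** 2 for f, o in zip(forecast, observed)]
    return math.sqrt(sum(squared) / len(squared))


def relative_improvement(rmse_model, rmse_baseline):
    """Fractional RMSE reduction of the model relative to the baseline."""
    return (rmse_baseline - rmse_model) / rmse_baseline


# Hypothetical 2-meter temperature values in kelvin, for illustration only.
observed = [280.0, 281.0, 282.0, 283.0]
baseline = [282.0, 283.0, 284.0, 285.0]  # every value is off by 2 K
model = [281.0, 282.0, 283.0, 284.0]     # every value is off by 1 K

gain = relative_improvement(rmse(model, observed), rmse(baseline, observed))
print(f"RMSE improvement over baseline: {gain:.0%}")
```

With these made-up inputs the baseline RMSE is 2 K and the model RMSE is 1 K, so the reported improvement is 50%.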

Multimodal Class-aware Semantic Enhancement Network for Audio-Visual Video Parsing

Dec 17, 2024

Abstract:The Audio-Visual Video Parsing task aims to recognize and temporally localize all events occurring in either the audio or visual stream, or both. Capturing accurate event semantics for each audio/visual segment is vital. Prior works directly utilize the extracted holistic audio and visual features for intra- and cross-modal temporal interactions. However, each segment may contain multiple events, resulting in semantically mixed holistic features that can lead to semantic interference during intra- or cross-modal interactions: the event semantics of one segment may incorporate semantics of unrelated events from other segments. To address this issue, our method begins with a Class-Aware Feature Decoupling (CAFD) module, which explicitly decouples the semantically mixed features into distinct class-wise features, including multiple event-specific features and a dedicated background feature. The decoupled class-wise features enable our model to selectively aggregate useful semantics for each segment from clearly matched classes contained in other segments, preventing semantic interference from irrelevant classes. Specifically, we further design a Fine-Grained Semantic Enhancement module for encoding intra- and cross-modal relations. It comprises a Segment-wise Event Co-occurrence Modeling (SECM) block and a Local-Global Semantic Fusion (LGSF) block. The SECM exploits inter-class dependencies of concurrent events within the same timestamp with the aid of a new event co-occurrence loss. The LGSF further enhances the event semantics of each segment by incorporating relevant semantics from more informative global video features. Extensive experiments validate the effectiveness of the proposed modules and loss functions, resulting in a new state-of-the-art parsing performance.

ADAF: An Artificial Intelligence Data Assimilation Framework for Weather Forecasting

Nov 25, 2024

Abstract:The forecasting skill of numerical weather prediction (NWP) models critically depends on the accurate initial conditions, also known as analysis, provided by data assimilation (DA). Traditional DA methods often face a trade-off between computational cost and accuracy due to complex linear algebra computations and the high dimensionality of the model, especially in nonlinear systems. Moreover, processing massive data in real-time requires substantial computational resources. To address this, we introduce an artificial intelligence-based data assimilation framework (ADAF) to generate high-quality kilometer-scale analysis. This study is the pioneering work using real-world observations from varied locations and multiple sources to verify the AI method's efficacy in DA, including sparse surface weather observations and satellite imagery. We implemented ADAF for four near-surface variables in the Contiguous United States (CONUS). The results indicate that ADAF surpasses the High Resolution Rapid Refresh Data Assimilation System (HRRRDAS) in accuracy by 16% to 33% for near-surface atmospheric conditions, aligning more closely with actual observations, and can effectively reconstruct extreme events, such as tropical cyclone wind fields. Sensitivity experiments reveal that ADAF can generate high-quality analysis even with low-accuracy backgrounds and extremely sparse surface observations. ADAF can assimilate massive observations within a three-hour window at low computational cost, taking about two seconds on an AMD MI200 graphics processing unit (GPU). ADAF has been shown to be efficient and effective in real-world DA, underscoring its potential role in operational weather forecasting.

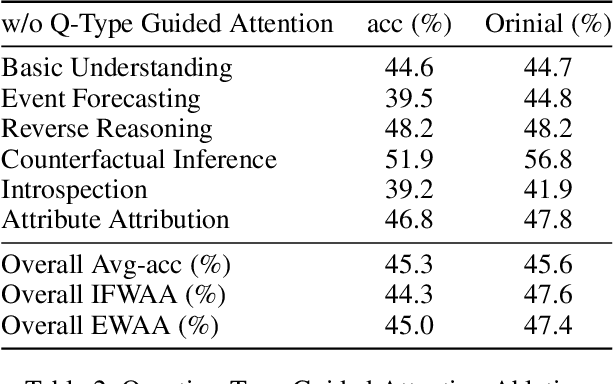

QTG-VQA: Question-Type-Guided Architectural for VideoQA Systems

Sep 14, 2024

Abstract:In the domain of video question answering (VideoQA), the impact of question types on VQA systems, despite its critical importance, has been relatively under-explored to date. However, the richness of question types directly determines the range of concepts a model needs to learn, thereby affecting the upper limit of its learning capability. This paper focuses on exploring the significance of different question types for VQA systems and their impact on performance, revealing a series of issues such as insufficient learning and model degradation due to uneven distribution of question types. In particular, the dependency on temporal information varies significantly across question types, and representing such information is a principal challenge for VideoQA as opposed to ImageQA. To address these challenges, we propose QTG-VQA, a novel architecture that incorporates question-type-guided attention and an adaptive learning mechanism. Specifically, for temporal-type questions, we design a Masking Frame Modeling technique to enhance temporal modeling, aimed at encouraging the model to grasp richer visual-language relationships and manage more intricate temporal dependencies. Furthermore, a novel evaluation metric tailored to question types is introduced. Experimental results confirm the effectiveness of our approach.

WeatherReal: A Benchmark Based on In-Situ Observations for Evaluating Weather Models

Sep 14, 2024

Abstract:In recent years, AI-based weather forecasting models have matched or even outperformed numerical weather prediction systems. However, most of these models have been trained and evaluated on reanalysis datasets like ERA5. These datasets, being products of numerical models, often diverge substantially from actual observations in some crucial variables like near-surface temperature, wind, precipitation and clouds - parameters that hold significant public interest. To address this divergence, we introduce WeatherReal, a novel benchmark dataset for weather forecasting, derived from global near-surface in-situ observations. WeatherReal also features a publicly accessible quality control and evaluation framework. This paper details the sources and processing methodologies underlying the dataset, and further illustrates the advantage of in-situ observations in capturing hyper-local and extreme weather through comparative analyses and case studies. Using WeatherReal, we evaluated several data-driven models and compared them with leading numerical models. Our work aims to advance the AI-based weather forecasting research towards a more application-focused and operation-ready approach.

Audio-Infused Automatic Image Colorization by Exploiting Audio Scene Semantics

Jan 24, 2024

Abstract:Automatic image colorization is inherently an ill-posed problem with uncertainty, which requires an accurate semantic understanding of scenes to estimate reasonable colors for grayscale images. Although recent interaction-based methods have achieved impressive performance, it is still a very difficult task to infer realistic and accurate colors for automatic colorization. To reduce the difficulty of semantic understanding of grayscale scenes, this paper tries to utilize corresponding audio, which naturally contains extra semantic information about the same scene. Specifically, a novel audio-infused automatic image colorization (AIAIC) network is proposed, which consists of three stages. First, we take color image semantics as a bridge and pretrain a colorization network guided by color image semantics. Second, the natural co-occurrence of audio and video is utilized to learn the color semantic correlations between audio and visual scenes. Third, the implicit audio semantic representation is fed into the pretrained network to finally realize the audio-guided colorization. The whole process is trained in a self-supervised manner without human annotation. In addition, an audiovisual colorization dataset is established for training and testing. Experiments demonstrate that audio guidance can effectively improve the performance of automatic colorization, especially for some scenes that are difficult to understand only from visual modality.

Spinal nerve segmentation method and dataset construction in endoscopic surgical scenarios

Jul 20, 2023

Abstract:Endoscopic surgery is currently an important treatment method in the field of spinal surgery, and avoiding damage to the spinal nerves through video guidance is a key challenge. This paper presents the first real-time segmentation method for spinal nerves in endoscopic surgery, which provides crucial navigational information for surgeons. A finely annotated segmentation dataset of approximately 10,000 consecutive frames recorded during surgery is constructed for the first time for this field, addressing the problem of semantic segmentation. Based on this dataset, we propose FUnet (Frame-Unet), which achieves state-of-the-art performance by utilizing inter-frame information and self-attention mechanisms. We also conduct extended experiments on a similar polyp endoscopy video dataset and show that the model has good generalization ability with advantageous performance. The dataset and code of this work are presented at: https://github.com/zzzzzzpc/FUnet.

Safe, Optimal, Real-time Trajectory Planning with a Parallel Constrained Bernstein Algorithm

Mar 03, 2020

Abstract:To move through the world, mobile robots typically use a receding-horizon strategy, wherein they execute an old plan while computing a new plan to incorporate new sensor information. A plan should be dynamically feasible, meaning it obeys constraints like the robot's dynamics and obstacle avoidance; it should have liveness, meaning the robot does not stop to plan so frequently that it cannot accomplish tasks; and it should be optimal, meaning that the robot tries to satisfy a user-specified cost function such as reaching a goal location as quickly as possible. Reachability-based Trajectory Design (RTD) is a planning method that can generate provably dynamically-feasible plans. However, RTD solves a nonlinear polynomial optimization program at each planning iteration, preventing optimality guarantees; furthermore, RTD can struggle with liveness because the robot must brake to a stop when the solver finds local minima or cannot find a feasible solution. This paper proposes RTD*, which certifiably finds the globally optimal plan (if such a plan exists) at each planning iteration. This method is enabled by a novel Parallelized Constrained Bernstein Algorithm (PCBA), which is a branch-and-bound method for polynomial optimization. The contributions of this paper are: the implementation of PCBA; proofs of bounds on the time and memory usage of PCBA; a comparison of PCBA to state of the art solvers; and the demonstration of PCBA/RTD* on a mobile robot. RTD* outperforms RTD in terms of optimality and liveness for real-time planning in a variety of environments with randomly-placed obstacles.
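Branch-and-bound methods built on the Bernstein form generally rely on one property: on the unit interval, a polynomial's values lie between the smallest and largest of its Bernstein coefficients. The sketch below illustrates only that enclosure property for a single-variable polynomial. It is not PCBA itself (which, per the abstract, is parallelized and handles constraints), and the function names and example polynomial are assumptions chosen for illustration.

```python
from math import comb


def bernstein_coefficients(power_coeffs):
    """Convert power-basis coefficients a_0..a_n on [0, 1] to Bernstein form.

    Uses b_i = sum_{j=0}^{i} C(i, j) / C(n, j) * a_j.
    """
    n = len(power_coeffs) - 1
    return [
        sum(comb(i, j) / comb(n, j) * power_coeffs[j] for j in range(i + 1))
        for i in range(n + 1)
    ]


def evaluate(power_coeffs, x):
    """Evaluate the polynomial sum_k a_k * x**k."""
    return sum(a * x ** k for k, a in enumerate(power_coeffs))


# Hypothetical example: p(x) = x^2 - x + 0.25, whose true minimum on [0, 1] is 0.
coeffs = [0.25, -1.0, 1.0]
b = bernstein_coefficients(coeffs)
lower, upper = min(b), max(b)

# Every sampled value of p on [0, 1] falls inside [lower, upper].
samples = [evaluate(coeffs, k / 100) for k in range(101)]
print(f"Bernstein enclosure: [{lower}, {upper}]")
print(f"sampled range:       [{min(samples)}, {max(samples)}]")
```

The enclosure here is valid but not tight: the lower bound is -0.25 while the true minimum is 0. A branch-and-bound scheme would typically subdivide the interval, recompute the coefficients on each piece to tighten the bounds, and discard pieces whose lower bound exceeds the best value found so far.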

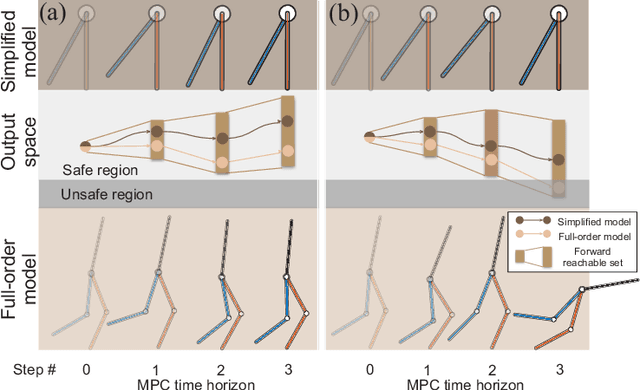

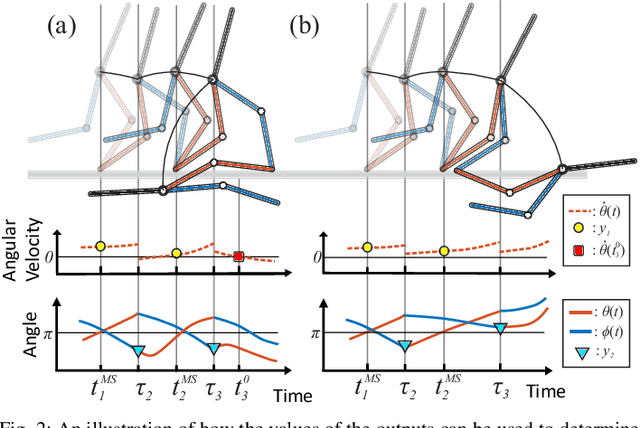

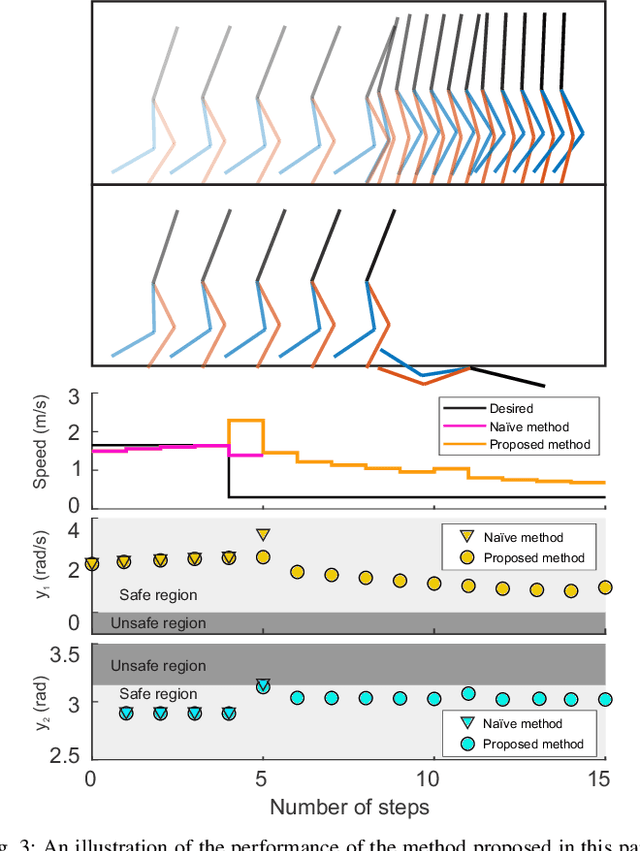

Leveraging the Template and Anchor Framework for Safe, Online Robotic Gait Design

Sep 24, 2019

Abstract:Online control design using a high-fidelity, full-order model for a bipedal robot can be challenging due to the size of the state space of the model. A commonly adopted solution to overcome this challenge is to approximate the full-order model (anchor) with a simplified, reduced-order model (template), while performing control synthesis. Unfortunately it is challenging to make formal guarantees about the safety of an anchor model using a controller designed in an online fashion using a template model. To address this problem, this paper proposes a method to generate safety-preserving controllers for anchor models by performing reachability analysis on template models while bounding the modeling error. This paper describes how this reachable set can be incorporated into a Model Predictive Control framework to select controllers that result in safe walking on the anchor model in an online fashion. The method is illustrated on a 5-link RABBIT model, and is shown to allow the robot to walk safely while utilizing controllers designed in an online fashion.
