Ram Vasudevan

SurfSLAM: Sim-to-Real Underwater Stereo Reconstruction For Real-Time SLAM

Jan 15, 2026

Abstract:Localization and mapping are core perceptual capabilities for underwater robots. Stereo cameras provide a low-cost means of directly estimating metric depth to support these tasks. However, despite recent advances in stereo depth estimation on land, computing depth from image pairs in underwater scenes remains challenging. In underwater environments, images are degraded by light attenuation, visual artifacts, and dynamic lighting conditions. Furthermore, real-world underwater scenes frequently lack the rich texture useful for stereo depth estimation and 3D reconstruction. As a result, stereo estimation networks trained on in-air data cannot transfer directly to the underwater domain. In addition, there is a lack of real-world underwater stereo datasets for supervised training of neural networks. Poor underwater depth estimation is compounded in stereo-based Simultaneous Localization and Mapping (SLAM) algorithms, making it a fundamental challenge for underwater robot perception. To address these challenges, we propose a novel framework that enables sim-to-real training of underwater stereo disparity estimation networks using simulated data and self-supervised finetuning. We leverage our learned depth predictions to develop SurfSLAM, a novel framework for real-time underwater SLAM that fuses stereo cameras with IMU, barometric, and Doppler Velocity Log (DVL) measurements. Lastly, we collect a challenging real-world dataset of shipwreck surveys using an underwater robot. Our dataset features over 24,000 stereo pairs, along with high-quality, dense photogrammetry models and reference trajectories for evaluation. Through extensive experiments, we demonstrate the advantages of the proposed training approach on real-world data for improving stereo estimation in the underwater domain and for enabling accurate trajectory estimation and 3D reconstruction of complex shipwreck sites.
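For readers unfamiliar with why stereo disparity yields metric depth, the sketch below shows the standard rectified pinhole relation, depth = focal length × baseline / disparity. It is a textbook illustration only, not the network or pipeline described in the abstract; the function name and the example focal length, baseline, and disparity values are hypothetical.

```python
def disparity_to_depth(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Convert a disparity (pixels) to metric depth for a rectified stereo pair.

    Assumes an ideal pinhole model; refraction at an underwater housing,
    which the abstract's setting would involve, is ignored here.
    """
    if disparity_px <= 0.0:
        # Zero or negative disparity corresponds to a point at infinity
        # (or an invalid match), so no finite depth can be reported.
        return float("inf")
    return focal_px * baseline_m / disparity_px


# Hypothetical camera: 800 px focal length, 10 cm baseline.
example_depth_m = disparity_to_depth(disparity_px=20.0, focal_px=800.0, baseline_m=0.1)
```

Because depth is inversely proportional to disparity, a fixed disparity error produces a depth error that grows with the square of range, which is one reason degraded underwater imagery is so damaging to downstream mapping.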

SLIM-VDB: A Real-Time 3D Probabilistic Semantic Mapping Framework

Dec 15, 2025

Abstract:This paper introduces SLIM-VDB, a new lightweight semantic mapping system with probabilistic semantic fusion for closed-set or open-set dictionaries. Advances in data structures from the computer graphics community, such as OpenVDB, have demonstrated significantly improved computational and memory efficiency in volumetric scene representation. Although OpenVDB has been used for geometric mapping in robotics applications, semantic mapping for scene understanding with OpenVDB remains unexplored. In addition, existing semantic mapping systems lack support for integrating both fixed-category and open-language label predictions within a single framework. In this paper, we propose a novel 3D semantic mapping system that leverages the OpenVDB data structure and integrates a unified Bayesian update framework for both closed- and open-set semantic fusion. Our proposed framework, SLIM-VDB, achieves a significant reduction in both memory usage and integration time compared to current state-of-the-art semantic mapping approaches, while maintaining comparable mapping accuracy. An open-source C++ codebase with a Python interface is available at https://github.com/umfieldrobotics/slim-vdb.
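The abstract does not spell out the Bayesian update, so the following is only a generic sketch of closed-set semantic fusion: a per-voxel categorical belief is multiplied by each new observation's class likelihood and renormalized. It does not use the SLIM-VDB or OpenVDB APIs; `fuse_label` and the three-class example are hypothetical.

```python
def fuse_label(prior: list[float], likelihood: list[float]) -> list[float]:
    """One recursive Bayes step for a categorical label belief in a single voxel."""
    unnormalized = [p * l for p, l in zip(prior, likelihood)]
    total = sum(unnormalized)
    if total == 0.0:
        # The observation contradicts every class with nonzero prior mass;
        # keep the previous belief rather than divide by zero.
        return list(prior)
    return [u / total for u in unnormalized]


# Start from a uniform belief over three hypothetical classes and fuse
# three per-frame class-probability predictions for the same voxel.
belief = [1.0 / 3.0] * 3
for observation in ([0.7, 0.2, 0.1], [0.6, 0.3, 0.1], [0.2, 0.5, 0.3]):
    belief = fuse_label(belief, observation)
```

Two observations favoring the first class outweigh one favoring the second, so the fused belief stays concentrated on the first class.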

Imaginative World Modeling with Scene Graphs for Embodied Agent Navigation

Aug 09, 2025

Abstract:Semantic navigation requires an agent to navigate toward a specified target in an unseen environment. Employing an imaginative navigation strategy that predicts future scenes before taking action can empower the agent to find the target faster. Inspired by this idea, we propose SGImagineNav, a novel imaginative navigation framework that leverages symbolic world modeling to proactively build a global environmental representation. SGImagineNav maintains an evolving hierarchical scene graph and uses large language models to predict and explore unseen parts of the environment. While existing methods rely solely on past observations, this imaginative scene graph provides richer semantic context, enabling the agent to proactively estimate target locations. Building upon this, SGImagineNav adopts an adaptive navigation strategy that exploits semantic shortcuts when promising and explores unknown areas otherwise to gather additional context. This strategy continuously expands the known environment and accumulates valuable semantic context, ultimately guiding the agent toward the target. SGImagineNav is evaluated in both real-world scenarios and simulation benchmarks. SGImagineNav consistently outperforms previous methods, improving success rate to 65.4 and 66.8 on HM3D and HSSD, respectively, and demonstrating cross-floor and cross-room navigation in real-world environments, underscoring its effectiveness and generalizability.

Riemannian Direct Trajectory Optimization of Rigid Bodies on Matrix Lie Groups

May 05, 2025

Abstract:Designing dynamically feasible trajectories for rigid bodies is a fundamental problem in robotics. Although direct trajectory optimization is widely applied to solve this problem, inappropriate parameterizations of rigid body dynamics often result in slow convergence and violations of the intrinsic topological structure of the rotation group. This paper introduces a Riemannian optimization framework for direct trajectory optimization of rigid bodies. We first use the Lie Group Variational Integrator to formulate the discrete rigid body dynamics on matrix Lie groups. We then derive the closed-form first- and second-order Riemannian derivatives of the dynamics. Finally, this work applies a line-search Riemannian Interior Point Method (RIPM) to perform trajectory optimization with general nonlinear constraints. Because the optimization is performed on matrix Lie groups, it respects the topological structure of the rotation group by construction and is free of singularities. The paper demonstrates that both the derivative evaluations and the Newton steps required to solve the RIPM exhibit linear complexity with respect to the planning horizon and system degrees of freedom. Simulation results illustrate that the proposed method is faster than conventional methods by an order of magnitude in challenging robotics tasks.

Phasing Through the Flames: Rapid Motion Planning with the AGHF PDE for Arbitrary Objective Functions and Constraints

May 02, 2025

Abstract:The generation of optimal trajectories for high-dimensional robotic systems under constraints remains computationally challenging due to the need to simultaneously satisfy dynamic feasibility, input limits, and task-specific objectives while searching over high-dimensional spaces. Recent approaches using the Affine Geometric Heat Flow (AGHF) Partial Differential Equation (PDE) have demonstrated promising results, generating dynamically feasible trajectories for complex systems like the Digit V3 humanoid within seconds. These methods efficiently solve trajectory optimization problems over a two-dimensional domain by evolving an initial trajectory to minimize control effort. However, these AGHF approaches are limited to a single type of optimal control problem (i.e., minimizing the integral of squared control norms) and typically require initial guesses that satisfy constraints to ensure satisfactory convergence. These limitations restrict the potential utility of the AGHF PDE, especially when synthesizing trajectories for robotic systems. This paper generalizes the AGHF formulation to accommodate arbitrary cost functions, significantly expanding the classes of trajectories that can be generated. This work also introduces a Phase 1 - Phase 2 Algorithm that enables the use of constraint-violating initial guesses while guaranteeing satisfactory convergence. The effectiveness of the proposed method is demonstrated through comparative evaluations against state-of-the-art techniques across various dynamical systems and challenging trajectory generation problems. Project Page: https://roahmlab.github.io/BLAZE/

Provably-Safe, Online System Identification

Apr 30, 2025

Abstract:Precise manipulation tasks require accurate knowledge of payload inertial parameters. Unfortunately, identifying these parameters for unknown payloads while ensuring that the robotic system satisfies its input and state constraints and avoids collisions with the environment remains a significant challenge. This paper presents an integrated framework that enables robotic manipulators to safely and automatically identify payload parameters while maintaining operational safety guarantees. The framework consists of two synergistic components: (1) an online trajectory planning and control framework that generates provably-safe exciting trajectories for system identification, which can be tracked while respecting robot constraints and avoiding obstacles, and (2) a robust system identification method that computes rigorous overapproximative bounds on end-effector inertial parameters assuming bounded sensor noise. Experimental validation on a robotic manipulator performing challenging tasks with various unknown payloads demonstrates the framework's effectiveness in establishing accurate parameter bounds while maintaining safety throughout the identification process. The code is available at our project webpage: https://roahmlab.github.io/OnlineSafeSysID/.

These Magic Moments: Differentiable Uncertainty Quantification of Radiance Field Models

Mar 20, 2025

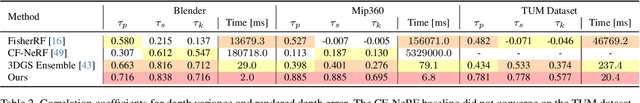

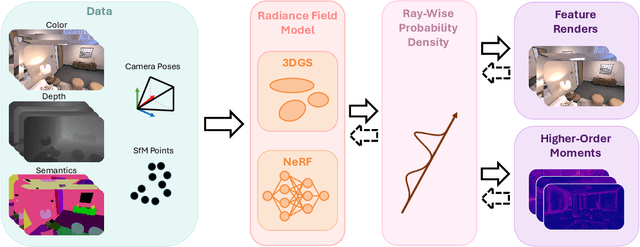

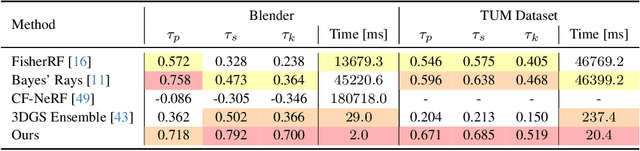

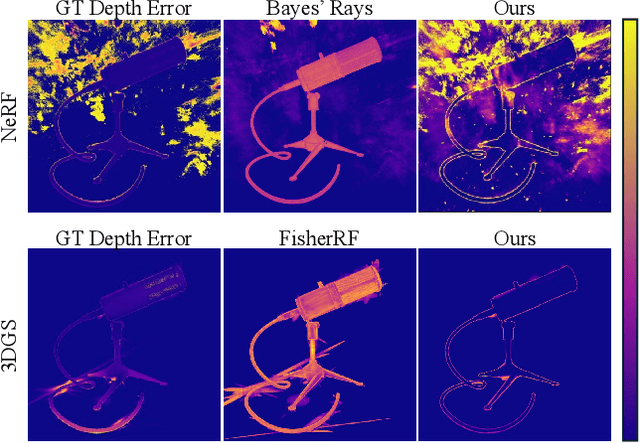

Abstract:This paper introduces a novel approach to uncertainty quantification for radiance fields by leveraging higher-order moments of the rendering equation. Uncertainty quantification is crucial for downstream tasks including view planning and scene understanding, where safety and robustness are paramount. However, the high dimensionality and complexity of radiance fields pose significant challenges for uncertainty quantification, limiting the use of these uncertainty quantification methods in high-speed decision-making. We demonstrate that the probabilistic nature of the rendering process enables efficient and differentiable computation of higher-order moments for radiance field outputs, including color, depth, and semantic predictions. Our method outperforms existing radiance field uncertainty estimation techniques while offering a more direct, computationally efficient, and differentiable formulation without the need for post-processing. Beyond uncertainty quantification, we also illustrate the utility of our approach in downstream applications such as next-best-view (NBV) selection and active ray sampling for neural radiance field training. Extensive experiments on synthetic and real-world scenes confirm the efficacy of our approach, which achieves state-of-the-art performance while maintaining simplicity.
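To make the idea of "moments of the rendering equation" concrete, the sketch below treats the standard volume-rendering quadrature weights along one ray as a discrete distribution over sample depths and computes its first moment (expected depth) and second central moment (depth variance). This is the familiar NeRF-style quadrature used as an illustration under stated assumptions, not necessarily the paper's formulation; all function names and sample values are hypothetical.

```python
import math


def ray_weights(sigmas: list[float], deltas: list[float]) -> list[float]:
    """Volume-rendering weights w_i = T_i * (1 - exp(-sigma_i * delta_i))."""
    weights = []
    transmittance = 1.0  # T_i: fraction of light surviving up to sample i
    for sigma, delta in zip(sigmas, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)
        weights.append(transmittance * alpha)
        transmittance *= 1.0 - alpha
    return weights


def depth_mean_and_variance(sigmas, deltas, depths):
    """First moment and second central moment of depth along a single ray."""
    weights = ray_weights(sigmas, deltas)
    total = sum(weights)
    if total == 0.0:
        raise ValueError("ray hits no density; depth moments are undefined")
    probs = [w / total for w in weights]  # normalize to a distribution
    mean = sum(p * t for p, t in zip(probs, depths))
    second = sum(p * t * t for p, t in zip(probs, depths))
    return mean, max(second - mean * mean, 0.0)


depths = [1.0, 2.0, 3.0, 4.0]
deltas = [1.0, 1.0, 1.0, 1.0]
# A sharp surface at depth 3 versus a diffuse, fog-like density.
sharp_mean, sharp_var = depth_mean_and_variance([0.0, 0.0, 50.0, 0.0], deltas, depths)
fog_mean, fog_var = depth_mean_and_variance([0.3, 0.3, 0.3, 0.3], deltas, depths)
```

The sharp surface yields near-zero variance while the diffuse density yields a large one. Every operation here is a smooth function of the densities, which is what makes moment-based uncertainty of this kind differentiable.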

DEFT: Differentiable Branched Discrete Elastic Rods for Modeling Furcated DLOs in Real-Time

Feb 20, 2025

Abstract:Autonomous wire harness assembly requires robots to manipulate complex branched cables with high precision and reliability. A key challenge in automating this process is predicting how these flexible and branched structures behave under manipulation. Without accurate predictions, it is difficult for robots to reliably plan or execute assembly operations. While existing research has made progress in modeling single-threaded Deformable Linear Objects (DLOs), extending these approaches to Branched Deformable Linear Objects (BDLOs) presents fundamental challenges. The junction points in BDLOs create complex force interactions and strain propagation patterns that cannot be adequately captured by simply connecting multiple single-DLO models. To address these challenges, this paper presents Differentiable discrete branched Elastic rods for modeling Furcated DLOs in real-Time (DEFT), a novel framework that combines a differentiable physics-based model with a learning framework to: 1) accurately model BDLO dynamics, including dynamic propagation at junction points and grasping in the middle of a BDLO, 2) achieve efficient computation for real-time inference, and 3) enable planning to demonstrate dexterous BDLO manipulation. A comprehensive series of real-world experiments demonstrates DEFT's efficacy in terms of accuracy, computational speed, and generalizability compared to state-of-the-art alternatives. Project page: https://roahmlab.github.io/DEFT/.

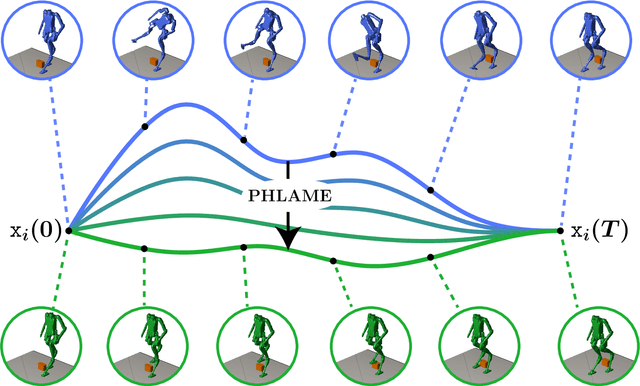

Bring the Heat: Rapid Trajectory Optimization with Pseudospectral Techniques and the Affine Geometric Heat Flow Equation

Nov 20, 2024

Abstract:Generating optimal trajectories for high-dimensional robotic systems in a time-efficient manner while adhering to constraints is a challenging task. To address this challenge, this paper introduces PHLAME, which applies pseudospectral collocation and spatial vector algebra to efficiently solve the Affine Geometric Heat Flow (AGHF) Partial Differential Equation (PDE) for trajectory optimization. Unlike traditional PDE approaches like the Hamilton-Jacobi-Bellman (HJB) PDE, which solve for a function over the entire state space, computing a solution to the AGHF PDE scales more efficiently because its solution is defined over a two-dimensional domain, thereby avoiding the intractability of state-space scaling. To solve the AGHF, one usually applies the Method of Lines (MOL), which works by discretizing one variable of the AGHF PDE, effectively converting the PDE into a system of ordinary differential equations (ODEs) that can be solved using standard time-integration methods. Though powerful, this method requires a fine discretization to generate accurate solutions and still requires evaluating the AGHF PDE, which can be computationally expensive for high-dimensional systems. PHLAME overcomes this deficiency by using a pseudospectral method, which reduces the number of function evaluations required to yield a high-accuracy solution, thereby allowing it to scale efficiently to high-dimensional robotic systems. To further increase computational speed, this paper presents analytical expressions for the AGHF and its Jacobian, both of which can be computed efficiently using rigid body dynamics algorithms. The proposed method, PHLAME, is tested across various dynamical systems, with and without obstacles, and compared to a number of state-of-the-art techniques. PHLAME generates trajectories for a 44-dimensional state-space system in $\sim3$ seconds, much faster than current state-of-the-art techniques.
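As a rough illustration of the Method of Lines mentioned in the abstract, the sketch below applies it to the plain 1D heat equation u_t = u_xx, which stands in for the AGHF PDE (the AGHF itself is not defined in the abstract). Space is discretized with central differences, turning the PDE into a system of ODEs that is then integrated with explicit Euler. The grid size, step-size rule, and function names are assumptions made for this example.

```python
import math


def heat_rhs(u: list[float], dx: float) -> list[float]:
    """Semi-discrete right-hand side: central-difference u_xx at interior nodes."""
    du = [0.0] * len(u)  # boundary nodes stay fixed (Dirichlet u = 0)
    for i in range(1, len(u) - 1):
        du[i] = (u[i - 1] - 2.0 * u[i] + u[i + 1]) / (dx * dx)
    return du


def solve_heat_mol(n_points: int = 21, t_final: float = 0.1):
    """Integrate u_t = u_xx on [0, 1] with u(x, 0) = sin(pi * x)."""
    dx = 1.0 / (n_points - 1)
    # Explicit Euler is stable only for dt <= dx^2 / 2, so a finer spatial
    # grid forces many more time steps: the cost the abstract alludes to.
    steps = math.ceil(t_final / (0.4 * dx * dx))
    dt = t_final / steps
    xs = [i * dx for i in range(n_points)]
    u = [math.sin(math.pi * x) for x in xs]
    u[0] = u[-1] = 0.0
    for _ in range(steps):
        du = heat_rhs(u, dx)
        u = [ui + dt * dui for ui, dui in zip(u, du)]
    return xs, u


xs, u = solve_heat_mol()
mid = len(u) // 2
exact_mid = math.exp(-math.pi ** 2 * 0.1)  # analytic solution at x = 0.5
```

With 21 nodes the midpoint value agrees with the analytic solution to within about one percent. A pseudospectral discretization, by contrast, typically reaches comparable accuracy on smooth problems with far fewer nodes.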

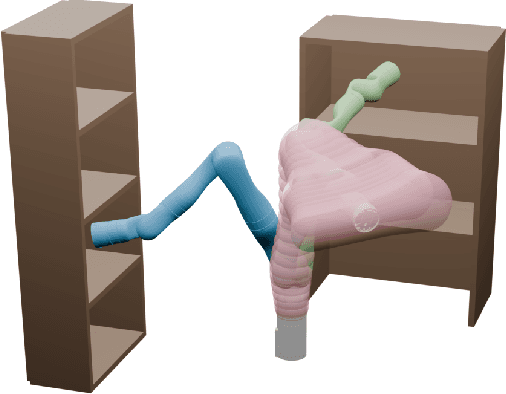

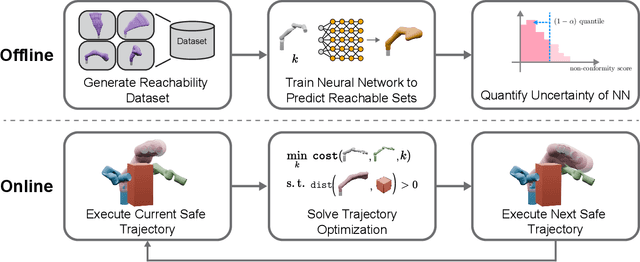

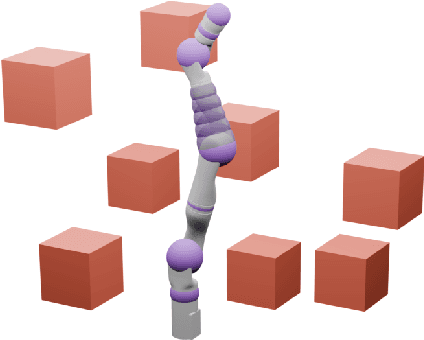

Conformalized Reachable Sets for Obstacle Avoidance With Spheres

Oct 13, 2024

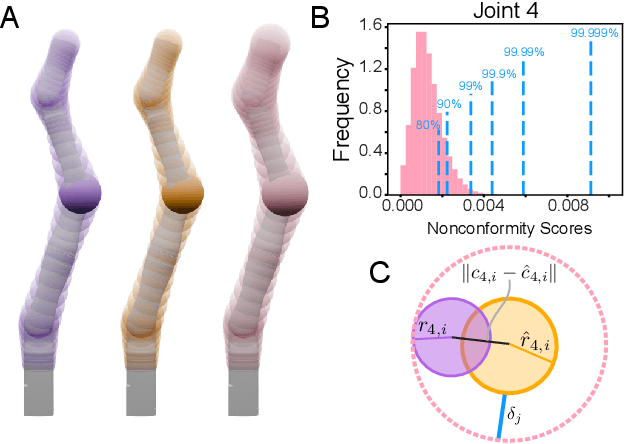

Abstract:Safe motion planning algorithms are necessary for deploying autonomous robots in unstructured environments. Motion plans must be safe to ensure that the robot does not harm humans or damage any nearby objects. Generating these motion plans in real-time is also important to ensure that the robot can adapt to sudden changes in its environment. Many trajectory optimization methods introduce heuristics that balance safety and real-time performance, potentially increasing the risk of the robot colliding with its environment. This paper addresses this challenge by proposing Conformalized Reachable Sets for Obstacle Avoidance With Spheres (CROWS). CROWS is a novel real-time, receding-horizon trajectory planner that generates probabilistically-safe motion plans. Offline, CROWS learns a novel neural network-based representation of a sphere-based reachable set that overapproximates the swept volume of the robot's motion. CROWS then uses conformal prediction to compute a confidence bound that provides a probabilistic safety guarantee on the learned reachable set. At runtime, CROWS performs trajectory optimization to select a trajectory that is probabilistically-guaranteed to be collision-free. We demonstrate that CROWS outperforms a variety of state-of-the-art methods in solving challenging motion planning tasks in cluttered environments while remaining collision-free. Code, data, and video demonstrations can be found at https://roahmlab.github.io/crows/
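The conformal prediction step can be illustrated with the generic split-conformal recipe: given nonconformity scores from a held-out calibration set (for instance, how far a learned sphere underestimates the true radius), take the ceil((n + 1)(1 - alpha))-th smallest score as a bound that holds with probability at least 1 - alpha on a new exchangeable sample. The abstract does not state which score CROWS uses, so the scores and the `conformal_bound` helper below are hypothetical.

```python
import math


def conformal_bound(scores: list[float], alpha: float) -> float:
    """Split-conformal quantile of calibration scores at miscoverage level alpha."""
    n = len(scores)
    rank = math.ceil((n + 1) * (1.0 - alpha))
    if rank > n:
        # Too few calibration samples to certify this coverage level.
        return float("inf")
    return sorted(scores)[rank - 1]


# Hypothetical calibration errors (arbitrary units), deliberately unsorted.
calibration_scores = [7, 3, 15, 1, 19, 11, 5, 9, 13, 17, 2, 4, 6, 8, 10, 12, 14, 16, 18]
bound = conformal_bound(calibration_scores, alpha=0.25)  # 75% coverage target
```

In a planner, a bound like this would be added to the predicted radius so the inflated sphere covers the true swept volume with the stated probability. The guarantee is marginal over calibration and test draws rather than a worst-case guarantee.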
