Katherine A. Skinner

SurfSLAM: Sim-to-Real Underwater Stereo Reconstruction For Real-Time SLAM

Jan 15, 2026Abstract:Localization and mapping are core perceptual capabilities for underwater robots. Stereo cameras provide a low-cost means of directly estimating metric depth to support these tasks. However, despite recent advances in stereo depth estimation on land, computing depth from image pairs in underwater scenes remains challenging. In underwater environments, images are degraded by light attenuation, visual artifacts, and dynamic lighting conditions. Furthermore, real-world underwater scenes frequently lack rich texture useful for stereo depth estimation and 3D reconstruction. As a result, stereo estimation networks trained on in-air data cannot transfer directly to the underwater domain. In addition, there is a lack of real-world underwater stereo datasets for supervised training of neural networks. Poor underwater depth estimation is compounded in stereo-based Simultaneous Localization and Mapping (SLAM) algorithms, making it a fundamental challenge for underwater robot perception. To address these challenges, we propose a novel framework that enables sim-to-real training of underwater stereo disparity estimation networks using simulated data and self-supervised finetuning. We leverage our learned depth predictions to develop \algname, a novel framework for real-time underwater SLAM that fuses stereo cameras with IMU, barometric, and Doppler Velocity Log (DVL) measurements. Lastly, we collect a challenging real-world dataset of shipwreck surveys using an underwater robot. Our dataset features over 24,000 stereo pairs, along with high-quality, dense photogrammetry models and reference trajectories for evaluation. Through extensive experiments, we demonstrate the advantages of the proposed training approach on real-world data for improving stereo estimation in the underwater domain and for enabling accurate trajectory estimation and 3D reconstruction of complex shipwreck sites.

SLIM-VDB: A Real-Time 3D Probabilistic Semantic Mapping Framework

Dec 15, 2025Abstract:This paper introduces SLIM-VDB, a new lightweight semantic mapping system with probabilistic semantic fusion for closed-set or open-set dictionaries. Advances in data structures from the computer graphics community, such as OpenVDB, have demonstrated significantly improved computational and memory efficiency in volumetric scene representation. Although OpenVDB has been used for geometric mapping in robotics applications, semantic mapping for scene understanding with OpenVDB remains unexplored. In addition, existing semantic mapping systems lack support for integrating both fixed-category and open-language label predictions within a single framework. In this paper, we propose a novel 3D semantic mapping system that leverages the OpenVDB data structure and integrates a unified Bayesian update framework for both closed- and open-set semantic fusion. Our proposed framework, SLIM-VDB, achieves significant reduction in both memory and integration times compared to current state-of-the-art semantic mapping approaches, while maintaining comparable mapping accuracy. An open-source C++ codebase with a Python interface is available at https://github.com/umfieldrobotics/slim-vdb.

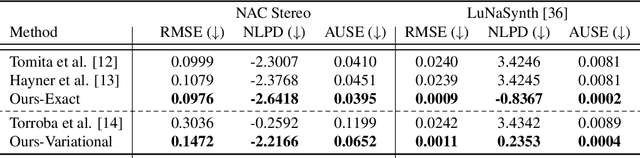

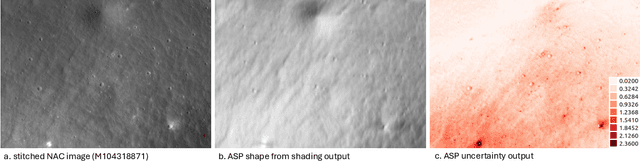

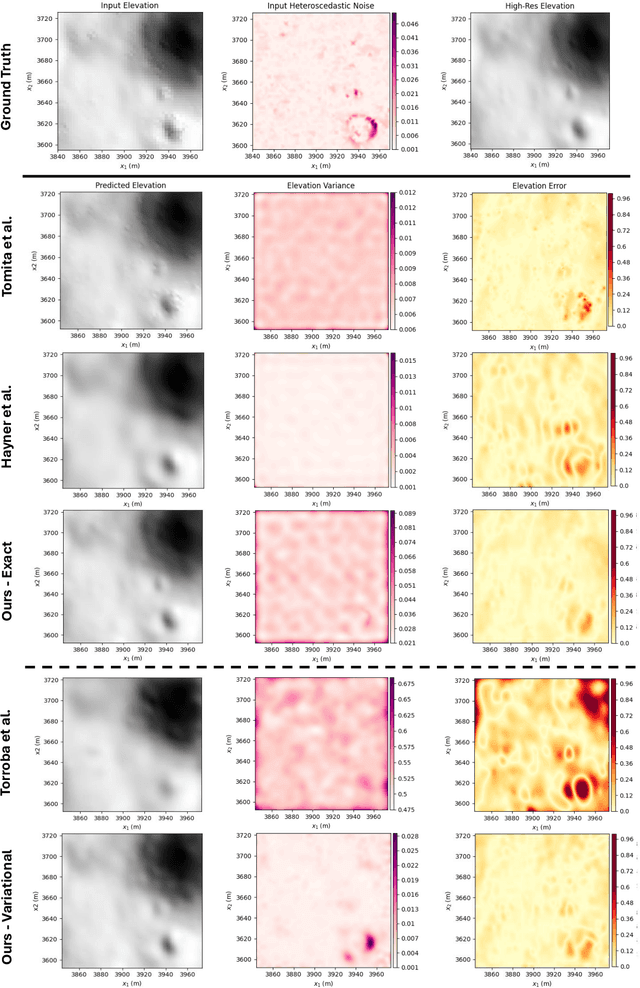

A Stochastic Approach to Terrain Maps for Safe Lunar Landing

Dec 12, 2025

Abstract:Safely landing on the lunar surface is a challenging task, especially in the heavily-shadowed South Pole region where traditional vision-based hazard detection methods are not reliable. The potential existence of valuable resources at the lunar South Pole has made landing in that region a high priority for many space agencies and commercial companies. However, relying on a LiDAR for hazard detection during descent is risky, as this technology is fairly untested in the lunar environment. There exists a rich log of lunar surface data from the Lunar Reconnaissance Orbiter (LRO), which could be used to create informative prior maps of the surface before descent. In this work, we propose a method for generating stochastic elevation maps from LRO data using Gaussian processes (GPs), which are a powerful Bayesian framework for non-parametric modeling that produce accompanying uncertainty estimates. In high-risk environments such as autonomous spaceflight, interpretable estimates of terrain uncertainty are critical. However, no previous approaches to stochastic elevation mapping have taken LRO Digital Elevation Model (DEM) confidence maps into account, despite this data containing key information about the quality of the DEM in different areas. To address this gap, we introduce a two-stage GP model in which a secondary GP learns spatially varying noise characteristics from DEM confidence data. This heteroscedastic information is then used to inform the noise parameters for the primary GP, which models the lunar terrain. Additionally, we use stochastic variational GPs to enable scalable training. By leveraging GPs, we are able to more accurately model the impact of heteroscedastic sensor noise on the resulting elevation map. As a result, our method produces more informative terrain uncertainty, which can be used for downstream tasks such as hazard detection and safe landing site selection.

Field Report on Ground Penetrating Radar for Localization at the Mars Desert Research Station

Apr 21, 2025Abstract:In this field report, we detail the lessons learned from our field expedition to collect Ground Penetrating Radar (GPR) data in a Mars analog environment for the purpose of validating GPR localization techniques in rugged environments. Planetary rovers are already equipped with GPR for geologic subsurface characterization. GPR has been successfully used to localize vehicles on Earth, but it has not yet been explored as another modality for localization on a planetary rover. Leveraging GPR for localization can aid in efficient and robust rover pose estimation. In order to demonstrate localizing GPR in a Mars analog environment, we collected over 50 individual survey trajectories during a two-week period at the Mars Desert Research Station (MDRS). In this report, we discuss our methodology, lessons learned, and opportunities for future work.

SonarSplat: Novel View Synthesis of Imaging Sonar via Gaussian Splatting

Mar 31, 2025

Abstract:In this paper, we present SonarSplat, a novel Gaussian splatting framework for imaging sonar that demonstrates realistic novel view synthesis and models acoustic streaking phenomena. Our method represents the scene as a set of 3D Gaussians with acoustic reflectance and saturation properties. We develop a novel method to efficiently rasterize learned Gaussians to produce a range/azimuth image that is faithful to the acoustic image formation model of imaging sonar. In particular, we develop a novel approach to model azimuth streaking in a Gaussian splatting framework. We evaluate SonarSplat using real-world datasets of sonar images collected from an underwater robotic platform in a controlled test tank and in a real-world river environment. Compared to the state-of-the-art, SonarSplat offers improved image synthesis capabilities (+2.5 dB PSNR). We also demonstrate that SonarSplat can be leveraged for azimuth streak removal and 3D scene reconstruction.

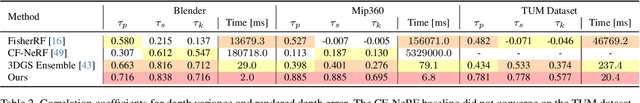

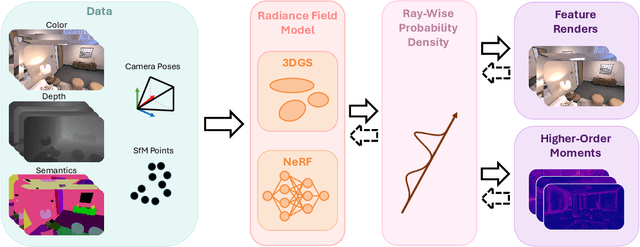

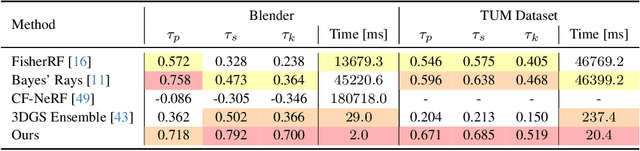

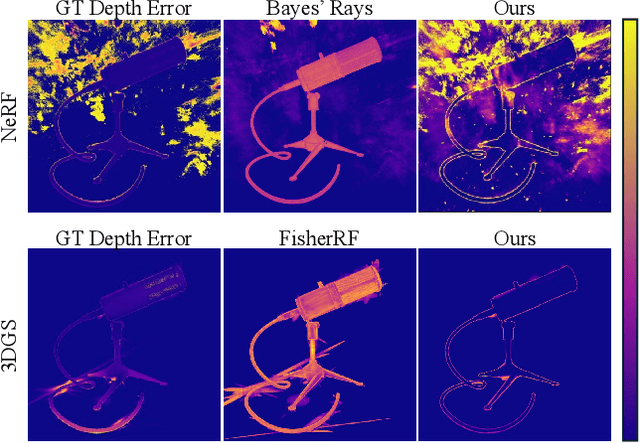

These Magic Moments: Differentiable Uncertainty Quantification of Radiance Field Models

Mar 20, 2025

Abstract:This paper introduces a novel approach to uncertainty quantification for radiance fields by leveraging higher-order moments of the rendering equation. Uncertainty quantification is crucial for downstream tasks including view planning and scene understanding, where safety and robustness are paramount. However, the high dimensionality and complexity of radiance fields pose significant challenges for uncertainty quantification, limiting the use of these uncertainty quantification methods in high-speed decision-making. We demonstrate that the probabilistic nature of the rendering process enables efficient and differentiable computation of higher-order moments for radiance field outputs, including color, depth, and semantic predictions. Our method outperforms existing radiance field uncertainty estimation techniques while offering a more direct, computationally efficient, and differentiable formulation without the need for post-processing. Beyond uncertainty quantification, we also illustrate the utility of our approach in downstream applications such as next-best-view (NBV) selection and active ray sampling for neural radiance field training. Extensive experiments on synthetic and real-world scenes confirm the efficacy of our approach, which achieves state-of-the-art performance while maintaining simplicity.

MarsLGPR: Mars Rover Localization with Ground Penetrating Radar

Mar 06, 2025Abstract:In this work, we propose the use of Ground Penetrating Radar (GPR) for rover localization on Mars. Precise pose estimation is an important task for mobile robots exploring planetary surfaces, as they operate in GPS-denied environments. Although visual odometry provides accurate localization, it is computationally expensive and can fail in dim or high-contrast lighting. Wheel encoders can also provide odometry estimation, but are prone to slipping on the sandy terrain encountered on Mars. Although traditionally a scientific surveying sensor, GPR has been used on Earth for terrain classification and localization through subsurface feature matching. The Perseverance rover and the upcoming ExoMars rover have GPR sensors already equipped to aid in the search of water and mineral resources. We propose to leverage GPR to aid in Mars rover localization. Specifically, we develop a novel GPR-based deep learning model that predicts 1D relative pose translation. We fuse our GPR pose prediction method with inertial and wheel encoder data in a filtering framework to output rover localization. We perform experiments in a Mars analog environment and demonstrate that our GPR-based displacement predictions both outperform wheel encoders and improve multi-modal filtering estimates in high-slip environments. Lastly, we present the first dataset aimed at GPR-based localization in Mars analog environments, which will be made publicly available upon publication.

OceanSim: A GPU-Accelerated Underwater Robot Perception Simulation Framework

Mar 03, 2025Abstract:Underwater simulators offer support for building robust underwater perception solutions. Significant work has recently been done to develop new simulators and to advance the performance of existing underwater simulators. Still, there remains room for improvement on physics-based underwater sensor modeling and rendering efficiency. In this paper, we propose OceanSim, a high-fidelity GPU-accelerated underwater simulator to address this research gap. We propose advanced physics-based rendering techniques to reduce the sim-to-real gap for underwater image simulation. We develop OceanSim to fully leverage the computing advantages of GPUs and achieve real-time imaging sonar rendering and fast synthetic data generation. We evaluate the capabilities and realism of OceanSim using real-world data to provide qualitative and quantitative results. The project page for OceanSim is https://umfieldrobotics.github.io/OceanSim.

LiHi-GS: LiDAR-Supervised Gaussian Splatting for Highway Driving Scene Reconstruction

Dec 19, 2024

Abstract:Photorealistic 3D scene reconstruction plays an important role in autonomous driving, enabling the generation of novel data from existing datasets to simulate safety-critical scenarios and expand training data without additional acquisition costs. Gaussian Splatting (GS) facilitates real-time, photorealistic rendering with an explicit 3D Gaussian representation of the scene, providing faster processing and more intuitive scene editing than the implicit Neural Radiance Fields (NeRFs). While extensive GS research has yielded promising advancements in autonomous driving applications, they overlook two critical aspects: First, existing methods mainly focus on low-speed and feature-rich urban scenes and ignore the fact that highway scenarios play a significant role in autonomous driving. Second, while LiDARs are commonplace in autonomous driving platforms, existing methods learn primarily from images and use LiDAR only for initial estimates or without precise sensor modeling, thus missing out on leveraging the rich depth information LiDAR offers and limiting the ability to synthesize LiDAR data. In this paper, we propose a novel GS method for dynamic scene synthesis and editing with improved scene reconstruction through LiDAR supervision and support for LiDAR rendering. Unlike prior works that are tested mostly on urban datasets, to the best of our knowledge, we are the first to focus on the more challenging and highly relevant highway scenes for autonomous driving, with sparse sensor views and monotone backgrounds.

VAIR: Visuo-Acoustic Implicit Representations for Low-Cost, Multi-Modal Transparent Surface Reconstruction in Indoor Scenes

Nov 07, 2024

Abstract:Mobile robots operating indoors must be prepared to navigate challenging scenes that contain transparent surfaces. This paper proposes a novel method for the fusion of acoustic and visual sensing modalities through implicit neural representations to enable dense reconstruction of transparent surfaces in indoor scenes. We propose a novel model that leverages generative latent optimization to learn an implicit representation of indoor scenes consisting of transparent surfaces. We demonstrate that we can query the implicit representation to enable volumetric rendering in image space or 3D geometry reconstruction (point clouds or mesh) with transparent surface prediction. We evaluate our method's effectiveness qualitatively and quantitatively on a new dataset collected using a custom, low-cost sensing platform featuring RGB-D cameras and ultrasonic sensors. Our method exhibits significant improvement over state-of-the-art for transparent surface reconstruction.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge