Tiancheng Zhang

VISTA: Knowledge-Driven Interpretable Vessel Trajectory Imputation via Large Language Models

Jan 11, 2026

Abstract:The Automatic Identification System provides critical information for maritime navigation and safety, yet its trajectories are often incomplete due to signal loss or deliberate tampering. Existing imputation methods emphasize trajectory recovery, paying limited attention to interpretability and failing to provide underlying knowledge that benefits downstream tasks such as anomaly detection and route planning. We propose knowledge-driven interpretable vessel trajectory imputation (VISTA), the first trajectory imputation framework that offers interpretability while simultaneously providing underlying knowledge to support downstream analysis. Specifically, we first define underlying knowledge as a combination of Structured Data-derived Knowledge (SDK) distilled from AIS data and Implicit LLM Knowledge acquired from large-scale Internet corpora. Second, to manage and leverage the SDK effectively at scale, we develop a data-knowledge-data loop that employs a Structured Data-derived Knowledge Graph for SDK extraction and knowledge-driven trajectory imputation. Third, to efficiently process large-scale AIS data, we introduce a workflow management layer that coordinates the end-to-end pipeline, enabling parallel knowledge extraction and trajectory imputation with anomaly handling and redundancy elimination. Experiments on two large AIS datasets show that VISTA is capable of state-of-the-art imputation accuracy and computational efficiency, improving over state-of-the-art baselines by 5%-94% and reducing time cost by 51%-93%, while producing interpretable knowledge cues that benefit downstream tasks. The source code and implementation details of VISTA are publicly available.

MSC-180: A Benchmark for Automated Formal Theorem Proving from Mathematical Subject Classification

Dec 20, 2025

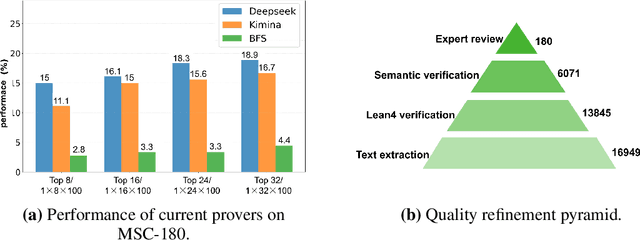

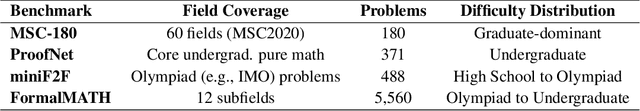

Abstract:Automated Theorem Proving (ATP) represents a core research direction in artificial intelligence for achieving formal reasoning and verification, playing a significant role in advancing machine intelligence. However, current large language model (LLM)-based theorem provers suffer from limitations such as restricted domain coverage and weak generalization in mathematical reasoning. To address these issues, we propose MSC-180, a benchmark for evaluation based on the MSC2020 mathematical subject classification. It comprises 180 formal verification problems, 3 advanced problems from each of 60 mathematical branches, spanning from undergraduate to graduate levels. Each problem has undergone multiple rounds of verification and refinement by domain experts to ensure formal accuracy. Evaluations of state-of-the-art LLM-based theorem provers under the pass@32 setting reveal that the best model achieves only an 18.89% overall pass rate, with prominent issues including significant domain bias (maximum domain coverage 41.7%) and a difficulty gap (significantly lower pass rates on graduate-level problems). To further quantify performance variability across mathematical domains, we introduce the coefficient of variation (CV) as an evaluation metric. The observed CV values are 4-6 times higher than the statistical high-variability threshold, indicating that the models still rely on pattern matching from training corpora rather than possessing transferable reasoning mechanisms and systematic generalization capabilities. MSC-180, together with its multi-dimensional evaluation framework, provides a discriminative and systematic benchmark for driving the development of next-generation AI systems with genuine mathematical reasoning abilities.
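The coefficient of variation mentioned in the abstract above is the standard deviation divided by the mean. A minimal sketch of how it could be computed over per-domain pass rates follows. The function name, the choice of population (rather than sample) standard deviation, and the example rates are all assumptions for illustration; none of them come from the paper.

```python
from statistics import mean, pstdev


def coefficient_of_variation(pass_rates):
    """Return the population standard deviation divided by the mean.

    `pass_rates` is a sequence of per-domain pass rates (fractions in [0, 1]).
    Using the population standard deviation is an assumption; the paper may
    use the sample standard deviation instead.
    """
    mu = mean(pass_rates)
    if mu == 0:
        raise ValueError("CV is undefined when the mean pass rate is zero")
    return pstdev(pass_rates) / mu


# Hypothetical per-domain pass rates, NOT taken from the paper.
example_rates = [0.0, 0.0, 1 / 3, 2 / 3, 0.0, 1.0]
print(f"CV = {coefficient_of_variation(example_rates):.3f}")
```

A larger CV means pass rates are spread more unevenly across domains relative to their average, which is the kind of domain bias the abstract describes.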

Advancing Knowledge Tracing by Exploring Follow-up Performance Trends

Aug 11, 2025

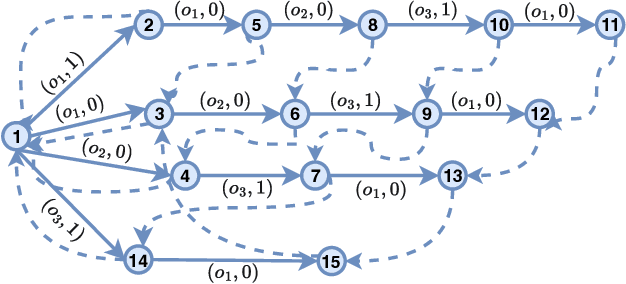

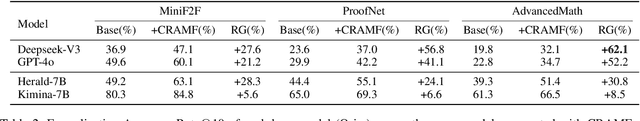

Abstract:Intelligent Tutoring Systems (ITS), such as Massive Open Online Courses, offer new opportunities for human learning. At the core of such systems, knowledge tracing (KT) predicts students' future performance by analyzing their historical learning activities, enabling an accurate evaluation of students' knowledge states over time. We show that existing KT methods often encounter correlation conflicts when analyzing the relationships between historical learning sequences and future performance. To address such conflicts, we propose to extract so-called Follow-up Performance Trends (FPTs) from historical ITS data and to incorporate them into KT. We propose a method called Forward-Looking Knowledge Tracing (FINER) that combines historical learning sequences with FPTs to enhance student performance prediction accuracy. FINER constructs learning patterns that facilitate the retrieval of FPTs from historical ITS data in linear time; FINER includes a novel similarity-aware attention mechanism that aggregates FPTs based on both frequency and contextual similarity; and FINER offers means of combining FPTs and historical learning sequences to enable more accurate prediction of student future performance. Experiments on six real-world datasets show that FINER can outperform ten state-of-the-art KT methods, increasing accuracy by 8.74% to 84.85%.

Automated Formalization via Conceptual Retrieval-Augmented LLMs

Aug 09, 2025

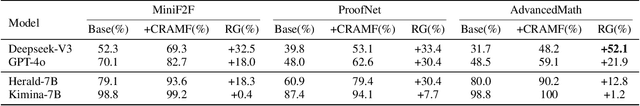

Abstract:Interactive theorem provers (ITPs) require manual formalization, which is labor-intensive and demands expert knowledge. While automated formalization offers a potential solution, it faces two major challenges: model hallucination (e.g., undefined predicates, symbol misuse, and version incompatibility) and the semantic gap caused by ambiguous or missing premises in natural language descriptions. To address these issues, we propose CRAMF, a Concept-driven Retrieval-Augmented Mathematical Formalization framework. CRAMF enhances LLM-based autoformalization by retrieving formal definitions of core mathematical concepts, providing contextual grounding during code generation. However, applying retrieval-augmented generation (RAG) in this setting is non-trivial due to the lack of structured knowledge bases, the polymorphic nature of mathematical concepts, and the high precision required in formal retrieval. We introduce a framework for automatically constructing a concept-definition knowledge base from Mathlib4, the standard mathematical library for the Lean 4 theorem prover, indexing over 26,000 formal definitions and 1,000+ core mathematical concepts. To address conceptual polymorphism, we propose contextual query augmentation with domain- and application-level signals. In addition, we design a dual-channel hybrid retrieval strategy with reranking to ensure accurate and relevant definition retrieval. Experiments on miniF2F, ProofNet, and our newly proposed AdvancedMath benchmark show that CRAMF can be seamlessly integrated into LLM-based autoformalizers, yielding consistent improvements in translation accuracy, achieving up to 62.1% and an average of 29.9% relative improvement.

MetaEformer: Unveiling and Leveraging Meta-patterns for Complex and Dynamic Systems Load Forecasting

Jun 15, 2025

Abstract:Time series forecasting is a critical and practical problem in many real-world applications, especially for industrial scenarios, where load forecasting underpins the intelligent operation of modern systems like clouds, power grids and traffic networks. However, the inherent complexity and dynamics of these systems present significant challenges. Although advances in methods such as pattern recognition and anti-non-stationarity have led to performance gains, current methods fail to consistently ensure effectiveness across various system scenarios due to the intertwined issues of complex patterns, concept-drift, and few-shot problems. To address these challenges simultaneously, we introduce a novel scheme centered on the fundamental waveform, a.k.a. the meta-pattern. Specifically, we develop a unique Meta-pattern Pooling mechanism to purify and maintain meta-patterns, capturing the nuanced nature of system loads. Complementing this, the proposed Echo mechanism adaptively leverages the meta-patterns, enabling a flexible and precise pattern reconstruction. Our Meta-pattern Echo transformer (MetaEformer) seamlessly incorporates these mechanisms with the transformer-based predictor, offering end-to-end efficiency and interpretability of core processes. Demonstrating superior performance across eight benchmarks under three system scenarios, MetaEformer marks a significant advantage in accuracy, with a 37% relative improvement over fifteen state-of-the-art baselines.

Autoformalization in the Era of Large Language Models: A Survey

May 29, 2025

Abstract:Autoformalization, the process of transforming informal mathematical propositions into verifiable formal representations, is a foundational task in automated theorem proving, offering a new perspective on the use of mathematics in both theoretical and applied domains. Driven by the rapid progress in artificial intelligence, particularly large language models (LLMs), this field has witnessed substantial growth, bringing both new opportunities and unique challenges. In this survey, we provide a comprehensive overview of recent advances in autoformalization from both mathematical and LLM-centric perspectives. We examine how autoformalization is applied across various mathematical domains and levels of difficulty, and analyze the end-to-end workflow from data preprocessing to model design and evaluation. We further explore the emerging role of autoformalization in enhancing the verifiability of LLM-generated outputs, highlighting its potential to improve both the trustworthiness and reasoning capabilities of LLMs. Finally, we summarize key open-source models and datasets supporting current research, and discuss open challenges and promising future directions for the field.

Differentiable Discrete Elastic Rods for Real-Time Modeling of Deformable Linear Objects

Jun 09, 2024

Abstract:This paper addresses the task of modeling Deformable Linear Objects (DLOs), such as ropes and cables, during dynamic motion over long time horizons. This task presents significant challenges due to the complex dynamics of DLOs. To address these challenges, this paper proposes differentiable Discrete Elastic Rods For deformable linear Objects with Real-time Modeling (DEFORM), a novel framework that combines a differentiable physics-based model with a learning framework to model DLOs accurately and in real-time. The performance of DEFORM is evaluated in an experimental setup involving two industrial robots and a variety of sensors. A comprehensive series of experiments demonstrates the efficacy of DEFORM in terms of accuracy, computational speed, and generalizability when compared to state-of-the-art alternatives. To further demonstrate the utility of DEFORM, this paper integrates it into a perception pipeline and illustrates its superior performance when compared to state-of-the-art methods while tracking a DLO even in the presence of occlusions. Finally, this paper illustrates the superior performance of DEFORM when compared to state-of-the-art methods when it is applied to perform autonomous planning and control of DLOs.

Bayesian Personalized Federated Learning with Shared and Personalized Uncertainty Representations

Oct 03, 2023

Abstract:Bayesian personalized federated learning (BPFL) addresses challenges in existing personalized FL (PFL). BPFL aims to quantify the uncertainty and heterogeneity within and across clients towards uncertainty representations by addressing the statistical heterogeneity of client data. In PFL, some recent preliminary work proposes to decompose hidden neural representations into shared and local components and demonstrates interesting results. However, most of them do not address client uncertainty and heterogeneity in FL systems, while appropriately decoupling neural representations is challenging and often ad hoc. In this paper, we make the first attempt to introduce a general BPFL framework to decompose and jointly learn shared and personalized uncertainty representations on statistically heterogeneous client data over time. A Bayesian federated neural network BPFed instantiates BPFL by jointly learning cross-client shared uncertainty and client-specific personalized uncertainty over statistically heterogeneous and randomly participating clients. We further involve continual updating of prior distribution in BPFed to speed up the convergence and avoid catastrophic forgetting. Theoretical analysis and guarantees are provided in addition to the experimental evaluation of BPFed against the diversified baselines.

A Probabilistic Generative Model for Tracking Multi-Knowledge Concept Mastery Probability

Feb 17, 2023

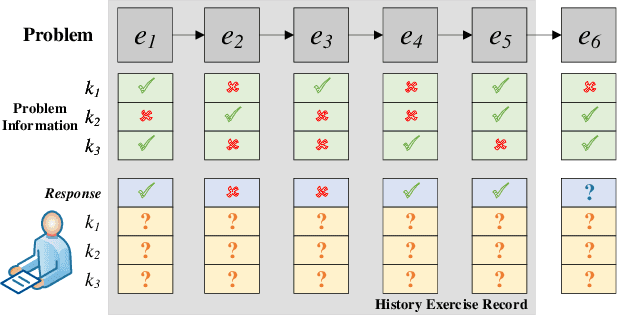

Abstract:Knowledge tracing aims to track students' knowledge status over time to predict students' future performance accurately. Markov chain-based knowledge tracking (MCKT) models can track knowledge concept mastery probability over time. However, as the number of tracked knowledge concepts increases, the time complexity of MCKT predicting student performance increases exponentially (also called the explaining away problem). In addition, the existing MCKT models only consider the relationship between students' knowledge status and problems when modeling students' responses but ignore the relationship between knowledge concepts in the same problem. To address these challenges, we propose an inTerpretable pRobAbilistiC gEnerative moDel (TRACED), which can track students' numerous knowledge concepts mastery probabilities over time. To solve the explaining away problem, we design Long and Short-Term Memory (LSTM)-based networks to approximate the posterior distribution, predict students' future performance, and propose a heuristic algorithm to train LSTMs and the probabilistic graphical model jointly. To better model students' exercise responses, we propose a logarithmic linear model with three interactive strategies, which models students' exercise responses by considering the relationship among students' knowledge status, knowledge concept, and problems. We conduct experiments with four real-world datasets in three knowledge-driven tasks. The experimental results show that TRACED outperforms existing knowledge tracing methods in predicting students' future performance and can learn the relationship among students, knowledge concepts, and problems from students' exercise sequences. We also conduct several case studies. The case studies show that TRACED exhibits excellent interpretability and thus has the potential for personalized automatic feedback in the real-world educational environment.

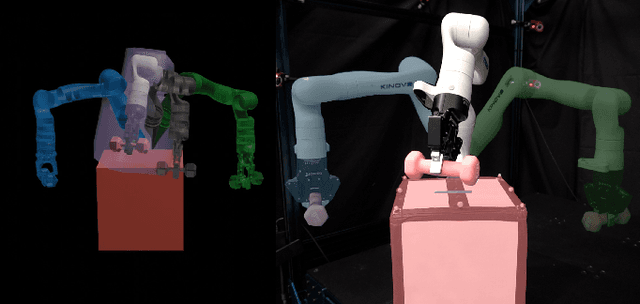

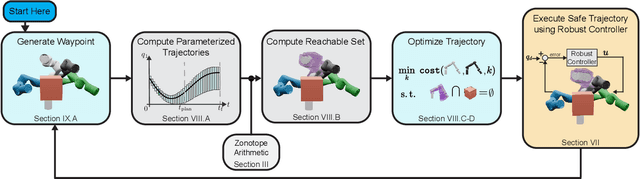

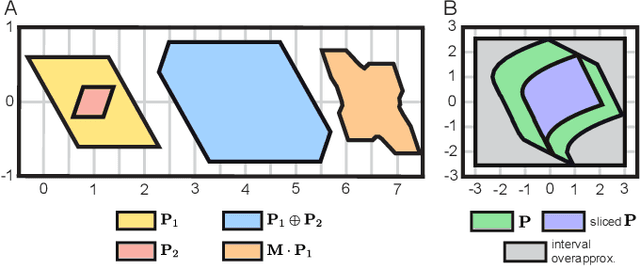

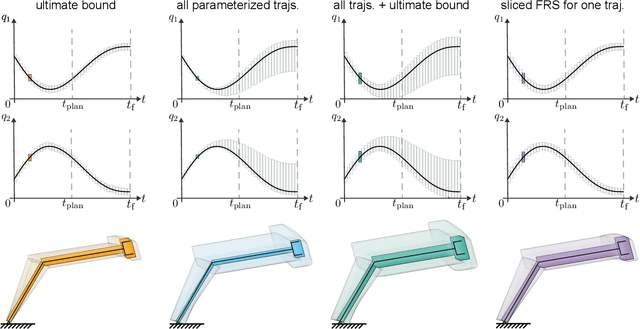

Can't Touch This: Real-Time, Safe Motion Planning and Control for Manipulators Under Uncertainty

Jan 30, 2023

Abstract:A key challenge to the widespread deployment of robotic manipulators is the need to ensure safety in arbitrary environments while generating new motion plans in real-time. In particular, one must ensure that a manipulator does not collide with obstacles, collide with itself, or exceed its joint torque limits. This challenge is compounded by the need to account for uncertainty in the mass and inertia of manipulated objects, and potentially the robot itself. The present work addresses this challenge by proposing Autonomous Robust Manipulation via Optimization with Uncertainty-aware Reachability (ARMOUR), a provably-safe, receding-horizon trajectory planner and tracking controller framework for serial link manipulators. ARMOUR works by first constructing a robust, passivity-based controller that is proven to enable a manipulator to track desired trajectories with bounded error despite uncertain dynamics. Next, ARMOUR uses a novel variation on the Recursive Newton-Euler Algorithm (RNEA) to compute the set of all possible inputs required to track any trajectory within a continuum of desired trajectories. Finally, the method computes an over-approximation to the swept volume of the manipulator; this enables one to formulate an optimization problem, which can be solved in real-time, to synthesize provably-safe motion. The proposed method is compared to state-of-the-art methods and demonstrated on a variety of challenging manipulation examples in simulation and on real hardware, such as maneuvering a dumbbell with uncertain mass around obstacles.
