Lanjun Wang

Huawei Canada Technologies Co. Ltd

Key Decision-Makers in Multi-Agent Debates: Who Holds the Power?

Nov 14, 2025

Abstract:Recent studies on LLM agent scaling have highlighted the potential of Multi-Agent Debate (MAD) to enhance reasoning abilities. However, the critical aspect of role allocation strategies remains underexplored. In this study, we demonstrate that allocating roles with differing viewpoints to specific positions significantly impacts MAD's performance in reasoning tasks. Specifically, we find a novel role allocation strategy, "Truth Last", which can improve MAD performance by up to 22% in reasoning tasks. To address the issue of unknown truth in practical applications, we propose the Multi-Agent Debate Consistency (MADC) strategy, which systematically simulates and optimizes its core mechanisms. MADC incorporates path consistency to assess agreement among independent roles, simulating the role with the highest consistency score as the truth. We validated MADC across a range of LLMs (9 models), including the DeepSeek-R1 Distilled Models, on challenging reasoning tasks. MADC consistently demonstrated advanced performance, effectively overcoming MAD's performance bottlenecks and providing a crucial pathway for further improvements in LLM agent scaling.
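The consistency-based selection described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not define how path consistency is computed, so the score below (the fraction of other roles that reached the same final answer) is an assumption, and the names `consistency_scores` and `truth_last_order` are hypothetical.

```python
from collections import Counter


def consistency_scores(answers):
    """Score each role by the fraction of OTHER roles sharing its answer.

    `answers` maps a role name to that role's final answer. This agreement
    measure is an assumed stand-in for the paper's path consistency.
    """
    counts = Counter(answers.values())
    n = len(answers)
    if n < 2:
        return {role: 0.0 for role in answers}
    return {role: (counts[ans] - 1) / (n - 1) for role, ans in answers.items()}


def truth_last_order(answers):
    """Order roles so that the most consistent role speaks last.

    The highest-scoring role is treated as the simulated "truth", following
    the "Truth Last" idea. The sort is stable, so ties keep input order.
    """
    scores = consistency_scores(answers)
    return sorted(answers, key=lambda role: scores[role])


if __name__ == "__main__":
    answers = {"A": "42", "B": "17", "C": "42", "D": "42"}
    print(consistency_scores(answers))
    print(truth_last_order(answers))
```

With the sample input, role `B` disagrees with everyone and is placed first, while the three agreeing roles tie and keep their input order at the end of the debate.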

T2I-RiskyPrompt: A Benchmark for Safety Evaluation, Attack, and Defense on Text-to-Image Model

Oct 25, 2025

Abstract:Using risky text prompts, such as pornographic and violent prompts, to test the safety of text-to-image (T2I) models is a critical task. However, existing risky prompt datasets are limited in three key areas: 1) limited risky categories, 2) coarse-grained annotation, and 3) low effectiveness. To address these limitations, we introduce T2I-RiskyPrompt, a comprehensive benchmark designed for evaluating safety-related tasks in T2I models. Specifically, we first develop a hierarchical risk taxonomy, which consists of 6 primary categories and 14 fine-grained subcategories. Building upon this taxonomy, we construct a pipeline to collect and annotate risky prompts. Finally, we obtain 6,432 effective risky prompts, where each prompt is annotated with both hierarchical category labels and detailed risk reasons. Moreover, to facilitate the evaluation, we propose a reason-driven risky image detection method that explicitly aligns the MLLM with safety annotations. Based on T2I-RiskyPrompt, we conduct a comprehensive evaluation of eight T2I models, nine defense methods, five safety filters, and five attack strategies, offering nine key insights into the strengths and limitations of T2I model safety. Finally, we discuss potential applications of T2I-RiskyPrompt across various research fields. The dataset and code are available at https://github.com/datar001/T2I-RiskyPrompt.

Organ-Agents: Virtual Human Physiology Simulator via LLMs

Aug 20, 2025

Abstract:Recent advances in large language models (LLMs) have enabled new possibilities in simulating complex physiological systems. We introduce Organ-Agents, a multi-agent framework that simulates human physiology via LLM-driven agents. Each Simulator models a specific system (e.g., cardiovascular, renal, immune). Training consists of supervised fine-tuning on system-specific time-series data, followed by reinforcement-guided coordination using dynamic reference selection and error correction. We curated data from 7,134 sepsis patients and 7,895 controls, generating high-resolution trajectories across 9 systems and 125 variables. Organ-Agents achieved high simulation accuracy on 4,509 held-out patients, with per-system MSEs <0.16 and robustness across SOFA-based severity strata. External validation on 22,689 ICU patients from two hospitals showed moderate degradation under distribution shifts with stable simulation. Organ-Agents faithfully reproduces critical multi-system events (e.g., hypotension, hyperlactatemia, hypoxemia) with coherent timing and phase progression. Evaluation by 15 critical care physicians confirmed realism and physiological plausibility (mean Likert ratings 3.9 and 3.7). Organ-Agents also enables counterfactual simulations under alternative sepsis treatment strategies, generating trajectories and APACHE II scores aligned with matched real-world patients. In downstream early warning tasks, classifiers trained on synthetic data showed minimal AUROC drops (<0.04), indicating preserved decision-relevant patterns. These results position Organ-Agents as a credible, interpretable, and generalizable digital twin for precision diagnosis, treatment simulation, and hypothesis testing in critical care.
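To make the "per-system MSE" metric concrete, here is a minimal sketch of one way such a figure could be computed. The abstract does not state how errors are normalized or aggregated, so pooling all time points of all variables belonging to a system is an assumption, and the function name, variable names, and variable-to-system mapping are hypothetical.

```python
from collections import defaultdict


def per_system_mse(predicted, observed, variable_to_system):
    """Mean squared error pooled over all variables of each system.

    `predicted` and `observed` map a variable name to a list of values
    (one per time step). `variable_to_system` maps each variable to the
    physiological system it belongs to. Pooling strategy is an assumption.
    """
    squared_error_sum = defaultdict(float)
    point_count = defaultdict(int)
    for variable, system in variable_to_system.items():
        for p, o in zip(predicted[variable], observed[variable]):
            squared_error_sum[system] += (p - o) ** 2
            point_count[system] += 1
    return {s: squared_error_sum[s] / point_count[s] for s in squared_error_sum}


if __name__ == "__main__":
    # Hypothetical toy trajectories, not data from the paper.
    predicted = {"heart_rate": [1.0, 2.0], "map": [0.0, 0.0], "creatinine": [1.0]}
    observed = {"heart_rate": [1.0, 1.0], "map": [0.0, 2.0], "creatinine": [1.5]}
    systems = {
        "heart_rate": "cardiovascular",
        "map": "cardiovascular",
        "creatinine": "renal",
    }
    print(per_system_mse(predicted, observed, systems))
```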

MetaEformer: Unveiling and Leveraging Meta-patterns for Complex and Dynamic Systems Load Forecasting

Jun 15, 2025

Abstract:Time series forecasting is a critical and practical problem in many real-world applications, especially for industrial scenarios, where load forecasting underpins the intelligent operation of modern systems like clouds, power grids and traffic networks. However, the inherent complexity and dynamics of these systems present significant challenges. Although advances in methods such as pattern recognition and anti-non-stationarity have led to performance gains, current methods fail to consistently ensure effectiveness across various system scenarios due to the intertwined issues of complex patterns, concept drift, and few-shot problems. To address these challenges simultaneously, we introduce a novel scheme centered on the fundamental waveform, a.k.a. the meta-pattern. Specifically, we develop a unique Meta-pattern Pooling mechanism to purify and maintain meta-patterns, capturing the nuanced nature of system loads. Complementing this, the proposed Echo mechanism adaptively leverages the meta-patterns, enabling flexible and precise pattern reconstruction. Our Meta-pattern Echo transformer (MetaEformer) seamlessly incorporates these mechanisms with the transformer-based predictor, offering end-to-end efficiency and interpretability of core processes. Demonstrating superior performance across eight benchmarks under three system scenarios, MetaEformer marks a significant advantage in accuracy, with a 37% relative improvement on fifteen state-of-the-art baselines.

RoCo-Sim: Enhancing Roadside Collaborative Perception through Foreground Simulation

Mar 13, 2025

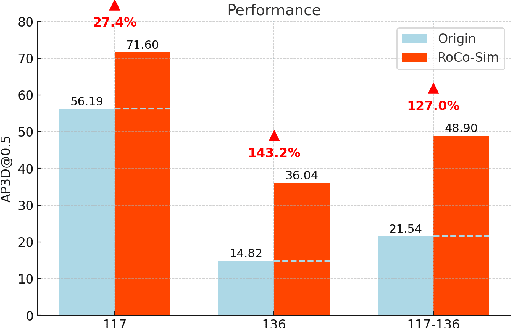

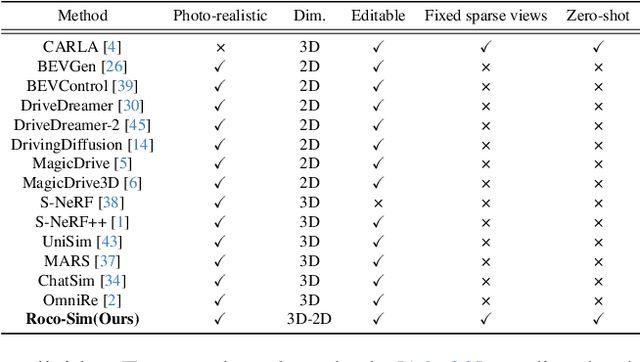

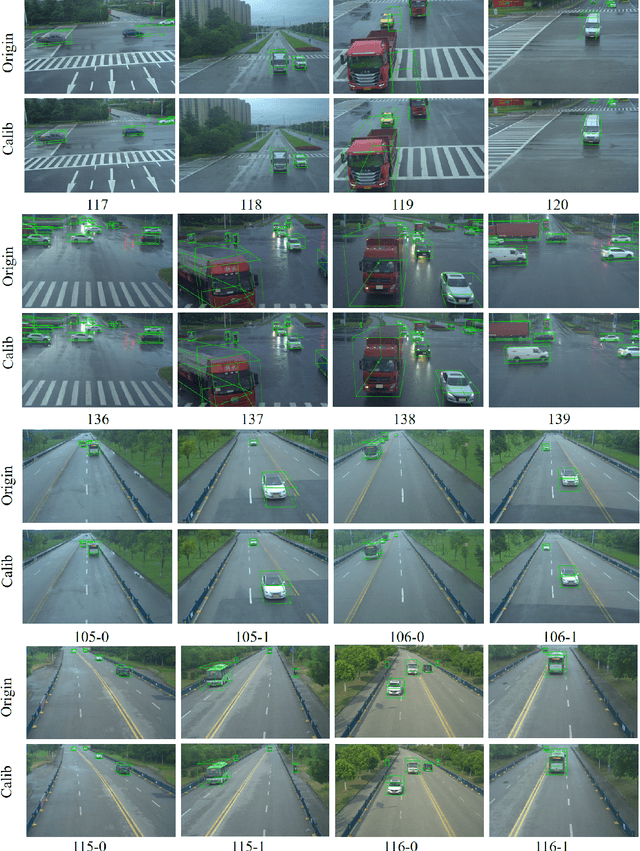

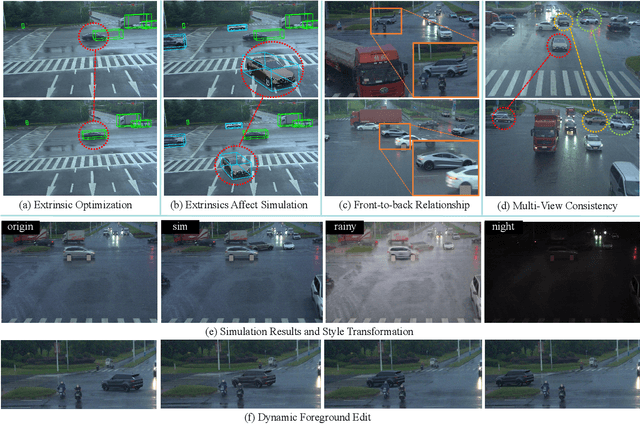

Abstract:Roadside Collaborative Perception refers to a system where multiple roadside units collaborate to pool their perceptual data, assisting vehicles in enhancing their environmental awareness. Existing roadside perception methods concentrate on model design but overlook data issues like calibration errors, sparse information, and multi-view consistency, leading to poor performance on recently published datasets. To significantly enhance roadside collaborative perception and address critical data issues, we present RoCo-Sim, the first simulation framework for roadside collaborative perception. RoCo-Sim is capable of generating diverse, multi-view consistent simulated roadside data through dynamic foreground editing and full-scene style transfer of a single image. RoCo-Sim consists of four components: (1) Camera Extrinsic Optimization ensures accurate 3D to 2D projection for roadside cameras; (2) A novel Multi-View Occlusion-Aware Sampler (MOAS) determines the placement of diverse digital assets within 3D space; (3) DepthSAM innovatively models foreground-background relationships from single-frame fixed-view images, ensuring multi-view consistency of foreground; and (4) Scalable Post-Processing Toolkit generates more realistic and enriched scenes through style transfer and other enhancements. RoCo-Sim significantly improves roadside 3D object detection, outperforming SOTA methods by 83.74 on Rcooper-Intersection and 83.12 on TUMTraf-V2X for AP70. RoCo-Sim fills a critical gap in roadside perception simulation. Code and pre-trained models will be released soon: https://github.com/duyuwen-duen/RoCo-Sim

TRCE: Towards Reliable Malicious Concept Erasure in Text-to-Image Diffusion Models

Mar 10, 2025

Abstract:Recent advances in text-to-image diffusion models enable photorealistic image generation, but they also risk producing malicious content, such as NSFW images. To mitigate this risk, concept erasure methods are studied to help the model unlearn specific concepts. However, current studies struggle to fully erase malicious concepts implicitly embedded in prompts (e.g., metaphorical expressions or adversarial prompts) while preserving the model's normal generation capability. To address this challenge, our study proposes TRCE, using a two-stage concept erasure strategy to achieve an effective trade-off between reliable erasure and knowledge preservation. Firstly, TRCE starts by erasing the malicious semantics implicitly embedded in textual prompts. By identifying a critical mapping objective (i.e., the [EoT] embedding), we optimize the cross-attention layers to map malicious prompts to contextually similar prompts but with safe concepts. This step prevents the model from being overly influenced by malicious semantics during the denoising process. Following this, considering the deterministic properties of the sampling trajectory of the diffusion model, TRCE further steers the early denoising prediction toward the safe direction and away from the unsafe one through contrastive learning, thus further avoiding the generation of malicious content. Finally, we conduct comprehensive evaluations of TRCE on multiple malicious concept erasure benchmarks, and the results demonstrate its effectiveness in erasing malicious concepts while better preserving the model's original generation ability. The code is available at: http://github.com/ddgoodgood/TRCE. CAUTION: This paper includes model-generated content that may contain offensive material.

Domain Adaptation from Generated Multi-Weather Images for Unsupervised Maritime Object Classification

Jan 26, 2025

Abstract:The classification and recognition of maritime objects are crucial for enhancing maritime safety, monitoring, and intelligent sea environment prediction. However, existing unsupervised methods for maritime object classification often struggle with the long-tail data distributions in both object categories and weather conditions. In this paper, we construct a dataset named AIMO, produced by large-scale generative models with diverse weather conditions and balanced object categories, and collect a dataset named RMO with real-world images where the long-tail issue exists. We propose a novel domain adaptation approach that leverages AIMO (source domain) to address the problems of limited labeled data, unbalanced distribution, and domain shift in RMO (target domain), and enhances the generalization of source features with Vision-Language Models such as CLIP. Experimental results show that the proposed method significantly improves the classification accuracy, particularly for samples within rare object categories and weather conditions. Datasets and code will be publicly available at https://github.com/honoria0204/AIMO.

Towards Deconfounded Image-Text Matching with Causal Inference

Aug 22, 2024

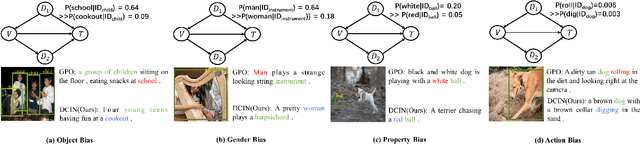

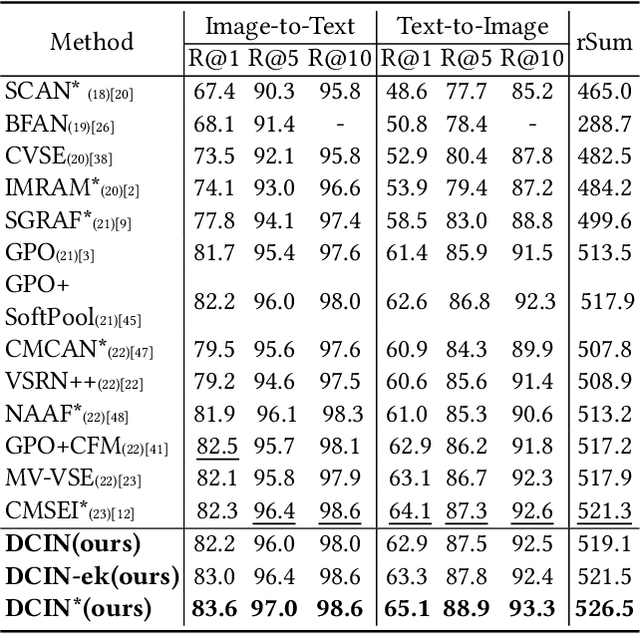

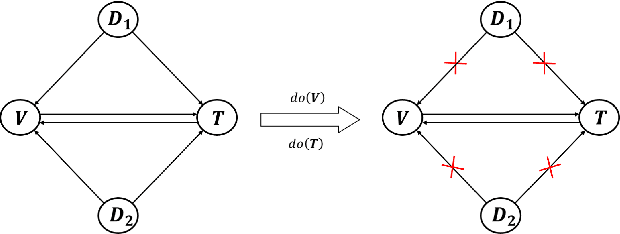

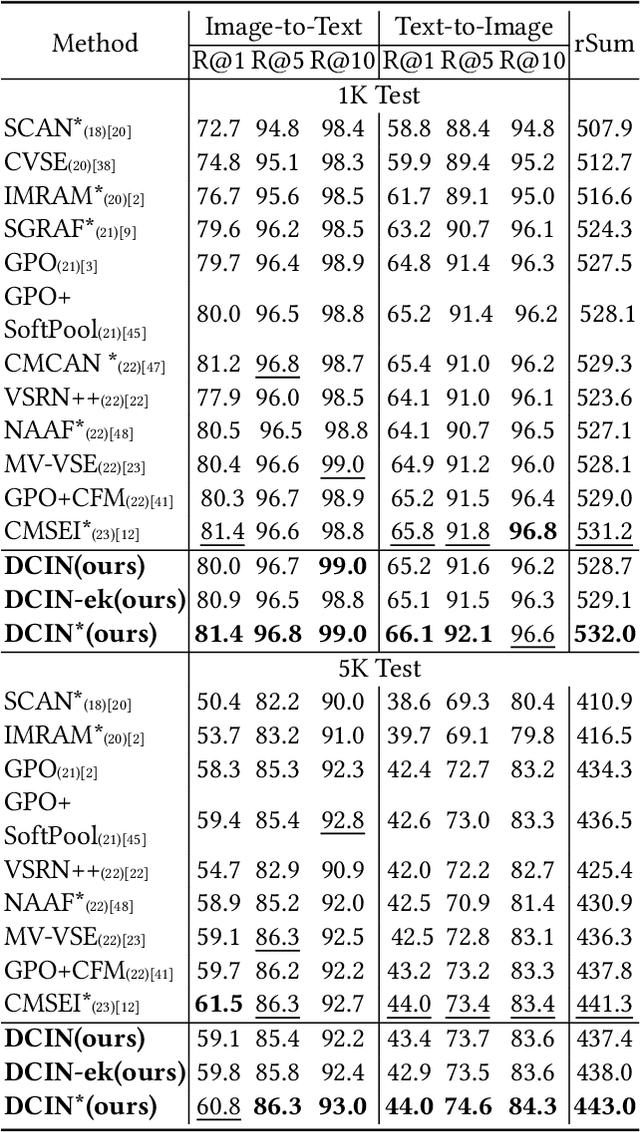

Abstract:Prior image-text matching methods have shown remarkable performance on many benchmark datasets, but most of them overlook dataset bias, which exists both within and across modalities, and tend to learn spurious correlations that severely degrade the model's generalization ability. Furthermore, these methods often incorporate biased external knowledge from large-scale datasets as prior knowledge into the image-text matching model, which inevitably forces the model to learn further biased associations. To address the above limitations, this paper first uses Structural Causal Models (SCMs) to illustrate how intra- and inter-modal confounders damage image-text matching. Then, we employ backdoor adjustment to propose an innovative Deconfounded Causal Inference Network (DCIN) for the image-text matching task. DCIN (1) decomposes the intra- and inter-modal confounders and incorporates them into the encoding stage of visual and textual features, effectively eliminating the spurious correlations during image-text matching, and (2) uses causal inference to mitigate biases of external knowledge. Consequently, the model can learn causality instead of spurious correlations caused by dataset bias. Extensive experiments on two well-known benchmark datasets, i.e., Flickr30K and MSCOCO, demonstrate the superiority of our proposed method.
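For readers unfamiliar with the backdoor adjustment mentioned in this abstract, its standard textbook form is shown below. Here $X$ is the input, $Y$ the outcome (for example, a matching score), and $z$ ranges over the values of a confounder set $Z$ that satisfies the backdoor criterion. How DCIN defines and approximates $Z$ is not specified in the abstract, so this is only the generic formula, not the paper's exact objective.

$$
P(Y \mid do(X)) = \sum_{z} P(Y \mid X, z)\, P(z)
$$

The contrast with ordinary conditioning, $P(Y \mid X) = \sum_{z} P(Y \mid X, z)\, P(z \mid X)$, is that the confounder is weighted by its marginal $P(z)$ rather than by $P(z \mid X)$, which removes the dependence of $z$ on $X$ that produces spurious correlations.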

* ACM MM

A Survey of Datasets for Information Diffusion Tasks

Jul 06, 2024

Abstract:Information diffusion across various new media platforms gradually influences perceptions, decisions, and social behaviors of individual users. In communication studies, the famous Five W's of Communication model (5W Model) has displayed the process of information diffusion clearly. At present, although plenty of studies and corresponding datasets about information diffusion have emerged, a systematic categorization of tasks and an integration of datasets are still lacking. To address this gap, we survey a systematic taxonomy of information diffusion tasks and datasets based on the "5W Model" framework. We first categorize the information diffusion tasks into ten subtasks, with definitions and dataset analysis, under three main tasks: information diffusion prediction, social bot detection, and misinformation detection. We also collect a repository of publicly available datasets for information diffusion tasks, with links, and compare them based on six attributes related to users and content: user information, social network, bot label, propagation content, propagation network, and veracity label. In addition, we discuss the limitations and future directions of current datasets and research topics to advance the future development of information diffusion. The dataset repository can be accessed at our website https://github.com/fuxiaG/Information-Diffusion-Datasets.

A Training-Free Plug-and-Play Watermark Framework for Stable Diffusion

Apr 08, 2024

Abstract:Nowadays, the family of Stable Diffusion (SD) models has gained prominence for its high-quality outputs and scalability. This has also raised security concerns on social media, as malicious users can create and disseminate harmful content. Existing approaches involve training components or entire SDs to embed a watermark in generated images for traceability and responsibility attribution. However, in the era of AI-generated content (AIGC), the rapid iteration of SDs renders retraining with watermark models costly. To address this, we propose a training-free plug-and-play watermark framework for SDs. Without modifying any components of SDs, we embed diverse watermarks in the latent space, adapting to the denoising process. Our experimental findings reveal that our method effectively harmonizes image quality and watermark invisibility. Furthermore, it performs robustly under various attacks. We have also validated that our method generalizes to multiple versions of SDs, even without retraining the watermark model.
