Yanfei Xiang

Advancing Ocean State Estimation with efficient and scalable AI

Nov 08, 2025

Abstract:Accurate and efficient global ocean state estimation remains a grand challenge for Earth system science, hindered by the dual bottlenecks of computational scalability and degraded data fidelity in traditional data assimilation (DA) and deep learning (DL) approaches. Here we present an AI-driven Data Assimilation Framework for Ocean (ADAF-Ocean) that directly assimilates multi-source and multi-scale observations, ranging from sparse in-situ measurements to 4 km satellite swaths, without any interpolation or data thinning. Inspired by Neural Processes, ADAF-Ocean learns a continuous mapping from heterogeneous inputs to ocean states, preserving native data fidelity. Through AI-driven super-resolution, it reconstructs 0.25$^\circ$ mesoscale dynamics from coarse 1$^\circ$ fields, which ensures both efficiency and scalability, with just 3.7% more parameters than the 1$^\circ$ configuration. When coupled with a DL forecasting system, ADAF-Ocean extends global forecast skill by up to 20 days compared to baselines without assimilation. This framework establishes a computationally viable and scientifically rigorous pathway toward real-time, high-resolution Earth system monitoring.

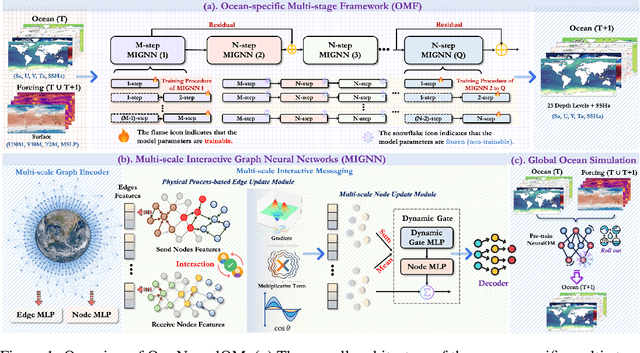

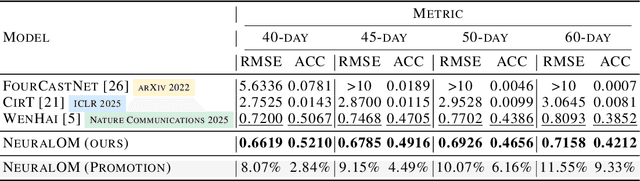

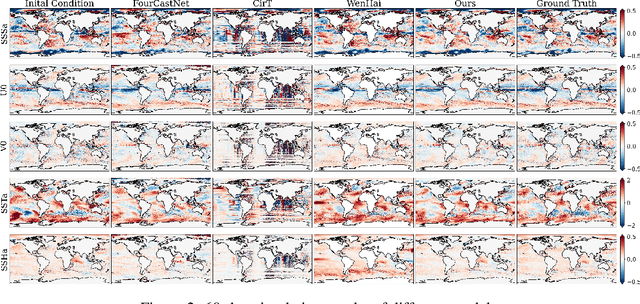

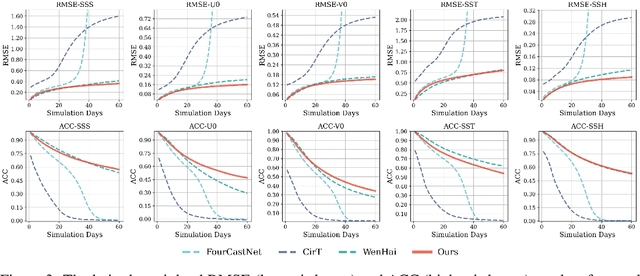

NeuralOM: Neural Ocean Model for Subseasonal-to-Seasonal Simulation

May 27, 2025

Abstract:Accurate Subseasonal-to-Seasonal (S2S) ocean simulation is critically important for marine research, yet remains challenging due to its substantial thermal inertia and extended time delay. Machine learning (ML)-based models have demonstrated significant advancements in simulation accuracy and computational efficiency compared to traditional numerical methods. Nevertheless, a significant limitation of current ML models for S2S ocean simulation is their inadequate incorporation of physical consistency and the slow-changing properties of the ocean system. In this work, we propose a neural ocean model (NeuralOM) for S2S ocean simulation with a multi-scale interactive graph neural network to emulate diverse physical phenomena associated with ocean systems effectively. Specifically, we propose a multi-stage framework tailored to model the ocean's slowly changing nature. Additionally, we introduce a multi-scale interactive messaging module to capture complex dynamical behaviors, such as gradient changes and multiplicative coupling relationships inherent in ocean dynamics. Extensive experimental evaluations confirm that our proposed NeuralOM outperforms state-of-the-art models in S2S and extreme event simulation. The codes are available at https://github.com/YuanGao-YG/NeuralOM.

Advanced long-term earth system forecasting by learning the small-scale nature

May 26, 2025

Abstract:Reliable long-term forecasting of Earth system dynamics is heavily hampered by instabilities in current AI models during extended autoregressive simulations. These failures often originate from inherent spectral bias, leading to inadequate representation of critical high-frequency, small-scale processes and subsequent uncontrolled error amplification. We present Triton, an AI framework designed to address this fundamental challenge. Inspired by the practice of refining grids to explicitly resolve small scales in numerical models, Triton employs a hierarchical architecture that processes information across multiple resolutions to mitigate spectral bias and explicitly model cross-scale dynamics. We demonstrate Triton's superior performance on challenging forecast tasks, achieving stable year-long global temperature forecasts, skillful Kuroshio eddy predictions up to 120 days, and high-fidelity turbulence simulations that preserve fine-scale structures, all without external forcing, and significantly surpassing baseline AI models in long-term stability and accuracy. By effectively suppressing high-frequency error accumulation, Triton offers a promising pathway towards trustworthy AI-driven simulation for climate and Earth system science.

ADAF: An Artificial Intelligence Data Assimilation Framework for Weather Forecasting

Nov 25, 2024

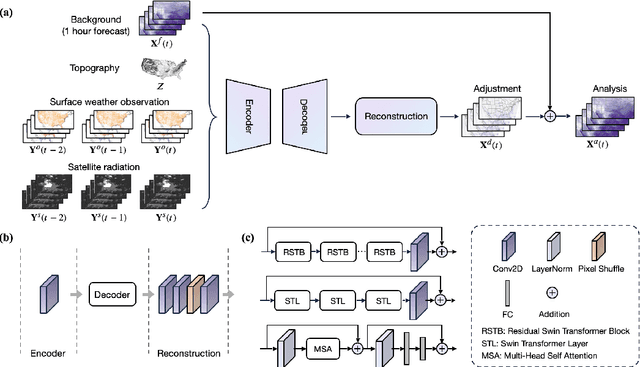

Abstract:The forecasting skill of numerical weather prediction (NWP) models critically depends on the accurate initial conditions, also known as analysis, provided by data assimilation (DA). Traditional DA methods often face a trade-off between computational cost and accuracy due to complex linear algebra computations and the high dimensionality of the model, especially in nonlinear systems. Moreover, processing massive data in real-time requires substantial computational resources. To address this, we introduce an artificial intelligence-based data assimilation framework (ADAF) to generate high-quality kilometer-scale analysis. This study is the pioneering work using real-world observations from varied locations and multiple sources to verify the AI method's efficacy in DA, including sparse surface weather observations and satellite imagery. We implemented ADAF for four near-surface variables in the Contiguous United States (CONUS). The results indicate that ADAF surpasses the High Resolution Rapid Refresh Data Assimilation System (HRRRDAS) in accuracy by 16% to 33% for near-surface atmospheric conditions, aligning more closely with actual observations, and can effectively reconstruct extreme events, such as tropical cyclone wind fields. Sensitivity experiments reveal that ADAF can generate high-quality analysis even with low-accuracy backgrounds and extremely sparse surface observations. ADAF can assimilate massive observations within a three-hour window at low computational cost, taking about two seconds on an AMD MI200 graphics processing unit (GPU). ADAF has been shown to be efficient and effective in real-world DA, underscoring its potential role in operational weather forecasting.
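For context, the linear algebra cost mentioned above can be illustrated with the textbook analysis update shared by Kalman-filter and variational schemes. This is the standard formulation, not an equation taken from the ADAF paper, and the symbols are assumptions introduced here: $x_b$ is the background state, $y$ the observation vector, $H$ the (linearized) observation operator, $B$ the background error covariance, and $R$ the observation error covariance.

$$
x_a = x_b + K\,(y - H x_b), \qquad K = B H^{\top} \left( H B H^{\top} + R \right)^{-1}
$$

For a state of dimension $n$, $B$ is an $n \times n$ matrix, so storing it and solving the system inside $K$ becomes expensive as $n$ grows, which is one way to read the cost-accuracy trade-off described in the abstract.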

AI-GOMS: Large AI-Driven Global Ocean Modeling System

Aug 10, 2023

Abstract:Ocean modeling is a powerful tool for simulating the physical, chemical, and biological processes of the ocean, which is the foundation for marine science research and operational oceanography. Modern numerical ocean modeling mainly consists of governing equations and numerical algorithms. Nonlinear instability, computational expense, low reusability efficiency and high coupling costs have gradually become the main bottlenecks for the further development of numerical ocean modeling. Recently, artificial intelligence-based modeling in scientific computing has shown revolutionary potential for digital twins and scientific simulations, but the bottlenecks of numerical ocean modeling have not been further solved. Here, we present AI-GOMS, a large AI-driven global ocean modeling system, for accurate and efficient global ocean daily prediction. AI-GOMS consists of a backbone model with the Fourier-based Masked Autoencoder structure for basic ocean variable prediction and lightweight fine-tuning models incorporating regional downscaling, wave decoding, and biochemistry coupling modules. AI-GOMS has achieved the best performance in 30 days of prediction for the global ocean basic variables with 15 depth layers at 1/4° spatial resolution. Beyond the good performance in statistical metrics, AI-GOMS realizes the simulation of mesoscale eddies in the Kuroshio region at 1/12° spatial resolution and ocean stratification in the tropical Pacific Ocean. AI-GOMS provides a new backbone-downstream paradigm for Earth system modeling, which makes the system transferable, scalable and reusable.

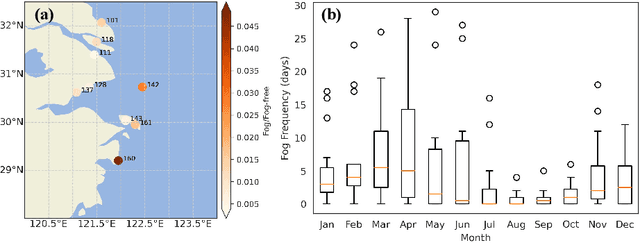

Intelligent model for offshore China sea fog forecasting

Jul 20, 2023

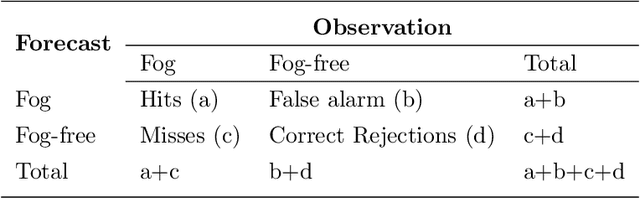

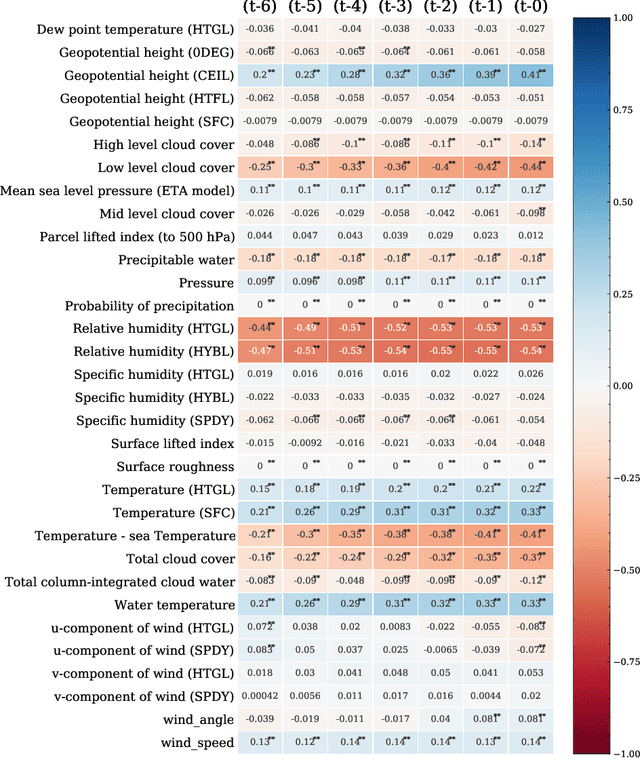

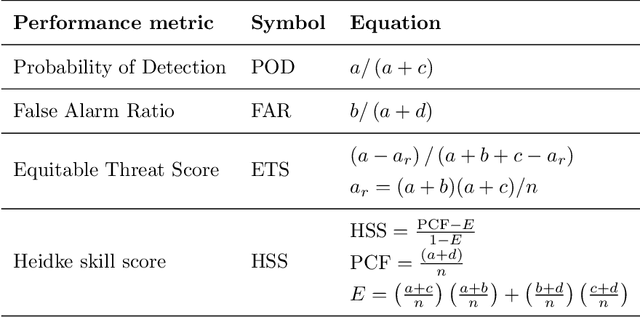

Abstract:Accurate and timely prediction of sea fog is very important for effectively managing maritime and coastal economic activities. Given the intricate nature and inherent variability of sea fog, traditional numerical and statistical forecasting methods often prove inadequate. This study aims to develop an advanced sea fog forecasting method embedded in a numerical weather prediction model using the Yangtze River Estuary (YRE) coastal area as a case study. Prior to training our machine learning model, we employ a time-lagged correlation analysis technique to identify key predictors and decipher the underlying mechanisms driving sea fog occurrence. In addition, we implement ensemble learning and a focal loss function to address the issue of imbalanced data, thereby enhancing the predictive ability of our model. To verify the accuracy of our method, we evaluate its performance using a comprehensive dataset spanning one year, which encompasses both weather station observations and historical forecasts. Remarkably, our machine learning-based approach surpasses the predictive performance of two conventional methods, the Weather Research and Forecasting Nonhydrostatic Mesoscale Model (WRF-NMM) and the algorithm developed by the National Oceanic and Atmospheric Administration (NOAA) Forecast Systems Laboratory (FSL). Specifically, for predicting sea fog with a visibility of less than or equal to 1 km at a lead time of 60 hours, our methodology achieves superior results by increasing the probability of detection (POD) while simultaneously reducing the false alarm ratio (FAR).
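The focal loss and the POD/FAR scores named in the abstract can be sketched in a few lines of Python. This is a minimal illustration using the commonly cited definitions, not the authors' implementation: the function names, the defaults `gamma=2.0` and `alpha=0.25`, and the sample data are all assumptions chosen here for demonstration.

```python
import math


def binary_focal_loss(p, y, gamma=2.0, alpha=0.25):
    """Focal loss for a single binary prediction (illustrative helper).

    p: predicted probability of fog (class 1); y: observed label, 0 or 1.
    gamma down-weights easy examples; alpha balances the two classes.
    """
    eps = 1e-12
    p = min(max(p, eps), 1.0 - eps)           # keep log() finite
    p_t = p if y == 1 else 1.0 - p            # probability of the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def pod_far(predicted, observed):
    """Probability of detection and false alarm ratio from binary sequences."""
    pairs = list(zip(predicted, observed))
    hits = sum(1 for f, o in pairs if f and o)
    misses = sum(1 for f, o in pairs if not f and o)
    false_alarms = sum(1 for f, o in pairs if f and not o)
    pod = hits / (hits + misses) if hits + misses else float("nan")
    far = false_alarms / (hits + false_alarms) if hits + false_alarms else float("nan")
    return pod, far


# Hypothetical forecasts and observations (1 = fog with visibility <= 1 km).
predicted = [1, 1, 0, 0, 1]
observed = [1, 0, 1, 0, 1]
pod, far = pod_far(predicted, observed)

# A confidently correct fog forecast contributes far less loss than a missed one.
easy = binary_focal_loss(0.9, 1)
hard = binary_focal_loss(0.1, 1)
```

With these definitions, a higher POD means more fog events were caught and a lower FAR means fewer fog forecasts were false alarms; the focal term `(1 - p_t) ** gamma` is what shifts the training signal toward the rare, hard-to-predict fog cases.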

RMBench: Benchmarking Deep Reinforcement Learning for Robotic Manipulator Control

Oct 20, 2022

Abstract:Reinforcement learning is applied to solve actual complex tasks from high-dimensional, sensory inputs. The last decade has produced a long list of reinforcement learning algorithms. Recent progress benefits from deep learning for raw sensory signal representation. One question naturally arises: how well do they perform on different robotic manipulation tasks? Benchmarks use objective performance metrics to offer a scientific way to compare algorithms. In this paper, we present RMBench, the first benchmark for robotic manipulations, which have high-dimensional continuous action and state spaces. We implement and evaluate reinforcement learning algorithms that directly use observed pixels as inputs. We report their average performance and learning curves to show their performance and training stability. Our study concludes that none of the studied algorithms can handle all tasks well, Soft Actor-Critic outperforms most algorithms in average reward and stability, and an algorithm combined with data augmentation may facilitate learning policies. Our code is publicly available at https://anonymous.4open.science/r/RMBench-2022-3424, including all benchmark tasks and studied algorithms.
