Maurice Gerczuk

EIHW -- Chair of Embedded Intelligence for Health Care and Wellbeing, University of Augsburg, Germany

SmoothCLAP: Soft-Target Enhanced Contrastive Language-Audio Pretraining for Affective Computing

Jan 18, 2026

Abstract:The ambiguity of human emotions poses several challenges for machine learning models, as they often overlap and lack clear delineating boundaries. Contrastive language-audio pretraining (CLAP) has emerged as a key technique for generalisable emotion recognition. However, as conventional CLAP enforces a strict one-to-one alignment between paired audio-text samples, it overlooks intra-modal similarity and treats all non-matching pairs as equally negative. This conflicts with the fuzzy boundaries between different emotions. To address this limitation, we propose SmoothCLAP, which introduces softened targets derived from intra-modal similarity and paralinguistic features. By combining these softened targets with conventional contrastive supervision, SmoothCLAP learns embeddings that respect graded emotional relationships, while retaining the same inference pipeline as CLAP. Experiments on eight affective computing tasks across English and German demonstrate that SmoothCLAP consistently achieves superior performance. Our results highlight that leveraging soft supervision is a promising strategy for building emotion-aware audio-text models.
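
The abstract does not give the exact loss, so the following is only a minimal sketch of one way softened targets could be blended with the usual one-hot contrastive targets. The blending weight `alpha`, the `temperature`, the use of text-text similarity as the soft distribution, and all function names are assumptions for illustration, not the authors' formulation.

```python
import math

def softmax(row, temperature=1.0):
    # Numerically stable softmax over a list of similarity scores.
    scaled = [v / temperature for v in row]
    m = max(scaled)
    exps = [math.exp(v - m) for v in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def soft_target_loss(audio, text, alpha=0.3, temperature=0.1):
    """Audio-to-text cross-entropy against softened targets (hypothetical form).

    alpha = 0 recovers the conventional one-hot contrastive target.
    """
    n = len(audio)
    loss = 0.0
    for i in range(n):
        # Cross-modal prediction: audio i against every text in the batch.
        probs = softmax([dot(audio[i], text[j]) for j in range(n)], temperature)
        # Intra-modal similarity (assumed: text-text) turned into a distribution.
        soft = softmax([dot(text[i], text[j]) for j in range(n)], temperature)
        # Blend the one-hot target with the soft distribution.
        target = [(1 - alpha) * (1.0 if i == j else 0.0) + alpha * soft[j]
                  for j in range(n)]
        loss -= sum(t * math.log(p) for t, p in zip(target, probs))
    return loss / n
```

Because only the training targets change in this sketch, the embeddings would be used at inference exactly as in a standard contrastive model, which matches the abstract's remark about retaining the CLAP inference pipeline.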

ExHuBERT: Enhancing HuBERT Through Block Extension and Fine-Tuning on 37 Emotion Datasets

Jun 11, 2024

Abstract:Foundation models have shown great promise in speech emotion recognition (SER) by leveraging their pre-trained representations to capture emotion patterns in speech signals. To further enhance SER performance across various languages and domains, we propose a novel twofold approach. First, we gather EmoSet++, a comprehensive multi-lingual, multi-cultural speech emotion corpus with 37 datasets, 150,907 samples, and a total duration of 119.5 hours. Second, we introduce ExHuBERT, an enhanced version of HuBERT achieved by backbone extension and fine-tuning on EmoSet++. We duplicate each encoder layer and its weights, then freeze the first duplicate, integrating an extra zero-initialized linear layer and skip connections to preserve functionality and ensure its adaptability for subsequent fine-tuning. Our evaluation on unseen datasets shows the efficacy of ExHuBERT, setting a new benchmark for various SER tasks. Model and details on EmoSet++: https://huggingface.co/amiriparian/ExHuBERT.
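
To illustrate why a zero-initialised layer with a skip connection preserves the original behaviour, here is a toy sketch in plain Python. The "encoder layer" is a stand-in (a residual linear map with ReLU), and the wiring of the duplicate, the gate, and all names are assumptions; the abstract does not specify the exact architecture.

```python
def linear(weights, x):
    # Apply a square weight matrix (list of rows) to a vector.
    return [sum(w * v for w, v in zip(row, x)) for row in weights]

def make_block(weights):
    # Toy stand-in for an encoder layer: x + ReLU(W x).
    def block(x):
        return [xi + max(0.0, hi) for xi, hi in zip(x, linear(weights, x))]
    return block

def extend_block(weights):
    """Duplicate a block and join the copy through a zero-initialised gate."""
    dim = len(weights)
    frozen = make_block(weights)                         # first duplicate, kept fixed
    trainable = make_block([row[:] for row in weights])  # second duplicate, copied weights
    gate = [[0.0] * dim for _ in range(dim)]             # zero-initialised linear layer

    def extended(x):
        h = frozen(x)
        # Skip connection: with gate == 0 the output equals the frozen block's output.
        return [hi + gi for hi, gi in zip(h, linear(gate, trainable(h)))]

    return extended, gate
```

At initialisation `extended(x)` returns the same values as the original block, so the pre-trained function is untouched; the copy only starts to contribute once fine-tuning moves the gate weights away from zero.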

The MuSe 2024 Multimodal Sentiment Analysis Challenge: Social Perception and Humor Recognition

Jun 11, 2024

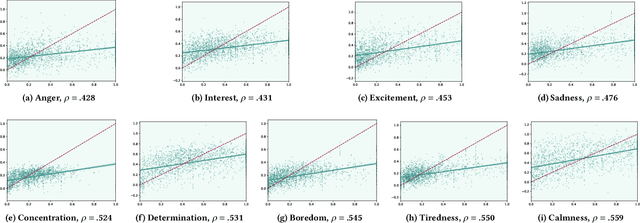

Abstract:The Multimodal Sentiment Analysis Challenge (MuSe) 2024 addresses two contemporary multimodal affect and sentiment analysis problems: In the Social Perception Sub-Challenge (MuSe-Perception), participants will predict 16 different social attributes of individuals such as assertiveness, dominance, likability, and sincerity based on the provided audio-visual data. The Cross-Cultural Humor Detection Sub-Challenge (MuSe-Humor) dataset expands upon the Passau Spontaneous Football Coach Humor (Passau-SFCH) dataset, focusing on the detection of spontaneous humor in a cross-lingual and cross-cultural setting. The main objective of MuSe 2024 is to unite a broad audience from various research domains, including multimodal sentiment analysis, audio-visual affective computing, continuous signal processing, and natural language processing. By fostering collaboration and exchange among experts in these fields, the MuSe 2024 endeavors to advance the understanding and application of sentiment analysis and affective computing across multiple modalities. This baseline paper provides details on each sub-challenge and its corresponding dataset, extracted features from each data modality, and discusses challenge baselines. For our baseline system, we make use of a range of Transformers and expert-designed features and train Gated Recurrent Unit (GRU)-Recurrent Neural Network (RNN) models on them, resulting in a competitive baseline system. On the unseen test datasets of the respective sub-challenges, it achieves a mean Pearson's Correlation Coefficient ($\rho$) of 0.3573 for MuSe-Perception and an Area Under the Curve (AUC) value of 0.8682 for MuSe-Humor.
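
For readers unfamiliar with the two reported metrics, the sketch below computes Pearson's correlation coefficient and a pairwise-comparison AUC in plain Python. It is an illustration of the standard definitions, not the challenge's evaluation script; the function names are hypothetical.

```python
import math

def pearson(x, y):
    # Pearson's correlation coefficient between two equal-length sequences.
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def roc_auc(labels, scores):
    # Probability that a random positive outscores a random negative;
    # ties count as half a win.
    pos = [s for l, s in zip(labels, scores) if l == 1]
    neg = [s for l, s in zip(labels, scores) if l == 0]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0
               for p in pos for q in neg)
    return wins / (len(pos) * len(neg))
```

A mean correlation such as the one reported for MuSe-Perception would then be the average of `pearson` over the individual attributes.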

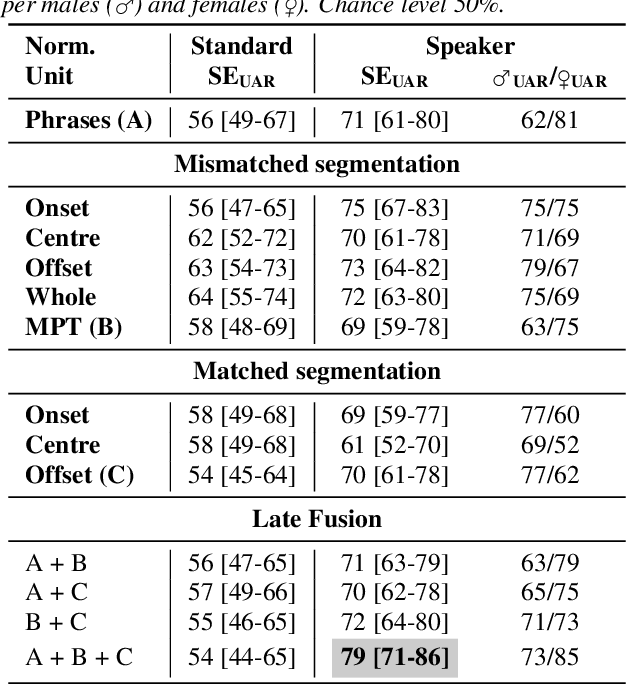

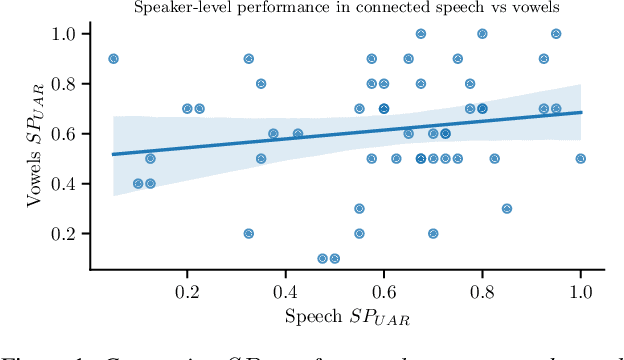

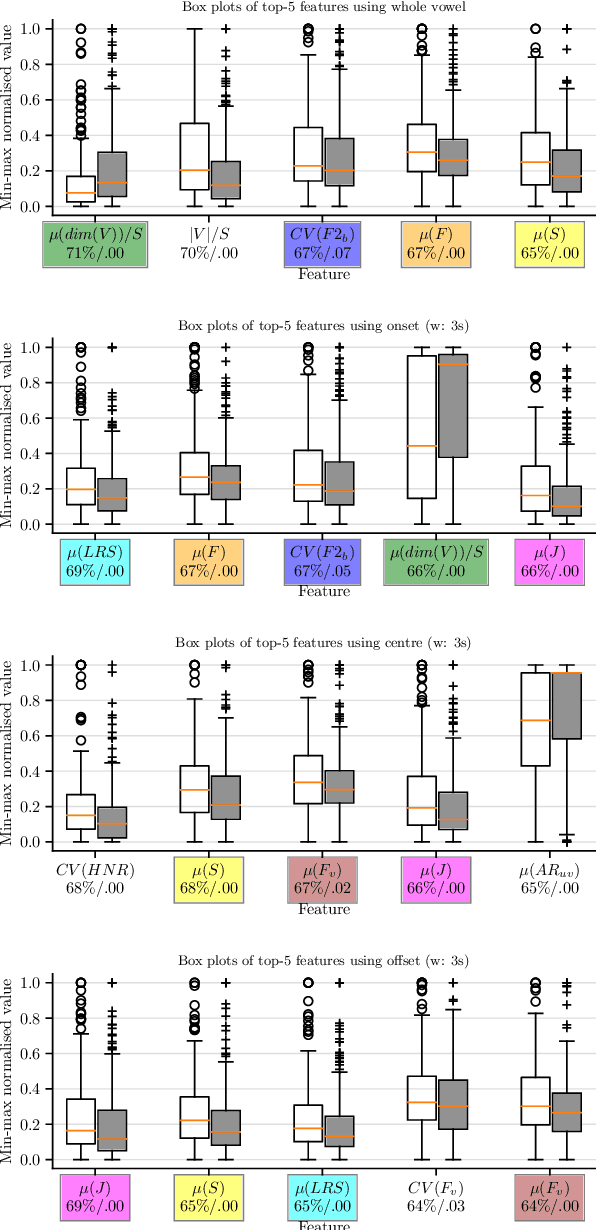

Sustained Vowels for Pre- vs Post-Treatment COPD Classification

Jun 10, 2024

Abstract:Chronic obstructive pulmonary disease (COPD) is a serious inflammatory lung disease affecting millions of people around the world. Due to an obstructed airflow from the lungs, it also becomes manifest in patients' vocal behaviour. Of particular importance is the detection of an exacerbation episode, which marks an acute phase and often requires hospitalisation and treatment. Previous work has shown that it is possible to distinguish between a pre- and a post-treatment state using automatic analysis of read speech. In this contribution, we examine whether sustained vowels can provide a complementary lens for telling apart these two states. Using a cohort of 50 patients, we show that the inclusion of sustained vowels can improve performance to up to 79% unweighted average recall, from a 71% baseline using read speech. We further identify and interpret the most important acoustic features that characterise the manifestation of COPD in sustained vowels.
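
Unweighted average recall (UAR) is the mean of the per-class recalls, so each class counts equally regardless of how many samples it has. A minimal sketch in plain Python, with a hypothetical function name and made-up labels:

```python
def unweighted_average_recall(y_true, y_pred):
    # Mean of per-class recalls over the classes present in y_true.
    recalls = []
    for c in sorted(set(y_true)):
        idx = [i for i, t in enumerate(y_true) if t == c]
        hits = sum(1 for i in idx if y_pred[i] == c)
        recalls.append(hits / len(idx))
    return sum(recalls) / len(recalls)
```

For two classes this is the same quantity as balanced accuracy, which is why it is a common choice when the pre- and post-treatment groups differ in size.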

Enhancing Suicide Risk Assessment: A Speech-Based Automated Approach in Emergency Medicine

Apr 18, 2024

Abstract:The delayed access to specialized psychiatric assessments and care for patients at risk of suicidal tendencies in emergency departments creates a notable gap in timely intervention, hindering the provision of adequate mental health support during critical situations. To address this, we present a non-invasive, speech-based approach for automatic suicide risk assessment. For our study, we have collected a novel dataset of speech recordings from 20 patients from which we extract three sets of features, including wav2vec, interpretable speech and acoustic features, and deep learning-based spectral representations. We proceed by conducting a binary classification to assess suicide risk in a leave-one-subject-out fashion. Our most effective speech model achieves a balanced accuracy of 66.2%. Moreover, we show that integrating our speech model with a series of patients' metadata, such as the history of suicide attempts or access to firearms, improves the overall result. The metadata integration yields a balanced accuracy of 94.4%, marking an absolute improvement of 28.2 percentage points, demonstrating the efficacy of our proposed approaches for automatic suicide risk assessment in emergency medicine.
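
Leave-one-subject-out evaluation holds out all recordings of one patient per fold, so no speaker appears in both training and test data. The sketch below only generates the index splits; the function name and the subject identifiers are hypothetical.

```python
def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices), one fold per subject."""
    for held_out in sorted(set(subject_ids)):
        train = [i for i, s in enumerate(subject_ids) if s != held_out]
        test = [i for i, s in enumerate(subject_ids) if s == held_out]
        yield held_out, train, test
```

With 20 patients this yields 20 folds; the per-fold predictions are typically pooled before computing a metric such as balanced accuracy.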

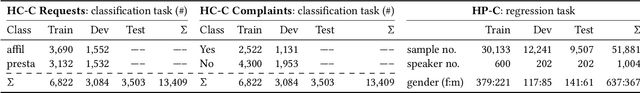

The ACM Multimedia 2023 Computational Paralinguistics Challenge: Emotion Share & Requests

May 01, 2023

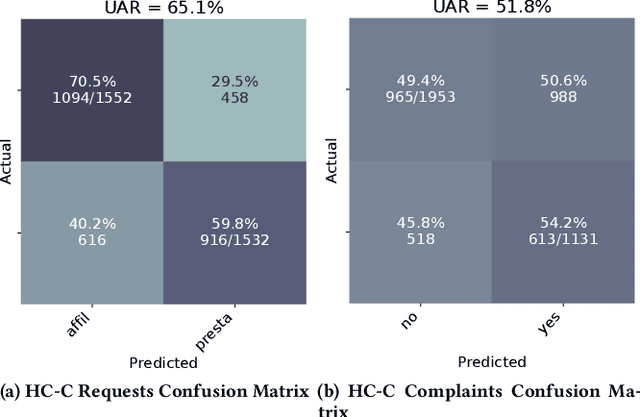

Abstract:The ACM Multimedia 2023 Computational Paralinguistics Challenge addresses two different problems for the first time in a research competition under well-defined conditions: In the Emotion Share Sub-Challenge, a regression on speech has to be made; and in the Requests Sub-Challenge, requests and complaints need to be detected. We describe the Sub-Challenges, baseline feature extraction, and classifiers based on the usual ComPaRE features, the auDeep toolkit, and deep feature extraction from pre-trained CNNs using the DeepSpectRum toolkit; in addition, wav2vec2 models are used.

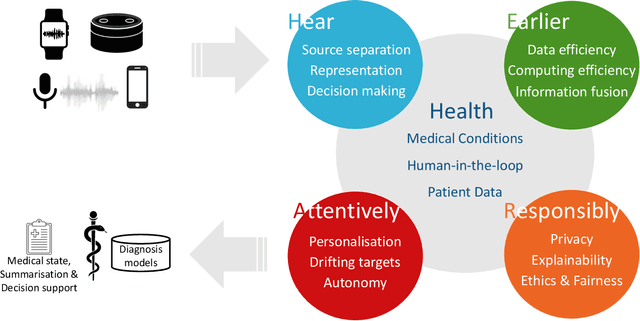

HEAR4Health: A blueprint for making computer audition a staple of modern healthcare

Jan 25, 2023

Abstract:Recent years have seen a rapid increase in digital medicine research in an attempt to transform traditional healthcare systems to their modern, intelligent, and versatile equivalents that are adequately equipped to tackle contemporary challenges. This has led to a wave of applications that utilise AI technologies; first and foremost in the fields of medical imaging, but also in the use of wearables and other intelligent sensors. In comparison, computer audition can be seen to be lagging behind, at least in terms of commercial interest. Yet, audition has long been a staple assistant for medical practitioners, with the stethoscope being the quintessential sign of doctors around the world. Transforming this traditional technology with the use of AI entails a set of unique challenges. We categorise the advances needed in four key pillars: Hear, corresponding to the cornerstone technologies needed to analyse auditory signals in real-life conditions; Earlier, for the advances needed in computational and data efficiency; Attentively, for accounting for individual differences and handling the longitudinal nature of medical data; and, finally, Responsibly, for ensuring compliance with the ethical standards accorded to the field of medicine.

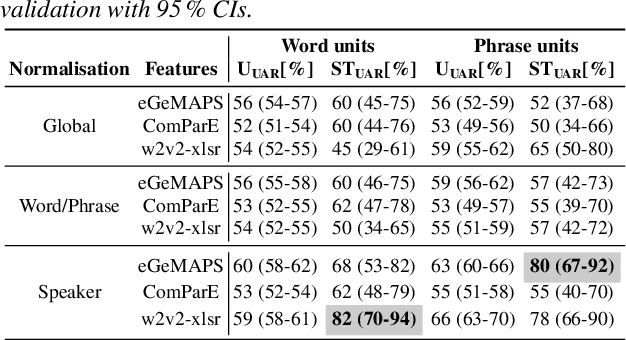

Distinguishing between pre- and post-treatment in the speech of patients with chronic obstructive pulmonary disease

Jul 26, 2022

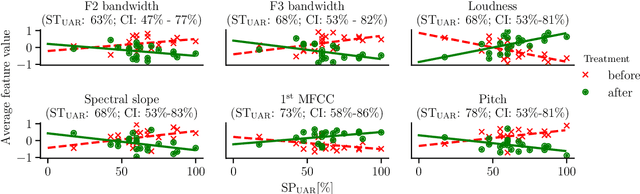

Abstract:Chronic obstructive pulmonary disease (COPD) causes lung inflammation and airflow blockage leading to a variety of respiratory symptoms; it is also a leading cause of death and affects millions of individuals around the world. Patients often require treatment and hospitalisation, while no cure is currently available. As COPD predominantly affects the respiratory system, speech and non-linguistic vocalisations present a major avenue for measuring the effect of treatment. In this work, we present results on a new COPD dataset of 20 patients, showing that, by employing personalisation through speaker-level feature normalisation, we can distinguish between pre- and post-treatment speech with an unweighted average recall (UAR) of up to 82% in (nested) leave-one-speaker-out cross-validation. We further identify the most important features and link them to pathological voice properties, thus enabling an auditory interpretation of treatment effects. Monitoring tools based on such approaches may help objectivise the clinical status of COPD patients and facilitate personalised treatment plans.
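
Speaker-level feature normalisation can be sketched as z-scoring each feature with the mean and standard deviation of the same speaker's recordings only. The abstract does not state which normalisation was used, so the z-score, the fallback for constant features, and the function name are assumptions for illustration.

```python
from statistics import mean, pstdev

def speaker_normalise(features, speakers):
    """Z-score every feature column using statistics of the same speaker only."""
    dims = len(features[0])
    out = [None] * len(features)
    for spk in set(speakers):
        idx = [i for i, s in enumerate(speakers) if s == spk]
        mus = [mean([features[i][d] for i in idx]) for d in range(dims)]
        # Fall back to 1.0 when a feature is constant for this speaker.
        sds = [pstdev([features[i][d] for i in idx]) or 1.0 for d in range(dims)]
        for i in idx:
            out[i] = [(features[i][d] - mus[d]) / sds[d] for d in range(dims)]
    return out
```

Because the statistics come from each speaker alone, the step removes between-speaker offsets (for example, a generally lower pitch) and leaves the within-speaker change between recordings, which is the signal of interest when comparing pre- and post-treatment speech.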

The ACM Multimedia 2022 Computational Paralinguistics Challenge: Vocalisations, Stuttering, Activity, & Mosquitoes

May 13, 2022

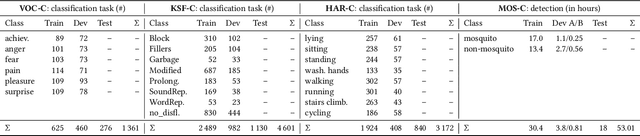

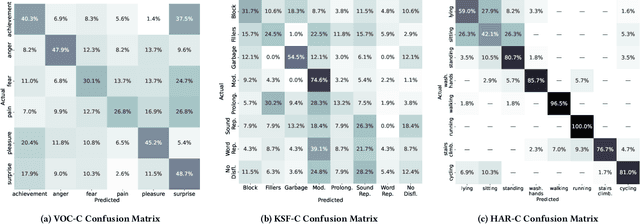

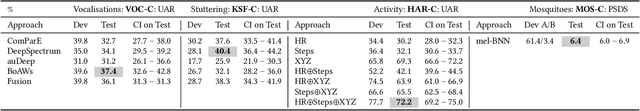

Abstract:The ACM Multimedia 2022 Computational Paralinguistics Challenge addresses four different problems for the first time in a research competition under well-defined conditions: In the Vocalisations and Stuttering Sub-Challenges, a classification on human non-verbal vocalisations and speech has to be made; the Activity Sub-Challenge aims at beyond-audio human activity recognition from smartwatch sensor data; and in the Mosquitoes Sub-Challenge, mosquitoes need to be detected. We describe the Sub-Challenges, baseline feature extraction, and classifiers based on the usual ComPaRE and BoAW features, the auDeep toolkit, and deep feature extraction from pre-trained CNNs using the DeepSpectRum toolkit; in addition, we add end-to-end sequential modelling, and a log-mel-128-BNN.

Fatigue Prediction in Outdoor Running Conditions using Audio Data

May 09, 2022

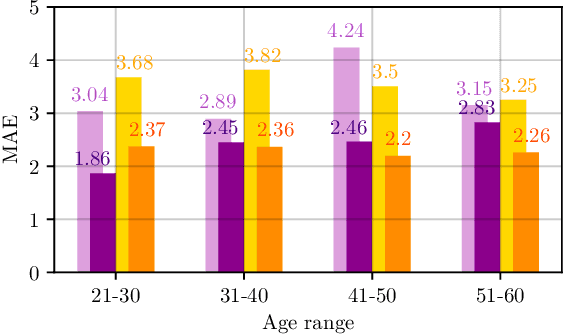

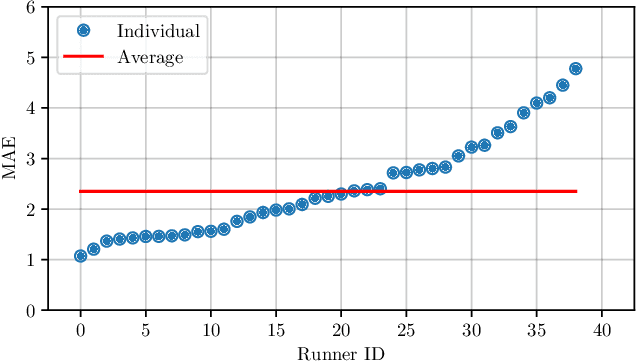

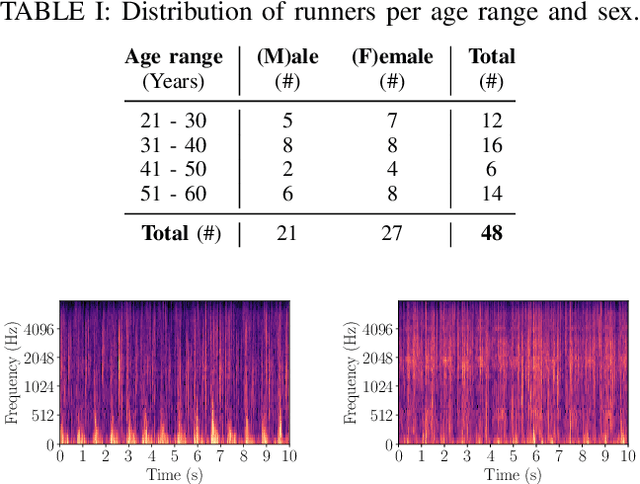

Abstract:Although running is a common leisure activity and a core training regimen for several athletes, between 29% and 79% of runners sustain an overuse injury each year. These injuries are linked to excessive fatigue, which alters how someone runs. In this work, we explore the feasibility of modelling the Borg rating of perceived exertion (RPE) scale (range: 6 to 20), a well-validated subjective measure of fatigue, using audio data captured in realistic outdoor environments via smartphones attached to the runners' arms. Using convolutional neural networks (CNNs) on log-Mel spectrograms, we obtain a mean absolute error of 2.35 in subject-dependent experiments, demonstrating that audio can be effectively used to model fatigue, while being more easily and non-invasively acquired than signals from other sensors.
