Björn W. Schuller

EIHW -- Chair of Embedded Intelligence for Health Care and Wellbeing, University of Augsburg, Germany, GLAM -- Group on Language, Audio, and Music, Imperial College London, UK

Cross-Dialect Bird Species Recognition with Dialect-Calibrated Augmentation

Sep 26, 2025

Abstract:Dialect variation hampers automatic recognition of bird calls collected by passive acoustic monitoring. We address the problem on DB3V, a three-region, ten-species corpus of 8-s clips, and propose a deployable framework built on Time-Delay Neural Networks (TDNNs). Frequency-sensitive normalisation (Instance Frequency Normalisation and a gated Relaxed-IFN) is paired with gradient-reversal adversarial training to learn region-invariant embeddings. A multi-level augmentation scheme combines waveform perturbations, Mixup for rare classes, and CycleGAN transfer that synthesises Region 2 (Interior Plains)-style audio, with Dialect-Calibrated Augmentation (DCA) softly down-weighting synthetic samples to limit artifacts. The complete system lifts cross-dialect accuracy by up to twenty percentage points over baseline TDNNs while preserving in-region performance. Grad-CAM and LIME analyses show that robust models concentrate on stable harmonic bands, providing ecologically meaningful explanations. The study demonstrates that lightweight, transparent, and dialect-resilient bird-sound recognition is attainable.
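
As a rough illustration of two ideas named in this abstract, the sketch below shows Mixup (a convex blend of two examples) and a loss in which synthetic samples carry a reduced weight. It is not the authors' implementation: the function names, the fixed `synthetic_weight` of 0.5, and the toy inputs are all assumptions made for this example, and the abstract does not say how DCA actually calibrates its weights.

```python
import math

def mixup(x1, x2, lam):
    """Blend two equal-length vectors (features or one-hot labels) with ratio lam."""
    return [lam * a + (1.0 - lam) * b for a, b in zip(x1, x2)]

def weighted_nll(probs, labels, is_synthetic, synthetic_weight=0.5):
    """Negative log-likelihood where synthetic samples count less.

    probs: per-sample class probabilities; labels: true class indices.
    synthetic_weight is a hypothetical constant, not a value from the paper.
    """
    weights = [synthetic_weight if s else 1.0 for s in is_synthetic]
    losses = [-math.log(p[y]) for p, y in zip(probs, labels)]
    return sum(w * l for w, l in zip(weights, losses)) / sum(weights)

# Toy values, invented for illustration only.
mixed = mixup([1.0, 0.0], [0.0, 1.0], lam=0.25)
loss = weighted_nll(
    probs=[[0.5, 0.5], [0.25, 0.75]],
    labels=[0, 0],
    is_synthetic=[False, True],
)
```

In practice `lam` is usually drawn from a Beta distribution (for example with `random.betavariate`); it is passed explicitly here so the example stays deterministic.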

Affine Modulation-based Audiogram Fusion Network for Joint Noise Reduction and Hearing Loss Compensation

Sep 09, 2025

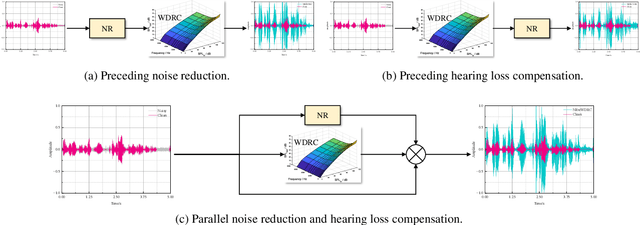

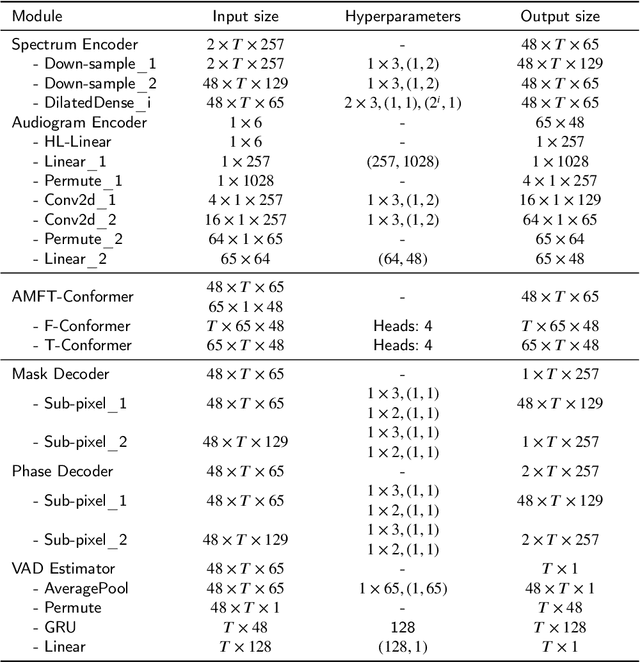

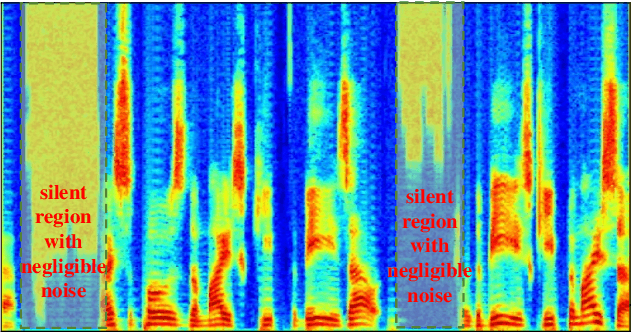

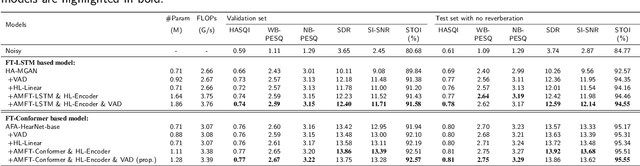

Abstract:Hearing aids (HAs) are widely used to provide personalized speech enhancement (PSE) services, improving the quality of life for individuals with hearing loss. However, HA performance significantly declines in noisy environments as it treats noise reduction (NR) and hearing loss compensation (HLC) as separate tasks. This separation leads to a lack of systematic optimization, overlooking the interactions between these two critical tasks, and increases the system complexity. To address these challenges, we propose a novel audiogram fusion network, named AFN-HearNet, which simultaneously tackles the NR and HLC tasks by fusing cross-domain audiogram and spectrum features. We propose an audiogram-specific encoder that transforms the sparse audiogram profile into a deep representation, addressing the alignment problem of cross-domain features prior to fusion. To incorporate the interactions between NR and HLC tasks, we propose the affine modulation-based audiogram fusion frequency-temporal Conformer that adaptively fuses these two features into a unified deep representation for speech reconstruction. Furthermore, we introduce a voice activity detection auxiliary training task to embed speech and non-speech patterns into the unified deep representation implicitly. We conduct comprehensive experiments across multiple datasets to validate the effectiveness of each proposed module. The results indicate that the AFN-HearNet significantly outperforms state-of-the-art in-context fusion joint models regarding key metrics such as HASQI and PESQ, achieving a considerable trade-off between performance and efficiency. The source code and data will be released at https://github.com/deepnetni/AFN-HearNet.
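
One common reading of "affine modulation" is a feature-wise scale and shift, where a conditioning signal supplies a per-channel gain and offset for another feature stream. The sketch below shows only that generic operation. Whether AFN-HearNet uses exactly this form is not stated in the abstract, and the names `affine_modulate`, `gamma`, and `beta` are assumptions; in a real model the gain and offset would be predicted from the audiogram embedding by a learned layer.

```python
def affine_modulate(features, gamma, beta):
    """Apply a per-channel scale (gamma) and shift (beta) to every frame.

    features: list of frames, each a list of channel values.
    gamma, beta: one value per channel (hypothetically derived from the audiogram).
    """
    return [
        [g * x + b for x, g, b in zip(frame, gamma, beta)]
        for frame in features
    ]

# Toy spectrum features (2 frames x 2 channels) and conditioning values.
spectrum = [[1.0, 2.0], [3.0, 4.0]]
modulated = affine_modulate(spectrum, gamma=[2.0, 0.5], beta=[0.0, 1.0])
```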

Speech-Based Depressive Mood Detection in the Presence of Multiple Sclerosis: A Cross-Corpus and Cross-Lingual Study

Aug 25, 2025

Abstract:Depression commonly co-occurs with neurodegenerative disorders like Multiple Sclerosis (MS), yet the potential of speech-based Artificial Intelligence for detecting depression in such contexts remains unexplored. This study examines the transferability of speech-based depression detection methods to people with MS (pwMS) through cross-corpus and cross-lingual analysis using English data from the general population and German data from pwMS. Our approach implements supervised machine learning models using: 1) conventional speech and language features commonly used in the field, 2) emotional dimensions derived from a Speech Emotion Recognition (SER) model, and 3) exploratory speech feature analysis. Despite limited data, our models detect depressive mood in pwMS with moderate generalisability, achieving a 66% Unweighted Average Recall (UAR) on a binary task. Feature selection further improved performance, boosting UAR to 74%. Our findings also highlight the relevant role emotional changes have as an indicator of depressive mood in both the general population and within pwMS. This study provides an initial exploration into generalising speech-based depression detection, even in the presence of co-occurring conditions, such as neurodegenerative diseases.
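
For readers unfamiliar with the metric, UAR is the mean of the per-class recalls, so each class contributes equally regardless of how many samples it has. The following minimal sketch computes it on toy labels that were invented for illustration and are not data from the study.

```python
from collections import defaultdict

def unweighted_average_recall(y_true, y_pred):
    """Mean of per-class recalls; every class counts equally regardless of size."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for t, p in zip(y_true, y_pred):
        totals[t] += 1
        if t == p:
            hits[t] += 1
    recalls = [hits[c] / totals[c] for c in totals]
    return sum(recalls) / len(recalls)

# Imbalanced binary toy example: four samples of class 0, two of class 1.
y_true = [0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 1, 1, 0]
uar = unweighted_average_recall(y_true, y_pred)
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
```

Here class 0 has recall 3/4 and class 1 has recall 1/2, giving a UAR of 0.625, while plain accuracy is 4/6. On a binary task, chance-level UAR is 50% no matter how imbalanced the classes are.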

I$^2$S-TFCKD: Intra-Inter Set Knowledge Distillation with Time-Frequency Calibration for Speech Enhancement

Jun 16, 2025

Abstract:In recent years, complexity compression of neural network (NN)-based speech enhancement (SE) models has gradually attracted the attention of researchers, especially in scenarios with limited hardware resources or strict latency requirements. The main difficulties and challenges lie in achieving a balance between complexity and performance according to the characteristics of the task. In this paper, we propose an intra-inter set knowledge distillation (KD) framework with time-frequency calibration (I$^2$S-TFCKD) for SE. Different from previous distillation strategies for SE, the proposed framework fully utilizes the time-frequency differential information of speech while promoting global knowledge flow. Firstly, we propose a multi-layer interactive distillation based on dual-stream time-frequency cross-calibration, which calculates the teacher-student similarity calibration weights in the time and frequency domains respectively and performs cross-weighting, thus enabling refined allocation of distillation contributions across different layers according to speech characteristics. Secondly, we construct a collaborative distillation paradigm for intra-set and inter-set correlations. Within a correlated set, multi-layer teacher-student features are pairwise matched for calibrated distillation. Subsequently, we generate representative features from each correlated set through residual fusion to form the fused feature set that enables inter-set knowledge interaction. The proposed distillation strategy is applied to the dual-path dilated convolutional recurrent network (DPDCRN) that ranked first in the SE track of the L3DAS23 challenge. Objective evaluations demonstrate that the proposed KD strategy consistently and effectively improves the performance of the low-complexity student model and outperforms other distillation schemes.

MELT: Towards Automated Multimodal Emotion Data Annotation by Leveraging LLM Embedded Knowledge

May 30, 2025

Abstract:Although speech emotion recognition (SER) has advanced significantly with deep learning, annotation remains a major hurdle. Human annotation is not only costly but also subject to inconsistencies: annotators often have different preferences and may lack the necessary contextual knowledge, which can lead to varied and inaccurate labels. Meanwhile, Large Language Models (LLMs) have emerged as a scalable alternative for annotating text data. However, the potential of LLMs to perform emotional speech data annotation without human supervision has yet to be thoroughly investigated. To address these problems, we apply GPT-4o to annotate a multimodal dataset collected from the sitcom Friends, using only textual cues as inputs. By crafting structured text prompts, our methodology capitalizes on the knowledge GPT-4o has accumulated during its training, showcasing that it can generate accurate and contextually relevant annotations without direct access to multimodal inputs. Therefore, we propose MELT, a multimodal emotion dataset fully annotated by GPT-4o. We demonstrate the effectiveness of MELT by fine-tuning four self-supervised learning (SSL) backbones and assessing speech emotion recognition performance across emotion datasets. Additionally, the results of our subjective experiments demonstrate a consistent performance improvement on SER.

Large Language Models for Depression Recognition in Spoken Language Integrating Psychological Knowledge

May 28, 2025

Abstract:Depression is a growing concern gaining attention in both public discourse and AI research. While deep neural networks (DNNs) have been used for recognition, they still lack real-world effectiveness. Large language models (LLMs) show strong potential but require domain-specific fine-tuning and struggle with non-textual cues. Since depression is often expressed through vocal tone and behaviour rather than explicit text, relying on language alone is insufficient. Diagnostic accuracy also suffers without incorporating psychological expertise. To address these limitations, we present, to the best of our knowledge, the first application of LLMs to multimodal depression detection using the DAIC-WOZ dataset. We extract audio features using the pre-trained Wav2Vec model and map them to text-based LLMs for further processing. We also propose a novel strategy for incorporating psychological knowledge into LLMs to enhance diagnostic performance, specifically using a question and answer set to grant authorised knowledge to LLMs. Our approach yields a notable improvement in both Mean Absolute Error (MAE) and Root Mean Square Error (RMSE) compared to a base score proposed by the related original paper. The code is available at https://github.com/myxp-lyp/Depression-detection.git
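
The two error metrics reported here can be computed as in the minimal sketch below. The severity scores are toy values invented for illustration and are not results from the paper.

```python
import math

def mae(y_true, y_pred):
    """Mean Absolute Error: average magnitude of the prediction errors."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    """Root Mean Square Error: penalises large errors more heavily than MAE."""
    return math.sqrt(
        sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    )

# Toy severity scores (hypothetical).
true_scores = [4, 10, 15, 20]
pred_scores = [6, 9, 15, 16]
mae_value = mae(true_scores, pred_scores)
rmse_value = rmse(true_scores, pred_scores)
```

The absolute errors are 2, 1, 0, and 4, so the MAE is 1.75, while the RMSE is the square root of 5.25 (about 2.29). RMSE is never smaller than MAE, and the gap widens when a few predictions are far off.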

Neuroplasticity in Artificial Intelligence -- An Overview and Inspirations on Drop In & Out Learning

Mar 27, 2025

Abstract:Artificial Intelligence (AI) has achieved new levels of performance and spread in public usage with the rise of deep neural networks (DNNs). Initially inspired by human neurons and their connections, NNs have become the foundation of AI models for many advanced architectures. However, some of the most integral processes in the human brain, particularly neurogenesis and neuroplasticity, in addition to the more widespread neuroapoptosis, have largely been ignored in DNN architecture design. Instead, contemporary AI development predominantly focuses on constructing advanced frameworks, such as large language models, which retain a static structure of neural connections during training and inference. In this light, we explore how neurogenesis, neuroapoptosis, and neuroplasticity can inspire future AI advances. Specifically, we examine analogous activities in artificial NNs, introducing the concepts of "dropin" for neurogenesis and revisiting "dropout" and structural pruning for neuroapoptosis. We additionally suggest neuroplasticity combining the two for future large NNs in "life-long learning" settings following the biological inspiration. We conclude by advocating for greater research efforts in this interdisciplinary domain and identifying promising directions for future exploration.

GatedxLSTM: A Multimodal Affective Computing Approach for Emotion Recognition in Conversations

Mar 26, 2025

Abstract:Affective Computing (AC) is essential for advancing Artificial General Intelligence (AGI), with emotion recognition serving as a key component. However, human emotions are inherently dynamic, influenced not only by an individual's expressions but also by interactions with others, and single-modality approaches often fail to capture their full dynamics. Multimodal Emotion Recognition (MER) leverages multiple signals but traditionally relies on utterance-level analysis, overlooking the dynamic nature of emotions in conversations. Emotion Recognition in Conversation (ERC) addresses this limitation, yet existing methods struggle to align multimodal features and explain why emotions evolve within dialogues. To bridge this gap, we propose GatedxLSTM, a novel speech-text multimodal ERC model that explicitly considers voice and transcripts of both the speaker and their conversational partner(s) to identify the most influential sentences driving emotional shifts. By integrating Contrastive Language-Audio Pretraining (CLAP) for improved cross-modal alignment and employing a gating mechanism to emphasise emotionally impactful utterances, GatedxLSTM enhances both interpretability and performance. Additionally, the Dialogical Emotion Decoder (DED) refines emotion predictions by modelling contextual dependencies. Experiments on the IEMOCAP dataset demonstrate that GatedxLSTM achieves state-of-the-art (SOTA) performance among open-source methods in four-class emotion classification. These results validate its effectiveness for ERC applications and provide an interpretability analysis from a psychological perspective.

Parameterised Quantum Circuits for Novel Representation Learning in Speech Emotion Recognition

Jan 21, 2025

Abstract:Speech Emotion Recognition (SER) is a complex and challenging task in human-computer interaction due to the intricate dependencies of features and the overlapping nature of emotional expressions conveyed through speech. Although traditional deep learning methods have shown effectiveness, they often struggle to capture subtle emotional variations and overlapping states. This paper introduces a hybrid classical-quantum framework that integrates Parameterised Quantum Circuits (PQCs) with conventional Convolutional Neural Network (CNN) architectures. By leveraging quantum properties such as superposition and entanglement, the proposed model enhances feature representation and captures complex dependencies more effectively than classical methods. Experimental evaluations conducted on benchmark datasets, including IEMOCAP, RECOLA, and MSP-Improv, demonstrate that the hybrid model achieves higher accuracy in both binary and multi-class emotion classification while significantly reducing the number of trainable parameters. While a few existing studies have explored the feasibility of using Quantum Circuits to reduce model complexity, none have successfully shown how they can enhance accuracy. This study is the first to demonstrate that Quantum Circuits have the potential to improve the accuracy of SER. The findings highlight the promise of quantum machine learning (QML) to transform SER, suggesting a promising direction for future research and practical applications in emotion-aware systems.

DOTA-ME-CS: Daily Oriented Text Audio-Mandarin English-Code Switching Dataset

Jan 21, 2025

Abstract:Code-switching, the alternation between two or more languages within communication, poses great challenges for Automatic Speech Recognition (ASR) systems. Existing models and datasets are limited in their ability to effectively handle these challenges. To address this gap and foster progress in code-switching ASR research, we introduce the DOTA-ME-CS: Daily oriented text audio Mandarin-English code-switching dataset, which consists of 18.54 hours of audio data, including 9,300 recordings from 34 participants. To enhance the dataset's diversity, we apply artificial intelligence (AI) techniques such as AI timbre synthesis, speed variation, and noise addition, thereby increasing the complexity and scalability of the task. The dataset is carefully curated to ensure both diversity and quality, providing a robust resource for researchers addressing the intricacies of bilingual speech recognition with detailed data analysis. We further demonstrate the dataset's potential in future research. The DOTA-ME-CS dataset, along with accompanying code, will be made publicly available.
