Manuel Milling

EIHW -- Chair of Embedded Intelligence for Health Care and Wellbeing, University of Augsburg, Germany

Large Language Models for Depression Recognition in Spoken Language Integrating Psychological Knowledge

May 28, 2025

Abstract: Depression is a growing concern gaining attention in both public discourse and AI research. While deep neural networks (DNNs) have been used for recognition, they still lack real-world effectiveness. Large language models (LLMs) show strong potential but require domain-specific fine-tuning and struggle with non-textual cues. Since depression is often expressed through vocal tone and behaviour rather than explicit text, relying on language alone is insufficient. Diagnostic accuracy also suffers without incorporating psychological expertise. To address these limitations, we present, to the best of our knowledge, the first application of LLMs to multimodal depression detection using the DAIC-WOZ dataset. We extract audio features using the pre-trained model Wav2Vec and map them to text-based LLMs for further processing. We also propose a novel strategy for incorporating psychological knowledge into LLMs to enhance diagnostic performance, specifically using a question and answer set to grant authorised knowledge to LLMs. Our approach yields a notable improvement in both Mean Absolute Error (MAE) and Root Mean Square Error (RMSE) compared to a base score proposed by the related original paper. The code is available at https://github.com/myxp-lyp/Depression-detection.git
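For readers unfamiliar with the two error metrics named in the abstract, the following is a minimal sketch of how MAE and RMSE are computed. The function names and the severity scores are illustrative assumptions and are not taken from the paper or its repository.

```python
import math


def mean_absolute_error(y_true, y_pred):
    """Average absolute difference between targets and predictions."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def root_mean_square_error(y_true, y_pred):
    """Square root of the average squared difference."""
    squared = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    return math.sqrt(squared / len(y_true))


# Made-up severity scores, for illustration only (not from the paper).
true_scores = [4, 10, 15, 7]
predicted_scores = [6, 9, 11, 7]

mae = mean_absolute_error(true_scores, predicted_scores)
rmse = root_mean_square_error(true_scores, predicted_scores)
print(f"MAE={mae:.2f}, RMSE={rmse:.2f}")
```

Because RMSE squares each error before averaging, it penalises large individual errors more heavily than MAE does, which is why the two are usually reported together.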

Neuroplasticity in Artificial Intelligence -- An Overview and Inspirations on Drop In & Out Learning

Mar 27, 2025

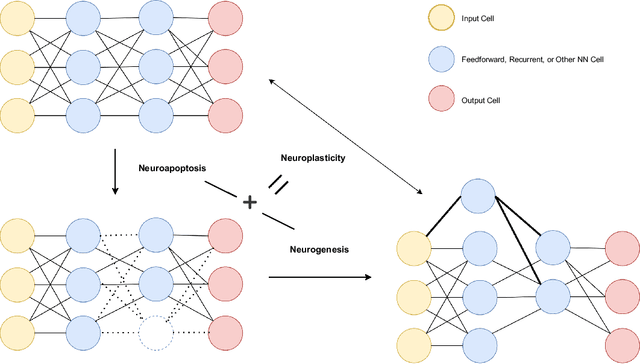

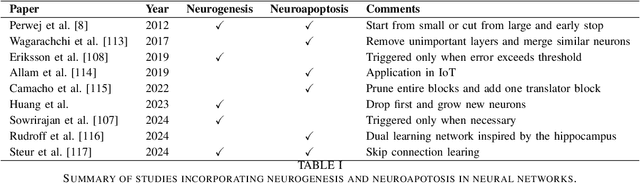

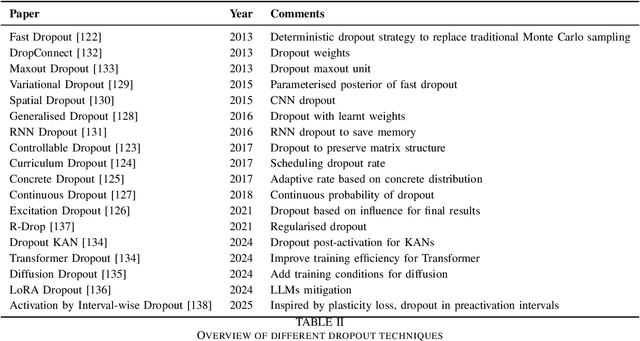

Abstract: Artificial Intelligence (AI) has achieved new levels of performance and spread in public usage with the rise of deep neural networks (DNNs). Initially inspired by human neurons and their connections, NNs have become the foundation of AI models for many advanced architectures. However, some of the most integral processes in the human brain, particularly neurogenesis and neuroplasticity, in addition to the more widespread neuroapoptosis, have largely been ignored in DNN architecture design. Instead, contemporary AI development predominantly focuses on constructing advanced frameworks, such as large language models, which retain a static structure of neural connections during training and inference. In this light, we explore how neurogenesis, neuroapoptosis, and neuroplasticity can inspire future AI advances. Specifically, we examine analogous activities in artificial NNs, introducing the concept of "dropin" for neurogenesis and revisiting "dropout" and structural pruning for neuroapoptosis. We additionally suggest neuroplasticity, combining the two, for future large NNs in "life-long learning" settings following the biological inspiration. We conclude by advocating for greater research efforts in this interdisciplinary domain and identifying promising directions for future exploration.

Detecting Document-level Paraphrased Machine Generated Content: Mimicking Human Writing Style and Involving Discourse Features

Dec 17, 2024

Abstract: The availability of high-quality APIs for Large Language Models (LLMs) has facilitated the widespread creation of Machine-Generated Content (MGC), posing challenges such as academic plagiarism and the spread of misinformation. Existing MGC detectors often focus solely on surface-level information, overlooking implicit and structural features. This makes them susceptible to deception by surface-level sentence patterns, particularly for longer texts and in texts that have been subsequently paraphrased. To overcome these challenges, we introduce novel methodologies and datasets. Besides the publicly available dataset Plagbench, we developed the paraphrased Long-Form Question and Answer (paraLFQA) and paraphrased Writing Prompts (paraWP) datasets using GPT and DIPPER, a discourse paraphrasing tool, by extending artifacts from their original versions. To address the challenge of detecting highly similar paraphrased texts, we propose MhBART, an encoder-decoder model designed to emulate human writing style while incorporating a novel difference score mechanism. This model outperforms strong classifier baselines and identifies deceptive sentence patterns. To better capture the structure of longer texts at document level, we propose DTransformer, a model that integrates discourse analysis through PDTB preprocessing to encode structural features. It results in substantial performance gains across both datasets -- 15.5% absolute improvement on paraLFQA, 4% absolute improvement on paraWP, and 1.5% absolute improvement on M4 compared to SOTA approaches.

autrainer: A Modular and Extensible Deep Learning Toolkit for Computer Audition Tasks

Dec 16, 2024

Abstract: This work introduces the key operating principles for autrainer, our new deep learning training framework for computer audition tasks. autrainer is a PyTorch-based toolkit that allows for rapid, reproducible, and easily extensible training on a variety of different computer audition tasks. Concretely, autrainer offers low-code training and supports a wide range of neural networks as well as preprocessing routines. In this work, we present an overview of its inner workings and key capabilities.

From Audio Deepfake Detection to AI-Generated Music Detection -- A Pathway and Overview

Nov 30, 2024

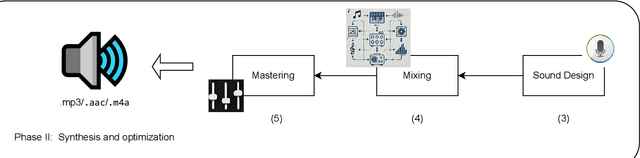

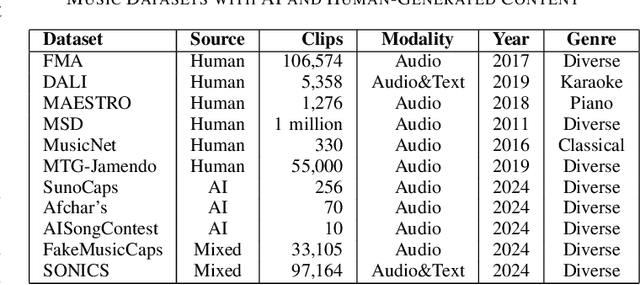

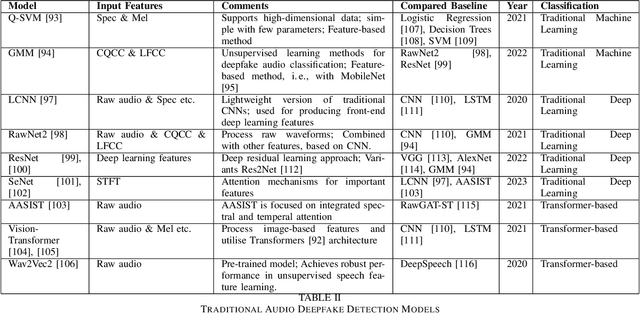

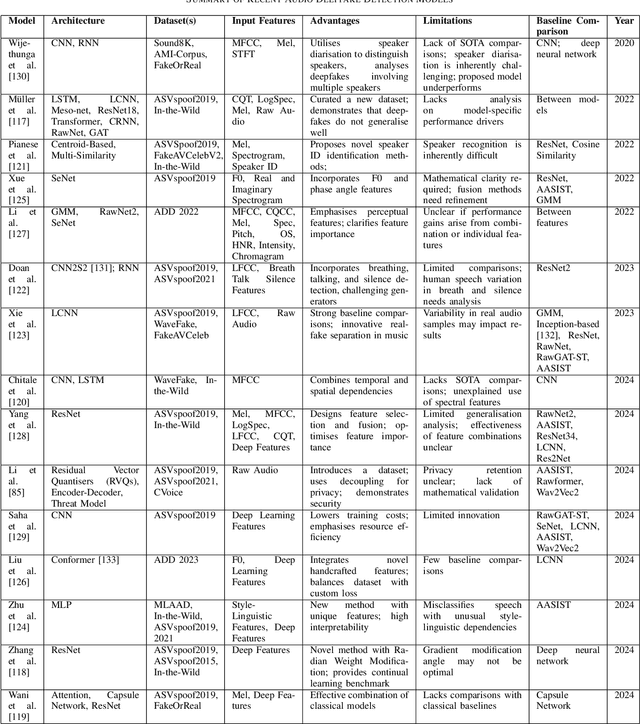

Abstract: As Artificial Intelligence (AI) technologies continue to evolve, their use in generating realistic, contextually appropriate content has expanded into various domains. Music, an art form and medium for entertainment deeply rooted in human culture, is seeing an increased involvement of AI in its production. However, the unregulated use of AI music generation (AIGM) tools raises concerns about potential negative impacts on the music industry, copyright and artistic integrity, underscoring the importance of effective AIGM detection. This paper provides an overview of existing AIGM detection methods. To lay a foundation for the general workings and challenges of AIGM detection, we first review general principles of AIGM, including recent advancements in deepfake audios, as well as multimodal detection techniques. We further propose a potential pathway for leveraging foundation models from audio deepfake detection to AIGM detection. Additionally, we discuss implications of these tools and propose directions for future research to address ongoing challenges in the field.

Does the Definition of Difficulty Matter? Scoring Functions and their Role for Curriculum Learning

Nov 01, 2024

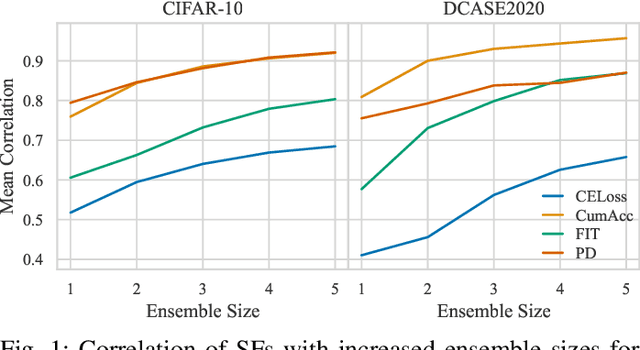

Abstract: Curriculum learning (CL) describes a machine learning training strategy in which samples are gradually introduced into the training process based on their difficulty. Despite a partially contradictory body of evidence in the literature, CL finds popularity in deep learning research due to its promise of leveraging human-inspired curricula to achieve higher model performance. Yet, the subjectivity and biases that follow any necessary definition of difficulty, especially for those found in orderings derived from models or training statistics, have rarely been investigated. To shed more light on the underlying unanswered questions, we conduct an extensive study on the robustness and similarity of the most common scoring functions for sample difficulty estimation, as well as their potential benefits in CL, using the popular benchmark dataset CIFAR-10 and the acoustic scene classification task from the DCASE2020 challenge as representatives of computer vision and computer audition, respectively. We report a strong dependence of scoring functions on the training setting, including randomness, which can partly be mitigated through ensemble scoring. While we do not find a general advantage of CL over uniform sampling, we observe that the ordering in which data is presented for CL-based training plays an important role in model performance. Furthermore, we find that the robustness of scoring functions across random seeds positively correlates with CL performance. Finally, we uncover that models trained with different CL strategies complement each other by boosting predictive power through late fusion, likely due to differences in the learnt concepts. Alongside our findings, we release the aucurriculum toolkit (https://github.com/autrainer/aucurriculum), implementing sample difficulty and CL-based training in a modular fashion.
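The two ideas at the core of this abstract, ensemble scoring and difficulty-ordered sample introduction, can be sketched generically as follows. This is an illustrative assumption of how such a scheme might look, not the aucurriculum API; the function names, the linear pacing, and the scores are all hypothetical.

```python
import math


def ensemble_scores(score_runs):
    """Average per-sample difficulty scores over several runs (e.g. seeds)."""
    n_runs = len(score_runs)
    n_samples = len(score_runs[0])
    return [sum(run[i] for run in score_runs) / n_runs for i in range(n_samples)]


def curriculum_subsets(difficulty, n_stages):
    """Yield index lists that grow from the easiest samples to the full set.

    Uses a simple linear pacing: stage k exposes the easiest k/n_stages
    fraction of the data (an assumption made for this sketch).
    """
    order = sorted(range(len(difficulty)), key=lambda i: difficulty[i])
    for stage in range(1, n_stages + 1):
        cutoff = math.ceil(len(order) * stage / n_stages)
        yield order[:cutoff]


# Hypothetical difficulty scores for four samples from two scoring runs.
runs = [[1, 9, 5, 3], [3, 7, 5, 3]]
scores = ensemble_scores(runs)
stages = list(curriculum_subsets(scores, n_stages=2))
print(scores)
print(stages)
```

Averaging over runs is one simple way to damp the seed dependence of scoring functions that the abstract reports; other aggregation schemes are equally possible.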

Audio-based Kinship Verification Using Age Domain Conversion

Oct 14, 2024

Abstract: Audio-based kinship verification (AKV) is important in many domains, such as home security monitoring, forensic identification, and social network analysis. A key challenge in the task arises from differences in age across samples from different individuals, which can be interpreted as a domain bias in a cross-domain verification task. To address this issue, we design the notion of an "age-standardised domain" wherein we utilise the optimised CycleGAN-VC3 network to perform age-audio conversion to generate the in-domain audio. The generated audio dataset is employed to extract a range of features, which are then fed into a metric learning architecture to verify kinship. Experiments are conducted on the KAN_AV audio dataset, which contains age and kinship labels. The results demonstrate that the method markedly enhances the accuracy of kinship verification, while also offering novel insights for future kinship verification research.

Audio Enhancement for Computer Audition -- An Iterative Training Paradigm Using Sample Importance

Aug 12, 2024

Abstract: Neural network models for audio tasks, such as automatic speech recognition (ASR) and acoustic scene classification (ASC), are susceptible to noise contamination in real-life applications. To improve audio quality, an enhancement module, which can be developed independently, is explicitly used at the front-end of the target audio applications. In this paper, we present an end-to-end learning solution to jointly optimise the models for audio enhancement (AE) and the subsequent applications. To guide the optimisation of the AE module towards a target application, and especially to overcome difficult samples, we make use of the sample-wise performance measure as an indication of sample importance. In experiments, we consider four representative applications to evaluate our training paradigm, i.e., ASR, speech command recognition (SCR), speech emotion recognition (SER), and ASC. These applications are associated with speech and non-speech tasks concerning semantic and non-semantic features, transient and global information. The experimental results indicate that our proposed approach can considerably boost the noise robustness of the models, especially at low signal-to-noise ratios (SNRs), for a wide range of computer audition tasks in everyday-life noisy environments.

Are you sure? Analysing Uncertainty Quantification Approaches for Real-world Speech Emotion Recognition

Jul 01, 2024

Abstract: Uncertainty Quantification (UQ) is an important building block for the reliable use of neural networks in real-world scenarios, as it can be a useful tool in identifying faulty predictions. Speech emotion recognition (SER) models can suffer from particularly many sources of uncertainty, such as the ambiguity of emotions, Out-of-Distribution (OOD) data or, in general, poor recording conditions. Reliable UQ methods are thus of particular interest, as in many SER applications no prediction is better than a faulty prediction. While the effects of label ambiguity on uncertainty are well documented in the literature, we focus our work on an evaluation of UQ methods for SER under common challenges in real-world applications, such as corrupted signals and the absence of speech. We show that simple UQ methods can already give an indication of the uncertainty of a prediction and that training with additional OOD data can greatly improve the identification of such signals.

An automatic analysis of ultrasound vocalisations for the prediction of interaction context in captive Egyptian fruit bats

Jun 10, 2024

Abstract: Prior work in computational bioacoustics has mostly focused on the detection of animal presence in a particular habitat. However, animal sounds contain much richer information than mere presence; among others, they encapsulate the interactions of those animals with other members of their species. Studying these interactions is almost impossible in a naturalistic setting, as the ground truth is often lacking. The use of animals in captivity instead offers a viable alternative pathway. However, most prior works follow a traditional, statistics-based approach to analysing interactions. In the present work, we go beyond this standard framework by attempting to predict the underlying context in interactions between captive *Rousettus aegyptiacus* using deep neural networks. We reach an unweighted average recall of over 30% -- more than thrice the chance level -- and show error patterns that differ from our statistical analysis. This work thus represents an important step towards the automatic analysis of states in animals from sound.
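As a point of reference for the metric quoted in this abstract, unweighted average recall (UAR) is the mean of the per-class recalls, so a uniform random guesser scores 1 / (number of classes) regardless of class imbalance. The sketch below illustrates this; the class labels and predictions are invented and do not correspond to the paper's interaction contexts or results.

```python
def unweighted_average_recall(y_true, y_pred):
    """Mean of per-class recalls; every class counts equally."""
    classes = sorted(set(y_true))
    recalls = []
    for c in classes:
        indices = [i for i, t in enumerate(y_true) if t == c]
        correct = sum(1 for i in indices if y_pred[i] == c)
        recalls.append(correct / len(indices))
    return sum(recalls) / len(classes)


# Invented labels for three hypothetical classes with unequal sizes.
y_true = ["a", "a", "a", "a", "b", "b", "c", "c"]
y_pred = ["a", "a", "a", "b", "b", "c", "c", "c"]

uar = unweighted_average_recall(y_true, y_pred)
chance_level = 1 / len(set(y_true))
print(f"UAR={uar:.2f}, chance={chance_level:.2f}")
```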
