Lu Xiong

A Communication-Latency-Aware Co-Simulation Platform for Safety and Comfort Evaluation of Cloud-Controlled ICVs

Jun 09, 2025

Abstract:Testing cloud-controlled intelligent connected vehicles (ICVs) requires simulation environments that faithfully emulate both vehicle behavior and realistic communication latencies. This paper proposes a latency-aware co-simulation platform integrating CarMaker and Vissim to evaluate safety and comfort under real-world vehicle-to-cloud (V2C) latency conditions. Two communication latency models, derived from empirical 5G measurements in China and Hungary, are incorporated and statistically modeled using Gamma distributions. A proactive conflict module (PCM) is proposed to dynamically control background vehicles and generate safety-critical scenarios. The platform is validated through experiments involving an exemplary system under test (SUT) across six testing conditions combining two PCM modes (enabled/disabled) and three latency conditions (none, China, Hungary). Safety and comfort are assessed using metrics including collision rate, distance headway, post-encroachment time, and the spectral characteristics of longitudinal acceleration. Results show that the PCM effectively increases driving environment criticality, while V2C latency primarily affects ride comfort. These findings confirm the platform's effectiveness in systematically evaluating cloud-controlled ICVs under diverse testing conditions.
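As an illustration of the latency modeling mentioned in the abstract above, the following is a minimal sketch of how Gamma-distributed V2C latency could be sampled and applied to a stream of cloud commands. It is not the authors' implementation: the function names, the shape and scale values, and the rule of applying the most recently issued command that has already arrived are all assumptions made for this example, and the parameters are not the fitted values from the China or Hungary measurements.

```python
import random


def sample_latencies(n, shape, scale, seed=0):
    """Draw n latency samples (ms) from a Gamma(shape, scale) distribution.

    shape and scale are hypothetical placeholders, not the paper's fitted values.
    """
    rng = random.Random(seed)
    return [rng.gammavariate(shape, scale) for _ in range(n)]


def apply_latency(commands, step_ms, latencies_ms, initial=0.0):
    """Return the command in effect at each simulation step.

    commands[i] is issued at time i * step_ms and arrives at
    i * step_ms + latencies_ms[i]. At each step the vehicle uses the most
    recently issued command among those that have arrived (an assumed rule),
    or `initial` if nothing has arrived yet.
    """
    applied = []
    for k in range(len(commands)):
        now = k * step_ms
        current = initial
        for i in range(k + 1):
            if i * step_ms + latencies_ms[i] <= now:
                current = commands[i]  # later-issued arrivals overwrite earlier ones
        applied.append(current)
    return applied


if __name__ == "__main__":
    # Hypothetical acceleration commands issued every 10 ms.
    cmds = [0.5, 0.6, 0.4, 0.2, 0.0, -0.3]
    lat = sample_latencies(len(cmds), shape=2.0, scale=10.0, seed=42)
    print(apply_latency(cmds, step_ms=10, latencies_ms=lat))
```

With a Gamma distribution the mean latency is `shape * scale`, so the placeholder values above correspond to a 20 ms average; swapping in region-specific parameters would be the natural way to compare latency conditions.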

Uncertainty-Aware Safety-Critical Decision and Control for Autonomous Vehicles at Unsignalized Intersections

May 26, 2025

Abstract:Reinforcement learning (RL) has demonstrated potential in autonomous driving (AD) decision tasks. However, applying RL to urban AD, particularly in intersection scenarios, still faces significant challenges. The lack of safety constraints makes RL vulnerable to risks. Additionally, cognitive limitations and environmental randomness can lead to unreliable decisions in safety-critical scenarios. Therefore, it is essential to quantify confidence in RL decisions to improve safety. This paper proposes an Uncertainty-aware Safety-Critical Decision and Control (USDC) framework, which generates a risk-averse policy by constructing a risk-aware ensemble distributional RL, while estimating uncertainty to quantify the policy's reliability. Subsequently, a high-order control barrier function (HOCBF) is employed as a safety filter to minimize intervention policy while dynamically enhancing constraints based on uncertainty. The ensemble critics evaluate both HOCBF and RL policies, embedding uncertainty to achieve dynamic switching between safe and flexible strategies, thereby balancing safety and efficiency. Simulation tests on unsignalized intersections in multiple tasks indicate that USDC can improve safety while maintaining traffic efficiency compared to baselines.

A Survey of Reinforcement Learning-Based Motion Planning for Autonomous Driving: Lessons Learned from a Driving Task Perspective

Mar 31, 2025

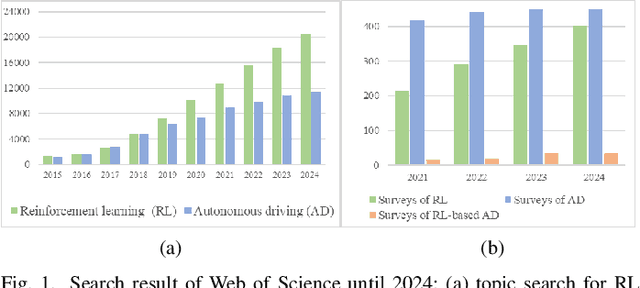

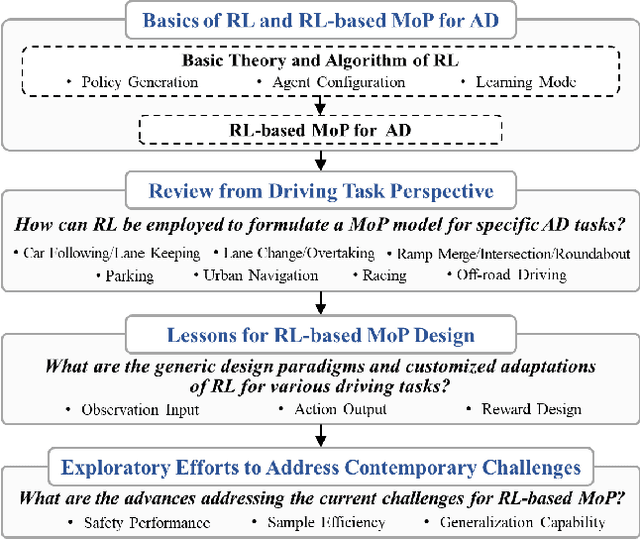

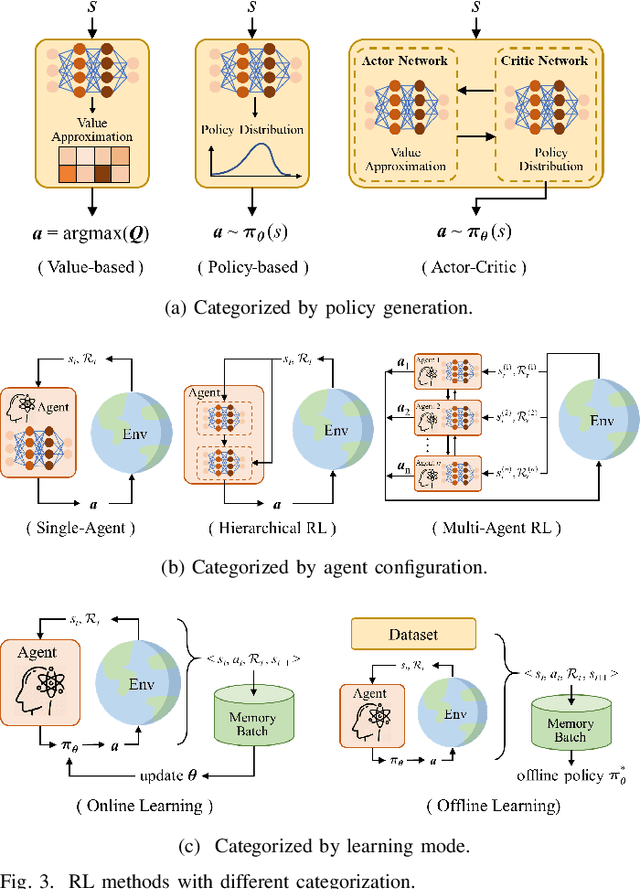

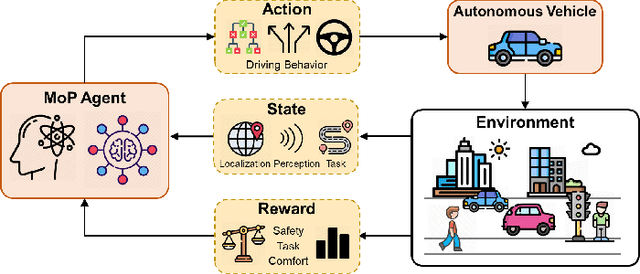

Abstract:Reinforcement learning (RL), with its ability to explore and optimize policies in complex, dynamic decision-making tasks, has emerged as a promising approach to addressing motion planning (MoP) challenges in autonomous driving (AD). Despite rapid advancements in RL and AD, a systematic description and interpretation of the RL design process tailored to diverse driving tasks remains underdeveloped. This survey provides a comprehensive review of RL-based MoP for AD, focusing on lessons from task-specific perspectives. We first outline the fundamentals of RL methodologies, and then survey their applications in MoP, analyzing scenario-specific features and task requirements to shed light on their influence on RL design choices. Building on this analysis, we summarize key design experiences, extract insights from various driving task applications, and provide guidance for future implementations. Additionally, we examine the frontier challenges in RL-based MoP, review recent efforts to address these challenges, and propose strategies for overcoming unresolved issues.

Risk-Aware Reinforcement Learning for Autonomous Driving: Improving Safety When Driving through Intersection

Mar 27, 2025

Abstract:Applying reinforcement learning to autonomous driving has garnered widespread attention. However, classical reinforcement learning methods optimize policies by maximizing expected rewards but lack sufficient safety considerations, often putting agents in hazardous situations. This paper proposes a risk-aware reinforcement learning approach for autonomous driving to improve the safety performance when crossing the intersection. Safe critics are constructed to evaluate driving risk and work in conjunction with the reward critic to update the actor. Based on this, a Lagrangian relaxation method and cyclic gradient iteration are combined to project actions into a feasible safe region. Furthermore, a Multi-hop and Multi-layer perception (MLP) mixed Attention Mechanism (MMAM) is incorporated into the actor-critic network, enabling the policy to adapt to dynamic traffic and overcome permutation sensitivity challenges. This allows the policy to focus more effectively on surrounding potential risks while enhancing the identification of passing opportunities. Simulation tests are conducted on different tasks at unsignalized intersections. The results show that the proposed approach effectively reduces collision rates and improves crossing efficiency in comparison to baseline algorithms. Additionally, our ablation experiments demonstrate the benefits of incorporating risk-awareness and MMAM into RL.

R2LDM: An Efficient 4D Radar Super-Resolution Framework Leveraging Diffusion Model

Mar 21, 2025

Abstract:We introduce R2LDM, an innovative approach for generating dense and accurate 4D radar point clouds, guided by corresponding LiDAR point clouds. Instead of utilizing range images or bird's eye view (BEV) images, we represent both LiDAR and 4D radar point clouds using voxel features, which more effectively capture 3D shape information. Subsequently, we propose the Latent Voxel Diffusion Model (LVDM), which performs the diffusion process in the latent space. Additionally, a novel Latent Point Cloud Reconstruction (LPCR) module is utilized to reconstruct point clouds from high-dimensional latent voxel features. As a result, R2LDM effectively generates LiDAR-like point clouds from paired raw radar data. We evaluate our approach on two different datasets, and the experimental results demonstrate that our model achieves 6- to 10-fold densification of radar point clouds, outperforming state-of-the-art baselines in 4D radar point cloud super-resolution. Furthermore, the enhanced radar point clouds generated by our method significantly improve downstream tasks, achieving up to 31.7% improvement in point cloud registration recall rate and 24.9% improvement in object detection accuracy.

Real-world Troublemaker: A 5G Cloud-controlled Track Testing Framework for Automated Driving Systems in Safety-critical Interaction Scenarios

Feb 23, 2025

Abstract:Track testing plays a critical role in the safety evaluation of autonomous driving systems (ADS), as it provides a real-world interaction environment. However, the inflexibility in motion control of object targets and the absence of intelligent interactive testing methods often result in pre-fixed and limited testing scenarios. To address these limitations, we propose a novel 5G cloud-controlled track testing framework, Real-world Troublemaker. This framework overcomes the rigidity of traditional pre-programmed control by leveraging 5G cloud-controlled object targets integrated with the Internet of Things (IoT) and vehicle teleoperation technologies. Unlike conventional testing methods that rely on pre-set conditions, we propose a dynamic game strategy based on a quadratic risk interaction utility function, facilitating intelligent interactions with the vehicle under test (VUT) and creating a more realistic and dynamic interaction environment. The proposed framework has been successfully implemented at the Tongji University Intelligent Connected Vehicle Evaluation Base. Field test results demonstrate that Troublemaker can perform dynamic interactive testing of ADS accurately and effectively. Compared to traditional methods, Troublemaker improves scenario reproduction accuracy by 65.2%, increases the diversity of interaction strategies by approximately 9.2 times, and enhances exposure frequency of safety-critical scenarios by 3.5 times in unprotected left-turn scenarios.

Hybrid Action Based Reinforcement Learning for Multi-Objective Compatible Autonomous Driving

Jan 14, 2025

Abstract:Reinforcement Learning (RL) has shown excellent performance in solving decision-making and control problems of autonomous driving, and is increasingly applied in diverse driving scenarios. However, driving is a multi-attribute problem, leading to challenges in achieving multi-objective compatibility for current RL methods, especially in both policy execution and policy iteration. On the one hand, the common action space structure with a single action type limits driving flexibility or results in large behavior fluctuations during policy execution. On the other hand, the multi-attribute weighted single reward function results in the agent's disproportionate attention to certain objectives during policy iterations. To this end, we propose a Multi-objective Ensemble-Critic reinforcement learning method with Hybrid Parametrized Action for multi-objective compatible autonomous driving. Specifically, a parameterized action space is constructed to generate hybrid driving actions, combining both abstract guidance and concrete control commands. A multi-objective critics architecture is constructed considering multiple attribute rewards, to ensure simultaneous focus on different driving objectives. Additionally, an uncertainty-based exploration strategy is introduced to help the agent approach a viable driving policy faster. The experimental results in both the simulated traffic environment and the HighD dataset demonstrate that our method can achieve multi-objective compatible autonomous driving in terms of driving efficiency, action consistency, and safety. It enhances overall driving performance while significantly increasing training efficiency.

InterHub: A Naturalistic Trajectory Dataset with Dense Interaction for Autonomous Driving

Nov 30, 2024

Abstract:Driving interaction, a critical yet complex aspect of daily driving, lies at the core of autonomous driving research. However, real-world driving scenarios sparsely capture rich interaction events, limiting the availability of comprehensive trajectory datasets for this purpose. To address this challenge, we present InterHub, a dense interaction dataset derived by mining interaction events from extensive naturalistic driving records. We employ formal methods to describe and extract multi-agent interaction events, exposing the limitations of existing autonomous driving solutions. Additionally, we introduce a user-friendly toolkit enabling the expansion of InterHub with both public and private data. By unifying, categorizing, and analyzing diverse interaction events, InterHub facilitates cross-comparative studies and large-scale research, thereby advancing the evaluation and development of autonomous driving technologies.

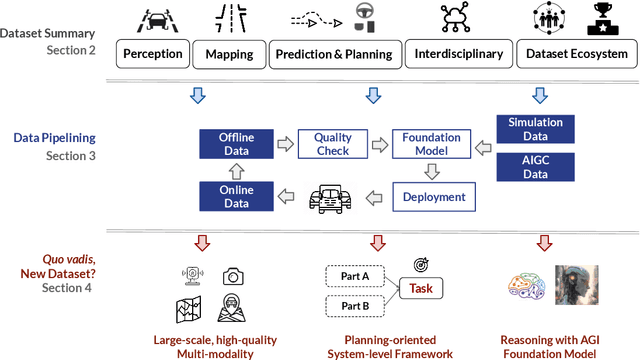

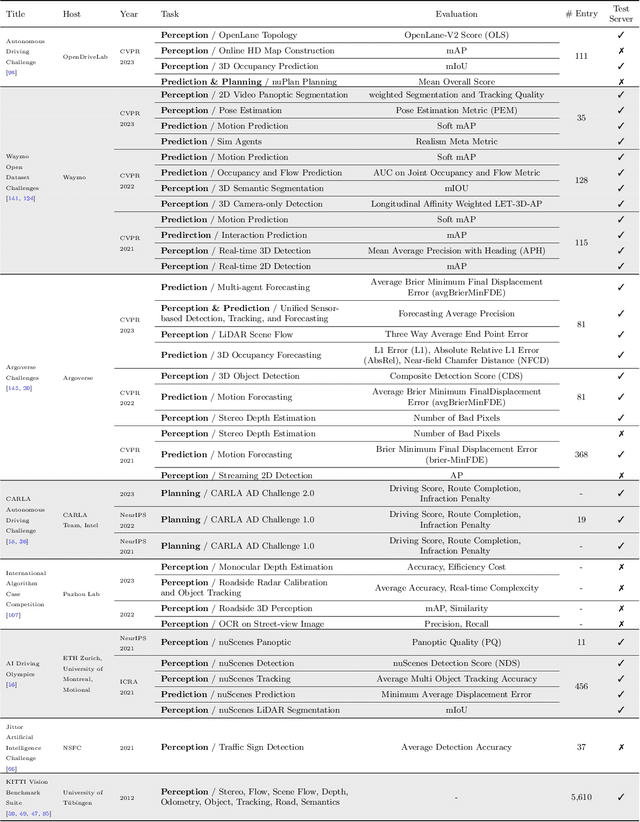

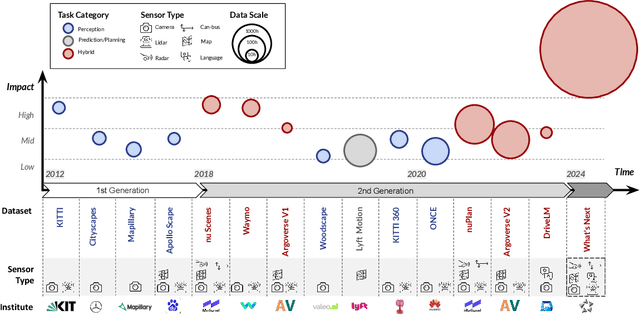

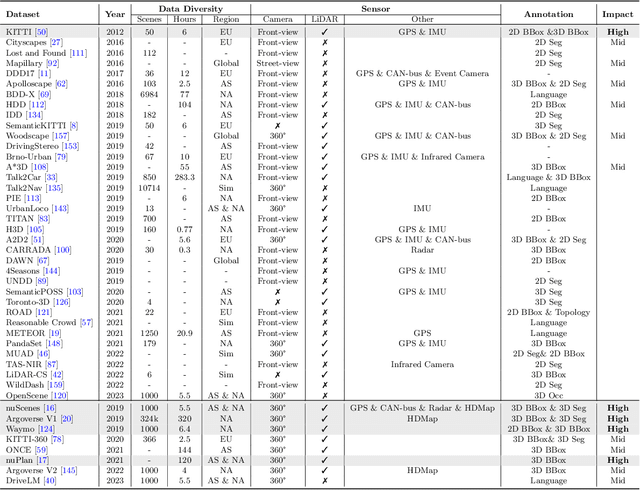

Open-sourced Data Ecosystem in Autonomous Driving: the Present and Future

Dec 06, 2023

Abstract:With the continuous maturation and application of autonomous driving technology, a systematic examination of open-source autonomous driving datasets becomes instrumental in fostering the robust evolution of the industry ecosystem. Current autonomous driving datasets can broadly be categorized into two generations. The first-generation autonomous driving datasets are characterized by relatively simple sensor modalities and smaller data scale, and are limited to perception-level tasks. KITTI, introduced in 2012, serves as a prominent representative of this initial wave. In contrast, the second-generation datasets exhibit heightened complexity in sensor modalities, greater data scale and diversity, and an expansion of tasks from perception to encompass prediction and control. Leading examples of the second generation include nuScenes and Waymo, introduced around 2019. This comprehensive review, conducted in collaboration with esteemed colleagues from both academia and industry, systematically assesses over seventy open-source autonomous driving datasets from domestic and international sources. It offers insights into various aspects, such as the principles underlying the creation of high-quality datasets, the pivotal role of data engine systems, and the utilization of generative foundation models to facilitate scalable data generation. Furthermore, this review undertakes an exhaustive analysis and discourse regarding the characteristics and data scales that future third-generation autonomous driving datasets should possess. It also delves into the scientific and technical challenges that warrant resolution. These endeavors are pivotal in advancing autonomous innovation and fostering technological enhancement in critical domains. For further details, please refer to https://github.com/OpenDriveLab/DriveAGI.

4DRVO-Net: Deep 4D Radar-Visual Odometry Using Multi-Modal and Multi-Scale Adaptive Fusion

Aug 12, 2023

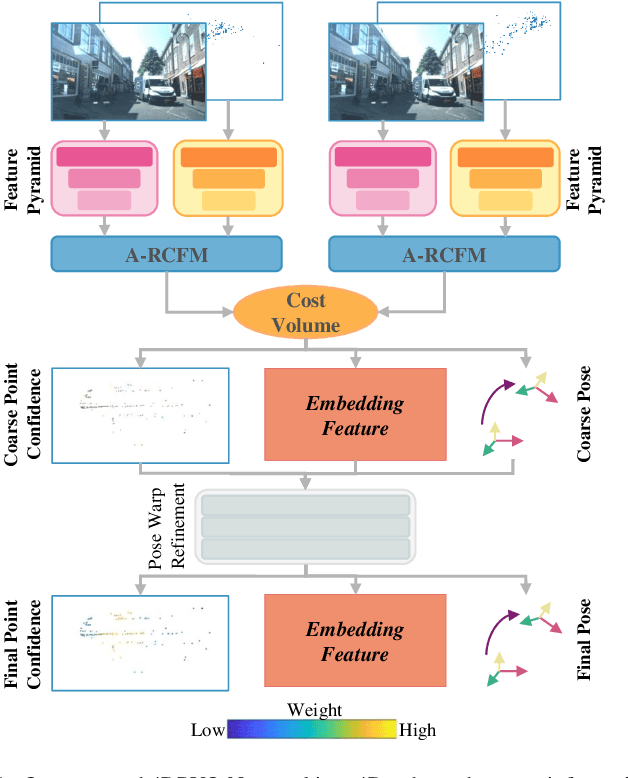

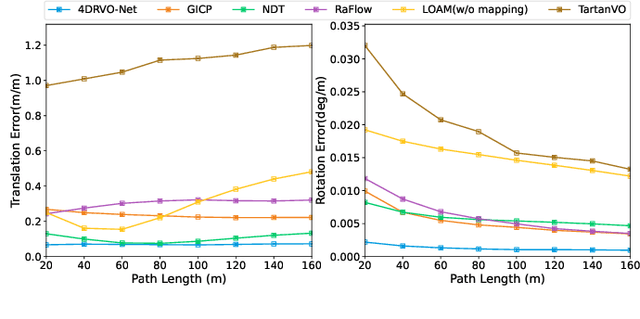

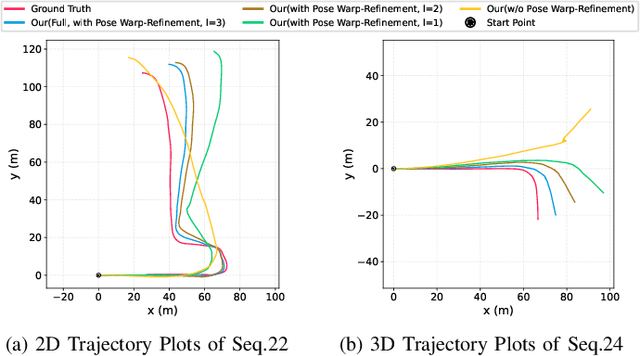

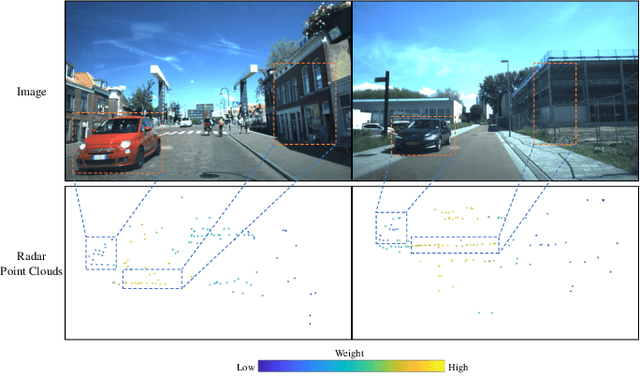

Abstract:Four-dimensional (4D) radar-visual odometry (4DRVO) integrates complementary information from 4D radar and cameras, making it an attractive solution for achieving accurate and robust pose estimation. However, 4DRVO may exhibit significant tracking errors owing to three main factors: 1) sparsity of 4D radar point clouds; 2) inaccurate data association and insufficient feature interaction between the 4D radar and camera; and 3) disturbances caused by dynamic objects in the environment, affecting odometry estimation. In this paper, we present 4DRVO-Net, which is a method for 4D radar-visual odometry. This method leverages the feature pyramid, pose warping, and cost volume (PWC) network architecture to progressively estimate and refine poses. Specifically, we propose a multi-scale feature extraction network called Radar-PointNet++ that fully considers rich 4D radar point information, enabling fine-grained learning for sparse 4D radar point clouds. To effectively integrate the two modalities, we design an adaptive 4D radar-camera fusion module (A-RCFM) that automatically selects image features based on 4D radar point features, facilitating multi-scale cross-modal feature interaction and adaptive multi-modal feature fusion. In addition, we introduce a velocity-guided point-confidence estimation module to measure local motion patterns, reduce the influence of dynamic objects and outliers, and provide continuous updates during pose refinement. We demonstrate the excellent performance of our method and the effectiveness of each module design on both the VoD and in-house datasets. Our method outperforms all learning-based and geometry-based methods for most sequences in the VoD dataset. Furthermore, it has exhibited promising performance that closely approaches that of the 64-line LiDAR odometry results of A-LOAM without mapping optimization.
