Yining Ma

A General Neural Backbone for Mixed-Integer Linear Optimization via Dual Attention

Jan 08, 2026

Abstract:Mixed-integer linear programming (MILP), a widely used modeling framework for combinatorial optimization, is central to many scientific and engineering applications, yet remains computationally challenging at scale. Recent advances in deep learning address this challenge by representing MILP instances as variable-constraint bipartite graphs and applying graph neural networks (GNNs) to extract latent structural patterns and enhance solver efficiency. However, this architecture is inherently limited by its local-oriented mechanism, which restricts representation power and hinders neural approaches for MILP. Here we present an attention-driven neural architecture that learns expressive representations beyond the pure graph view. A dual-attention mechanism is designed to perform parallel self- and cross-attention over variables and constraints, enabling global information exchange and deeper representation learning. We apply this general backbone to various downstream tasks at the instance level, element level, and solving state level. Extensive experiments across widely used benchmarks show consistent improvements of our approach over state-of-the-art baselines, highlighting attention-based neural architectures as a powerful foundation for learning-enhanced mixed-integer linear optimization.
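
The variable-constraint bipartite graph mentioned in the abstract can be illustrated with a minimal sketch. It assumes the MILP constraints are given as a dense coefficient matrix `A` (rows are constraints, columns are variables) and links a constraint to a variable whenever the coefficient is nonzero. The function name `milp_to_bipartite` and the edge format are hypothetical choices for illustration, not taken from the paper.

```python
from typing import List, Tuple


def milp_to_bipartite(A: List[List[float]]) -> List[Tuple[int, int, float]]:
    """Build bipartite edges from a constraint matrix (illustrative only).

    Each edge is (constraint_index, variable_index, coefficient) and exists
    only where the coefficient is nonzero.
    """
    edges = []
    for i, row in enumerate(A):
        for j, coef in enumerate(row):
            if coef != 0:
                edges.append((i, j, coef))
    return edges


# Two constraints over three variables (made-up coefficients).
A = [
    [1.0, 2.0, 0.0],
    [0.0, 1.0, 3.0],
]
edges = milp_to_bipartite(A)
```

In such a graph, variable 0 and variable 2 share no constraint, so a message-passing layer can only relate them after several hops; this is the locality that the abstract contrasts with global attention.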

SHIELD: Multi-task Multi-distribution Vehicle Routing Solver with Sparsity and Hierarchy

Jun 11, 2025

Abstract:Recent advances toward foundation models for routing problems have shown great potential of a unified deep model for various VRP variants. However, they overlook the complex real-world customer distributions. In this work, we advance the Multi-Task VRP (MTVRP) setting to the more realistic yet challenging Multi-Task Multi-Distribution VRP (MTMDVRP) setting, and introduce SHIELD, a novel model that leverages both sparsity and hierarchy principles. Building on a deeper decoder architecture, we first incorporate the Mixture-of-Depths (MoD) technique to enforce sparsity. This improves both efficiency and generalization by allowing the model to dynamically select nodes to use or skip each decoder layer, providing the needed capacity to adaptively allocate computation for learning the task/distribution specific and shared representations. We also develop a context-based clustering layer that exploits the presence of hierarchical structures in the problems to produce better local representations. These two designs inductively bias the network to identify key features that are common across tasks and distributions, leading to significantly improved generalization on unseen ones. Our empirical results demonstrate the superiority of our approach over existing methods on 9 real-world maps with 16 VRP variants each.

MetaBox-v2: A Unified Benchmark Platform for Meta-Black-Box Optimization

May 23, 2025

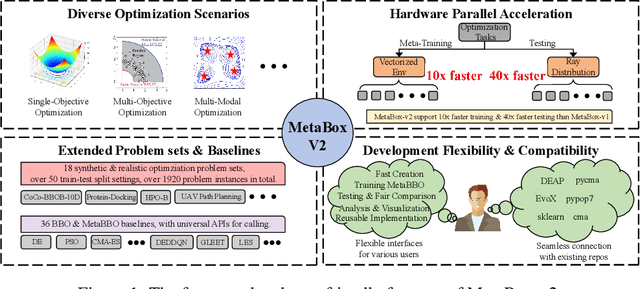

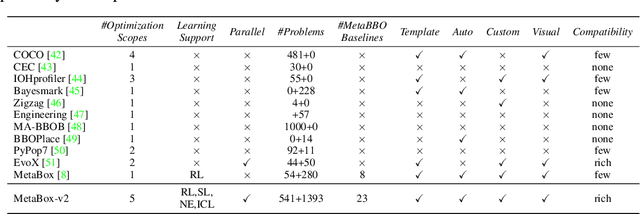

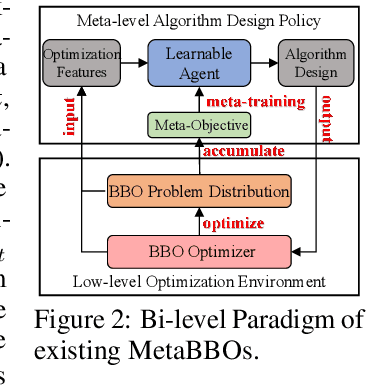

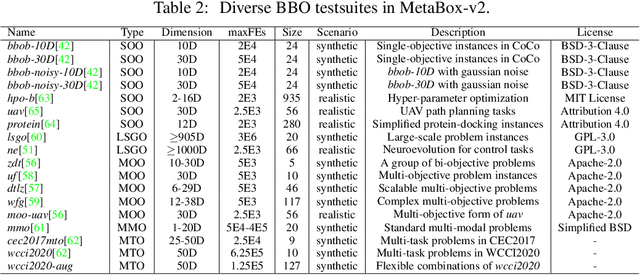

Abstract:Meta-Black-Box Optimization (MetaBBO) streamlines the automation of optimization algorithm design through meta-learning. It typically employs a bi-level structure: the meta-level policy undergoes meta-training to reduce the manual effort required in developing algorithms for low-level optimization tasks. The original MetaBox (2023) provided the first open-source framework for reinforcement learning-based single-objective MetaBBO. However, its relatively narrow scope no longer keeps pace with the swift advancement in this field. In this paper, we introduce MetaBox-v2 (https://github.com/MetaEvo/MetaBox) as a milestone upgrade with four novel features: 1) a unified architecture supporting RL, evolutionary, and gradient-based approaches, by which we reproduce 23 up-to-date baselines; 2) efficient parallelization schemes, which reduce the training/testing time by 10-40x; 3) a comprehensive benchmark suite of 18 synthetic/realistic tasks (1900+ instances) spanning single-objective, multi-objective, multi-model, and multi-task optimization scenarios; 4) plentiful and extensible interfaces for custom analysis/visualization and for integration with external optimization tools/benchmarks. To show the utility of MetaBox-v2, we carry out a systematic case study that evaluates the built-in baselines in terms of optimization performance, generalization ability, and learning efficiency. Valuable insights are drawn from thorough and detailed analysis for practitioners and those new to the field.

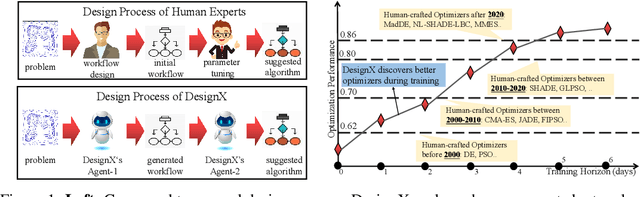

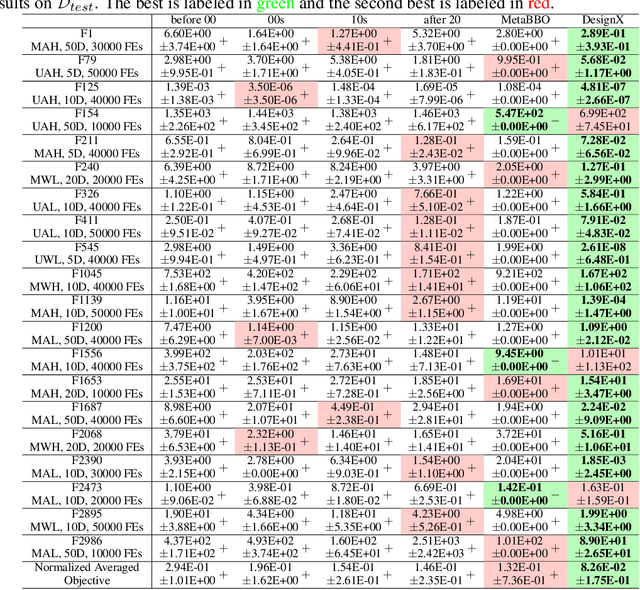

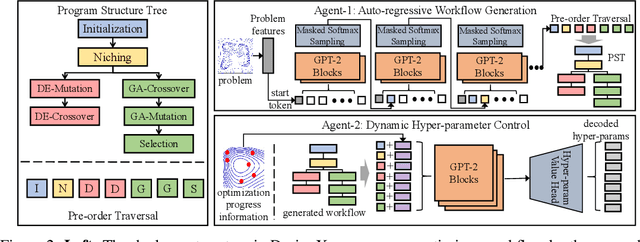

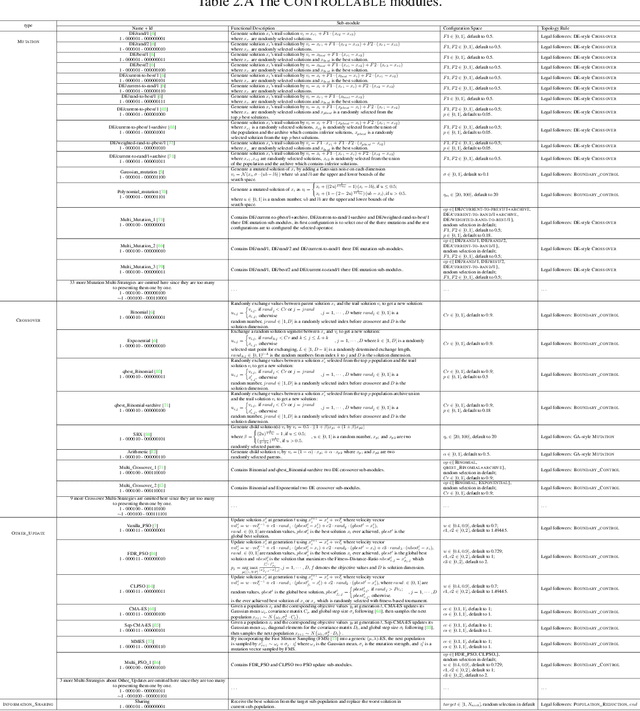

DesignX: Human-Competitive Algorithm Designer for Black-Box Optimization

May 23, 2025

Abstract:Designing effective black-box optimizers is hampered by limited problem-specific knowledge and manual control that spans months for almost every detail. In this paper, we present DesignX, the first automated algorithm design framework that generates an effective optimizer specific to a given black-box optimization problem within seconds. Rooted in first principles, we identify two key sub-tasks: 1) algorithm structure generation and 2) hyperparameter control. To enable systematic construction, a comprehensive modular algorithmic space is first built, embracing hundreds of algorithm components collected from decades of research. We then introduce a dual-agent reinforcement learning system that collaborates on structural and parametric design through a novel cooperative training objective, enabling large-scale meta-training across 10k diverse instances. Remarkably, through days of autonomous learning, the DesignX-generated optimizers continuously surpass human-crafted optimizers by orders of magnitude, both on a synthetic testbed and on realistic optimization scenarios such as Protein-docking, AutoML and UAV path planning. Further in-depth analysis reveals DesignX's capability to discover non-trivial algorithm patterns beyond expert intuition, which, in turn, provides valuable design insights for the optimization community. We provide DesignX's inference code at https://github.com/MetaEvo/DesignX.

Real-world Troublemaker: A 5G Cloud-controlled Track Testing Framework for Automated Driving Systems in Safety-critical Interaction Scenarios

Feb 23, 2025

Abstract:Track testing plays a critical role in the safety evaluation of autonomous driving systems (ADS), as it provides a real-world interaction environment. However, the inflexibility in motion control of object targets and the absence of intelligent interactive testing methods often result in pre-fixed and limited testing scenarios. To address these limitations, we propose a novel 5G cloud-controlled track testing framework, Real-world Troublemaker. This framework overcomes the rigidity of traditional pre-programmed control by leveraging 5G cloud-controlled object targets integrated with the Internet of Things (IoT) and vehicle teleoperation technologies. Unlike conventional testing methods that rely on pre-set conditions, we propose a dynamic game strategy based on a quadratic risk interaction utility function, facilitating intelligent interactions with the vehicle under test (VUT) and creating a more realistic and dynamic interaction environment. The proposed framework has been successfully implemented at the Tongji University Intelligent Connected Vehicle Evaluation Base. Field test results demonstrate that Troublemaker can perform dynamic interactive testing of ADS accurately and effectively. Compared to traditional methods, Troublemaker improves scenario reproduction accuracy by 65.2%, increases the diversity of interaction strategies by approximately 9.2 times, and enhances exposure frequency of safety-critical scenarios by 3.5 times in unprotected left-turn scenarios.

Learning-Guided Rolling Horizon Optimization for Long-Horizon Flexible Job-Shop Scheduling

Feb 18, 2025

Abstract:Long-horizon combinatorial optimization problems (COPs), such as the Flexible Job-Shop Scheduling Problem (FJSP), often involve complex, interdependent decisions over extended time frames, posing significant challenges for existing solvers. While Rolling Horizon Optimization (RHO) addresses this by decomposing problems into overlapping shorter-horizon subproblems, such overlap often involves redundant computations. In this paper, we present L-RHO, the first learning-guided RHO framework for COPs. L-RHO employs a neural network to intelligently fix variables that in hindsight did not need to be re-optimized, resulting in smaller and thus easier-to-solve subproblems. For FJSP, this means identifying operations with unchanged machine assignments between consecutive subproblems. Applied to FJSP, L-RHO accelerates RHO by up to 54% while significantly improving solution quality, outperforming other heuristic and learning-based baselines. We also provide in-depth discussions and verify the desirable adaptability and generalization of L-RHO across numerous FJSP variants, distributions, online scenarios and benchmark instances. Moreover, we provide a theoretical analysis to elucidate the conditions under which learning is beneficial.
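
The overlapping decomposition that RHO relies on can be sketched as follows. This is a minimal illustration, assuming operations are indexed `0..n_ops-1` in some fixed order and that windows are defined by operation count; the names `rolling_windows`, `overlap`, `window`, and `step` are hypothetical and the paper's actual windowing rule may differ.

```python
from typing import List, Tuple


def rolling_windows(n_ops: int, window: int, step: int) -> List[Tuple[int, int]]:
    """Split operations 0..n_ops-1 into half-open windows [start, end).

    Consecutive windows overlap whenever step < window.
    """
    if window <= 0 or step <= 0:
        raise ValueError("window and step must be positive")
    windows = []
    start = 0
    while start < n_ops:
        end = min(start + window, n_ops)
        windows.append((start, end))
        if end == n_ops:
            break  # the last window already reaches the final operation
        start += step
    return windows


def overlap(prev: Tuple[int, int], cur: Tuple[int, int]) -> List[int]:
    """Operations that appear in both of two consecutive windows."""
    return list(range(cur[0], prev[1]))


windows = rolling_windows(n_ops=10, window=4, step=2)
shared = overlap(windows[0], windows[1])
```

Plain RHO would re-optimize every operation in `shared` when solving the second window. Under the description in the abstract, a learned model would instead select a subset of those operations to keep fixed, so the second subproblem has fewer free variables.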

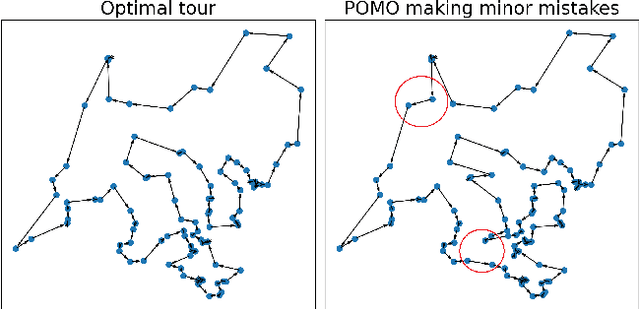

Diversity Optimization for Travelling Salesman Problem via Deep Reinforcement Learning

Jan 01, 2025

Abstract:Existing neural methods for the Travelling Salesman Problem (TSP) mostly aim at finding a single optimal solution. To discover diverse yet high-quality solutions for Multi-Solution TSP (MSTSP), we propose a novel deep reinforcement learning based neural solver, which is primarily featured by an encoder-decoder structured policy. Concretely, on the one hand, a Relativization Filter (RF) is designed to enhance the robustness of the encoder to affine transformations of the instances, so as to potentially improve the quality of the found solutions. On the other hand, a Multi-Attentive Adaptive Active Search (MA3S) is tailored to allow the decoders to strike a balance between the optimality and diversity. Experimental evaluations on benchmark instances demonstrate the superiority of our method over recent neural baselines across different metrics, and its competitive performance against state-of-the-art traditional heuristics with significantly reduced computational time, ranging from $1.3\times$ to $15\times$ faster. Furthermore, we demonstrate that our method can also be applied to the Capacitated Vehicle Routing Problem (CVRP).

ConfigX: Modular Configuration for Evolutionary Algorithms via Multitask Reinforcement Learning

Dec 10, 2024

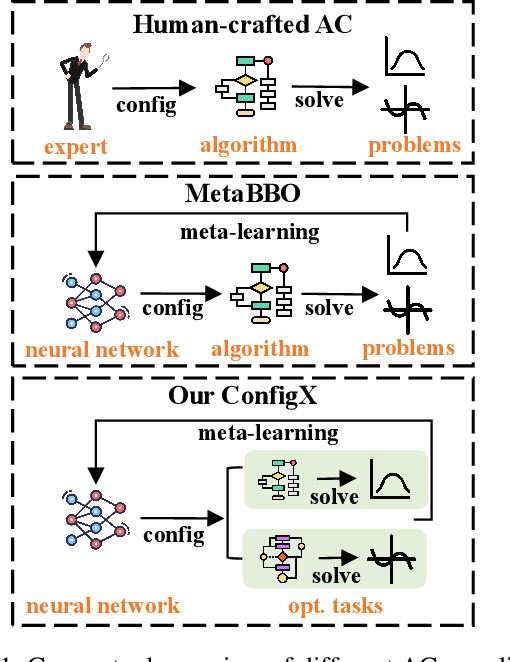

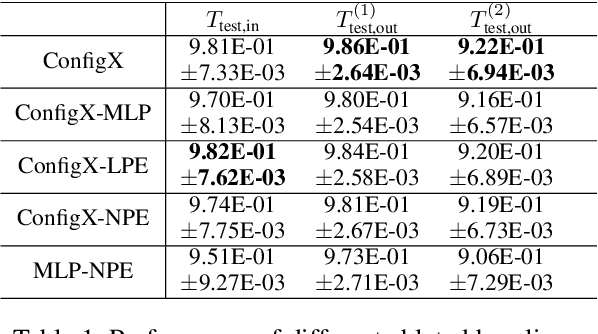

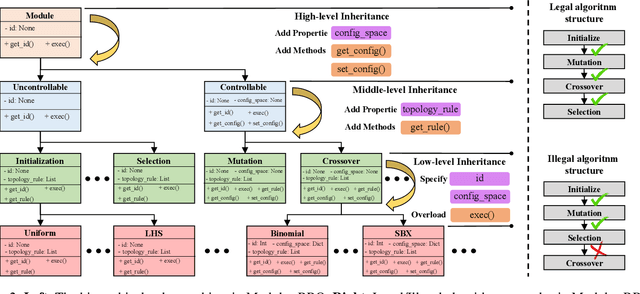

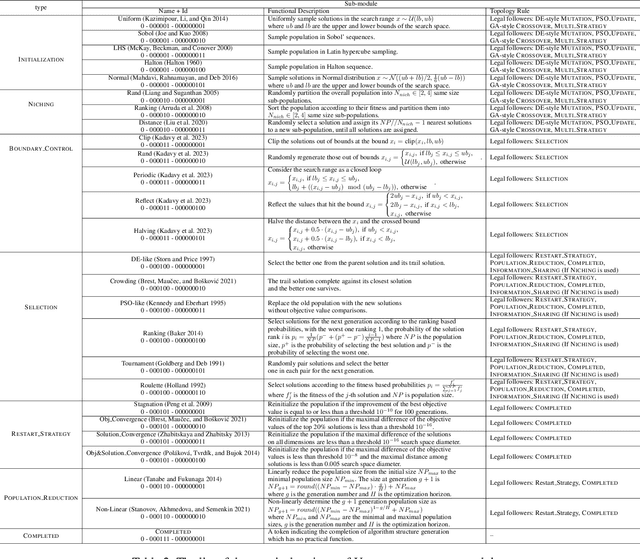

Abstract:Recent advances in Meta-learning for Black-Box Optimization (MetaBBO) have shown the potential of using neural networks to dynamically configure evolutionary algorithms (EAs), enhancing their performance and adaptability across various BBO instances. However, they are often tailored to a specific EA, which limits their generalizability and necessitates retraining or redesigns for different EAs and optimization problems. To address this limitation, we introduce ConfigX, a new paradigm of the MetaBBO framework that is capable of learning a universal configuration agent (model) for boosting diverse EAs. To achieve this, ConfigX first leverages a novel modularization system that enables the flexible combination of various optimization sub-modules to generate diverse EAs during training. Additionally, we propose a Transformer-based neural network to meta-learn a universal configuration policy through multitask reinforcement learning across a designed joint optimization task space. Extensive experiments verify that ConfigX, after large-scale pre-training, achieves robust zero-shot generalization to unseen tasks and outperforms state-of-the-art baselines. Moreover, ConfigX exhibits strong lifelong learning capabilities, allowing efficient adaptation to new tasks through fine-tuning. Our proposed ConfigX represents a significant step toward an automatic, all-purpose configuration agent for EAs.

Learning to Handle Complex Constraints for Vehicle Routing Problems

Oct 28, 2024

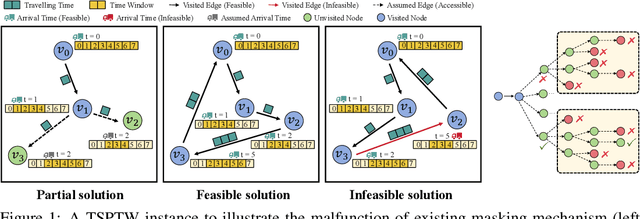

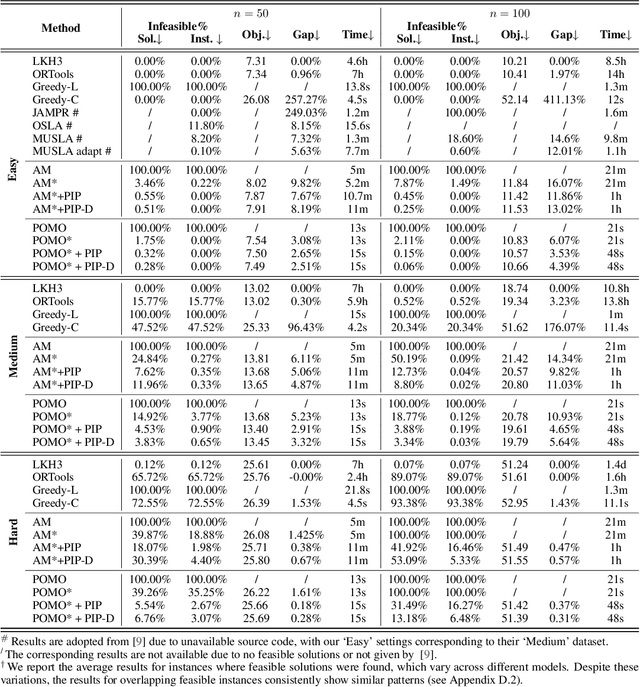

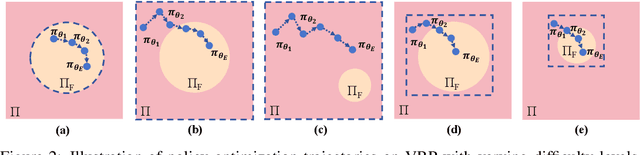

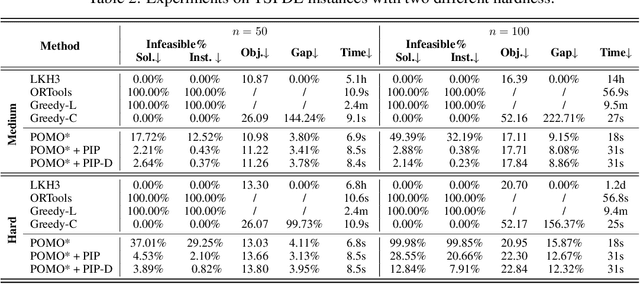

Abstract:Vehicle Routing Problems (VRPs) can model many real-world scenarios and often involve complex constraints. While recent neural methods excel in constructing solutions based on feasibility masking, they struggle with handling complex constraints, especially when obtaining the masking itself is NP-hard. In this paper, we propose a novel Proactive Infeasibility Prevention (PIP) framework to advance the capabilities of neural methods towards more complex VRPs. Our PIP integrates the Lagrangian multiplier as a basis to enhance constraint awareness and introduces preventative infeasibility masking to proactively steer the solution construction process. Moreover, we present PIP-D, which employs an auxiliary decoder and two adaptive strategies to learn and predict these tailored masks, potentially enhancing performance while significantly reducing computational costs during training. To verify our PIP designs, we conduct extensive experiments on the highly challenging Traveling Salesman Problem with Time Window (TSPTW) and TSP with Draft Limit (TSPDL) variants under different constraint hardness levels. Notably, our PIP is generic and can boost many neural methods, and it exhibits both a significant reduction in infeasible rate and a substantial improvement in solution quality.
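
For readers unfamiliar with feasibility masking, the following minimal sketch shows a one-step mask for TSPTW: a node is allowed as the next visit only if it is unvisited and can be reached before its time window closes. This is a simple local check for illustration, not the PIP or PIP-D method from the paper; the function name `feasible_mask` and the data layout (`travel` as a time matrix, `windows` as `(earliest, latest)` pairs) are assumptions.

```python
from typing import List, Set, Tuple


def feasible_mask(
    current_time: float,
    current_node: int,
    visited: Set[int],
    travel: List[List[float]],
    windows: List[Tuple[float, float]],
) -> List[bool]:
    """Return True for nodes that may be visited next under a one-step check."""
    mask = []
    for j, (_earliest, latest) in enumerate(windows):
        arrival = current_time + travel[current_node][j]
        # Allowed only if not yet visited and reachable before the window closes.
        mask.append(j not in visited and arrival <= latest)
    return mask


# Three nodes with made-up travel times and time windows.
travel = [
    [0.0, 2.0, 5.0],
    [2.0, 0.0, 2.0],
    [5.0, 2.0, 0.0],
]
windows = [(0.0, 100.0), (0.0, 3.0), (0.0, 4.0)]
mask = feasible_mask(0.0, 0, {0}, travel, windows)
```

Here node 2 is masked out because arriving at time 5 misses its window, yet going to node 1 first (arrival at 2) and then node 2 (arrival at 4) would be feasible. A one-step check cannot see such multi-step consequences, which is the kind of difficulty the abstract refers to when it says computing the mask itself can be hard.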

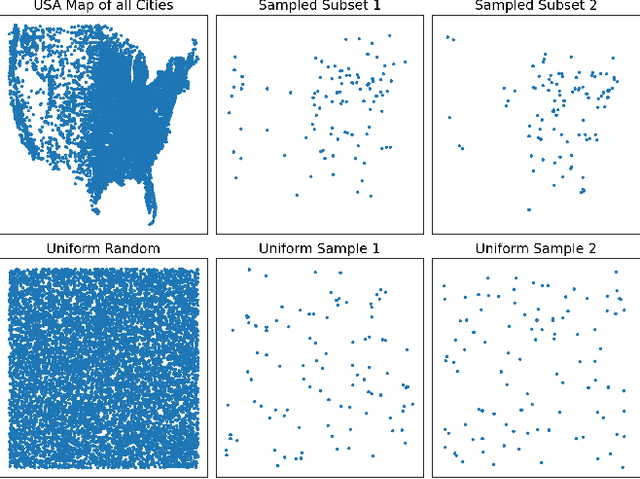

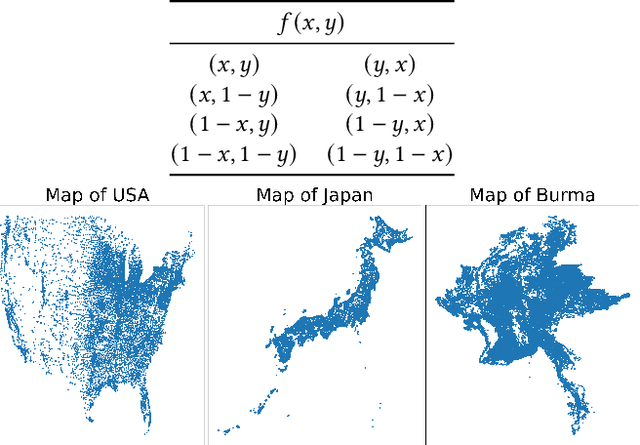

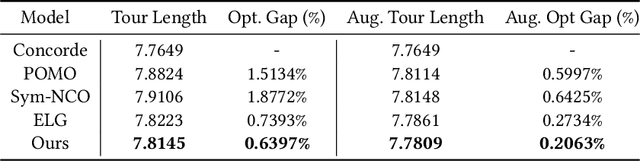

Hierarchical Neural Constructive Solver for Real-world TSP Scenarios

Aug 07, 2024

Abstract:Existing neural constructive solvers for routing problems have predominantly employed transformer architectures, conceptualizing the route construction as a set-to-sequence learning task. However, their efficacy has primarily been demonstrated on entirely random problem instances that inadequately capture real-world scenarios. In this paper, we introduce realistic Traveling Salesman Problem (TSP) scenarios relevant to industrial settings and derive the following insights: (1) The optimal next node (or city) to visit often lies within proximity to the current node, suggesting the potential benefits of biasing choices based on current locations. (2) Effectively solving the TSP requires robust tracking of unvisited nodes and warrants succinct grouping strategies. Building upon these insights, we propose integrating a learnable choice layer inspired by Hypernetworks to prioritize choices based on the current location, and a learnable approximate clustering algorithm inspired by the Expectation-Maximization algorithm to facilitate grouping the unvisited cities. Together, these two contributions form a hierarchical approach towards solving the realistic TSP by considering both immediate local neighbourhoods and learning an intermediate set of node representations. Our hierarchical approach yields superior performance compared to both classical and recent transformer models, showcasing the efficacy of the key designs.
