Li Niu

DynaDrag: Dynamic Drag-Style Image Editing by Motion Prediction

Jan 02, 2026

Abstract: To achieve pixel-level image manipulation, drag-style image editing, which edits images using points or trajectories as conditions, is attracting widespread attention. Most previous methods follow the move-and-track framework, in which missed tracking and ambiguous tracking are unavoidable challenges. Other methods under different frameworks suffer from various problems, such as a huge gap between the source image and the target edited image, as well as unreasonable intermediate points, which can lead to low editability. To avoid these problems, we propose DynaDrag, the first dragging method under the predict-and-move framework. In DynaDrag, Motion Prediction and Motion Supervision are performed iteratively. In each iteration, Motion Prediction first predicts where the handle points should move, and then Motion Supervision drags them accordingly. We also propose to dynamically adjust the valid handle points to further improve the performance. Experiments on face and human datasets showcase the superiority of DynaDrag over previous works.

D$^{3}$ToM: Decider-Guided Dynamic Token Merging for Accelerating Diffusion MLLMs

Nov 15, 2025

Abstract: Diffusion-based multimodal large language models (Diffusion MLLMs) have recently demonstrated impressive non-autoregressive generative capabilities across vision-and-language tasks. However, Diffusion MLLMs exhibit substantially slower inference than autoregressive models: each denoising step employs full bidirectional self-attention over the entire sequence, resulting in cubic decoding complexity that becomes computationally impractical with thousands of visual tokens. To address this challenge, we propose D$^{3}$ToM, a Decider-guided dynamic token merging method that dynamically merges redundant visual tokens at different denoising steps to accelerate inference in Diffusion MLLMs. At each denoising step, D$^{3}$ToM uses decider tokens (the tokens generated in the previous denoising step) to build an importance map over all visual tokens. It then retains a proportion of the most salient tokens and merges the remainder through similarity-based aggregation. This plug-and-play module integrates into a single transformer layer, physically shortening the visual token sequence for all subsequent layers without altering model parameters. Moreover, D$^{3}$ToM employs a merge ratio that varies dynamically with each denoising step, aligning with the native decoding process of Diffusion MLLMs and achieving superior performance under equivalent computational budgets. Extensive experiments show that D$^{3}$ToM accelerates inference while preserving competitive performance. The code is released at https://github.com/bcmi/D3ToM-Diffusion-MLLM.
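
The keep-and-merge step described in this abstract can be illustrated with a minimal sketch. It is not the released D$^{3}$ToM code: the function names, the use of cosine similarity, and plain averaging as the aggregation rule are assumptions made for illustration, and the importance scores are taken as given rather than derived from decider tokens.

```python
import math

def cosine(a, b):
    """Cosine similarity between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def merge_tokens(tokens, importance, keep_ratio):
    """Keep the highest-importance tokens and fold every other token
    into its most similar kept token by averaging (an assumed rule)."""
    n_keep = max(1, round(len(tokens) * keep_ratio))
    order = sorted(range(len(tokens)), key=lambda i: importance[i], reverse=True)
    kept = sorted(order[:n_keep])      # preserve original sequence order
    dropped = order[n_keep:]
    groups = {k: [tokens[k]] for k in kept}
    for d in dropped:
        target = max(kept, key=lambda k: cosine(tokens[d], tokens[k]))
        groups[target].append(tokens[d])
    merged = []
    for k in kept:
        members = groups[k]
        dim = len(members[0])
        merged.append([sum(m[j] for m in members) / len(members) for j in range(dim)])
    return merged

# Four toy visual tokens; half are kept, so the sequence shrinks from 4 to 2.
toy_tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
toy_importance = [0.9, 0.1, 0.8, 0.2]
shortened = merge_tokens(toy_tokens, toy_importance, keep_ratio=0.5)
```

In this sketch `keep_ratio` is a fixed argument; the abstract describes a ratio that changes with the denoising step, which would correspond to passing a different value at each step.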

CareCom: Generative Image Composition with Calibrated Reference Features

Nov 14, 2025

Abstract: Image composition aims to seamlessly insert a foreground object into a background. Despite the huge progress in generative image composition, existing methods still struggle with simultaneous detail preservation and foreground pose/view adjustment. To address this issue, we extend the existing generative composition model to a multi-reference version, which allows using an arbitrary number of foreground reference images. Furthermore, we propose to calibrate the global and local features of the foreground reference images to make them compatible with the background information. The calibrated reference features can supplement the original reference features with useful global and local information of proper pose/view. Extensive experiments on MVImgNet and MureCom demonstrate that the generative model can greatly benefit from the calibrated reference features.

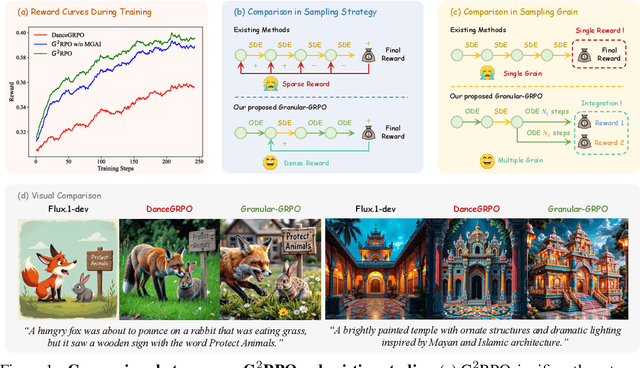

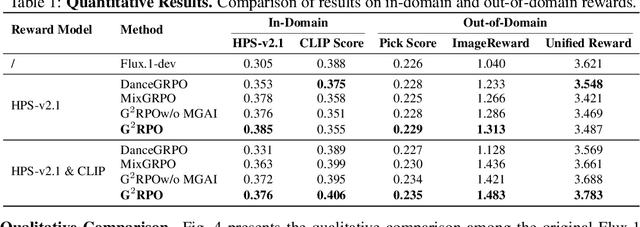

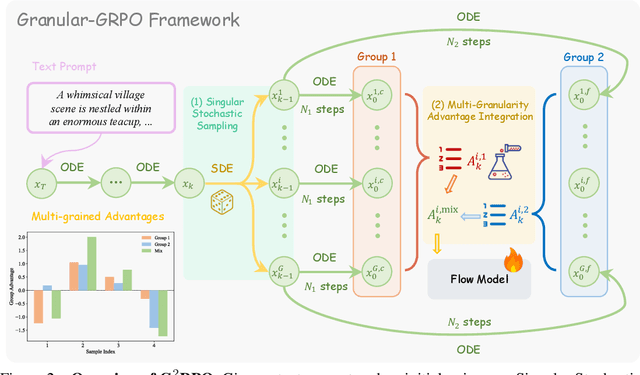

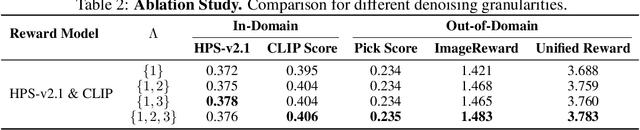

$\text{G}^2$RPO: Granular GRPO for Precise Reward in Flow Models

Oct 02, 2025

Abstract: The integration of online reinforcement learning (RL) into diffusion and flow models has recently emerged as a promising approach for aligning generative models with human preferences. Stochastic sampling via Stochastic Differential Equations (SDE) is employed during the denoising process to generate diverse denoising directions for RL exploration. While existing methods effectively explore potential high-value samples, they suffer from sub-optimal preference alignment due to sparse and narrow reward signals. To address these challenges, we propose a novel Granular-GRPO ($\text{G}^2$RPO) framework that achieves precise and comprehensive reward assessments of sampling directions in reinforcement learning of flow models. Specifically, a Singular Stochastic Sampling strategy is introduced to support step-wise stochastic exploration while enforcing a high correlation between the reward and the injected noise, thereby facilitating a faithful reward for each SDE perturbation. Concurrently, to eliminate the bias inherent in fixed-granularity denoising, we introduce a Multi-Granularity Advantage Integration module that aggregates advantages computed at multiple diffusion scales, producing a more comprehensive and robust evaluation of the sampling directions. Experiments conducted on various reward models, including both in-domain and out-of-domain evaluations, demonstrate that our $\text{G}^2$RPO significantly outperforms existing flow-based GRPO baselines, highlighting its effectiveness and robustness.
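
As a rough illustration of aggregating advantages across granularities, the sketch below computes the standard GRPO group-normalized advantage (reward minus group mean, divided by group standard deviation) separately for each granularity and then averages the results per sample. The abstract does not state the aggregation rule, so the plain mean, the function names, and the `eps` stabilizer are assumptions.

```python
from statistics import mean, pstdev

def group_advantages(rewards, eps=1e-8):
    """Group-normalized advantages: (r - mean) / (std + eps) within one sample group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]

def integrate_advantages(rewards_by_granularity):
    """Average per-sample advantages over several granularities.

    rewards_by_granularity[g][i] is the reward of sample i evaluated at
    granularity g. Averaging is an assumed aggregation rule.
    """
    per_scale = [group_advantages(r) for r in rewards_by_granularity]
    n_samples = len(per_scale[0])
    return [mean([adv[i] for adv in per_scale]) for i in range(n_samples)]

# Three samples scored at two hypothetical granularities.
combined = integrate_advantages([[1.0, 2.0, 3.0], [1.0, 3.0, 2.0]])
```

A sample that ranks well at only one granularity ends up with a smaller combined advantage than one that ranks well at every granularity, which is the intuition behind using more than one scale.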

Unsupervised Exposure Correction

Jul 23, 2025

Abstract: Current exposure correction methods face three challenges: labor-intensive paired data annotation, limited generalizability, and performance degradation in low-level computer vision tasks. In this work, we introduce an innovative Unsupervised Exposure Correction (UEC) method that eliminates the need for manual annotations, offers improved generalizability, and enhances performance in low-level downstream tasks. Our model is trained using freely available paired data from an emulated Image Signal Processing (ISP) pipeline. This approach does not need expensive manual annotations, thereby minimizing individual style biases from the annotation and consequently improving its generalizability. Furthermore, we present a large-scale Radiometry Correction Dataset, specifically designed to emphasize exposure variations, to facilitate unsupervised learning. In addition, we develop a transformation function that preserves image details and outperforms state-of-the-art supervised methods [12], while utilizing only 0.01% of their parameters. Our work further investigates the broader impact of exposure correction on downstream tasks, including edge detection, demonstrating its effectiveness in mitigating the adverse effects of poor exposure on low-level features. The source code and dataset are publicly available at https://github.com/BeyondHeaven/uec_code.

Object Placement for Anything

Apr 16, 2025

Abstract: Object placement aims to determine the appropriate placement (e.g., location and size) of a foreground object when placing it on the background image. Most previous works are limited by small-scale labeled datasets, which hinders the real-world application of object placement. In this work, we devise a semi-supervised framework that can exploit large-scale unlabeled datasets to promote the generalization ability of discriminative object placement models. The discriminative models predict the rationality label for each foreground placement given a foreground-background pair. To better leverage the labeled data, under the semi-supervised framework, we further propose to transfer the knowledge of rationality variation, i.e., whether a change of foreground placement would result in a change of rationality label, from labeled data to unlabeled data. Extensive experiments demonstrate that our framework can effectively enhance the generalization ability of discriminative object placement models.

The Devil is in the Prompts: Retrieval-Augmented Prompt Optimization for Text-to-Video Generation

Apr 16, 2025

Abstract: The evolution of Text-to-video (T2V) generative models, trained on large-scale datasets, has been marked by significant progress. However, the sensitivity of T2V generative models to input prompts highlights the critical role of prompt design in influencing generative outcomes. Prior research has predominantly relied on Large Language Models (LLMs) to align user-provided prompts with the distribution of training prompts, albeit without tailored guidance encompassing prompt vocabulary and sentence structure nuances. To this end, we introduce RAPO, a novel Retrieval-Augmented Prompt Optimization framework. To address potential inaccuracies and ambiguous details in LLM-generated prompts, RAPO refines the naive prompts through dual optimization branches, selecting the superior prompt for T2V generation. The first branch augments user prompts with diverse modifiers extracted from a learned relational graph, refining them to align with the format of training prompts via a fine-tuned LLM. Conversely, the second branch rewrites the naive prompt using a pre-trained LLM following a well-defined instruction set. Extensive experiments demonstrate that RAPO can effectively enhance both the static and dynamic dimensions of generated videos, demonstrating the significance of prompt optimization for user-provided prompts. Project website: https://whynothaha.github.io/Prompt_optimizer/RAPO.html.

Light-A-Video: Training-free Video Relighting via Progressive Light Fusion

Feb 12, 2025

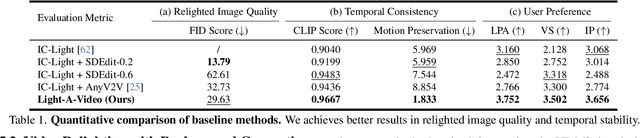

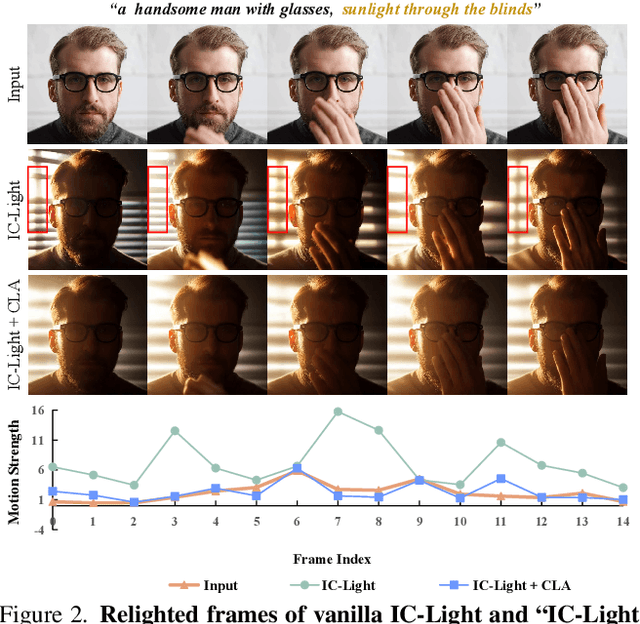

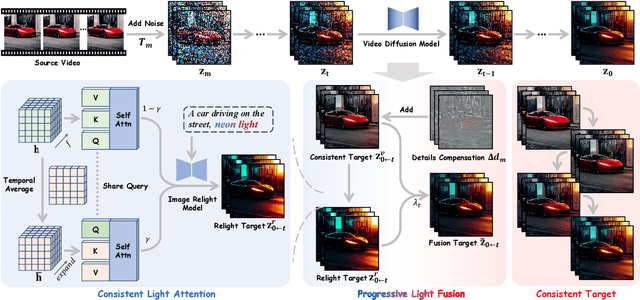

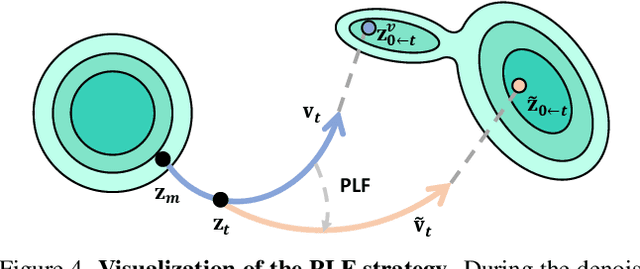

Abstract: Recent advancements in image relighting models, driven by large-scale datasets and pre-trained diffusion models, have enabled the imposition of consistent lighting. However, video relighting still lags, primarily due to the excessive training costs and the scarcity of diverse, high-quality video relighting datasets. A simple application of image relighting models on a frame-by-frame basis leads to several issues: lighting source inconsistency and relighted appearance inconsistency, resulting in flickers in the generated videos. In this work, we propose Light-A-Video, a training-free approach to achieve temporally smooth video relighting. Adapted from image relighting models, Light-A-Video introduces two key techniques to enhance lighting consistency. First, we design a Consistent Light Attention (CLA) module, which enhances cross-frame interactions within the self-attention layers to stabilize the generation of the background lighting source. Second, leveraging the physical principle of light transport independence, we apply linear blending between the source video's appearance and the relighted appearance, using a Progressive Light Fusion (PLF) strategy to ensure smooth temporal transitions in illumination. Experiments show that Light-A-Video improves the temporal consistency of relighted video while maintaining the image quality, ensuring coherent lighting transitions across frames. Project page: https://bujiazi.github.io/light-a-video.github.io/.
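
The linear blending mentioned in this abstract can be sketched as a weighted sum of two appearances whose weight changes step by step. The abstract does not give the schedule, so the linear ramp, its direction (from source toward relighted), and all names below are assumptions for illustration only.

```python
def blend(source, relit, weight):
    """Linear blend of two appearances given as flat lists of pixel values.
    weight = 0.0 returns the source, weight = 1.0 returns the relighted one."""
    return [(1.0 - weight) * s + weight * r for s, r in zip(source, relit)]

def progressive_weights(num_steps):
    """Assumed schedule: weights rise linearly from 0.0 to 1.0 over the steps."""
    if num_steps == 1:
        return [1.0]
    return [t / (num_steps - 1) for t in range(num_steps)]

# Toy two-pixel "frames": the blend moves gradually from source to relighted.
source_frame = [0.0, 1.0]
relit_frame = [1.0, 0.0]
trajectory = [blend(source_frame, relit_frame, w) for w in progressive_weights(3)]
```

Because each step changes the target by only a small amount, consecutive blends stay close to one another, which is the property that a gradual fusion schedule is meant to provide.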

MureObjectStitch: Multi-reference Image Composition

Nov 12, 2024

Abstract: Generative image composition aims to regenerate the given foreground object in the background image to produce a realistic composite image. In this work, we propose an effective finetuning strategy for generative image composition models, in which we finetune a pretrained model using one or more images containing the same foreground object. Moreover, we propose a multi-reference strategy, which allows the model to take in multiple reference images of the foreground object. Experiments on the MureCom dataset verify the effectiveness of our method.

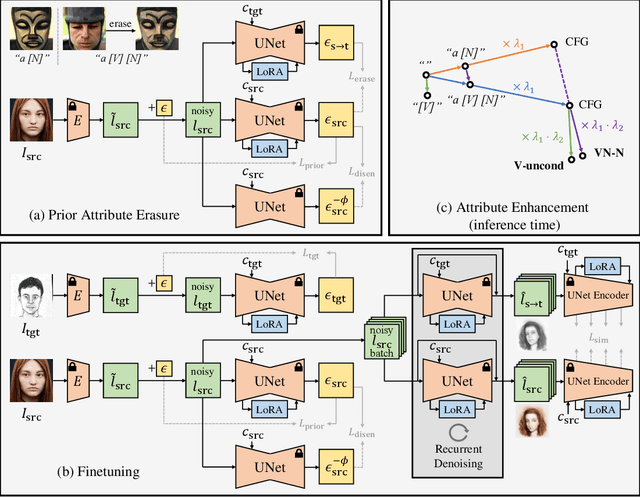

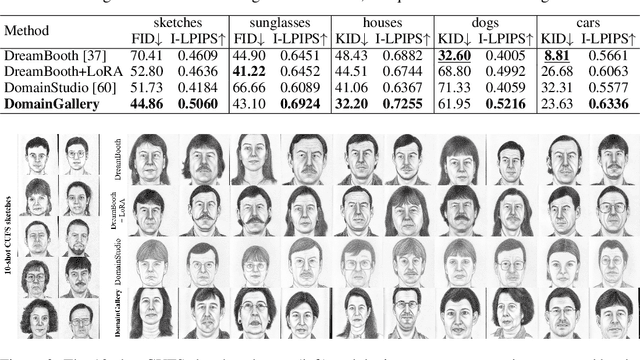

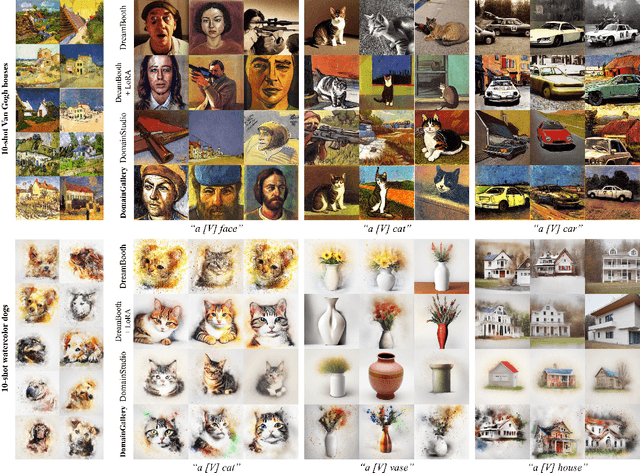

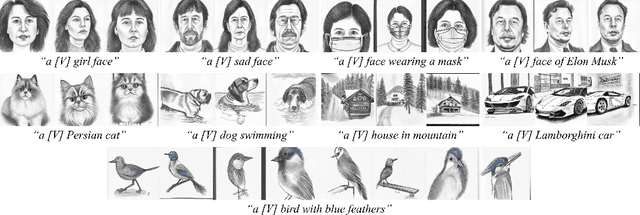

DomainGallery: Few-shot Domain-driven Image Generation by Attribute-centric Finetuning

Nov 07, 2024

Abstract: The recent progress in text-to-image models pretrained on large-scale datasets has enabled us to generate various images as long as we provide a text prompt describing what we want. Nevertheless, the applicability of these models is still limited when we expect to generate images that fall into a specific domain that is either hard to describe or unseen by the models. In this work, we propose DomainGallery, a few-shot domain-driven image generation method that finetunes pretrained Stable Diffusion on few-shot target datasets in an attribute-centric manner. Specifically, DomainGallery features prior attribute erasure, attribute disentanglement, regularization and enhancement. These techniques are tailored to few-shot domain-driven generation in order to solve key issues that previous works have failed to settle. Extensive experiments validate the superior performance of DomainGallery on a variety of domain-driven generation scenarios. Code is available at https://github.com/Ldhlwh/DomainGallery.
