Hui Yuan

Learning Dynamic Scene Reconstruction with Sinusoidal Geometric Priors

Dec 25, 2025

Abstract:We propose SirenPose, a novel loss function that combines the periodic activation properties of sinusoidal representation networks with geometric priors derived from keypoint structures to improve the accuracy of dynamic 3D scene reconstruction. Existing approaches often struggle to maintain motion modeling accuracy and spatiotemporal consistency in fast-moving and multi-target scenes. By introducing physics-inspired constraint mechanisms, SirenPose enforces coherent keypoint predictions across both spatial and temporal dimensions. We further expand the training dataset to 600,000 annotated instances to support robust learning. Experimental results demonstrate that models trained with SirenPose achieve significant improvements in spatiotemporal consistency metrics compared to prior methods, showing superior performance in handling rapid motion and complex scene changes.

UGAE: Unified Geometry and Attribute Enhancement for G-PCC Compressed Point Clouds

Oct 27, 2025

Abstract:Lossy compression of point clouds reduces storage and transmission costs; however, it inevitably leads to irreversible distortion in geometry structure and attribute information. To address these issues, we propose a unified geometry and attribute enhancement (UGAE) framework, which consists of three core components: post-geometry enhancement (PoGE), pre-attribute enhancement (PAE), and post-attribute enhancement (PoAE). In PoGE, a Transformer-based sparse convolutional U-Net is used to reconstruct the geometry structure with high precision by predicting voxel occupancy probabilities. Building on the refined geometry structure, PAE introduces an innovative enhanced geometry-guided recoloring strategy, which uses a detail-aware K-Nearest Neighbors (DA-KNN) method to achieve accurate recoloring and effectively preserve high-frequency details before attribute compression. Finally, at the decoder side, PoAE uses an attribute residual prediction network with a weighted mean squared error (W-MSE) loss to enhance the quality of high-frequency regions while maintaining the fidelity of low-frequency regions. UGAE significantly outperformed existing methods on three benchmark datasets: 8iVFB, Owlii, and MVUB. Compared to the latest G-PCC test model (TMC13v29), UGAE achieved an average BD-PSNR gain of 9.98 dB and 90.98% BD-bitrate savings for geometry under the D1 metric, as well as a 3.67 dB BD-PSNR improvement with 56.88% BD-bitrate savings for attributes on the Y component. Additionally, it improved perceptual quality significantly.

STQE: Spatial-Temporal Quality Enhancement for G-PCC Compressed Dynamic Point Clouds

Jul 23, 2025

Abstract:Very few studies have addressed quality enhancement for compressed dynamic point clouds. In particular, the effective exploitation of spatial-temporal correlations between point cloud frames remains largely unexplored. Addressing this gap, we propose a spatial-temporal attribute quality enhancement (STQE) network that exploits both spatial and temporal correlations to improve the visual quality of G-PCC compressed dynamic point clouds. Our contributions include a recoloring-based motion compensation module that remaps reference attribute information to the current frame geometry to achieve precise inter-frame geometric alignment, a channel-aware temporal attention module that dynamically highlights relevant regions across bidirectional reference frames, a Gaussian-guided neighborhood feature aggregation module that efficiently captures spatial dependencies between geometry and color attributes, and a joint loss function based on the Pearson correlation coefficient, designed to alleviate over-smoothing effects typical of point-wise mean squared error optimization. When applied to the latest G-PCC test model, STQE achieved improvements of 0.855 dB, 0.682 dB, and 0.828 dB in delta PSNR, with Bjøntegaard Delta rate (BD-rate) reductions of -25.2%, -31.6%, and -32.5% for the Luma, Cb, and Cr components, respectively.
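To make the idea of a Pearson-based joint loss concrete, here is a minimal sketch in plain Python. The abstract does not give the exact formulation, so the combination `mse + weight * (1 - pearson)`, the function names, and the default `weight` are all assumptions chosen for illustration, not the authors' loss.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    denom = math.sqrt(var_x * var_y)
    # A constant signal has no defined correlation; treat it as 0 here.
    return cov / denom if denom > 0 else 0.0

def mse(x, y):
    """Point-wise mean squared error."""
    return sum((a - b) ** 2 for a, b in zip(x, y)) / len(x)

def joint_loss(pred, target, weight=0.5):
    """Hypothetical joint loss: MSE plus a penalty for low correlation."""
    return mse(pred, target) + weight * (1.0 - pearson(pred, target))

target = [10.0, 30.0, 10.0, 30.0]
flat = [20.0, 20.0, 20.0, 20.0]      # over-smoothed: predicts the mean everywhere
print(joint_loss(target, target))    # perfect prediction
print(joint_loss(flat, target))      # flat prediction pays the full correlation penalty
```

In this toy setup, a flat prediction that minimizes point-wise error on average still incurs the correlation penalty, which is the intuition behind using a correlation term against over-smoothing.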

LPCM: Learning-based Predictive Coding for LiDAR Point Cloud Compression

May 26, 2025

Abstract:Since the data volume of LiDAR point clouds is very large, efficient compression is necessary to reduce their storage and transmission costs. However, existing learning-based compression methods do not exploit the inherent angular resolution of LiDAR and ignore the significant differences in the correlation of geometry information at different bitrates. The predictive geometry coding method in the geometry-based point cloud compression (G-PCC) standard uses the inherent angular resolution to predict the azimuth angles. However, it only models a simple linear relationship between the azimuth angles of neighboring points. Moreover, it does not optimize the quantization parameters for residuals on each coordinate axis in the spherical coordinate system. We propose a learning-based predictive coding method (LPCM) with both high-bitrate and low-bitrate coding modes. LPCM converts point clouds into predictive trees using the spherical coordinate system. In high-bitrate coding mode, we use a lightweight Long-Short-Term Memory-based predictive (LSTM-P) module that captures long-term geometry correlations between different coordinates to efficiently predict and compress the elevation angles. In low-bitrate coding mode, where geometry correlation degrades, we introduce a variational radius compression (VRC) module to directly compress the point radii. Then, we analyze why the quantization of spherical coordinates differs from that of Cartesian coordinates and propose a differential evolution (DE)-based quantization parameter selection method, which improves rate-distortion performance without increasing coding time. Experimental results on the LiDAR benchmark SemanticKITTI and the MPEG-specified Ford datasets show that LPCM outperforms G-PCC and other learning-based methods.
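As a rough illustration of the spherical representation mentioned above, the sketch below converts a point to (radius, azimuth, elevation) and measures the Cartesian displacement caused by quantizing the azimuth. The angle convention, the function names, and the quantization step are assumptions for illustration; the abstract does not specify them. The example shows one well-known geometric effect (a fixed angular error moves distant points further), which is not necessarily the analysis given in the paper.

```python
import math

def cartesian_to_spherical(x, y, z):
    """Return (radius, azimuth, elevation); elevation is measured from the xy-plane."""
    r = math.sqrt(x * x + y * y + z * z)
    azimuth = math.atan2(y, x)
    elevation = math.asin(z / r) if r > 0 else 0.0
    return r, azimuth, elevation

def spherical_to_cartesian(r, azimuth, elevation):
    """Inverse of cartesian_to_spherical under the same convention."""
    return (
        r * math.cos(elevation) * math.cos(azimuth),
        r * math.cos(elevation) * math.sin(azimuth),
        r * math.sin(elevation),
    )

def azimuth_quantization_error(r, azimuth, elevation, step):
    """Cartesian distance between a point and its azimuth-quantized copy."""
    quantized = round(azimuth / step) * step
    original = spherical_to_cartesian(r, azimuth, elevation)
    moved = spherical_to_cartesian(r, quantized, elevation)
    return math.dist(original, moved)

# The same angular step displaces a far point more than a near one.
near = azimuth_quantization_error(5.0, 0.1234, 0.0, step=0.01)
far = azimuth_quantization_error(50.0, 0.1234, 0.0, step=0.01)
print(near, far)
```

Because the displacement grows with the radius, a uniform step on an angle does not translate into a uniform error in Cartesian space, which is one reason per-axis quantization parameters may be worth tuning separately.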

EdgeRegNet: Edge Feature-based Multimodal Registration Network between Images and LiDAR Point Clouds

Mar 19, 2025

Abstract:Cross-modal data registration has long been a critical task in computer vision, with extensive applications in autonomous driving and robotics. Accurate and robust registration methods are essential for aligning data from different modalities, forming the foundation for multimodal sensor data fusion and enhancing perception systems' accuracy and reliability. The registration task between 2D images captured by cameras and 3D point clouds captured by Light Detection and Ranging (LiDAR) sensors is usually treated as a visual pose estimation problem. High-dimensional feature similarities from different modalities are leveraged to identify pixel-point correspondences, followed by pose estimation techniques using least squares methods. However, existing approaches often resort to downsampling the original point cloud and image data due to computational constraints, inevitably leading to a loss in precision. Additionally, high-dimensional features extracted using different feature extractors from various modalities require specific techniques to mitigate cross-modal differences for effective matching. To address these challenges, we propose a method that uses edge information from the original point clouds and images for cross-modal registration. We retain crucial information from the original data by extracting edge points and pixels, enhancing registration accuracy while maintaining computational efficiency. The use of edge points and edge pixels allows us to introduce an attention-based feature exchange block to eliminate cross-modal disparities. Furthermore, we incorporate an optimal matching layer to improve correspondence identification. We validate the accuracy of our method on the KITTI and nuScenes datasets, demonstrating its state-of-the-art performance.

PAPI-Reg: Patch-to-Pixel Solution for Efficient Cross-Modal Registration between LiDAR Point Cloud and Camera Image

Mar 19, 2025

Abstract:The primary requirement for cross-modal data fusion is the precise alignment of data from different sensors. However, the calibration between LiDAR point clouds and camera images is typically time-consuming and requires an external calibration board or specific environmental features. Cross-modal registration effectively solves this problem by aligning the data directly without requiring external calibration. However, due to the domain gap between the point cloud and the image, existing methods rarely achieve satisfactory registration accuracy while maintaining real-time performance. To address this issue, we propose a framework that projects point clouds into several 2D representations for matching with camera images, which not only leverages the geometric characteristics of LiDAR point clouds more effectively but also bridges the domain gap between the point cloud and the image. Moreover, to tackle the challenges of cross-modal differences and the limited overlap between LiDAR point clouds and images in the image matching task, we introduce a multi-scale feature extraction network to effectively extract features from both camera images and the projection maps of the LiDAR point cloud. Additionally, we propose a patch-to-pixel matching network to provide more effective supervision and achieve higher accuracy. We validate the performance of our model through experiments on the KITTI and nuScenes datasets. Our network achieves real-time performance and extremely high registration accuracy. On the KITTI dataset, our model achieves a registration accuracy rate of over 99%.

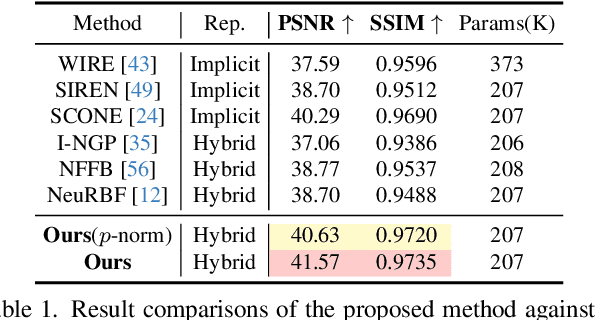

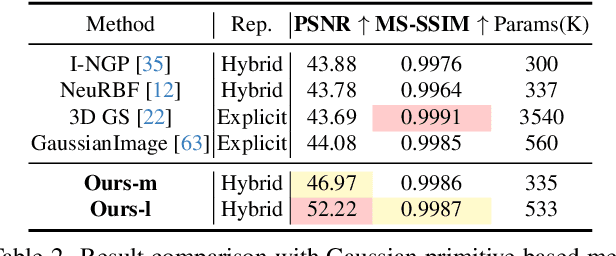

MetricGrids: Arbitrary Nonlinear Approximation with Elementary Metric Grids based Implicit Neural Representation

Mar 13, 2025

Abstract:This paper presents MetricGrids, a novel grid-based neural representation that combines elementary metric grids in various metric spaces to approximate complex nonlinear signals. While grid-based representations are widely adopted for their efficiency and scalability, the existing feature grids with linear indexing for continuous-space points can only provide degenerate linear latent space representations, and such representations cannot be adequately compensated to represent complex nonlinear signals by the following compact decoder. To address this problem while keeping the simplicity of a regular grid structure, our approach builds upon the standard grid-based paradigm by constructing multiple elementary metric grids as high-order terms to approximate complex nonlinearities, following the Taylor expansion principle. Furthermore, we enhance model compactness with hash encoding based on different sparsities of the grids to prevent detrimental hash collisions, and a high-order extrapolation decoder to reduce explicit grid storage requirements. Experimental results on both 2D and 3D reconstructions demonstrate the superior fitting and rendering accuracy of the proposed method across diverse signal types, validating its robustness and generalizability. Code is available at https://github.com/wangshu31/MetricGrids.

Interleaved Block-based Learned Image Compression with Feature Enhancement and Quantization Error Compensation

Feb 21, 2025

Abstract:In recent years, learned image compression (LIC) methods have achieved significant performance improvements. However, obtaining a more compact latent representation and reducing the impact of quantization errors remain key challenges in the field of LIC. To address these challenges, we propose a feature extraction module, a feature refinement module, and a feature enhancement module. Our feature extraction module shuffles the pixels in the image, splits the resulting image into sub-images, and extracts coarse features from the sub-images. Our feature refinement module stacks the coarse features and uses an attention refinement block composed of concatenated three-dimensional convolution residual blocks to learn more compact latent features by exploiting correlations across channels, within sub-images (intra-sub-image correlations), and across sub-images (inter-sub-image correlations). Our feature enhancement module reduces information loss in the decoded features following quantization. We also propose a quantization error compensation module that mitigates the quantization mismatch between training and testing. Our four modules can be readily integrated into state-of-the-art LIC methods. Experiments show that combining our modules with Tiny-LIC outperforms existing LIC methods and image compression standards in terms of peak signal-to-noise ratio (PSNR) and multi-scale structural similarity (MS-SSIM) on the Kodak dataset and the CLIC dataset.
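One plausible reading of "shuffles the pixels and splits the image into sub-images" is interleaved sampling, where each sub-image takes every `stride`-th pixel at a different offset. The sketch below shows that reading on a nested-list image; the function name, the stride, and the interpretation itself are assumptions, since the abstract does not describe the exact shuffling scheme.

```python
def split_interleaved(image, stride=2):
    """Split a 2D image (list of rows) into stride*stride interleaved sub-images.

    Sub-image (dy, dx) holds the pixels at rows dy, dy+stride, ... and
    columns dx, dx+stride, ...; sub-images are returned in row-major offset order.
    """
    return [
        [row[dx::stride] for row in image[dy::stride]]
        for dy in range(stride)
        for dx in range(stride)
    ]

# A 4x4 image whose pixel values are their row-major indices.
image = [[4 * r + c for c in range(4)] for r in range(4)]
sub_images = split_interleaved(image, stride=2)
for sub in sub_images:
    print(sub)
```

Each sub-image is a downsampled view of the whole picture, so neighbouring sub-images are strongly correlated, which is the kind of inter-sub-image redundancy a refinement stage could exploit.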

FD-LSCIC: Frequency Decomposition-based Learned Screen Content Image Compression

Feb 21, 2025

Abstract:Learned image compression (LIC) methods have already surpassed traditional techniques in compressing natural scene (NS) images. However, directly applying these methods to screen content (SC) images, which possess distinct characteristics such as sharp edges, repetitive patterns, and embedded text and graphics, yields suboptimal results. This paper addresses three key challenges in SC image compression: learning compact latent features, adapting quantization step sizes, and the lack of large SC datasets. To overcome these challenges, we propose a novel compression method that employs a multi-frequency two-stage octave residual block (MToRB) for feature extraction, a cascaded triple-scale feature fusion residual block (CTSFRB) for multi-scale feature integration and a multi-frequency context interaction module (MFCIM) to reduce inter-frequency correlations. Additionally, we introduce an adaptive quantization module that learns scaled uniform noise for each frequency component, enabling flexible control over quantization granularity. Furthermore, we construct a large SC image compression dataset (SDU-SCICD10K), which includes over 10,000 images spanning basic SC images, computer-rendered images, and mixed NS and SC images from both PC and mobile platforms. Experimental results demonstrate that our approach significantly improves SC image compression performance, outperforming traditional standards and state-of-the-art learning-based methods in terms of peak signal-to-noise ratio (PSNR) and multi-scale structural similarity (MS-SSIM).

Training-Free Guidance Beyond Differentiability: Scalable Path Steering with Tree Search in Diffusion and Flow Models

Feb 17, 2025

Abstract:Training-free guidance enables controlled generation in diffusion and flow models, but most existing methods assume differentiable objectives and rely on gradients. This work focuses on training-free guidance addressing challenges from non-differentiable objectives and discrete data distributions. We propose an algorithmic framework TreeG: Tree Search-Based Path Steering Guidance, applicable to both continuous and discrete settings in diffusion and flow models. TreeG offers a unified perspective on training-free guidance: proposing candidates for the next step, evaluating candidates, and selecting the best to move forward, enhanced by a tree search mechanism over active paths or parallelizing exploration. We comprehensively investigate the design space of TreeG over the candidate proposal module and the evaluation function, instantiating TreeG into three novel algorithms. Our experiments show that TreeG consistently outperforms the top guidance baselines in symbolic music generation, small molecule generation, and enhancer DNA design, all of which involve non-differentiable challenges. Additionally, we identify an inference-time scaling law showing TreeG's scalability in inference-time computation.
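The propose, evaluate, and select loop described above can be sketched as a small gradient-free beam search. This is a toy under stated assumptions: the states are integers rather than partially denoised samples, and `guided_search`, `propose`, `evaluate`, and `beam_width` are hypothetical names, not the TreeG algorithms from the paper.

```python
def guided_search(initial_states, propose, evaluate, steps, beam_width):
    """Gradient-free path steering: propose candidates, score them, keep the best.

    `propose(state)` returns candidate next states, and `evaluate(state)` returns
    a score to maximize; neither needs to be differentiable.
    """
    active = list(initial_states)
    for _ in range(steps):
        # Propose: expand every active path into candidate next states.
        candidates = {c for state in active for c in propose(state)}
        # Evaluate and select: keep the top-scoring candidates as the new active set.
        active = sorted(candidates, key=evaluate, reverse=True)[:beam_width]
    return max(active, key=evaluate)

def propose(x):
    # Toy proposal module: three discrete moves from the current state.
    return [x - 1, x + 1, x * 2]

def evaluate(x):
    # Toy objective on discrete states, used only through its values.
    return -abs(x - 10)

best = guided_search([1], propose, evaluate, steps=5, beam_width=2)
print(best)
```

Raising `beam_width` or the number of proposals per state spends more inference-time compute on exploration, which is the knob an inference-time scaling study would vary.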
