Xiaolong Mao

STQE: Spatial-Temporal Quality Enhancement for G-PCC Compressed Dynamic Point Clouds

Jul 23, 2025

Abstract:Very few studies have addressed quality enhancement for compressed dynamic point clouds. In particular, the effective exploitation of spatial-temporal correlations between point cloud frames remains largely unexplored. Addressing this gap, we propose a spatial-temporal attribute quality enhancement (STQE) network that exploits both spatial and temporal correlations to improve the visual quality of G-PCC compressed dynamic point clouds. Our contributions include a recoloring-based motion compensation module that remaps reference attribute information to the current frame geometry to achieve precise inter-frame geometric alignment, a channel-aware temporal attention module that dynamically highlights relevant regions across bidirectional reference frames, a Gaussian-guided neighborhood feature aggregation module that efficiently captures spatial dependencies between geometry and color attributes, and a joint loss function based on the Pearson correlation coefficient, designed to alleviate over-smoothing effects typical of point-wise mean squared error optimization. When applied to the latest G-PCC test model, STQE achieved improvements of 0.855 dB, 0.682 dB, and 0.828 dB in delta PSNR, with Bjøntegaard Delta rate (BD-rate) reductions of -25.2%, -31.6%, and -32.5% for the Luma, Cb, and Cr components, respectively.
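
The abstract above mentions a joint loss that combines point-wise mean squared error with a Pearson-correlation term. The exact formulation is not given here, so the following is only a minimal sketch under assumptions: the function names, the additive form `MSE + weight * (1 - r)`, and the `weight` parameter are all hypothetical and are not taken from the paper. It illustrates how two predictions with identical MSE can be separated by a correlation term.

```python
import math

def mse(pred, target):
    """Point-wise mean squared error between two equal-length sequences."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)

def pearson(pred, target):
    """Pearson correlation coefficient; returns 0.0 if either input is constant."""
    n = len(pred)
    mean_p = sum(pred) / n
    mean_t = sum(target) / n
    cov = sum((p - mean_p) * (t - mean_t) for p, t in zip(pred, target))
    var_p = sum((p - mean_p) ** 2 for p in pred)
    var_t = sum((t - mean_t) ** 2 for t in target)
    denom = math.sqrt(var_p * var_t)
    return cov / denom if denom > 0 else 0.0

def joint_loss(pred, target, weight=0.5):
    """Hypothetical joint loss: MSE plus a penalty for low correlation.

    The additive form and the weight are assumptions made for illustration.
    """
    return mse(pred, target) + weight * (1.0 - pearson(pred, target))

# Toy attribute values (for example, one colour channel of four points).
target = [0.0, 1.0, 0.0, 1.0]
pred_a = [0.25, 0.75, 0.25, 0.75]    # follows the pattern of the target
pred_b = [0.25, 1.25, -0.25, 0.75]   # same squared errors, distorted pattern

print(mse(pred_a, target), mse(pred_b, target))
print(joint_loss(pred_a, target), joint_loss(pred_b, target))
```

Both predictions have the same MSE, but `pred_b` is less correlated with the target, so only the joint loss tells them apart.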

EdgeRegNet: Edge Feature-based Multimodal Registration Network between Images and LiDAR Point Clouds

Mar 19, 2025

Abstract:Cross-modal data registration has long been a critical task in computer vision, with extensive applications in autonomous driving and robotics. Accurate and robust registration methods are essential for aligning data from different modalities, forming the foundation for multimodal sensor data fusion and enhancing perception systems' accuracy and reliability. The registration task between 2D images captured by cameras and 3D point clouds captured by Light Detection and Ranging (LiDAR) sensors is usually treated as a visual pose estimation problem. High-dimensional feature similarities from different modalities are leveraged to identify pixel-point correspondences, followed by pose estimation techniques using least squares methods. However, existing approaches often resort to downsampling the original point cloud and image data due to computational constraints, inevitably leading to a loss in precision. Additionally, high-dimensional features extracted using different feature extractors from various modalities require specific techniques to mitigate cross-modal differences for effective matching. To address these challenges, we propose a method that uses edge information from the original point clouds and images for cross-modal registration. We retain crucial information from the original data by extracting edge points and pixels, enhancing registration accuracy while maintaining computational efficiency. The use of edge points and edge pixels allows us to introduce an attention-based feature exchange block to eliminate cross-modal disparities. Furthermore, we incorporate an optimal matching layer to improve correspondence identification. We validate the accuracy of our method on the KITTI and nuScenes datasets, demonstrating its state-of-the-art performance.

CS-Net: Contribution-based Sampling Network for Point Cloud Simplification

Jan 18, 2025

Abstract:Point cloud sampling plays a crucial role in reducing computation costs and storage requirements for various vision tasks. Traditional sampling methods, such as farthest point sampling, lack task-specific information and, as a result, cannot guarantee optimal performance in specific applications. Learning-based methods train a network to sample the point cloud for the targeted downstream task. However, they do not guarantee that the sampled points are the most relevant ones. Moreover, they may produce duplicate sampled points, which requires completing the sampled point cloud through post-processing techniques. To address these limitations, we propose a contribution-based sampling network (CS-Net), where the sampling operation is formulated as a Top-k operation. To ensure that the network can be trained in an end-to-end way using gradient descent algorithms, we use a differentiable approximation to the Top-k operation via entropy regularization of an optimal transport problem. Our network consists of a feature embedding module, a cascade attention module, and a contribution scoring module. The feature embedding module includes a specifically designed spatial pooling layer to reduce parameters while preserving important features. The cascade attention module combines the outputs of three skip-connected offset-attention layers to emphasize the attractive features and suppress less important ones. The contribution scoring module generates a contribution score for each point and guides the sampling process to prioritize the most important ones. Experiments on the ModelNet40 and PU147 datasets showed that CS-Net achieved state-of-the-art performance in two semantic-based downstream tasks (classification and registration) and two reconstruction-based tasks (compression and surface reconstruction).
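
The abstract above formulates sampling as a Top-k operation relaxed through entropy-regularized optimal transport. As a rough illustration of that general idea (not the CS-Net implementation), the sketch below transports n scores onto two bins, "selected" and "not selected", with Sinkhorn iterations. The function name, the squared-distance cost, the bin positions at 1 and 0, and the values of `eps` and `iters` are all assumptions chosen for the example.

```python
import math

def soft_top_k(scores, k, eps=0.05, iters=200):
    """Soft top-k weights from entropy-regularized optimal transport.

    Each score is moved either to a 'selected' bin (placed at 1) or to a
    'not selected' bin (placed at 0). The bins must receive mass k/n and
    (n - k)/n, so roughly k points end up with a weight close to 1.
    Requires 0 < k < len(scores).
    """
    n = len(scores)
    lo, hi = min(scores), max(scores)
    span = (hi - lo) or 1.0
    s = [(x - lo) / span for x in scores]          # normalise scores to [0, 1]
    # Gibbs kernel exp(-cost / eps) with squared-distance costs to the two bins.
    kernel = [[math.exp(-((x - 1.0) ** 2) / eps), math.exp(-(x ** 2) / eps)]
              for x in s]
    row_mass = [1.0 / n] * n
    col_mass = [k / n, (n - k) / n]
    u = [1.0] * n
    v = [1.0, 1.0]
    for _ in range(iters):                          # Sinkhorn scaling updates
        u = [row_mass[i] / (kernel[i][0] * v[0] + kernel[i][1] * v[1])
             for i in range(n)]
        v = [col_mass[j] / sum(kernel[i][j] * u[i] for i in range(n))
             for j in range(2)]
    # Mass sent to the 'selected' bin, rescaled so the weights sum to k.
    return [n * u[i] * kernel[i][0] * v[0] for i in range(n)]

scores = [0.1, 2.0, 0.3, 1.5, 0.2]                  # toy contribution scores
weights = soft_top_k(scores, k=2)
top2 = sorted(sorted(range(len(scores)), key=lambda i: -weights[i])[:2])
print(weights, top2)
```

Every step is built from differentiable operations (exponentials, sums, divisions), which is what lets such a relaxation be trained by gradient descent in an autodiff framework. A smaller `eps` pushes the weights closer to a hard 0/1 selection.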

SPAC: Sampling-based Progressive Attribute Compression for Dense Point Clouds

Sep 16, 2024

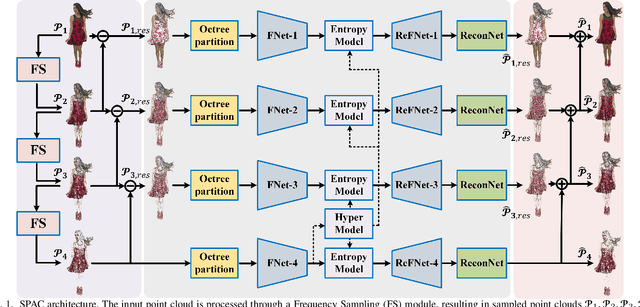

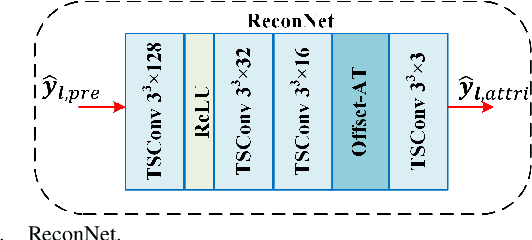

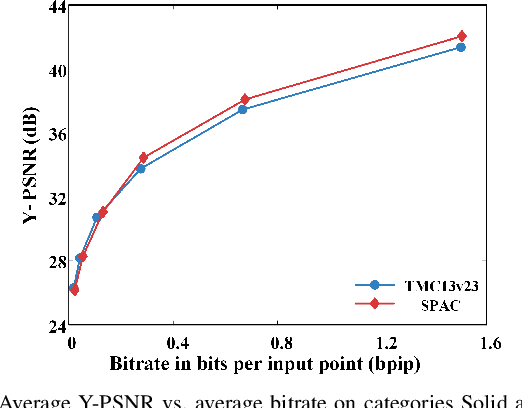

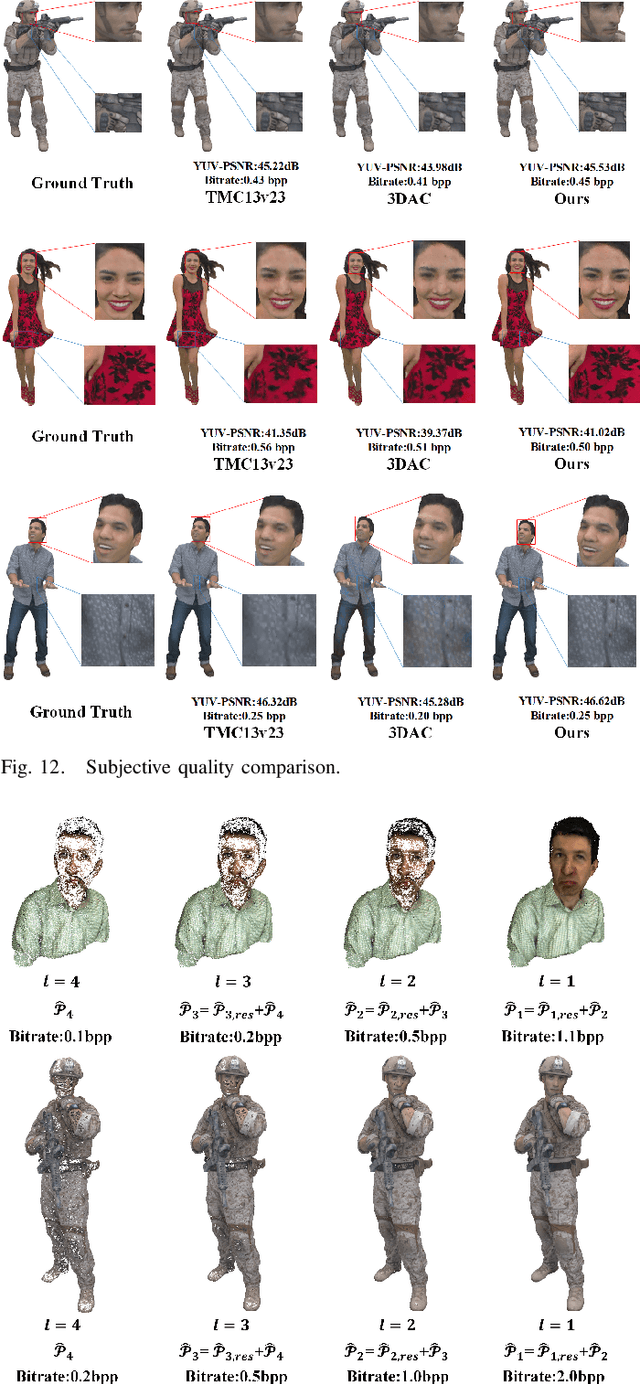

Abstract:We propose an end-to-end attribute compression method for dense point clouds. The proposed method combines a frequency sampling module, an adaptive scale feature extraction module with geometry assistance, and a global hyperprior entropy model. The frequency sampling module uses a Hamming window and the Fast Fourier Transform to extract high-frequency components of the point cloud. The difference between the original point cloud and the sampled point cloud is divided into multiple sub-point clouds. These sub-point clouds are then partitioned using an octree, providing a structured input for feature extraction. The feature extraction module integrates adaptive convolutional layers and uses offset-attention to capture both local and global features. Then, a geometry-assisted attribute feature refinement module is used to refine the extracted attribute features. Finally, a global hyperprior model is introduced for entropy encoding. This model propagates hyperprior parameters from the deepest (base) layer to the other layers, further enhancing the encoding efficiency. At the decoder, a mirrored network is used to progressively restore features and reconstruct the color attribute through transposed convolutional layers. The proposed method encodes base layer information at a low bitrate and progressively adds enhancement layer information to improve reconstruction accuracy. Compared to the latest G-PCC test model (TMC13v23) under the MPEG common test conditions (CTCs), the proposed method achieved an average Bjøntegaard delta bitrate reduction of 24.58% for the Y component (21.23% for YUV combined) on the MPEG Category Solid dataset and 22.48% for the Y component (17.19% for YUV combined) on the MPEG Category Dense dataset. This is the first instance of a learning-based codec outperforming the G-PCC standard on these datasets under the MPEG CTCs.

Enhancing octree-based context models for point cloud geometry compression with attention-based child node number prediction

Jul 11, 2024

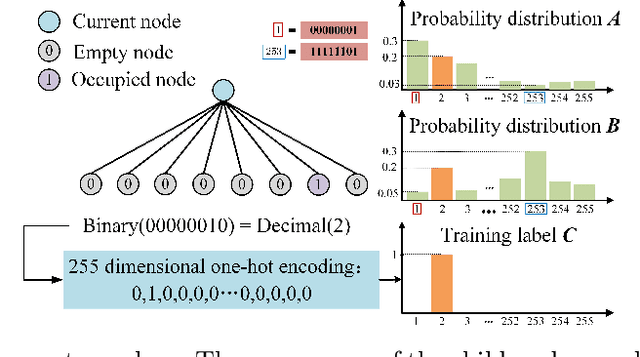

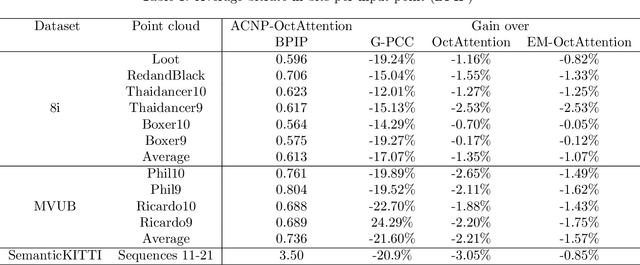

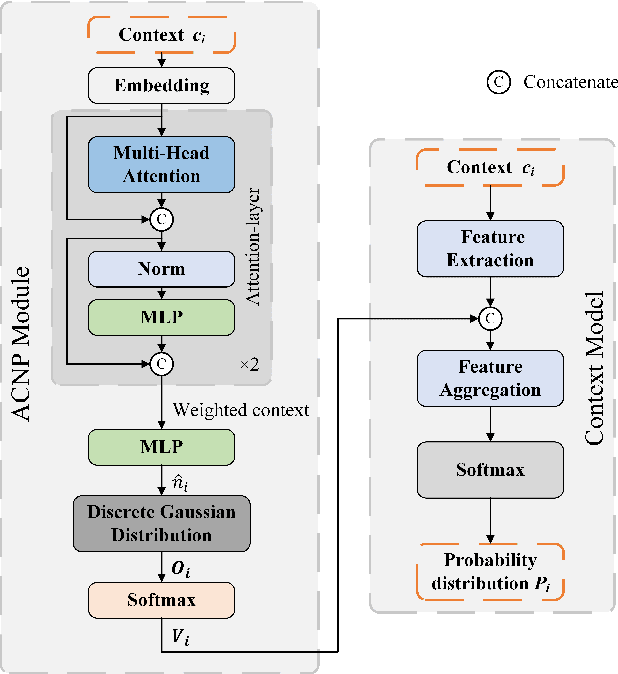

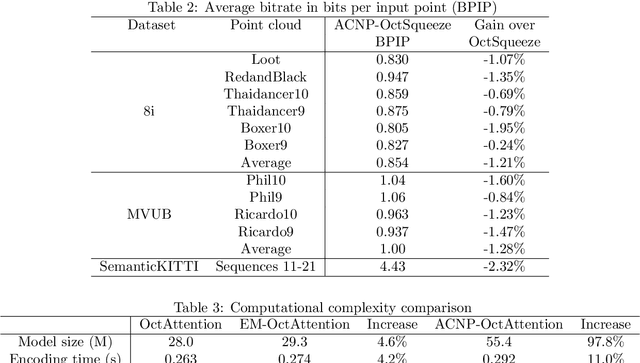

Abstract:In point cloud geometry compression, most octree-based context models use the cross-entropy between the one-hot encoding of node occupancy and the probability distribution predicted by the context model as the loss. This approach converts the problem of predicting the number (a regression problem) and the position (a classification problem) of occupied child nodes into a 255-dimensional classification problem. As a result, it fails to accurately measure the difference between the one-hot encoding and the predicted probability distribution. We first analyze why the cross-entropy loss function fails to accurately measure the difference between the one-hot encoding and the predicted probability distribution. Then, we propose an attention-based child node number prediction (ACNP) module to enhance the context models. The proposed module can predict the number of occupied child nodes and map it into an 8-dimensional vector to assist the context model in predicting the probability distribution of the occupancy of the current node for efficient entropy coding. Experimental results demonstrate that the proposed module enhances the coding efficiency of octree-based context models.

* 2 figures and 2 tables
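
To make the argument in the abstract above more concrete, here is a small sketch of why cross-entropy against a one-hot occupancy target ignores how close a wrong prediction is. It assumes an 8-bit occupancy code per octree node (values 1 to 255), and all helper names are hypothetical. The one-hot encoding of the child count is also an assumption, since the abstract only says the count is mapped to an 8-dimensional vector.

```python
import math

def child_count(occ):
    """Number of occupied child nodes encoded in an 8-bit occupancy code."""
    return bin(occ).count("1")

def count_one_hot(occ):
    """Assumed 8-dimensional encoding of the child count (1..8) as one-hot."""
    vec = [0.0] * 8
    vec[child_count(occ) - 1] = 1.0
    return vec

def hamming(a, b):
    """Number of child positions whose occupancy differs between two codes."""
    return bin(a ^ b).count("1")

def cross_entropy(probs, true_occ):
    """Cross-entropy against a one-hot target over the 255 non-empty codes."""
    return -math.log(probs[true_occ - 1])

def two_peak_prediction(true_occ, wrong_occ, p_true=0.6):
    """Toy distribution: p_true on the true code, the rest on one wrong code."""
    probs = [0.0] * 255
    probs[true_occ - 1] = p_true
    probs[wrong_occ - 1] = 1.0 - p_true
    return probs

true_occ = 0b00001111   # four occupied children
near_occ = 0b00010111   # still four children, one of them shifted
far_occ = 0b10000000    # a single child in a different position

loss_near = cross_entropy(two_peak_prediction(true_occ, near_occ), true_occ)
loss_far = cross_entropy(two_peak_prediction(true_occ, far_occ), true_occ)
print(loss_near, loss_far)
print(hamming(true_occ, near_occ), hamming(true_occ, far_occ))
print(count_one_hot(true_occ))
```

The two predictions receive the same loss even though one places its remaining mass on a code with the correct child count and the other does not. A separate estimate of the child count gives the context model a signal that this loss alone does not provide.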

PCAC-GAN: A Sparse-Tensor-Based Generative Adversarial Network for 3D Point Cloud Attribute Compression

Jul 09, 2024

Abstract:Learning-based methods have proven successful in compressing geometric information for point clouds. For attribute compression, however, they still lag behind non-learning-based methods such as the MPEG G-PCC standard. To bridge this gap, we propose a novel deep learning-based point cloud attribute compression method that uses a generative adversarial network (GAN) with sparse convolution layers. Our method also includes a module that adaptively selects the resolution of the voxels used to voxelize the input point cloud. Sparse vectors are used to represent the voxelized point cloud, and sparse convolutions process the sparse tensors, ensuring computational efficiency. To the best of our knowledge, this is the first application of GANs to compress point cloud attributes. Our experimental results show that our method outperforms existing learning-based techniques and rivals the latest G-PCC test model (TMC13v23) in terms of visual quality.
