Hui He

SVBench: Evaluation of Video Generation Models on Social Reasoning

Dec 25, 2025

Abstract:Recent text-to-video generation models exhibit remarkable progress in visual realism, motion fidelity, and text-video alignment, yet they remain fundamentally limited in their ability to generate socially coherent behavior. Unlike humans, who effortlessly infer intentions, beliefs, emotions, and social norms from brief visual cues, current models tend to render literal scenes without capturing the underlying causal or psychological logic. To systematically evaluate this gap, we introduce the first benchmark for social reasoning in video generation. Grounded in findings from developmental and social psychology, our benchmark organizes thirty classic social cognition paradigms into seven core dimensions, including mental-state inference, goal-directed action, joint attention, social coordination, prosocial behavior, social norms, and multi-agent strategy. To operationalize these paradigms, we develop a fully training-free agent-based pipeline that (i) distills the reasoning mechanism of each experiment, (ii) synthesizes diverse video-ready scenarios, (iii) enforces conceptual neutrality and difficulty control through cue-based critique, and (iv) evaluates generated videos using a high-capacity VLM judge across five interpretable dimensions of social reasoning. Using this framework, we conduct the first large-scale study across seven state-of-the-art video generation systems. Our results reveal substantial performance gaps: while modern models excel in surface-level plausibility, they systematically fail in intention recognition, belief reasoning, joint attention, and prosocial inference.

Circumventing Backdoor Space via Weight Symmetry

Jun 09, 2025

Abstract:Deep neural networks are vulnerable to backdoor attacks, where malicious behaviors are implanted during training. While existing defenses can effectively purify compromised models, they typically require labeled data or specific training procedures, making them difficult to apply beyond supervised learning settings. Notably, recent studies have shown successful backdoor attacks across various learning paradigms, highlighting a critical security concern. To address this gap, we propose Two-stage Symmetry Connectivity (TSC), a novel backdoor purification defense that operates independently of data format and requires only a small fraction of clean samples. Through theoretical analysis, we prove that by leveraging permutation invariance in neural networks and quadratic mode connectivity, TSC amplifies the loss on poisoned samples while maintaining bounded clean accuracy. Experiments demonstrate that TSC achieves robust performance comparable to state-of-the-art methods in supervised learning scenarios. Furthermore, TSC generalizes to self-supervised learning frameworks, such as SimCLR and CLIP, maintaining its strong defense capabilities. Our code is available at https://github.com/JiePeng104/TSC.

FGSGT: Saliency-Guided Siamese Network Tracker Based on Key Fine-Grained Feature Information for Thermal Infrared Target Tracking

Apr 19, 2025

Abstract:Thermal infrared (TIR) images typically lack detailed features and have low contrast, making it challenging for conventional feature extraction models to capture discriminative target characteristics. As a result, trackers are often affected by interference from visually similar objects and are susceptible to tracking drift. To address these challenges, we propose a novel saliency-guided Siamese network tracker based on key fine-grained feature information. First, we introduce a fine-grained feature parallel learning convolutional block with a dual-stream architecture and convolutional kernels of varying sizes. This design captures essential global features from shallow layers, enhances feature diversity, and minimizes the loss of fine-grained information typically encountered in residual connections. In addition, we propose a multi-layer fine-grained feature fusion module that uses bilinear matrix multiplication to effectively integrate features across both deep and shallow layers. Next, we introduce a Siamese residual refinement block that corrects saliency map prediction errors using residual learning. Combined with deep supervision, this mechanism progressively refines predictions, applying supervision at each recursive step to ensure consistent improvements in accuracy. Finally, we present a saliency loss function to constrain the saliency predictions, directing the network to focus on highly discriminative fine-grained features. Extensive experimental results demonstrate that the proposed tracker achieves the highest precision and success rates on the PTB-TIR and LSOTB-TIR benchmarks. It also achieves a top accuracy of 0.78 on the VOT-TIR 2015 benchmark and 0.75 on the VOT-TIR 2017 benchmark.

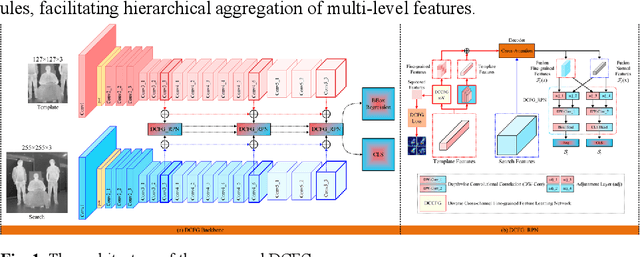

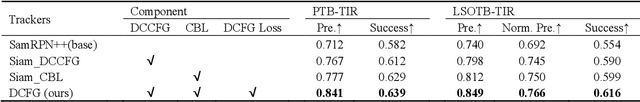

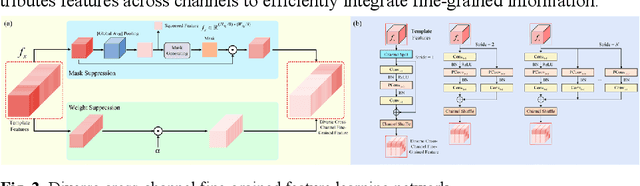

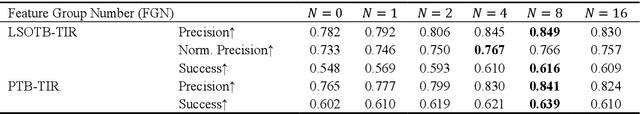

DCFG: Diverse Cross-Channel Fine-Grained Feature Learning and Progressive Fusion Siamese Tracker for Thermal Infrared Target Tracking

Apr 19, 2025

Abstract:To address the challenge of capturing highly discriminative features in thermal infrared (TIR) tracking, we propose a novel Siamese tracker based on cross-channel fine-grained feature learning and progressive fusion. First, we introduce a cross-channel fine-grained feature learning network that employs masks and suppression coefficients to suppress dominant target features, enabling the tracker to capture more detailed and subtle information. The network employs a channel rearrangement mechanism to enhance efficient information flow, coupled with channel equalization to reduce parameter count. Additionally, we incorporate layer-by-layer combination units for effective feature extraction and fusion, thereby minimizing parameter redundancy and computational complexity. The network further employs feature redirection and channel shuffling strategies to better integrate fine-grained details. Second, we propose a specialized cross-channel fine-grained loss function designed to guide feature groups toward distinct discriminative regions of the target, thus improving overall target representation. This loss function includes an inter-channel loss term that promotes orthogonality between channels, maximizing feature diversity and facilitating finer detail capture. Extensive experiments demonstrate that our proposed tracker achieves the highest accuracy, scoring 0.81 on the VOT-TIR 2015 and 0.78 on the VOT-TIR 2017 benchmark, while also outperforming other methods across all evaluation metrics on the LSOTB-TIR and PTB-TIR benchmarks.
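
The inter-channel loss term mentioned in this abstract is described only as promoting orthogonality between channels. As a rough illustration of that idea, and not the paper's actual loss, the sketch below penalizes the mean squared cosine similarity between every pair of channel feature vectors. The function names `cosine` and `inter_channel_orthogonality_loss` and the choice of squared cosine similarity are assumptions made for this example.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # treat an all-zero channel as orthogonal to everything
    return dot / (norm_u * norm_v)


def inter_channel_orthogonality_loss(channels):
    """Mean squared cosine similarity over all distinct channel pairs.

    Hypothetical form: 0.0 when all channels are mutually orthogonal,
    1.0 when every channel points in the same (or opposite) direction.
    """
    count = len(channels)
    if count < 2:
        return 0.0
    total = 0.0
    pairs = 0
    for i in range(count):
        for j in range(i + 1, count):
            total += cosine(channels[i], channels[j]) ** 2
            pairs += 1
    return total / pairs


# Orthogonal channels incur no penalty; parallel channels incur the maximum.
orthogonal = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
parallel = [[1.0, 2.0], [2.0, 4.0]]
loss_orthogonal = inter_channel_orthogonality_loss(orthogonal)
loss_parallel = inter_channel_orthogonality_loss(parallel)
```

Minimizing a term of this shape pushes channels toward responding to different parts of the input, which is the intuition the abstract gives for greater feature diversity.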

RURANET++: An Unsupervised Learning Method for Diabetic Macular Edema Based on SCSE Attention Mechanisms and Dynamic Multi-Projection Head Clustering

Feb 27, 2025

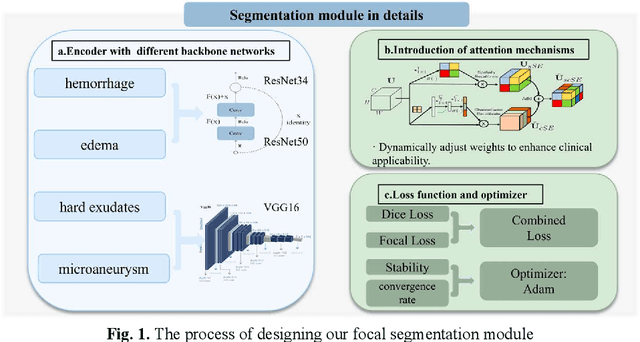

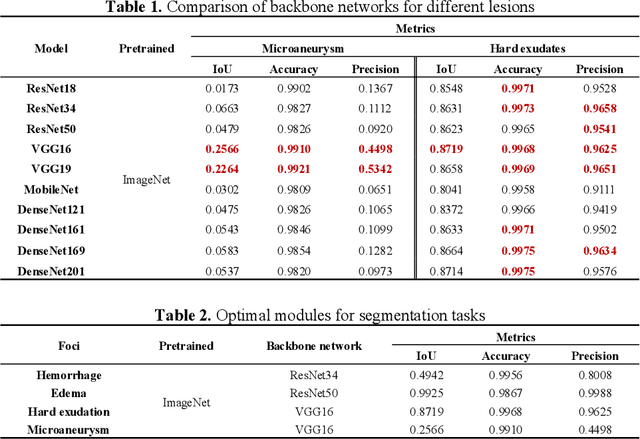

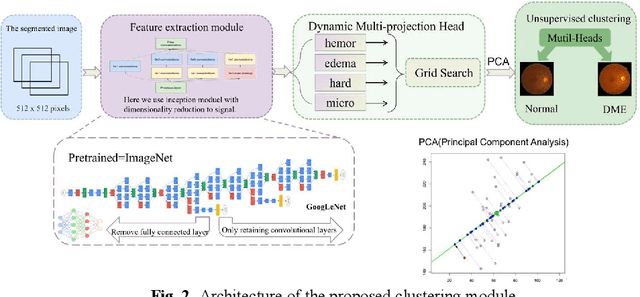

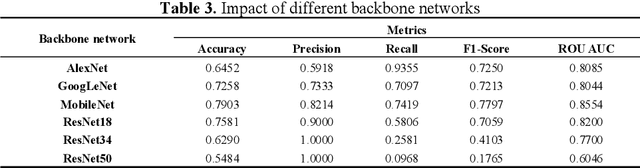

Abstract:Diabetic Macular Edema (DME), a prevalent complication among diabetic patients, constitutes a major cause of visual impairment and blindness. Although deep learning has achieved remarkable progress in medical image analysis, traditional DME diagnosis still relies on extensive annotated data and subjective ophthalmologist assessments, limiting practical applications. To address this, we present RURANET++, an unsupervised learning-based automated DME diagnostic system. This framework incorporates an optimized U-Net architecture with embedded Spatial and Channel Squeeze & Excitation (SCSE) attention mechanisms to enhance lesion feature extraction. During feature processing, a pre-trained GoogLeNet model extracts deep features from retinal images, followed by PCA-based dimensionality reduction to 50 dimensions for computational efficiency. Notably, we introduce a novel clustering algorithm employing multi-projection heads to explicitly control cluster diversity while dynamically adjusting similarity thresholds, thereby optimizing intra-class consistency and inter-class discrimination. Experimental results demonstrate superior performance across multiple metrics, achieving maximum accuracy (0.8411), precision (0.8593), recall (0.8411), and F1-score (0.8390), with exceptional clustering quality. This work provides an efficient unsupervised solution for DME diagnosis with significant clinical implications.

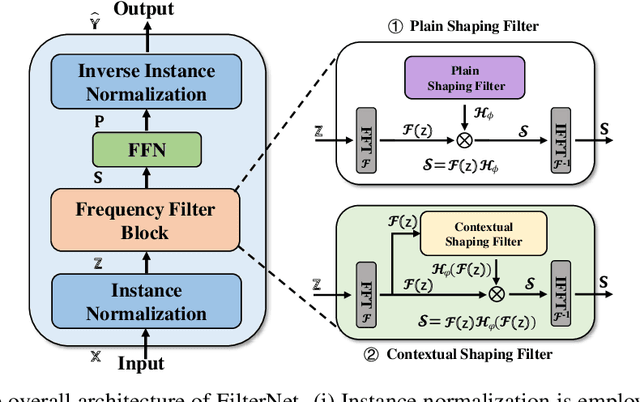

FilterNet: Harnessing Frequency Filters for Time Series Forecasting

Nov 05, 2024

Abstract:While numerous forecasters have been proposed using different network architectures, Transformer-based models achieve state-of-the-art performance in time series forecasting. However, Transformer-based forecasters still suffer from vulnerability to high-frequency signals, low computational efficiency, and a bottleneck in full-spectrum utilization, all of which are essential to address for accurately predicting time series with thousands of points. In this paper, we explore a novel perspective that brings signal processing to deep time series forecasting. Inspired by the filtering process, we introduce a simple yet effective network, namely FilterNet, built upon our proposed learnable frequency filters to extract key informative temporal patterns by selectively passing or attenuating certain components of time series signals. Concretely, we propose two kinds of learnable filters in FilterNet: (i) a plain shaping filter, which adopts a universal frequency kernel for signal filtering and temporal modeling; and (ii) a contextual shaping filter, which utilizes filtered frequencies examined in terms of their compatibility with input signals for dependency learning. Equipped with the two filters, FilterNet can approximately substitute for the linear and attention mappings widely adopted in the time series literature, while enjoying superb abilities in handling high-frequency noise and utilizing the whole frequency spectrum, which is beneficial for forecasting. Finally, we conduct extensive experiments on eight time series forecasting benchmarks, and the experimental results demonstrate superior performance in terms of both effectiveness and efficiency compared with state-of-the-art methods. Code is available at this repository: https://github.com/aikunyi/FilterNet
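
The core operation behind a frequency filter, selectively passing or attenuating components of a signal, can be sketched in a few lines. The example below is not FilterNet's implementation (see the repository above for that): it uses a fixed low-pass kernel where FilterNet learns its kernel, and a naive discrete Fourier transform written with the standard library only. All function names here are illustrative assumptions.

```python
import cmath
import math


def dft(signal):
    """Naive O(n^2) discrete Fourier transform."""
    n = len(signal)
    return [
        sum(signal[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def idft(spectrum):
    """Naive O(n^2) inverse discrete Fourier transform."""
    n = len(spectrum)
    return [
        sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
        for t in range(n)
    ]


def frequency_filter(signal, kernel):
    """Multiply each frequency bin by the matching kernel weight."""
    spectrum = dft(signal)
    filtered = [s * h for s, h in zip(spectrum, kernel)]
    return [value.real for value in idft(filtered)]


def low_pass_kernel(n, cutoff):
    """Fixed kernel (an assumption; a learnable filter would train these weights).

    Bin k and bin n - k hold the same frequency, so both are kept or dropped
    together, which keeps the filtered signal real-valued.
    """
    return [1.0 if min(k, n - k) <= cutoff else 0.0 for k in range(n)]


# A slow sine wave contaminated by a high-frequency component.
n = 32
slow = [math.sin(2 * math.pi * 1 * t / n) for t in range(n)]
fast = [0.5 * math.sin(2 * math.pi * 12 * t / n) for t in range(n)]
noisy = [a + b for a, b in zip(slow, fast)]

recovered = frequency_filter(noisy, low_pass_kernel(n, cutoff=3))
max_error = max(abs(r - s) for r, s in zip(recovered, slow))
```

Because the contaminating component sits entirely in bins the kernel zeroes out, `recovered` matches the slow sine wave up to floating-point error. Replacing the fixed 0/1 weights with trainable parameters is the step that would turn this into a learnable filter.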

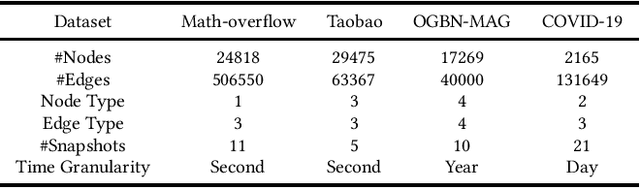

How to Bridge Spatial and Temporal Heterogeneity in Link Prediction? A Contrastive Method

Nov 01, 2024

Abstract:Temporal Heterogeneous Networks play a crucial role in capturing the dynamics and heterogeneity inherent in various real-world complex systems, rendering them a noteworthy research avenue for link prediction. However, existing methods fail to capture the fine-grained differential distribution patterns and temporal dynamic characteristics, which we refer to as spatial heterogeneity and temporal heterogeneity. To overcome such limitations, we propose a novel Contrastive Learning-based Link Prediction model, CLP, which employs a multi-view hierarchical self-supervised architecture to encode spatial and temporal heterogeneity. Specifically, aiming at spatial heterogeneity, we develop a spatial feature modeling layer to capture the fine-grained topological distribution patterns from node- and edge-level representations, respectively. Furthermore, aiming at temporal heterogeneity, we devise a temporal information modeling layer to perceive the evolutionary dependencies of dynamic graph topologies from time-level representations. Finally, we encode the spatial and temporal distribution heterogeneity from a contrastive learning perspective, enabling a comprehensive self-supervised hierarchical relation modeling for the link prediction task. Extensive experiments conducted on four real-world dynamic heterogeneous network datasets verify that our CLP consistently outperforms the state-of-the-art models, demonstrating an average improvement of 10.10% and 13.44% in terms of AUC and AP, respectively.

Multi-SIGATnet: A multimodal schizophrenia MRI classification algorithm using sparse interaction mechanisms and graph attention networks

Aug 25, 2024

Abstract:Schizophrenia (SZ) is a serious psychiatric disorder. Its pathogenesis is not completely clear, making it difficult to treat patients precisely. Because of the complicated non-Euclidean network structure of the human brain, learning critical information from brain networks remains difficult. To effectively capture the topological information of brain neural networks, a novel multimodal graph attention network based on a sparse interaction mechanism (Multi-SIGATnet) was proposed for SZ classification. Firstly, structural and functional information were fused into multimodal data to obtain more comprehensive and abundant features for patients with SZ. Subsequently, a sparse interaction mechanism was proposed to effectively extract salient features and enhance the feature representation capability. By enhancing the strong connections and weakening the weak connections between feature information based on an asymmetric convolutional network, high-order interactive features were captured. Moreover, sparse learning strategies were designed to filter out redundant connections to improve model performance. Finally, local and global features were updated in accordance with the topological features and connection weight constraints of the higher-order brain network, and the features were projected to the classification target space for disorder classification. The effectiveness of the model was verified on the Center for Biomedical Research Excellence (COBRE) and University of California Los Angeles (UCLA) datasets, achieving 81.9% and 75.8% average accuracy, respectively, 4.6% and 5.5% higher than the graph attention network (GAT) method. Experiments showed that the Multi-SIGATnet method exhibited good performance in identifying SZ.

Robust Multivariate Time Series Forecasting against Intra- and Inter-Series Transitional Shift

Jul 18, 2024

Abstract:The non-stationary nature of real-world Multivariate Time Series (MTS) data confronts forecasting models with the formidable challenge of a time-variant distribution, referred to as distribution shift. Existing studies on the distribution shift mostly adhere to adaptive normalization techniques for alleviating temporal mean and covariance shifts or time-variant modeling for capturing temporal shifts. Despite improving model generalization, these normalization-based methods often assume a time-invariant transition between outputs and inputs but disregard specific intra-/inter-series correlations, while time-variant models overlook the intrinsic causes of the distribution shift. This limits the expressiveness and interpretability of models that tackle the distribution shift in MTS forecasting. To mitigate such a dilemma, we present a unified Probabilistic Graphical Model that jointly captures intra-/inter-series correlations and models the time-variant transitional distribution, and instantiate a neural framework called JointPGM for non-stationary MTS forecasting. Specifically, JointPGM first employs multiple Fourier basis functions to learn dynamic time factors and designs two distinct learners: intra-series and inter-series learners. The intra-series learner effectively captures temporal dynamics by utilizing temporal gates, while the inter-series learner explicitly models spatial dynamics through multi-hop propagation, incorporating Gumbel-softmax sampling. These two types of series dynamics are subsequently fused into a latent variable, which is inversely employed to infer time factors, generate the final prediction, and perform reconstruction. We validate the effectiveness and efficiency of JointPGM through extensive experiments on six highly non-stationary MTS datasets, achieving state-of-the-art forecasting performance.
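
Gumbel-softmax sampling, which the abstract names as a component of the inter-series learner, is a general technique for drawing approximately discrete samples in a differentiable way. The sketch below shows the standard formulation only: Gumbel noise is added to the logits and a temperature-scaled softmax is applied. How JointPGM wires this into multi-hop propagation is not stated in the abstract, so nothing here should be read as that model's code; the function name and the sample logits are assumptions.

```python
import math
import random


def gumbel_softmax(logits, tau, rng):
    """Draw one relaxed categorical sample.

    logits: unnormalized log-probabilities
    tau:    temperature; smaller values give samples closer to one-hot
    rng:    a random.Random instance, passed in for reproducibility
    """
    perturbed = []
    for logit in logits:
        # Clamp away from 0 so the inner logarithm is always defined.
        u = max(rng.random(), 1e-12)
        gumbel_noise = -math.log(-math.log(u))
        perturbed.append((logit + gumbel_noise) / tau)

    # Numerically stable softmax over the perturbed, scaled logits.
    peak = max(perturbed)
    exps = [math.exp(p - peak) for p in perturbed]
    total = sum(exps)
    return [e / total for e in exps]


rng = random.Random(0)
logits = [1.0, 0.5, -1.0]  # illustrative values, not taken from the paper
sample = gumbel_softmax(logits, tau=0.5, rng=rng)
```

Each call returns a probability vector that sums to 1. The index of the largest entry is distributed according to the softmax of the logits, which is what lets the relaxed sample stand in for a discrete choice during training.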

Towards Bridging the Cross-modal Semantic Gap for Multi-modal Recommendation

Jul 07, 2024

Abstract:Multi-modal recommendation greatly enhances the performance of recommender systems by modeling the auxiliary information from multi-modality contents. Most existing multi-modal recommendation models primarily exploit multimedia information propagation processes to enrich item representations and directly utilize modal-specific embedding vectors independently obtained from upstream pre-trained models. However, this might be inappropriate since the abundant task-specific semantics remain unexplored, and the cross-modality semantic gap hinders the recommendation performance. Inspired by the recent progress of the cross-modal alignment model CLIP, in this paper, we propose a novel CLIP Enhanced Recommender (CLIPER) framework to bridge the semantic gap between modalities and extract fine-grained multi-view semantic information. Specifically, we introduce a multi-view modality-alignment approach for representation extraction and measure the semantic similarity between modalities. Furthermore, we integrate the multi-view multimedia representations into downstream recommendation models. Extensive experiments conducted on three public datasets demonstrate the consistent superiority of our model over state-of-the-art multi-modal recommendation models.
