Shufeng Hao

FilterNet: Harnessing Frequency Filters for Time Series Forecasting

Nov 05, 2024

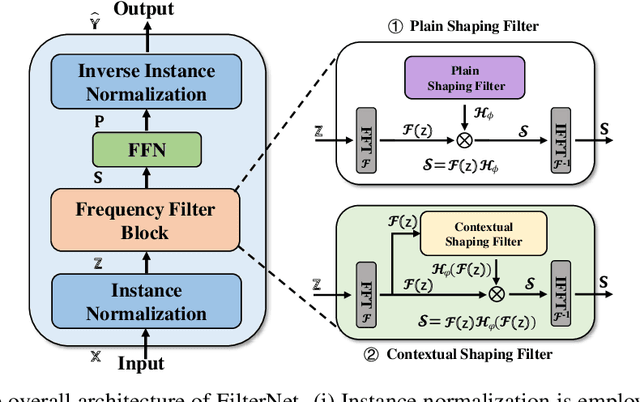

Abstract: While numerous forecasters have been proposed using different network architectures, Transformer-based models have achieved state-of-the-art performance in time series forecasting. However, Transformer-based forecasters still suffer from vulnerability to high-frequency signals, computational inefficiency, and a bottleneck in full-spectrum utilization, issues that are central to accurately predicting time series with thousands of points. In this paper, we explore a novel perspective that brings signal processing to deep time series forecasting. Inspired by the filtering process, we introduce a simple yet effective network, FilterNet, built upon our proposed learnable frequency filters, which extract key informative temporal patterns by selectively passing or attenuating certain components of time series signals. Concretely, we propose two kinds of learnable filters in FilterNet: (i) a plain shaping filter, which adopts a universal frequency kernel for signal filtering and temporal modeling; and (ii) a contextual shaping filter, which uses filtered frequencies examined in terms of their compatibility with the input signals for dependency learning. Equipped with the two filters, FilterNet can approximately substitute for the linear and attention mappings widely adopted in the time series literature, while handling high-frequency noise well and using the whole frequency spectrum, which is beneficial for forecasting. Finally, we conduct extensive experiments on eight time series forecasting benchmarks; the results demonstrate superior performance in both effectiveness and efficiency compared with state-of-the-art methods. Code is available at this repository: https://github.com/aikunyi/FilterNet
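
The idea of selectively passing or attenuating frequency components can be illustrated with a minimal sketch. This is not the FilterNet implementation (see the repository above for that); it assumes a naive DFT written with the Python standard library, and the helper names (`dft`, `idft`, `apply_frequency_filter`) and the hand-set low-pass `kernel` are hypothetical. In a learnable filter the kernel weights would be trained rather than fixed.

```python
import cmath
import math


def dft(x):
    """Naive O(n^2) discrete Fourier transform of a sequence."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def idft(spectrum):
    """Inverse of dft; returns a complex sequence."""
    n = len(spectrum)
    return [
        sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
        for t in range(n)
    ]


def apply_frequency_filter(x, kernel):
    """Scale each frequency bin of x by the matching kernel weight."""
    spectrum = dft(x)
    filtered = [s * h for s, h in zip(spectrum, kernel)]
    # The input is real and the kernel is symmetric, so the imaginary
    # part of the result is only floating-point residue.
    return [v.real for v in idft(filtered)]


# Toy signal: a slow sinusoid (1 cycle) plus a fast one (6 cycles) over 16 samples.
n = 16
slow = [math.sin(2 * math.pi * t / n) for t in range(n)]
fast = [0.5 * math.sin(2 * math.pi * 6 * t / n) for t in range(n)]
signal = [s + f for s, f in zip(slow, fast)]

# Hand-set low-pass kernel standing in for learned weights (an assumption):
# keep bins 0..2 and their conjugate mirror bins n-2..n-1, zero out the rest.
kernel = [1.0 if (k <= 2 or k >= n - 2) else 0.0 for k in range(n)]

smoothed = apply_frequency_filter(signal, kernel)
```

With this kernel the 6-cycle component is removed and `smoothed` matches `slow` up to floating-point error; an all-ones kernel would return the input unchanged.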

CoSD: Collaborative Stance Detection with Contrastive Heterogeneous Topic Graph Learning

Apr 26, 2024

Abstract: Stance detection seeks to identify whether individuals are in favor of or against a given target or controversial topic. Current advanced neural models for stance detection typically employ fully parametric softmax classifiers. However, these methods suffer from several limitations, including lack of explainability, insensitivity to the latent data structure, and unimodality, which greatly restrict their performance and applications. To address these challenges, we present a novel collaborative stance detection framework called CoSD, which leverages contrastive heterogeneous topic graph learning to learn topic-aware semantics and collaborative signals among texts, topics, and stance labels for enhancing stance detection. During training, we construct a heterogeneous graph that structurally organizes texts and stances through implicit topics obtained with latent Dirichlet allocation. We then perform contrastive graph learning to learn heterogeneous node representations, aggregating informative multi-hop collaborative signals via an elaborate Collaboration Propagation Aggregation (CPA) module. During inference, we introduce a hybrid similarity scoring module that comprehensively incorporates topic-aware semantics and collaborative signals for stance detection. Extensive experiments on two benchmark datasets demonstrate the state-of-the-art detection performance of CoSD, verifying the effectiveness and explainability of our collaborative framework.
