Huipan Guan

DCFG: Diverse Cross-Channel Fine-Grained Feature Learning and Progressive Fusion Siamese Tracker for Thermal Infrared Target Tracking

Apr 19, 2025

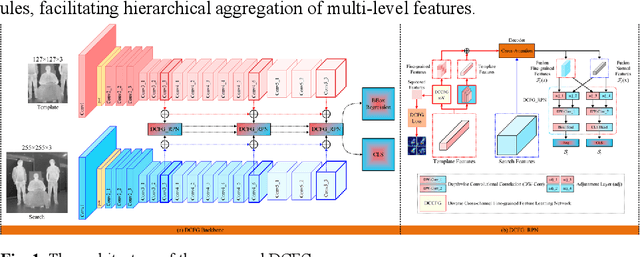

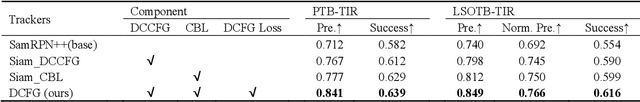

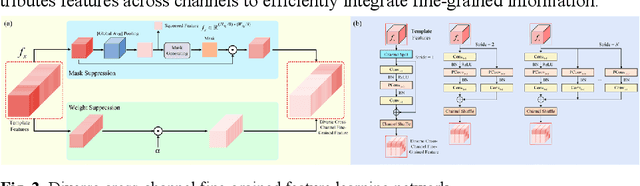

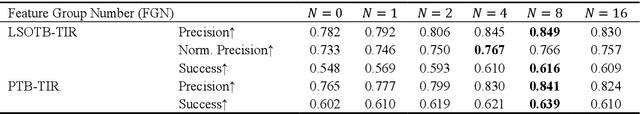

Abstract: To address the challenge of capturing highly discriminative features in thermal infrared (TIR) tracking, we propose a novel Siamese tracker based on cross-channel fine-grained feature learning and progressive fusion. First, we introduce a cross-channel fine-grained feature learning network that employs masks and suppression coefficients to suppress dominant target features, enabling the tracker to capture more detailed and subtle information. The network employs a channel rearrangement mechanism to enhance efficient information flow, coupled with channel equalization to reduce parameter count. Additionally, we incorporate layer-by-layer combination units for effective feature extraction and fusion, thereby minimizing parameter redundancy and computational complexity. The network further employs feature redirection and channel shuffling strategies to better integrate fine-grained details. Second, we propose a specialized cross-channel fine-grained loss function designed to guide feature groups toward distinct discriminative regions of the target, thus improving overall target representation. This loss function includes an inter-channel loss term that promotes orthogonality between channels, maximizing feature diversity and facilitating finer detail capture. Extensive experiments demonstrate that our proposed tracker achieves the highest accuracy, scoring 0.81 on the VOT-TIR 2015 and 0.78 on the VOT-TIR 2017 benchmark, while also outperforming other methods across all evaluation metrics on the LSOTB-TIR and PTB-TIR benchmarks.
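The abstract does not give the form of the inter-channel loss term, so the following is only an illustrative sketch of one common way to encourage orthogonality between channels: penalizing the squared cosine similarity of every pair of flattened channel feature maps. The function name `channel_orthogonality_penalty` and the choice of squared cosine similarity are assumptions made for this example, not the authors' formulation.

```python
import math


def channel_orthogonality_penalty(channels):
    """Mean squared cosine similarity over all distinct channel pairs.

    `channels` is a list of flattened feature maps (equal-length lists of
    floats). The value is 0.0 when every pair is orthogonal and approaches
    1.0 when all channels are collinear. Hypothetical helper, not taken
    from the paper.
    """
    def norm(v):
        return math.sqrt(sum(x * x for x in v))

    total, pairs = 0.0, 0
    for i in range(len(channels)):
        for j in range(i + 1, len(channels)):
            a, b = channels[i], channels[j]
            denom = norm(a) * norm(b)
            # An all-zero channel carries no direction; treat it as orthogonal.
            cos = 0.0 if denom == 0.0 else sum(x * y for x, y in zip(a, b)) / denom
            total += cos * cos
            pairs += 1
    return total / pairs if pairs else 0.0
```

Minimizing a term like this pushes channel responses toward different directions in feature space, which is one plausible reading of "maximizing feature diversity" in the abstract.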

FGSGT: Saliency-Guided Siamese Network Tracker Based on Key Fine-Grained Feature Information for Thermal Infrared Target Tracking

Apr 19, 2025

Abstract: Thermal infrared (TIR) images typically lack detailed features and have low contrast, making it challenging for conventional feature extraction models to capture discriminative target characteristics. As a result, trackers are often affected by interference from visually similar objects and are susceptible to tracking drift. To address these challenges, we propose a novel saliency-guided Siamese network tracker based on key fine-grained feature information. First, we introduce a fine-grained feature parallel learning convolutional block with a dual-stream architecture and convolutional kernels of varying sizes. This design captures essential global features from shallow layers, enhances feature diversity, and minimizes the loss of fine-grained information typically encountered in residual connections. In addition, we propose a multi-layer fine-grained feature fusion module that uses bilinear matrix multiplication to effectively integrate features across both deep and shallow layers. Next, we introduce a Siamese residual refinement block that corrects saliency map prediction errors using residual learning. Combined with deep supervision, this mechanism progressively refines predictions, applying supervision at each recursive step to ensure consistent improvements in accuracy. Finally, we present a saliency loss function to constrain the saliency predictions, directing the network to focus on highly discriminative fine-grained features. Extensive experimental results demonstrate that the proposed tracker achieves the highest precision and success rates on the PTB-TIR and LSOTB-TIR benchmarks. It also achieves a top accuracy of 0.78 on the VOT-TIR 2015 benchmark and 0.75 on the VOT-TIR 2017 benchmark.
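The abstract mentions fusing deep and shallow features with bilinear matrix multiplication but does not specify the exact operation. As a rough illustration only, the sketch below computes a standard bilinear interaction: each deep channel is multiplied with each shallow channel across spatial positions and averaged. The name `bilinear_fuse`, the averaging over positions, and the flattened list-of-lists layout are assumptions for this example and may differ from the authors' module.

```python
def bilinear_fuse(deep, shallow):
    """Bilinear interaction of two feature maps sharing spatial positions.

    `deep` is a C1 x N matrix and `shallow` a C2 x N matrix (lists of rows),
    where N is the number of flattened spatial positions. Returns the C1 x C2
    matrix deep @ shallow^T / N. Hypothetical helper, not taken from the paper.
    """
    n = len(deep[0])
    if any(len(row) != n for row in deep + shallow):
        raise ValueError("all channels must cover the same spatial positions")
    return [
        [sum(d[k] * s[k] for k in range(n)) / n for s in shallow]
        for d in deep
    ]


# Two deep channels and three shallow channels over two spatial positions.
fused = bilinear_fuse([[1.0, 2.0], [3.0, 4.0]],
                      [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
```

Each entry of the result measures how strongly one deep channel co-activates with one shallow channel, which is why bilinear forms are often used to combine semantic and fine-grained cues.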
