Haohang Xu

Accelerating Diffusion Transformers with Dual Feature Caching

Dec 25, 2024

Abstract:Diffusion Transformers (DiT) have become the dominant methods in image and video generation yet still suffer from substantial computational costs. As an effective approach for DiT acceleration, feature caching methods are designed to cache the features of DiT in previous timesteps and reuse them in the next timesteps, allowing us to skip the computation in the next timesteps. However, on the one hand, aggressively reusing all the features cached in previous timesteps leads to a severe drop in generation quality. On the other hand, conservatively caching only the features in the redundant layers or tokens but still computing the important ones successfully preserves the generation quality but results in reductions in acceleration ratios. Observing such a tradeoff between generation quality and acceleration performance, this paper begins by quantitatively studying the accumulated error from cached features. Surprisingly, we find that aggressive caching does not introduce significantly more caching errors in the caching step, and the conservative feature caching can fix the error introduced by aggressive caching. Thereby, we propose a dual caching strategy that adopts aggressive and conservative caching iteratively, leading to significant acceleration and high generation quality at the same time. Besides, we further introduce a V-caching strategy for token-wise conservative caching, which is compatible with flash attention and requires no training or calibration data. Our code has been released on GitHub: https://github.com/Shenyi-Z/DuCa
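The alternation between aggressive and conservative caching described in this abstract can be pictured as a per-timestep schedule. The sketch below is only an illustration under stated assumptions: the function name `dual_cache_schedule`, the `cycle_length` parameter, and the idea that each cycle starts with a fully computed step that refreshes the cache are all hypothetical and are not taken from the paper or its released code.

```python
def dual_cache_schedule(num_steps, cycle_length=4):
    """Label each sampling step as 'full', 'aggressive', or 'conservative'.

    Assumptions (not from the paper): every cycle begins with a fully
    computed step that refreshes the cache, and the remaining steps of
    the cycle alternate between aggressive reuse (all cached features)
    and conservative reuse (only some features are recomputed).
    """
    if cycle_length < 1:
        raise ValueError("cycle_length must be at least 1")
    schedule = []
    for step in range(num_steps):
        offset = step % cycle_length
        if offset == 0:
            schedule.append("full")          # recompute everything, refresh cache
        elif offset % 2 == 1:
            schedule.append("aggressive")    # reuse all cached features
        else:
            schedule.append("conservative")  # recompute a subset, reuse the rest
    return schedule


if __name__ == "__main__":
    for step, mode in enumerate(dual_cache_schedule(8)):
        print(step, mode)
```

In this toy schedule every aggressive step is followed by either a conservative step or a full step, which mirrors the abstract's observation that conservative caching can correct errors introduced by aggressive caching.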

Betrayed by Attention: A Simple yet Effective Approach for Self-supervised Video Object Segmentation

Nov 29, 2023

Abstract:In this paper, we propose a simple yet effective approach for self-supervised video object segmentation (VOS). Our key insight is that the inherent structural dependencies present in DINO-pretrained Transformers can be leveraged to establish robust spatio-temporal correspondences in videos. Furthermore, simple clustering on this correspondence cue is sufficient to yield competitive segmentation results. Previous self-supervised VOS techniques majorly resort to auxiliary modalities or utilize iterative slot attention to assist in object discovery, which restricts their general applicability and imposes higher computational requirements. To deal with these challenges, we develop a simplified architecture that capitalizes on the emerging objectness from DINO-pretrained Transformers, bypassing the need for additional modalities or slot attention. Specifically, we first introduce a single spatio-temporal Transformer block to process the frame-wise DINO features and establish spatio-temporal dependencies in the form of self-attention. Subsequently, utilizing these attention maps, we implement hierarchical clustering to generate object segmentation masks. To train the spatio-temporal block in a fully self-supervised manner, we employ semantic and dynamic motion consistency coupled with entropy normalization. Our method demonstrates state-of-the-art performance across multiple unsupervised VOS benchmarks and particularly excels in complex real-world multi-object video segmentation tasks such as DAVIS-17-Unsupervised and YouTube-VIS-19. The code and model checkpoints will be released at https://github.com/shvdiwnkozbw/SSL-UVOS.

Masked Autoencoders are Robust Data Augmentors

Jun 10, 2022

Abstract:Deep neural networks are capable of learning powerful representations to tackle complex vision tasks but expose undesirable properties like the over-fitting issue. To this end, regularization techniques like image augmentation are necessary for deep neural networks to generalize well. Nevertheless, most prevalent image augmentation recipes confine themselves to off-the-shelf linear transformations like scale, flip, and colorjitter. Due to their hand-crafted property, these augmentations are insufficient to generate truly hard augmented examples. In this paper, we propose a novel perspective of augmentation to regularize the training process. Inspired by the recent success of applying masked image modeling to self-supervised learning, we adopt the self-supervised masked autoencoder to generate the distorted view of the input images. We show that utilizing such model-based nonlinear transformation as data augmentation can improve high-level recognition tasks. We term the proposed method as Mask-Reconstruct Augmentation (MRA). The extensive experiments on various image classification benchmarks verify the effectiveness of the proposed augmentation. Specifically, MRA consistently enhances the performance on supervised, semi-supervised as well as few-shot classification. The code will be available at https://github.com/haohang96/MRA.

Semantic-Aware Generation for Self-Supervised Visual Representation Learning

Nov 25, 2021

Abstract:In this paper, we propose a self-supervised visual representation learning approach which involves both generative and discriminative proxies, where we focus on the former part by requiring the target network to recover the original image based on the mid-level features. Different from prior work that mostly focuses on pixel-level similarity between the original and generated images, we advocate for Semantic-aware Generation (SaGe) to facilitate richer semantics rather than details to be preserved in the generated image. The core idea of implementing SaGe is to use an evaluator, a deep network that is pre-trained without labels, for extracting semantic-aware features. SaGe complements the target network with view-specific features and thus alleviates the semantic degradation brought by intensive data augmentations. We execute SaGe on ImageNet-1K and evaluate the pre-trained models on five downstream tasks including nearest neighbor test, linear classification, and fine-scaled image recognition, demonstrating its ability to learn stronger visual representations.

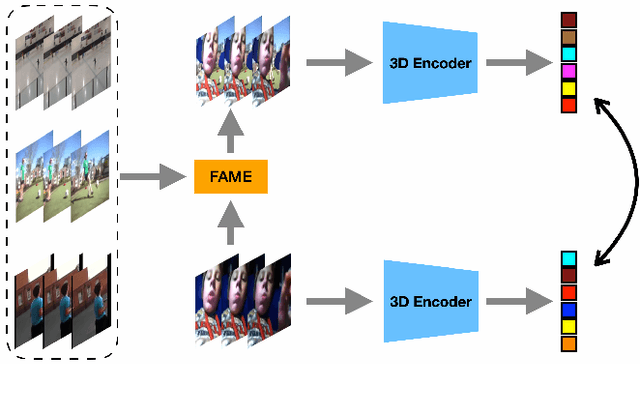

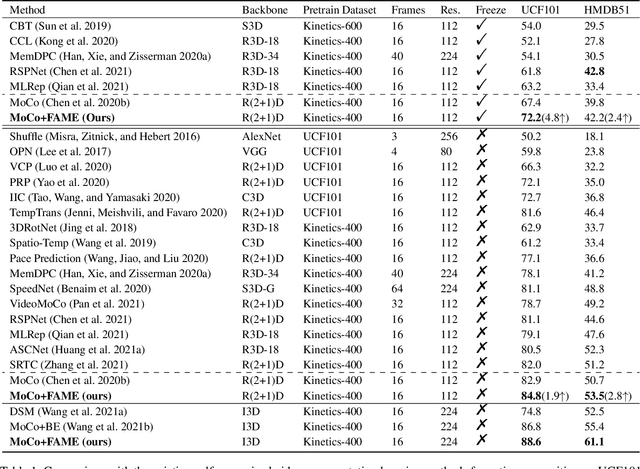

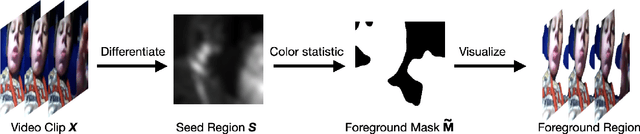

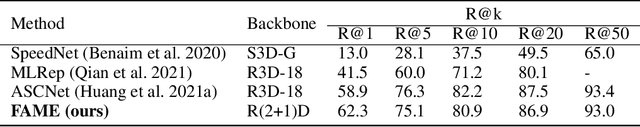

Motion-aware Self-supervised Video Representation Learning via Foreground-background Merging

Sep 30, 2021

Abstract:In light of the success of contrastive learning in the image domain, current self-supervised video representation learning methods usually employ contrastive loss to facilitate video representation learning. When naively pulling two augmented views of a video closer, the model however tends to learn the common static background as a shortcut but fails to capture the motion information, a phenomenon dubbed as background bias. This bias makes the model suffer from weak generalization ability, leading to worse performance on downstream tasks such as action recognition. To alleviate such bias, we propose Foreground-background Merging (FAME) to deliberately compose the foreground region of the selected video onto the background of others. Specifically, without any off-the-shelf detector, we extract the foreground and background regions via the frame difference and color statistics, and shuffle the background regions among the videos. By leveraging the semantic consistency between the original clips and the fused ones, the model focuses more on the foreground motion pattern and is thus more robust to the background context. Extensive experiments demonstrate that FAME can significantly boost the performance in different downstream tasks with various backbones. When integrated with MoCo, FAME reaches 84.8% and 53.5% accuracy on UCF101 and HMDB51, respectively, achieving the state-of-the-art performance.

Bag of Instances Aggregation Boosts Self-supervised Learning

Jul 04, 2021

Abstract:Self-supervised learning has seen remarkable progress recently, especially contrastive learning based methods, which regard each image as well as its augmentations as an individual class and try to distinguish them from all other images. However, due to the large quantity of exemplars, this kind of pretext task intrinsically suffers from slow convergence and is hard to optimize. This is especially true for small scale models, whose performance we find drops dramatically compared with their supervised counterparts. In this paper, we propose a simple but effective distillation strategy for unsupervised learning. The highlight is that the relationship among similar samples counts and can be seamlessly transferred to the student to boost the performance. Our method, termed BINGO (short for Bag of InstaNces aGgregatiOn), aims to transfer the relationship learned by the teacher to the student. Here, a bag of instances denotes a set of similar samples constructed by the teacher and grouped within a bag, and the goal of distillation is to aggregate compact representations over the student with respect to instances in a bag. Notably, BINGO achieves new state-of-the-art performance on small scale models, i.e., 65.5% and 68.9% top-1 accuracies with linear evaluation on ImageNet, using ResNet-18 and ResNet-34 as backbone, respectively, surpassing baselines (52.5% and 57.4% top-1 accuracies) by a significant margin. The code will be available at https://github.com/haohang96/bingo.

Multi-dataset Pretraining: A Unified Model for Semantic Segmentation

Jun 08, 2021

Abstract:Collecting annotated data for semantic segmentation is time-consuming and hard to scale up. In this paper, we for the first time propose a unified framework, termed as Multi-Dataset Pretraining, to take full advantage of the fragmented annotations of different datasets. The highlight is that the annotations from different domains can be efficiently reused and consistently boost performance for each specific domain. This is achieved by first pretraining the network via the proposed pixel-to-prototype contrastive loss over multiple datasets regardless of their taxonomy labels, and followed by fine-tuning the pretrained model over specific dataset as usual. In order to better model the relationship among images and classes from different datasets, we extend the pixel level embeddings via cross dataset mixing and propose a pixel-to-class sparse coding strategy that explicitly models the pixel-class similarity over the manifold embedding space. In this way, we are able to increase intra-class compactness and inter-class separability, as well as considering inter-class similarity across different datasets for better transferability. Experiments conducted on several benchmarks demonstrate its superior performance. Notably, MDP consistently outperforms the pretrained models over ImageNet by a considerable margin, while only using less than 10% samples for pretraining.

Semi-supervised Contrastive Learning with Similarity Co-calibration

May 16, 2021

Abstract:Semi-supervised learning acts as an effective way to leverage massive unlabeled data. In this paper, we propose a novel training strategy, termed as Semi-supervised Contrastive Learning (SsCL), which combines the well-known contrastive loss in self-supervised learning with the cross entropy loss in semi-supervised learning, and jointly optimizes the two objectives in an end-to-end way. The highlight is that different from self-training based semi-supervised learning that conducts prediction and retraining over the same model weights, SsCL interchanges the predictions over the unlabeled data between the two branches, and thus formulates a co-calibration procedure, which we find is beneficial for better prediction and avoid being trapped in local minimum. Towards this goal, the contrastive loss branch models pairwise similarities among samples, using the nearest neighborhood generated from the cross entropy branch, and in turn calibrates the prediction distribution of the cross entropy branch with the contrastive similarity. We show that SsCL produces more discriminative representation and is beneficial to few shot learning. Notably, on ImageNet with ResNet50 as the backbone, SsCL achieves 60.2% and 72.1% top-1 accuracy with 1% and 10% labeled samples, respectively, which significantly outperforms the baseline, and is better than previous semi-supervised and self-supervised methods.

Hierarchical Semantic Aggregation for Contrastive Representation Learning

Dec 04, 2020

Abstract:Self-supervised learning based on instance discrimination has shown remarkable progress. In particular, contrastive learning, which regards each image as well as its augmentations as a separate class, and pushes all other images away, has been proved effective for pretraining. However, contrasting two images that are de facto similar in semantic space is hard for optimization and not applicable for general representations. In this paper, we tackle the representation inefficiency of contrastive learning and propose a hierarchical training strategy to explicitly model the invariance to semantic similar images in a bottom-up way. This is achieved by extending the contrastive loss to allow for multiple positives per anchor, and explicitly pulling semantically similar images/patches together at the earlier layers as well as the last embedding space. In this way, we are able to learn feature representation that is more discriminative throughout different layers, which we find is beneficial for fast convergence. The hierarchical semantic aggregation strategy produces more discriminative representation on several unsupervised benchmarks. Notably, on ImageNet with ResNet-50 as backbone, we reach 76.4% top-1 accuracy with linear evaluation, and 75.1% top-1 accuracy with only 10% labels.
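To make "multiple positives per anchor" concrete, here is a minimal sketch of one common way to extend an InfoNCE-style contrastive loss: average the log-probability over every candidate marked as positive. The abstract does not give the paper's exact formulation, so the function name, the temperature default, and the averaging choice are assumptions for illustration only.

```python
import math


def multi_positive_contrastive_loss(anchor, candidates, positive_mask, temperature=0.2):
    """InfoNCE-style loss for one anchor, averaged over all of its positives.

    anchor:        list of floats (embedding of the anchor)
    candidates:    list of embeddings (positives and negatives together)
    positive_mask: list of bools, True where the candidate is a positive
    Assumption: cosine similarity with a fixed temperature; this is an
    illustrative form, not necessarily the loss used in the paper.
    """
    def normalize(vector):
        norm = math.sqrt(sum(x * x for x in vector))
        return [x / norm for x in vector]

    a = normalize(anchor)
    logits = [
        sum(x * y for x, y in zip(a, normalize(c))) / temperature
        for c in candidates
    ]

    # Numerically stable log-sum-exp over all candidates (the softmax denominator).
    max_logit = max(logits)
    log_denominator = max_logit + math.log(sum(math.exp(l - max_logit) for l in logits))

    positive_logits = [l for l, is_pos in zip(logits, positive_mask) if is_pos]
    if not positive_logits:
        raise ValueError("each anchor needs at least one positive")

    # Average negative log-likelihood across the positives.
    return -sum(l - log_denominator for l in positive_logits) / len(positive_logits)


if __name__ == "__main__":
    anchor = [1.0, 0.0]
    candidates = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
    print(multi_positive_contrastive_loss(anchor, candidates, [True, True, False]))
```

With a single positive this reduces to the standard one-positive contrastive loss; marking semantically similar candidates as extra positives pulls them toward the anchor instead of pushing them away.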

K-Shot Contrastive Learning of Visual Features with Multiple Instance Augmentations

Jul 27, 2020

Abstract:In this paper, we propose the $K$-Shot Contrastive Learning (KSCL) of visual features by applying multiple augmentations to investigate the sample variations within individual instances. It aims to combine the advantages of inter-instance discrimination by learning discriminative features to distinguish between different instances, as well as intra-instance variations by matching queries against the variants of augmented samples over instances. Particularly, for each instance, it constructs an instance subspace to model the configuration of how the significant factors of variations in $K$-shot augmentations can be combined to form the variants of augmentations. Given a query, the most relevant variant of instances is then retrieved by projecting the query onto their subspaces to predict the positive instance class. This generalizes the existing contrastive learning that can be viewed as a special one-shot case. An eigenvalue decomposition is performed to configure instance subspaces, and the embedding network can be trained end-to-end through the differentiable subspace configuration. Experiment results demonstrate the proposed $K$-shot contrastive learning achieves superior performances to the state-of-the-art unsupervised methods.
