Han Zhou

Voxtral Realtime

Feb 11, 2026

Abstract: We introduce Voxtral Realtime, a natively streaming automatic speech recognition model that matches offline transcription quality at sub-second latency. Unlike approaches that adapt offline models through chunking or sliding windows, Voxtral Realtime is trained end-to-end for streaming, with explicit alignment between audio and text streams. Our architecture builds on the Delayed Streams Modeling framework, introducing a new causal audio encoder and Ada RMS-Norm for improved delay conditioning. We scale pretraining to a large-scale dataset spanning 13 languages. At a delay of 480ms, Voxtral Realtime achieves performance on par with Whisper, the most widely deployed offline transcription system. We release the model weights under the Apache 2.0 license.

HLGFA: High-Low Resolution Guided Feature Alignment for Unsupervised Anomaly Detection

Feb 10, 2026

Abstract: Unsupervised industrial anomaly detection (UAD) is essential for modern manufacturing inspection, where defect samples are scarce and reliable detection is required. In this paper, we propose HLGFA, a high-low resolution guided feature alignment framework that learns normality by modeling cross-resolution feature consistency between high-resolution and low-resolution representations of normal samples, instead of relying on pixel-level reconstruction. Dual-resolution inputs are processed by a shared frozen backbone to extract multi-level features, and high-resolution representations are decomposed into structure and detail priors to guide the refinement of low-resolution features through conditional modulation and gated residual correction. During inference, anomalies are naturally identified as regions where cross-resolution alignment breaks down. In addition, a noise-aware data augmentation strategy is introduced to suppress nuisance-induced responses commonly observed in industrial environments. Extensive experiments on standard benchmarks demonstrate the effectiveness of HLGFA, achieving 97.9% pixel-level AUROC and 97.5% image-level AUROC on the MVTec AD dataset, outperforming representative reconstruction-based and feature-based methods.
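
The inference rule in this abstract (anomalies show up where cross-resolution alignment breaks down) can be illustrated with a minimal sketch. The abstract does not say how misalignment is scored, so the sketch assumes one minus cosine similarity between the two feature vectors at each location, a common choice in feature-based anomaly detection. The names `cosine_distance` and `anomaly_map` and the toy feature grids are hypothetical and are not taken from the paper.

```python
import math

def cosine_distance(u, v, eps=1e-8):
    """Return 1 - cosine similarity of two channel vectors (assumed score)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm + eps)

def anomaly_map(feat_hi, feat_lo):
    """Score every spatial location of two H x W grids of channel vectors.

    A high score means the high- and low-resolution features disagree there.
    """
    return [
        [cosine_distance(u, v) for u, v in zip(row_hi, row_lo)]
        for row_hi, row_lo in zip(feat_hi, feat_lo)
    ]

# Toy 2 x 2 feature grids with 2 channels each.
feat_hi = [[[1.0, 0.0], [0.0, 1.0]],
           [[1.0, 1.0], [2.0, 1.0]]]
# Same directions everywhere except the bottom-right cell, which is flipped.
feat_lo = [[[2.0, 0.0], [0.0, 3.0]],
           [[1.0, 1.0], [-2.0, -1.0]]]

scores = anomaly_map(feat_hi, feat_lo)
```

In this toy case only the bottom-right location receives a large score, because it is the only place where the two feature vectors point in different directions. Cosine distance ignores vector magnitude, so the rescaled but aligned cells score close to zero.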

ERNIE 5.0 Technical Report

Feb 04, 2026

Abstract: In this report, we introduce ERNIE 5.0, a natively autoregressive foundation model designed for unified multimodal understanding and generation across text, image, video, and audio. All modalities are trained from scratch under a unified next-group-of-tokens prediction objective, based on an ultra-sparse mixture-of-experts (MoE) architecture with modality-agnostic expert routing. To address practical challenges in large-scale deployment under diverse resource constraints, ERNIE 5.0 adopts a novel elastic training paradigm. Within a single pre-training run, the model learns a family of sub-models with varying depths, expert capacities, and routing sparsity, enabling flexible trade-offs among performance, model size, and inference latency in memory- or time-constrained scenarios. Moreover, we systematically address the challenges of scaling reinforcement learning to unified foundation models, thereby guaranteeing efficient and stable post-training under ultra-sparse MoE architectures and diverse multimodal settings. Extensive experiments demonstrate that ERNIE 5.0 achieves strong and balanced performance across multiple modalities. To the best of our knowledge, among publicly disclosed models, ERNIE 5.0 represents the first production-scale realization of a trillion-parameter unified autoregressive model that supports both multimodal understanding and generation. To facilitate further research, we present detailed visualizations of modality-agnostic expert routing in the unified model, alongside comprehensive empirical analysis of elastic training, aiming to offer profound insights to the community.
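
To make the phrase "modality-agnostic expert routing" concrete, here is a minimal sketch of generic top-k MoE gating. It is not ERNIE 5.0's router: the abstract gives no routing details, so the function `route_top_k`, the example logits, and the choice of k are all hypothetical. The only point illustrated is that the router sees per-token scores and takes no modality label as input.

```python
import math

def route_top_k(router_logits, k):
    """Pick the k highest-scoring experts and softmax-normalise their gates.

    The input is just one token's router scores; nothing here depends on
    whether the token came from text, image, video, or audio.
    """
    top = sorted(
        range(len(router_logits)),
        key=lambda i: router_logits[i],
        reverse=True,
    )[:k]
    # Subtract the max before exponentiating for numerical stability.
    peak = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

# Hypothetical router scores for one token over 8 experts.
logits = [0.1, 2.0, -1.0, 2.0, 0.5, -0.3, 0.0, 1.0]
chosen = route_top_k(logits, k=2)
```

With sparse routing of this kind, only the selected experts run for a given token, which is why total parameter count can grow much faster than per-token compute.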

Thinking in Frames: How Visual Context and Test-Time Scaling Empower Video Reasoning

Jan 28, 2026

Abstract: Vision-Language Models have excelled at textual reasoning, but they often struggle with fine-grained spatial understanding and continuous action planning, failing to simulate the dynamics required for complex visual reasoning. In this work, we formulate visual reasoning by means of video generation models, positing that generated frames can act as intermediate reasoning steps between initial states and solutions. We evaluate their capacity in two distinct regimes: Maze Navigation for sequential discrete planning with low visual change and Tangram Puzzle for continuous manipulation with high visual change. Our experiments reveal three critical insights: (1) Robust Zero-Shot Generalization: In both tasks, the model demonstrates strong performance on unseen data distributions without specific finetuning. (2) Visual Context: The model effectively uses visual context as explicit control, such as agent icons and tangram shapes, enabling it to maintain high visual consistency and adapt its planning capability robustly to unseen patterns. (3) Visual Test-Time Scaling: We observe a test-time scaling law in sequential planning; increasing the generated video length (visual inference budget) empowers better zero-shot generalization to spatially and temporally complex paths. These findings suggest that video generation is not merely a media tool, but a scalable, generalizable paradigm for visual reasoning.

Learning Longitudinal Health Representations from EHR and Wearable Data

Jan 18, 2026

Abstract: Foundation models trained on electronic health records show strong performance on many clinical prediction tasks but are limited by sparse and irregular documentation. Wearable devices provide dense, continuous physiological signals but lack semantic grounding. Existing methods usually model these data sources separately or combine them through late fusion. We propose a multimodal foundation model that jointly represents electronic health records and wearable data as a continuous-time latent process. The model uses modality-specific encoders and a shared temporal backbone pretrained with self-supervised and cross-modal objectives. This design produces representations that are temporally coherent and clinically grounded. Across forecasting physiological and risk modeling tasks, the model outperforms strong electronic health record-only and wearable-only baselines, especially at long horizons and under missing data. These results show that joint electronic health record and wearable pretraining yields more faithful representations of longitudinal health.

STEP3-VL-10B Technical Report

Jan 15, 2026

Abstract: We present STEP3-VL-10B, a lightweight open-source foundation model designed to redefine the trade-off between compact efficiency and frontier-level multimodal intelligence. STEP3-VL-10B is realized through two strategic shifts: first, a unified, fully unfrozen pre-training strategy on 1.2T multimodal tokens that integrates a language-aligned Perception Encoder with a Qwen3-8B decoder to establish intrinsic vision-language synergy; and second, a scaled post-training pipeline featuring over 1k iterations of reinforcement learning. Crucially, we implement Parallel Coordinated Reasoning (PaCoRe) to scale test-time compute, allocating resources to scalable perceptual reasoning that explores and synthesizes diverse visual hypotheses. Consequently, despite its compact 10B footprint, STEP3-VL-10B rivals or surpasses models 10$\times$-20$\times$ larger (e.g., GLM-4.6V-106B, Qwen3-VL-235B) and top-tier proprietary flagships like Gemini 2.5 Pro and Seed-1.5-VL. Delivering best-in-class performance, it records 92.2% on MMBench and 80.11% on MMMU, while excelling in complex reasoning with 94.43% on AIME2025 and 75.95% on MathVision. We release the full model suite to provide the community with a powerful, efficient, and reproducible baseline.

From Human Hands to Robot Arms: Manipulation Skills Transfer via Trajectory Alignment

Oct 01, 2025

Abstract: Learning diverse manipulation skills for real-world robots is severely bottlenecked by the reliance on costly and hard-to-scale teleoperated demonstrations. While human videos offer a scalable alternative, effectively transferring manipulation knowledge is fundamentally hindered by the significant morphological gap between human and robotic embodiments. To address this challenge and facilitate skill transfer from human to robot, we introduce Traj2Action, a novel framework that bridges this embodiment gap by using the 3D trajectory of the operational endpoint as a unified intermediate representation, and then transfers the manipulation knowledge embedded in this trajectory to the robot's actions. Our policy first learns to generate a coarse trajectory, which forms a high-level motion plan by leveraging both human and robot data. This plan then conditions the synthesis of precise, robot-specific actions (e.g., orientation and gripper state) within a co-denoising framework. Extensive real-world experiments on a Franka robot demonstrate that Traj2Action boosts performance by up to 27% and 22.25% over the $\pi_0$ baseline on short- and long-horizon real-world tasks, respectively, and achieves significant gains as human data scales in robot policy learning. Our project website, featuring code and video demonstrations, is available at https://anonymous.4open.science/w/Traj2Action-4A45/.

ArgusCogito: Chain-of-Thought for Cross-Modal Synergy and Omnidirectional Reasoning in Camouflaged Object Segmentation

Aug 25, 2025

Abstract: Camouflaged Object Segmentation (COS) poses a significant challenge due to the intrinsic high similarity between targets and backgrounds, demanding models capable of profound holistic understanding beyond superficial cues. Prevailing methods, often limited by shallow feature representation, inadequate reasoning mechanisms, and weak cross-modal integration, struggle to achieve this depth of cognition, resulting in prevalent issues like incomplete target separation and imprecise segmentation. Inspired by the perceptual strategy of the Hundred-eyed Giant (emphasizing holistic observation, omnidirectional focus, and intensive scrutiny), we introduce ArgusCogito, a novel zero-shot, chain-of-thought framework underpinned by cross-modal synergy and omnidirectional reasoning within Vision-Language Models (VLMs). ArgusCogito orchestrates three cognitively-inspired stages: (1) Conjecture: Constructs a strong cognitive prior through global reasoning with cross-modal fusion (RGB, depth, semantic maps), enabling holistic scene understanding and enhanced target-background disambiguation. (2) Focus: Performs omnidirectional, attention-driven scanning and focused reasoning, guided by semantic priors from Conjecture, enabling precise target localization and region-of-interest refinement. (3) Sculpting: Progressively sculpts high-fidelity segmentation masks by integrating cross-modal information and iteratively generating dense positive/negative point prompts within focused regions, emulating Argus' intensive scrutiny. Extensive evaluations on four challenging COS benchmarks and three Medical Image Segmentation (MIS) benchmarks demonstrate that ArgusCogito achieves state-of-the-art (SOTA) performance, validating the framework's exceptional efficacy, superior generalization capability, and robustness.

A Comprehensive Survey of Self-Evolving AI Agents: A New Paradigm Bridging Foundation Models and Lifelong Agentic Systems

Aug 10, 2025

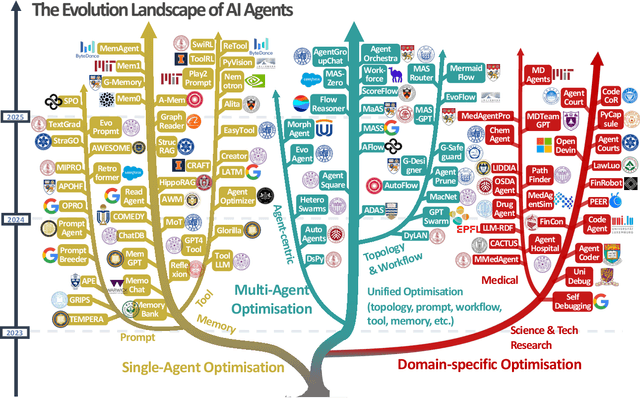

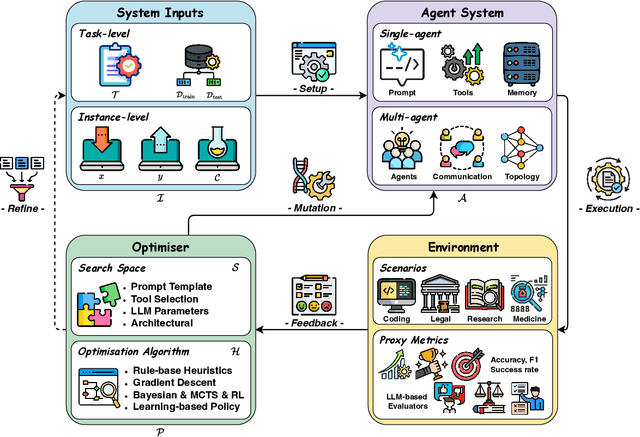

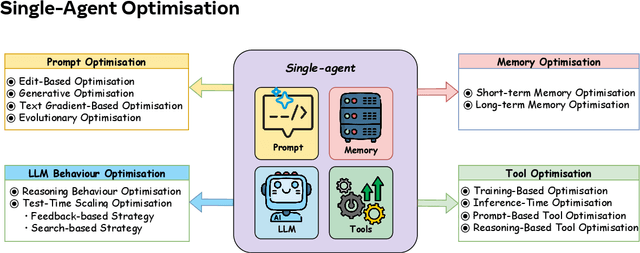

Abstract: Recent advances in large language models have sparked growing interest in AI agents capable of solving complex, real-world tasks. However, most existing agent systems rely on manually crafted configurations that remain static after deployment, limiting their ability to adapt to dynamic and evolving environments. To this end, recent research has explored agent evolution techniques that aim to automatically enhance agent systems based on interaction data and environmental feedback. This emerging direction lays the foundation for self-evolving AI agents, which bridge the static capabilities of foundation models with the continuous adaptability required by lifelong agentic systems. In this survey, we provide a comprehensive review of existing techniques for self-evolving agentic systems. Specifically, we first introduce a unified conceptual framework that abstracts the feedback loop underlying the design of self-evolving agentic systems. The framework highlights four key components: System Inputs, Agent System, Environment, and Optimisers, serving as a foundation for understanding and comparing different strategies. Based on this framework, we systematically review a wide range of self-evolving techniques that target different components of the agent system. We also investigate domain-specific evolution strategies developed for specialised fields such as biomedicine, programming, and finance, where optimisation objectives are tightly coupled with domain constraints. In addition, we provide a dedicated discussion on the evaluation, safety, and ethical considerations for self-evolving agentic systems, which are critical to ensuring their effectiveness and reliability. This survey aims to provide researchers and practitioners with a systematic understanding of self-evolving AI agents, laying the foundation for the development of more adaptive, autonomous, and lifelong agentic systems.
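
The four-component feedback loop named in this abstract (System Inputs, Agent System, Environment, Optimisers) can be sketched as a toy program. Everything below is a hypothetical illustration rather than the survey's formalism: the "agent" is a single tunable number, the environment scores outputs against a hidden target, and the optimiser is a simple hill-climbing step.

```python
def agent_system(config, task):
    """Toy agent: its output is the task value scaled by a tunable setting."""
    return config["scale"] * task

def environment(task, output):
    """Toy environment: feedback is the negative error against a hidden rule.

    The hidden rule (output should equal 3 * task) is unknown to the agent.
    """
    return -abs(3.0 * task - output)

def optimiser(config, task, step=0.5):
    """Hill-climb: try nearby settings and keep the one with best feedback."""
    candidates = [config["scale"] - step, config["scale"], config["scale"] + step]
    best = max(
        candidates,
        key=lambda s: environment(task, agent_system({"scale": s}, task)),
    )
    return {"scale": best}

config = {"scale": 1.0}                      # initial hand-written configuration
system_inputs = [1.0, 2.0, 4.0, 1.5, 3.0]    # stream of incoming tasks

for task in system_inputs:
    # One turn of the loop: act, receive feedback, update the agent.
    config = optimiser(config, task)
```

After the loop the configuration has moved from its initial value to the setting the environment rewards, without anyone editing it by hand. Real systems would replace the scalar with prompts, tools, memory, or workflow structure, and the hill-climbing step with a far richer optimiser.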

AU-IQA: A Benchmark Dataset for Perceptual Quality Assessment of AI-Enhanced User-Generated Content

Aug 07, 2025

Abstract: AI-based image enhancement techniques have been widely adopted in various visual applications, significantly improving the perceptual quality of user-generated content (UGC). However, the lack of specialized quality assessment models has become a significant bottleneck in this field, limiting user experience and hindering the advancement of enhancement methods. While perceptual quality assessment methods have shown strong performance on UGC and AIGC individually, their effectiveness on AI-enhanced UGC (AI-UGC), which blends features from both, remains largely unexplored. To address this gap, we construct AU-IQA, a benchmark dataset comprising 4,800 AI-UGC images produced by three representative enhancement types: super-resolution, low-light enhancement, and denoising. On this dataset, we further evaluate a range of existing quality assessment models, including traditional IQA methods and large multimodal models. Finally, we provide a comprehensive analysis of how well current approaches perform in assessing the perceptual quality of AI-UGC. The AU-IQA dataset is available at https://github.com/WNNGGU/AU-IQA-Dataset.
