Chunyu Li

HomoFM: Deep Homography Estimation with Flow Matching

Jan 26, 2026

Abstract:Deep homography estimation has broad applications in computer vision and robotics. Remarkable progress has been achieved, but existing methods typically treat it as a direct regression or iterative refinement problem and often struggle to capture complex geometric transformations or generalize across different domains. In this work, we propose HomoFM, a new framework that introduces the flow matching technique from generative modeling into the homography estimation task for the first time. Unlike existing methods, we formulate homography estimation as a velocity field learning problem. By modeling a continuous, point-wise velocity field that transforms noisy distributions into registered coordinates, the proposed network recovers high-precision transformations through a conditional flow trajectory. Furthermore, to address domain shift, e.g., in multimodal matching or under varying illumination, we integrate a gradient reversal layer (GRL) into the feature extraction backbone. This domain adaptation strategy explicitly constrains the encoder to learn domain-invariant representations, significantly enhancing the network's robustness. Extensive experiments demonstrate the effectiveness of the proposed method, showing that HomoFM outperforms state-of-the-art methods in both estimation accuracy and robustness on standard benchmarks. Code and data resources are available at https://github.com/hmf21/HomoFM.
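The velocity-field formulation in this abstract can be illustrated with a minimal sketch of generic flow matching on a straight-line path. This is not the HomoFM network or its training code: the function names, the flattened corner coordinates, and the `oracle_velocity` stand-in (which returns the exact target velocity in place of a learned model) are all assumptions made for illustration.

```python
import random

def interpolate(x0, x1, t):
    """Point on the straight path from noise x0 (t=0) to target x1 (t=1)."""
    return [(1.0 - t) * a + t * b for a, b in zip(x0, x1)]

def target_velocity(x0, x1):
    """Regression target for the velocity field on the straight path: x1 - x0."""
    return [b - a for a, b in zip(x0, x1)]

def euler_integrate(x0, velocity_fn, steps=8):
    """Integrate dx/dt = v(x, t) from t=0 to t=1 with fixed-step Euler."""
    x = list(x0)
    dt = 1.0 / steps
    for k in range(steps):
        v = velocity_fn(x, k * dt)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x

random.seed(0)
# Hypothetical registered coordinates: four corner points flattened to (x, y) pairs.
registered = [0.05, -0.02, 1.03, 0.01, 0.98, 1.04, -0.01, 0.97]
# Starting sample drawn from a standard normal "noisy distribution".
noise = [random.gauss(0.0, 1.0) for _ in registered]

def oracle_velocity(x, t):
    """Stand-in for a trained network: returns the exact target velocity."""
    return target_velocity(noise, registered)

recovered = euler_integrate(noise, oracle_velocity)
```

Because the oracle velocity is constant along the straight path, Euler integration lands on the registered coordinates up to floating-point error; a learned field would only approximate this.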

Physics-constrained Gaussian Processes for Predicting Shockwave Hugoniot Curves

Jan 10, 2026

Abstract:A physics-constrained Gaussian Process regression framework is developed for predicting shocked material states along the Hugoniot curve using data from a small number of shockwave simulations. The proposed Gaussian process employs a probabilistic Taylor series expansion in conjunction with the Rankine-Hugoniot jump conditions between the various shocked material states to construct a thermodynamically consistent covariance function. This leads to the formulation of an optimization problem over a small number of interpretable hyperparameters and enables the identification of regime transitions, from a leading elastic wave to trailing plastic and phase transformation waves. This work is motivated by the need to investigate shock-driven material response for materials discovery and to offer mechanistic insights in regimes where experimental characterizations and simulations are costly. The proposed methodology relies on large-scale molecular dynamics simulations, which are an accurate but expensive computational alternative to experiments. Under these constraints, the proposed methodology establishes Hugoniot curves from a limited number of molecular dynamics simulations. We consider silicon carbide as a representative material, and atomic-level simulations are performed using a reverse ballistic approach together with appropriate interatomic potentials. The framework reproduces the Hugoniot curve with satisfactory accuracy while also quantifying the uncertainty in the predictions using the Gaussian Process posterior.
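For readers unfamiliar with the Rankine-Hugoniot jump conditions mentioned above, the following minimal sketch evaluates the standard single-shock relations (mass, momentum, and energy conservation across a steady shock). It is not the paper's Gaussian Process model; the function name, the unit convention, and the numerical inputs are illustrative assumptions rather than values taken from the work.

```python
def hugoniot_state(rho0, shock_velocity, particle_velocity, p0=0.0):
    """Shocked state from the Rankine-Hugoniot jump conditions.

    Assumed units: density in g/cm^3 and velocities in km/s, which give
    pressure in GPa and specific internal energy jump in kJ/g.
    """
    if not 0.0 <= particle_velocity < shock_velocity:
        raise ValueError("require 0 <= particle_velocity < shock_velocity")
    # Momentum conservation: P - P0 = rho0 * Us * up
    pressure = p0 + rho0 * shock_velocity * particle_velocity
    # Mass conservation: rho * (Us - up) = rho0 * Us
    density = rho0 * shock_velocity / (shock_velocity - particle_velocity)
    # Energy conservation: E - E0 = 0.5 * (P + P0) * (V0 - V), with V = 1 / rho
    energy_jump = 0.5 * (pressure + p0) * (1.0 / rho0 - 1.0 / density)
    return pressure, density, energy_jump

# Illustrative inputs only (not results from the paper).
pressure, density, energy_jump = hugoniot_state(3.21, 10.0, 2.0)
```

With zero initial pressure the energy jump reduces to half the squared particle velocity, which gives a quick sanity check on any computed state.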

TWC-SLAM: Multi-Agent Cooperative SLAM with Text Semantics and WiFi Features Integration for Similar Indoor Environments

Oct 26, 2025

Abstract:Multi-agent cooperative SLAM often encounters challenges in similar indoor environments characterized by repetitive structures, such as corridors and rooms. These challenges can lead to significant inaccuracies in shared location identification when employing point cloud-based techniques. To mitigate these issues, we introduce TWC-SLAM, a multi-agent cooperative SLAM framework that integrates text semantics and WiFi signal features to enhance location identification and loop closure detection. TWC-SLAM comprises a single-agent front-end odometry module based on FAST-LIO2, a location identification and loop closure detection module that leverages text semantics and WiFi features, and a global mapping module. The agents are equipped with sensors capable of capturing textual information and detecting WiFi signals. By correlating these data sources, TWC-SLAM establishes a common location, facilitating point cloud alignment across different agents' maps. Furthermore, the system employs loop closure detection and optimization modules to achieve global optimization and cohesive mapping. We evaluated our approach using an indoor dataset featuring similar corridors, rooms, and text signs. The results demonstrate that TWC-SLAM significantly improves the performance of cooperative SLAM systems in complex environments with repetitive architectural features.

Xinyu AI Search: Enhanced Relevance and Comprehensive Results with Rich Answer Presentations

May 28, 2025

Abstract:Traditional search engines struggle to synthesize fragmented information for complex queries, while generative AI search engines face challenges in relevance, comprehensiveness, and presentation. To address these limitations, we introduce Xinyu AI Search, a novel system that incorporates a query-decomposition graph to dynamically break down complex queries into sub-queries, enabling stepwise retrieval and generation. Our retrieval pipeline enhances diversity through multi-source aggregation and query expansion, while filtering and re-ranking strategies optimize passage relevance. Additionally, Xinyu AI Search introduces a novel approach for fine-grained, precise built-in citation and innovates in result presentation by integrating timeline visualization and textual-visual choreography. Evaluated on recent real-world queries, Xinyu AI Search outperforms eight existing technologies in human assessments, excelling in relevance, comprehensiveness, and insightfulness. Ablation studies validate the necessity of its key sub-modules. Our work presents the first comprehensive framework for generative AI search engines, bridging retrieval, generation, and user-centric presentation.

VideoFusion: A Spatio-Temporal Collaborative Network for Multi-modal Video Fusion and Restoration

Mar 30, 2025

Abstract:Compared to images, videos better align with real-world acquisition scenarios and possess valuable temporal cues. However, existing multi-sensor fusion research predominantly integrates complementary context from multiple images rather than videos. This primarily stems from two factors: 1) the scarcity of large-scale multi-sensor video datasets, limiting research in video fusion, and 2) the inherent difficulty of jointly modeling spatial and temporal dependencies in a unified framework. This paper addresses both difficulties. First, we construct M3SVD, a benchmark dataset with 220 temporally synchronized and spatially registered infrared-visible video pairs comprising 153,797 frames, filling the data gap for the video fusion community. Second, we propose VideoFusion, a multi-modal video fusion model that fully exploits cross-modal complementarity and temporal dynamics to generate spatio-temporally coherent videos from (potentially degraded) multi-modal inputs. Specifically, 1) a differential reinforcement module is developed for cross-modal information interaction and enhancement, 2) a complete modality-guided fusion strategy is employed to adaptively integrate multi-modal features, and 3) a bi-temporal co-attention mechanism is devised to dynamically aggregate forward-backward temporal contexts to reinforce cross-frame feature representations. Extensive experiments reveal that VideoFusion outperforms existing image-oriented fusion paradigms in sequential scenarios, effectively mitigating temporal inconsistency and interference.

DSPFusion: Image Fusion via Degradation and Semantic Dual-Prior Guidance

Mar 30, 2025

Abstract:Existing fusion methods are tailored for high-quality images but struggle with degraded images captured under harsh circumstances, thus limiting the practical potential of image fusion. This work presents a Degradation and Semantic Prior dual-guided framework for degraded image Fusion (DSPFusion), utilizing degradation priors and high-quality scene semantic priors restored via diffusion models to guide both information recovery and fusion in a unified model. Specifically, it first individually extracts modality-specific degradation priors, while jointly capturing comprehensive low-quality semantic priors. Subsequently, a diffusion model is developed to iteratively restore high-quality semantic priors in a compact latent space, enabling our method to be over 20× faster than mainstream diffusion model-based image fusion schemes. Finally, the degradation priors and high-quality semantic priors are employed to guide information enhancement and aggregation via the dual-prior guidance and prior-guided fusion modules. Extensive experiments demonstrate that DSPFusion mitigates most typical degradations while integrating complementary context with minimal computational cost, greatly broadening the application scope of image fusion.

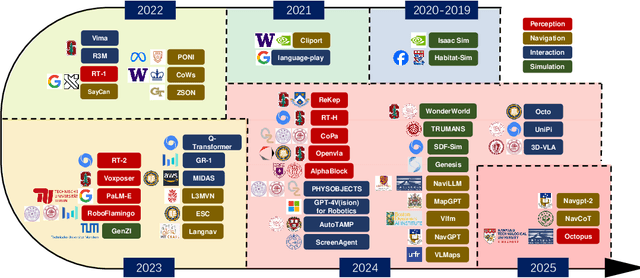

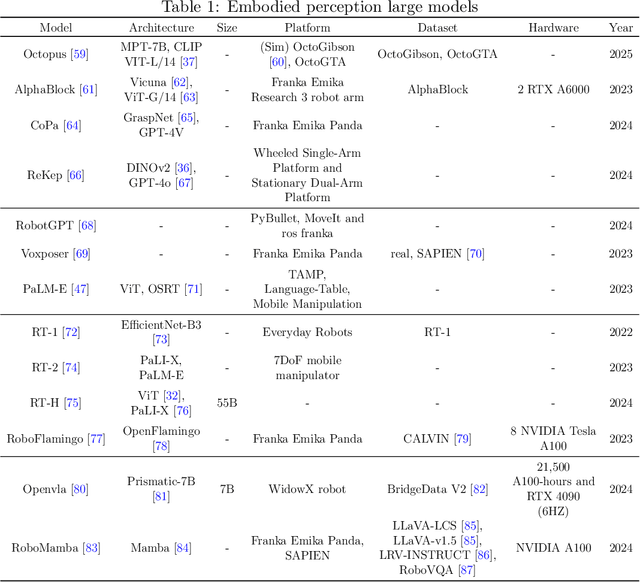

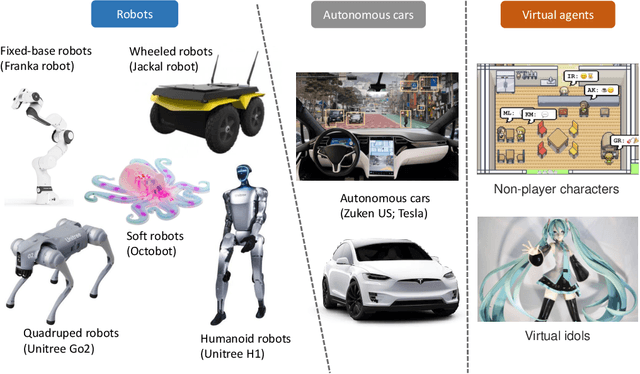

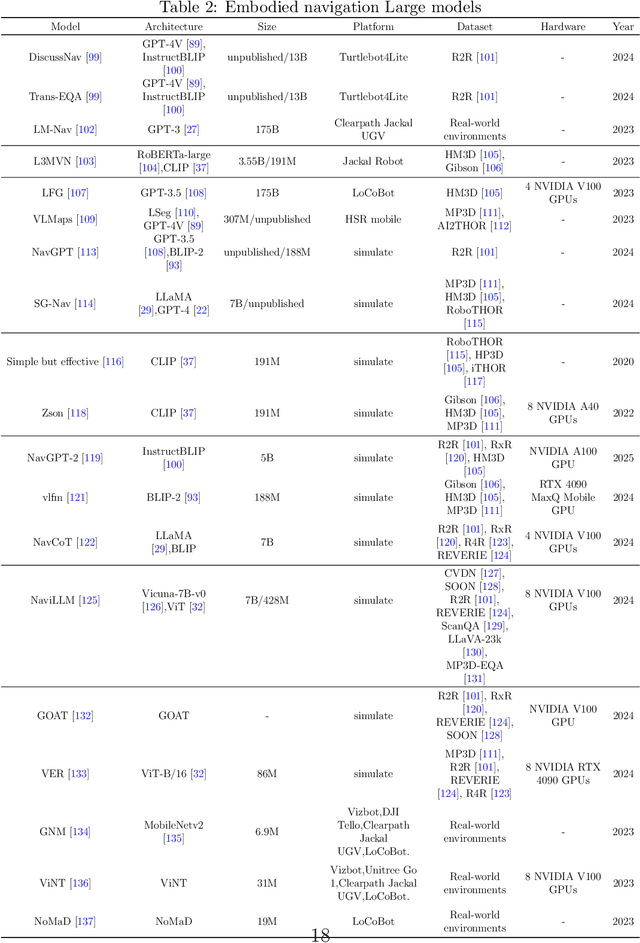

Exploring Embodied Multimodal Large Models: Development, Datasets, and Future Directions

Feb 21, 2025

Abstract:Embodied multimodal large models (EMLMs) have gained significant attention in recent years due to their potential to bridge the gap between perception, cognition, and action in complex, real-world environments. This comprehensive review explores the development of such models, including Large Language Models (LLMs), Large Vision Models (LVMs), and other models, while also examining other emerging architectures. We discuss the evolution of EMLMs, with a focus on embodied perception, navigation, interaction, and simulation. Furthermore, the review provides a detailed analysis of the datasets used for training and evaluating these models, highlighting the importance of diverse, high-quality data for effective learning. The paper also identifies key challenges faced by EMLMs, including issues of scalability, generalization, and real-time decision-making. Finally, we outline future directions, emphasizing the integration of multimodal sensing, reasoning, and action to advance the development of increasingly autonomous systems. By providing an in-depth analysis of state-of-the-art methods and identifying critical gaps, this paper aims to inspire future advancements in EMLMs and their applications across diverse domains.

LatentSync: Audio Conditioned Latent Diffusion Models for Lip Sync

Dec 12, 2024

Abstract:We present LatentSync, an end-to-end lip sync framework based on audio conditioned latent diffusion models without any intermediate motion representation, diverging from previous diffusion-based lip sync methods based on pixel space diffusion or two-stage generation. Our framework can leverage the powerful capabilities of Stable Diffusion to directly model complex audio-visual correlations. Additionally, we found that the diffusion-based lip sync methods exhibit inferior temporal consistency due to the inconsistency in the diffusion process across different frames. We propose Temporal REPresentation Alignment (TREPA) to enhance temporal consistency while preserving lip-sync accuracy. TREPA uses temporal representations extracted by large-scale self-supervised video models to align the generated frames with the ground truth frames. Furthermore, we observe the commonly encountered SyncNet convergence issue and conduct comprehensive empirical studies, identifying key factors affecting SyncNet convergence in terms of model architecture, training hyperparameters, and data preprocessing methods. We significantly improve the accuracy of SyncNet from 91% to 94% on the HDTF test set. Since we did not change the overall training framework of SyncNet, our experience can also be applied to other lip sync and audio-driven portrait animation methods that utilize SyncNet. Based on the above innovations, our method outperforms state-of-the-art lip sync methods across various metrics on the HDTF and VoxCeleb2 datasets.

HEViTPose: High-Efficiency Vision Transformer for Human Pose Estimation

Nov 22, 2023

Abstract:Human pose estimation in complicated situations has always been a challenging task. Many Transformer-based pose networks have been proposed recently, achieving encouraging progress in improving performance. However, the remarkable performance of pose networks is always accompanied by heavy computation costs and large network scale. To deal with this problem, this paper proposes a High-Efficiency Vision Transformer for Human Pose Estimation (HEViTPose). In HEViTPose, a Cascaded Group Spatial Reduction Multi-Head Attention Module (CGSR-MHA) is proposed, which reduces the computational cost through feature grouping and spatial degradation mechanisms, while preserving feature diversity through multiple low-dimensional attention heads. Moreover, a concept of Patch Embedded Overlap Width (PEOW) is defined to help understand the relationship between the amount of overlap and local continuity. By optimising PEOW, our model gains improvements in performance, parameters and GFLOPs. Comprehensive experiments on two benchmark datasets (MPII and COCO) demonstrate that the small and large HEViTPose models are on par with state-of-the-art models while being more lightweight. Specifically, HEViTPose-B achieves 90.7 PCK@0.5 on the MPII test set and 72.6 AP on the COCO test-dev2017 set. Compared with HRNet-W32 and Swin-S, our HEViTPose-B significantly reduces Params (↓62.1%, ↓80.4%) and GFLOPs (↓43.4%, ↓63.8%). Code and models are available here.

Multi-View Neural Surface Reconstruction with Structured Light

Nov 22, 2022

Abstract:Three-dimensional (3D) object reconstruction based on differentiable rendering (DR) is an active research topic in computer vision. DR-based methods minimize the difference between the rendered and target images by optimizing both the shape and appearance, achieving high visual reproducibility. However, most approaches perform poorly for textureless objects because of geometrical ambiguity: multiple shapes can produce the same rendered result for such objects. To overcome this problem, we introduce active sensing with structured light (SL) into multi-view 3D object reconstruction based on DR to learn the unknown geometry and appearance of arbitrary scenes and camera poses. More specifically, our framework leverages the correspondences between pixels in different views calculated by structured light as an additional constraint in the DR-based optimization of implicit surface, color representations, and camera poses. Because camera poses can be optimized simultaneously, our method achieves high reconstruction accuracy in textureless regions and reduces the effort needed for camera pose calibration, which conventional SL-based methods require. Experimental results on both synthetic and real data demonstrate that our system outperforms conventional DR- and SL-based methods in high-quality surface reconstruction, particularly for challenging objects with textureless or shiny surfaces.
