Yusuke Monno

Segmentation-Guided Neural Radiance Fields for Novel Street View Synthesis

Mar 18, 2025

Abstract:Recent advances in Neural Radiance Fields (NeRF) have shown great potential in 3D reconstruction and novel view synthesis, particularly for indoor and small-scale scenes. However, extending NeRF to large-scale outdoor environments presents challenges such as transient objects, sparse cameras and textures, and varying lighting conditions. In this paper, we propose a segmentation-guided enhancement to NeRF for outdoor street scenes, focusing on complex urban environments. Our approach extends ZipNeRF and utilizes Grounded SAM for segmentation mask generation, enabling effective handling of transient objects, modeling of the sky, and regularization of the ground. We also introduce appearance embeddings to adapt to inconsistent lighting across view sequences. Experimental results demonstrate that our method outperforms the baseline ZipNeRF, improving novel view synthesis quality with fewer artifacts and sharper details.

TDM: Temporally-Consistent Diffusion Model for All-in-One Real-World Video Restoration

Jan 04, 2025

Abstract:In this paper, we propose the first diffusion-based all-in-one video restoration method that utilizes the power of a pre-trained Stable Diffusion and a fine-tuned ControlNet. Our method can restore various types of video degradation with a single unified model, overcoming the limitation of standard methods that require specific models for each restoration task. Our contributions include an efficient training strategy with Task Prompt Guidance (TPG) for diverse restoration tasks, an inference strategy that combines Denoising Diffusion Implicit Models (DDIM) inversion with a novel Sliding Window Cross-Frame Attention (SW-CFA) mechanism for enhanced content preservation and temporal consistency, and a scalable pipeline that makes our method all-in-one to adapt to different video restoration tasks. Through extensive experiments on five video restoration tasks, we demonstrate the superiority of our method in generalization capability to real-world videos and temporal consistency preservation over existing state-of-the-art methods. Our method advances the video restoration task by providing a unified solution that enhances video quality across multiple applications.

Disparity Estimation Using a Quad-Pixel Sensor

Sep 01, 2024

Abstract:A quad-pixel (QP) sensor is increasingly integrated into commercial mobile cameras. The QP sensor places a 2×2 unit of four photodiodes under a single microlens, generating multi-directional phase shifts when out-of-focus blur occurs. Similar to a dual-pixel (DP) sensor, the phase shifts can be regarded as stereo disparity and utilized for depth estimation. Based on this, we propose a QP disparity estimation network (QPDNet), which exploits abundant QP information by fusing vertical and horizontal stereo-matching correlations for effective disparity estimation. We also present a synthetic pipeline to generate a training dataset from an existing RGB-Depth dataset. Experimental results demonstrate that our QPDNet outperforms state-of-the-art stereo and DP methods. Our code and synthetic dataset are available at https://github.com/Zhuofeng-Wu/QPDNet.

Neural Radiance Fields for Novel View Synthesis in Monocular Gastroscopy

May 29, 2024

Abstract:Enabling the synthesis of arbitrarily novel viewpoint images within a patient's stomach from pre-captured monocular gastroscopic images is a promising topic in stomach diagnosis. Typical methods to achieve this objective integrate traditional 3D reconstruction techniques, including structure-from-motion (SfM) and Poisson surface reconstruction. These methods produce explicit 3D representations, such as point clouds and meshes, thereby enabling the rendering of the images from novel viewpoints. However, the existence of low-texture and non-Lambertian regions within the stomach often results in noisy and incomplete reconstructions of point clouds and meshes, hindering the attainment of high-quality image rendering. In this paper, we apply the emerging technique of neural radiance fields (NeRF) to monocular gastroscopic data for synthesizing photo-realistic images for novel viewpoints. To address the performance degradation due to view sparsity in local regions of monocular gastroscopy, we incorporate geometry priors from a pre-reconstructed point cloud into the training of NeRF, which introduces a novel geometry-based loss to both pre-captured observed views and generated unobserved views. Compared to other recent NeRF methods, our approach showcases high-fidelity image renderings from novel viewpoints within the stomach both qualitatively and quantitatively.

Self-Supervised Spatially Variant PSF Estimation for Aberration-Aware Depth-from-Defocus

Feb 28, 2024

Abstract:In this paper, we address the task of aberration-aware depth-from-defocus (DfD), which takes account of spatially variant point spread functions (PSFs) of a real camera. To effectively obtain the spatially variant PSFs of a real camera without requiring any ground-truth PSFs, we propose a novel self-supervised learning method that leverages the pair of real sharp and blurred images, which can be easily captured by changing the aperture setting of the camera. In our PSF estimation, we assume rotationally symmetric PSFs and introduce the polar coordinate system to more accurately learn the PSF estimation network. We also handle the focus breathing phenomenon that occurs in real DfD situations. Experimental results on synthetic and real data demonstrate the effectiveness of our method regarding both the PSF estimation and the depth estimation.
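
The rotational-symmetry assumption mentioned in this abstract means a 2D PSF is fully determined by a 1D profile over radius, which is one reason a polar parameterization is convenient. The following is a minimal sketch of that idea only, not the paper's network or training procedure: the function name `radial_psf`, the one-sample-per-pixel radial spacing, and the linear interpolation are all assumptions made for illustration.

```python
import math


def radial_psf(profile, size):
    """Build a size x size rotationally symmetric kernel from a 1D radial profile.

    profile[k] is the (unnormalized) PSF value at a radius of k pixels from the
    kernel center; radii beyond the last sample are treated as zero.
    The returned kernel is normalized so that its entries sum to 1.
    """
    def sample(k):
        # Hypothetical convention: the profile is zero past its last sample.
        return profile[k] if k < len(profile) else 0.0

    center = (size - 1) / 2.0
    kernel = []
    for y in range(size):
        row = []
        for x in range(size):
            r = math.hypot(x - center, y - center)  # radius of this pixel
            k = int(r)
            t = r - k
            # Linear interpolation between neighboring radial samples.
            row.append((1.0 - t) * sample(k) + t * sample(k + 1))
        kernel.append(row)

    total = sum(sum(row) for row in kernel)
    if total <= 0.0:
        raise ValueError("radial profile produces an empty kernel")
    return [[v / total for v in row] for row in kernel]


psf = radial_psf([1.0, 0.5, 0.0], size=5)
```

Because every pixel value depends only on its distance from the center, the kernel is unchanged by 90-degree rotations and reflections, and only `len(profile)` numbers need to be estimated instead of `size * size`.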

Reflection Removal Using Recurrent Polarization-to-Polarization Network

Feb 28, 2024

Abstract:This paper addresses reflection removal, which is the task of separating reflection components from a captured image and deriving the image with only transmission components. Considering that the existence of the reflection changes the polarization state of a scene, some existing methods have exploited polarized images for reflection removal. While these methods apply polarized images as the inputs, they predict the reflection and the transmission directly as non-polarized intensity images. In contrast, we propose a polarization-to-polarization approach that applies polarized images as the inputs and predicts "polarized" reflection and transmission images using two sequential networks to facilitate the separation task by utilizing the interrelated polarization information between the reflection and the transmission. We further adopt a recurrent framework, where the predicted reflection and transmission images are used to iteratively refine each other. Experimental results on a public dataset demonstrate that our method outperforms other state-of-the-art methods.

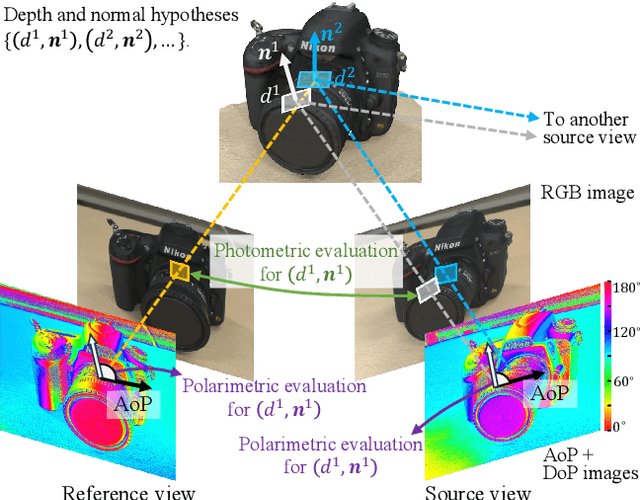

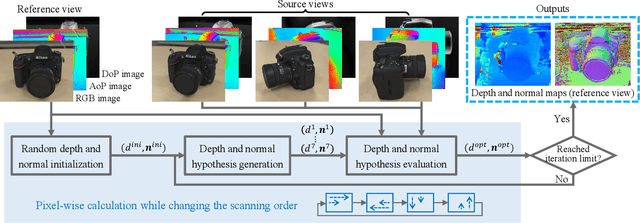

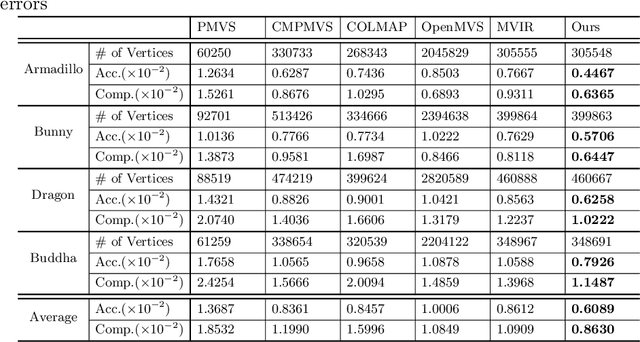

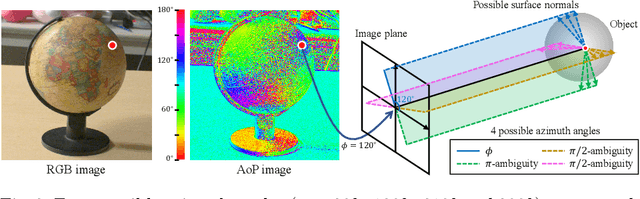

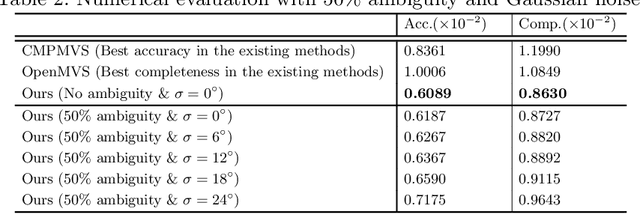

Polarimetric PatchMatch Multi-View Stereo

Nov 11, 2023

Abstract:PatchMatch Multi-View Stereo (PatchMatch MVS) is one of the popular MVS approaches, owing to its balanced accuracy and efficiency. In this paper, we propose Polarimetric PatchMatch multi-view Stereo (PolarPMS), which is the first method to exploit polarization cues in PatchMatch MVS. The key of PatchMatch MVS is to generate depth and normal hypotheses, which form local 3D planes and slanted stereo matching windows, and efficiently search for the best hypothesis based on the consistency among multi-view images. In addition to standard photometric consistency, our PolarPMS evaluates polarimetric consistency to assess the validity of a depth and normal hypothesis, motivated by the physical property that the polarimetric information is related to the object's surface normal. Experimental results demonstrate that our PolarPMS can improve the accuracy and the completeness of reconstructed 3D models, especially for texture-less surfaces, compared with state-of-the-art PatchMatch MVS methods.

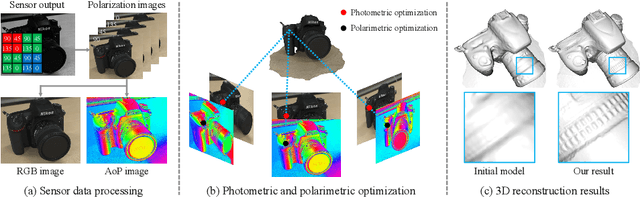

Polarimetric Multi-View Inverse Rendering

Dec 24, 2022

Abstract:A polarization camera has great potential for 3D reconstruction since the angle of polarization (AoP) and the degree of polarization (DoP) of reflected light are related to an object's surface normal. In this paper, we propose a novel 3D reconstruction method called Polarimetric Multi-View Inverse Rendering (Polarimetric MVIR) that effectively exploits geometric, photometric, and polarimetric cues extracted from input multi-view color-polarization images. We first estimate camera poses and an initial 3D model by geometric reconstruction with a standard structure-from-motion and multi-view stereo pipeline. We then refine the initial model by optimizing photometric rendering errors and polarimetric errors using multi-view RGB, AoP, and DoP images, where we propose a novel polarimetric cost function that enables an effective constraint on the estimated surface normal of each vertex, while considering four possible ambiguous azimuth angles revealed from the AoP measurement. The weight for the polarimetric cost is effectively determined based on the DoP measurement, which is regarded as the reliability of polarimetric information. Experimental results using both synthetic and real data demonstrate that our Polarimetric MVIR can reconstruct a detailed 3D shape without assuming a specific surface material and lighting condition.
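
To make the azimuth ambiguity in this abstract concrete, the sketch below enumerates four candidate azimuth angles for a measured AoP and scores a surface-normal azimuth by its distance to the nearest candidate, weighted by DoP. It is an illustration under stated assumptions, not the paper's cost function: the candidates are assumed to be spaced by π/2 (the usual combination of the π-ambiguity and the π/2-ambiguity), the linear DoP weight is a placeholder, and all function names are hypothetical.

```python
import math

TWO_PI = 2.0 * math.pi


def azimuth_candidates(aop):
    """Four candidate azimuths for one AoP measurement (assumed spacing: pi/2)."""
    return [(aop + k * math.pi / 2.0) % TWO_PI for k in range(4)]


def angular_distance(a, b):
    """Smallest absolute difference between two angles, in [0, pi]."""
    d = abs(a - b) % TWO_PI
    return min(d, TWO_PI - d)


def polarimetric_residual(normal_azimuth, aop, dop):
    """DoP-weighted distance from a normal's azimuth to the nearest candidate.

    Using dop directly as the weight is an assumption for illustration; it only
    reflects the idea that low-DoP measurements are less reliable.
    """
    nearest = min(angular_distance(normal_azimuth, c)
                  for c in azimuth_candidates(aop))
    return dop * nearest


# A normal whose azimuth is 0.1 rad away from one of the candidates.
residual = polarimetric_residual(math.pi / 2.0 + 0.1, aop=0.0, dop=0.5)
```

With candidates every π/2, the unweighted distance never exceeds π/4, so a residual like this constrains the normal without committing to one of the four ambiguous solutions.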

Dual-Pixel Raindrop Removal

Oct 24, 2022

Abstract:Removing raindrops in images has been addressed as a significant task for various computer vision applications. In this paper, we propose the first method using a Dual-Pixel (DP) sensor to better address raindrop removal. Our key observation is that raindrops attached to a glass window yield noticeable disparities in DP's left-half and right-half images, while almost no disparity exists for in-focus backgrounds. Therefore, DP disparities can be utilized for robust raindrop detection. The DP disparities also bring the advantage that the background regions occluded by raindrops are shifted between the left-half and the right-half images. Therefore, fusing the information from the left-half and the right-half images can lead to more accurate background texture recovery. Based on the above motivation, we propose a DP Raindrop Removal Network (DPRRN) consisting of DP raindrop detection and DP fused raindrop removal. To efficiently generate a large amount of training data, we also propose a novel pipeline to add synthetic raindrops to real-world background DP images. Experimental results on synthetic and real-world datasets demonstrate that our DPRRN outperforms existing state-of-the-art methods, especially showing better robustness to real-world situations. Our source code and datasets are available at http://www.ok.sc.e.titech.ac.jp/res/SIR/.

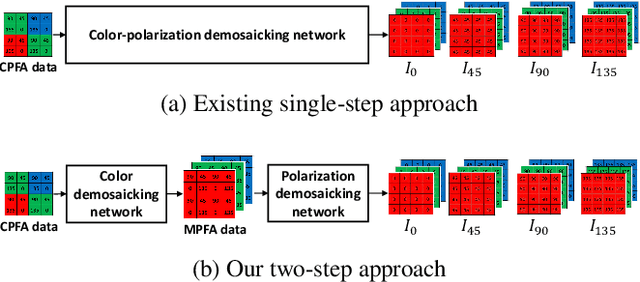

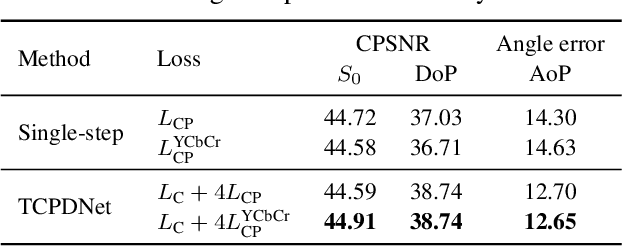

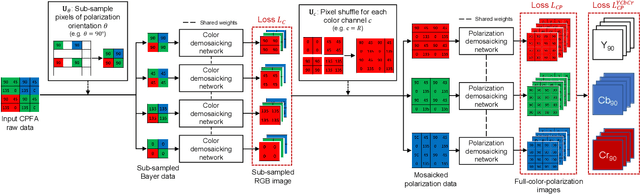

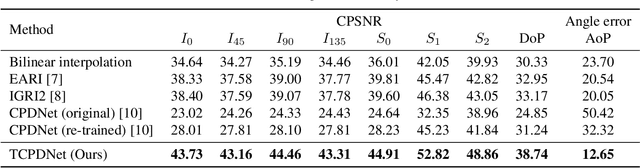

Two-Step Color-Polarization Demosaicking Network

Sep 13, 2022

Abstract:Polarization information of light in a scene is valuable for various image processing and computer vision tasks. A division-of-focal-plane polarimeter is a promising approach to capture the polarization images of different orientations in one shot, while it requires color-polarization demosaicking. In this paper, we propose a two-step color-polarization demosaicking network (TCPDNet), which consists of two sub-tasks of color demosaicking and polarization demosaicking. We also introduce a reconstruction loss in the YCbCr color space to improve the performance of TCPDNet. Experimental comparisons demonstrate that TCPDNet outperforms existing methods in terms of the image quality of polarization images and the accuracy of Stokes parameters.
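
For readers unfamiliar with the Stokes parameters used as an evaluation target here, the sketch below shows the standard way linear Stokes parameters, degree of polarization, and angle of polarization are computed per pixel once demosaicking has produced full-resolution images for each polarizer orientation. The four orientations of 0, 45, 90, and 135 degrees are an assumption (a common layout for division-of-focal-plane sensors, not stated in the abstract), and the function names are hypothetical.

```python
import math


def stokes_from_intensities(i0, i45, i90, i135):
    """Linear Stokes parameters from intensities behind four polarizer angles.

    Assumes polarizer orientations of 0, 45, 90, and 135 degrees.
    """
    s0 = 0.5 * (i0 + i45 + i90 + i135)  # total intensity
    s1 = i0 - i90                       # horizontal vs. vertical component
    s2 = i45 - i135                     # diagonal components
    return s0, s1, s2


def dop_and_aop(s0, s1, s2):
    """Degree of linear polarization in [0, 1] and angle of polarization in [0, pi)."""
    dop = math.hypot(s1, s2) / s0 if s0 > 0.0 else 0.0
    aop = (0.5 * math.atan2(s2, s1)) % math.pi
    return dop, aop


# Fully polarized light oriented at 45 degrees.
dop, aop = dop_and_aop(*stokes_from_intensities(0.5, 1.0, 0.5, 0.0))
```

Because the Stokes parameters are differences of demosaicked intensities, small interpolation errors in individual orientation images can translate into large errors in DoP and AoP, which is why accuracy of Stokes parameters is a natural metric alongside image quality.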
