Ziang Yan

Video-o3: Native Interleaved Clue Seeking for Long Video Multi-Hop Reasoning

Jan 30, 2026

Abstract:Existing multimodal large language models for long-video understanding predominantly rely on uniform sampling and single-turn inference, limiting their ability to identify sparse yet critical evidence amid extensive redundancy. We introduce Video-o3, a novel framework that supports iterative discovery of salient visual clues, fine-grained inspection of key segments, and adaptive termination once sufficient evidence is acquired. Technically, we address two core challenges in interleaved tool invocation. First, to mitigate attention dispersion induced by the heterogeneity of reasoning and tool-calling, we propose Task-Decoupled Attention Masking, which isolates per-step concentration while preserving shared global context. Second, to control context length growth in multi-turn interactions, we introduce a Verifiable Trajectory-Guided Reward that balances exploration coverage with reasoning efficiency. To support training at scale, we further develop a data synthesis pipeline and construct Seeker-173K, comprising 173K high-quality tool-interaction trajectories for effective supervised and reinforcement learning. Extensive experiments show that Video-o3 substantially outperforms state-of-the-art methods, achieving 72.1% accuracy on MLVU and 46.5% on Video-Holmes. These results demonstrate Video-o3's strong multi-hop evidence-seeking and reasoning capabilities, and validate the effectiveness of native tool invocation in long-video scenarios.

DSI-Bench: A Benchmark for Dynamic Spatial Intelligence

Oct 21, 2025

Abstract:Reasoning about dynamic spatial relationships is essential, as both observers and objects often move simultaneously. Although vision-language models (VLMs) and visual expertise models excel in 2D tasks and static scenarios, their ability to fully understand dynamic 3D scenarios remains limited. We introduce Dynamic Spatial Intelligence and propose DSI-Bench, a benchmark with nearly 1,000 dynamic videos and over 1,700 manually annotated questions covering nine decoupled motion patterns of observers and objects. Spatially and temporally symmetric designs reduce biases and enable systematic evaluation of models' reasoning about self-motion and object motion. Our evaluation of 14 VLMs and expert models reveals key limitations: models often conflate observer and object motion, exhibit semantic biases, and fail to accurately infer relative relationships in dynamic scenarios. Our DSI-Bench provides valuable findings and insights about the future development of general and expertise models with dynamic spatial intelligence.

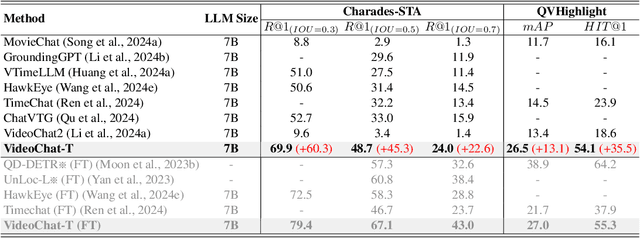

VideoChat-R1: Enhancing Spatio-Temporal Perception via Reinforcement Fine-Tuning

Apr 10, 2025

Abstract:Recent advancements in reinforcement learning have significantly advanced the reasoning capabilities of multimodal large language models (MLLMs). While approaches such as Group Relative Policy Optimization (GRPO) and rule-based reward mechanisms demonstrate promise in text and image domains, their application to video understanding remains limited. This paper presents a systematic exploration of Reinforcement Fine-Tuning (RFT) with GRPO for video MLLMs, aiming to enhance spatio-temporal perception while maintaining general capabilities. Our experiments reveal that RFT is highly data-efficient for task-specific improvements. Through multi-task RFT on spatio-temporal perception objectives with limited samples, we develop VideoChat-R1, a powerful video MLLM that achieves state-of-the-art performance on spatio-temporal perception tasks without sacrificing chat ability, while exhibiting emerging spatio-temporal reasoning abilities. Compared to Qwen2.5-VL-7B, VideoChat-R1 boosts performance several-fold in tasks like temporal grounding (+31.8) and object tracking (+31.2). Additionally, it significantly improves on general QA benchmarks such as VideoMME (+0.9), MVBench (+1.0), and Perception Test (+0.9). Our findings underscore the potential of RFT for specialized task enhancement of Video MLLMs. We hope our work offers valuable insights for future RL research in video MLLMs.
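
As a rough illustration of the group-relative idea behind GRPO mentioned in the abstract above, the sketch below normalizes the rewards of several responses sampled for the same prompt against their own group statistics. It is a minimal sketch, not the VideoChat-R1 training code: the function name `group_relative_advantages`, the `eps` stabilizer, and the use of the population standard deviation are assumptions made for illustration.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-6):
    """Normalize per-response rewards within one sampled group.

    Hypothetical helper: each reward is centered on the group mean and
    scaled by the group standard deviation, so responses are scored
    relative to their siblings rather than by a learned value function.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # assumption: population std; eps avoids division by zero
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four sampled answers to one prompt, scored by a rule-based reward (1 = correct).
advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
```

Responses that beat the group average receive a positive advantage and the rest a negative one; when every response in a group gets the same reward, all advantages are zero and the group contributes no learning signal.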

InternVideo2.5: Empowering Video MLLMs with Long and Rich Context Modeling

Jan 21, 2025

Abstract:This paper aims to improve the performance of video multimodal large language models (MLLM) via long and rich context (LRC) modeling. As a result, we develop a new version of InternVideo2.5 with a focus on enhancing the original MLLMs' ability to perceive fine-grained details and capture long-form temporal structure in videos. Specifically, our approach incorporates dense vision task annotations into MLLMs using direct preference optimization and develops compact spatiotemporal representations through adaptive hierarchical token compression. Experimental results demonstrate this unique design of LRC greatly improves the results of video MLLM in mainstream video understanding benchmarks (short & long), enabling the MLLM to memorize significantly longer video inputs (at least 6x longer than the original), and master specialized vision capabilities like object tracking and segmentation. Our work highlights the importance of multimodal context richness (length and fineness) in empowering MLLM's innate abilities (focus and memory), providing new insights for future research on video MLLM. Code and models are available at https://github.com/OpenGVLab/InternVideo/tree/main/InternVideo2.5

Task Preference Optimization: Improving Multimodal Large Language Models with Vision Task Alignment

Dec 26, 2024

Abstract:Current multimodal large language models (MLLMs) struggle with fine-grained or precise understanding of visuals though they give comprehensive perception and reasoning in a spectrum of vision applications. Recent studies either develop tool-using or unify specific visual tasks into the autoregressive framework, often at the expense of overall multimodal performance. To address this issue and enhance MLLMs with visual tasks in a scalable fashion, we propose Task Preference Optimization (TPO), a novel method that utilizes differentiable task preferences derived from typical fine-grained visual tasks. TPO introduces learnable task tokens that establish connections between multiple task-specific heads and the MLLM. By leveraging rich visual labels during training, TPO significantly enhances the MLLM's multimodal capabilities and task-specific performance. Through multi-task co-training within TPO, we observe synergistic benefits that elevate individual task performance beyond what is achievable through single-task training methodologies. Our instantiation of this approach with VideoChat and LLaVA demonstrates an overall 14.6% improvement in multimodal performance compared to baseline models. Additionally, MLLM-TPO demonstrates robust zero-shot capabilities across various tasks, performing comparably to state-of-the-art supervised models. The code will be released at https://github.com/OpenGVLab/TPO

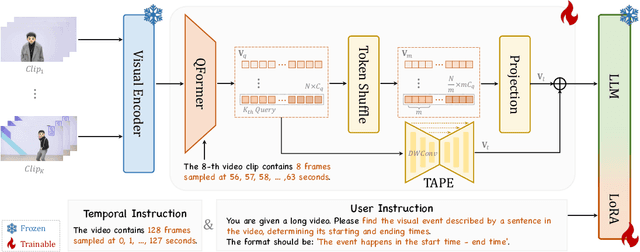

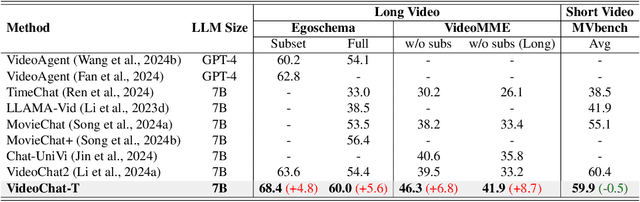

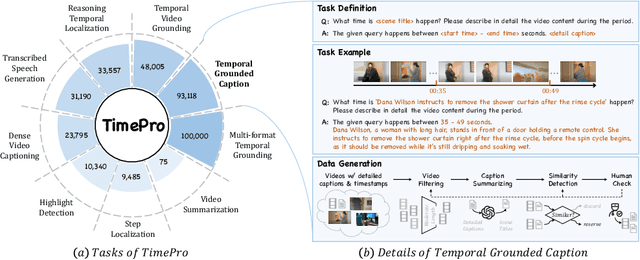

TimeSuite: Improving MLLMs for Long Video Understanding via Grounded Tuning

Oct 25, 2024

Abstract:Multimodal Large Language Models (MLLMs) have demonstrated impressive performance in short video understanding. However, understanding long-form videos still remains challenging for MLLMs. This paper proposes TimeSuite, a collection of new designs to adapt the existing short-form video MLLMs for long video understanding, including a simple yet efficient framework to process long video sequences, a high-quality video dataset for grounded tuning of MLLMs, and a carefully designed instruction tuning task to explicitly incorporate the grounding supervision in the traditional QA format. Specifically, based on VideoChat, we propose our long-video MLLM, coined as VideoChat-T, by implementing a token shuffling to compress long video tokens and introducing Temporal Adaptive Position Encoding (TAPE) to enhance the temporal awareness of visual representation. Meanwhile, we introduce TimePro, a comprehensive grounding-centric instruction tuning dataset composed of 9 tasks and 349k high-quality grounded annotations. Notably, we design a new instruction tuning task type, called Temporal Grounded Caption, to perform detailed video descriptions with the corresponding timestamp prediction. This explicit temporal location prediction will guide the MLLM to correctly attend to the visual content when generating descriptions, and thus reduce the hallucination risk caused by the LLMs. Experimental results demonstrate that our TimeSuite provides a successful solution to enhance the long video understanding capability of short-form MLLMs, achieving improvements of 5.6% and 6.8% on the benchmarks of Egoschema and VideoMME, respectively. In addition, VideoChat-T exhibits robust zero-shot temporal grounding capabilities, significantly outperforming the existing state-of-the-art MLLMs. After fine-tuning, it performs on par with the traditional supervised expert models.

An End-to-End Framework for Marketing Effectiveness Optimization under Budget Constraint

Feb 09, 2023

Abstract:Online platforms often incentivize consumers to improve user engagement and platform revenue. Since different consumers might respond differently to incentives, individual-level budget allocation is an essential task in marketing campaigns. Recent advances in this field often address the budget allocation problem using a two-stage paradigm: the first stage estimates the individual-level treatment effects using causal inference algorithms, and the second stage invokes integer programming techniques to find the optimal budget allocation solution. Since the objectives of these two stages might not be perfectly aligned, such a two-stage paradigm could hurt the overall marketing effectiveness. In this paper, we propose a novel end-to-end framework to directly optimize the business goal under budget constraints. Our core idea is to construct a regularizer to represent the marketing goal and optimize it efficiently using gradient estimation techniques. As such, the obtained models can learn to maximize the marketing goal directly and precisely. We extensively evaluate our proposed method in both offline and online experiments, and experimental results demonstrate that our method outperforms current state-of-the-art methods. Our proposed method is currently deployed to allocate marketing budgets for hundreds of millions of users on a short video platform and achieves significant business goal improvements. Our code will be publicly available.

Sill-Net: Feature Augmentation with Separated Illumination Representation

Feb 06, 2021

Abstract:For visual object recognition tasks, the illumination variations can cause distinct changes in object appearance and thus confuse the deep neural network based recognition models. Especially for some rare illumination conditions, collecting sufficient training samples could be time-consuming and expensive. To solve this problem, in this paper we propose a novel neural network architecture called Separating-Illumination Network (Sill-Net). Sill-Net learns to separate illumination features from images, and then during training we augment training samples with these separated illumination features in the feature space. Experimental results demonstrate that our approach outperforms current state-of-the-art methods in several object classification benchmarks.

Learning Fast Approximations of Sparse Nonlinear Regression

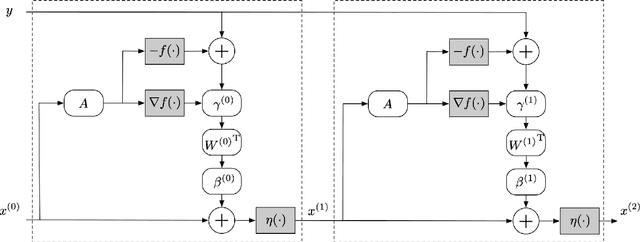

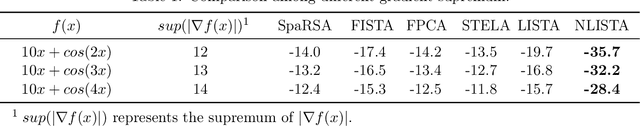

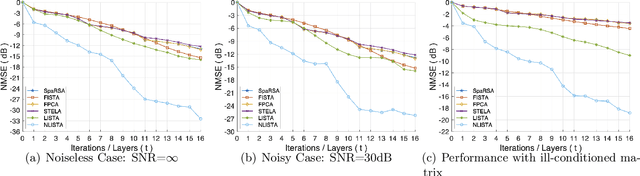

Oct 26, 2020

Abstract:The idea of unfolding iterative algorithms as deep neural networks has been widely applied in solving sparse coding problems, providing both solid theoretical analysis in convergence rate and superior empirical performance. However, for sparse nonlinear regression problems, a similar idea is rarely exploited due to the complexity of nonlinearity. In this work, we bridge this gap by introducing the Nonlinear Learned Iterative Shrinkage Thresholding Algorithm (NLISTA), which can attain a linear convergence under suitable conditions. Experiments on synthetic data corroborate our theoretical results and show our method outperforms state-of-the-art methods.
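
For readers unfamiliar with the iteration that learned unfolding methods build on, here is a minimal sketch of one step of the classical Iterative Shrinkage Thresholding Algorithm (ISTA) for the linear sparse coding problem. It is not NLISTA itself: the nonlinear model and the learned per-layer parameters of the paper are not reproduced, and the names `soft_threshold` and `ista_step`, along with the fixed `step` and `lam` values, are illustrative assumptions.

```python
def soft_threshold(v, theta):
    """Shrink each entry of v toward zero by theta (the proximal map of the L1 norm)."""
    return [max(abs(x) - theta, 0.0) * (1 if x > 0 else -1) for x in v]


def ista_step(A, y, x, step, lam):
    """One classical ISTA update for min_x 0.5 * ||y - A x||^2 + lam * ||x||_1.

    A is a list of rows, y and x are lists; step is the gradient step size.
    """
    # Residual r = y - A x
    r = [yi - sum(a * xj for a, xj in zip(row, x)) for row, yi in zip(A, y)]
    # Gradient step on the quadratic term: x + step * A^T r
    g = [xj + step * sum(A[i][j] * r[i] for i in range(len(A)))
         for j, xj in enumerate(x)]
    # Shrinkage enforces sparsity
    return soft_threshold(g, step * lam)


# Toy example with an identity dictionary: the small entry is zeroed out.
x_next = ista_step([[1.0, 0.0], [0.0, 1.0]], [3.0, 0.2], [0.0, 0.0], step=1.0, lam=0.5)
```

Unfolding treats a fixed number of such updates as network layers and learns quantities like the step size and threshold from data instead of fixing them by hand.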

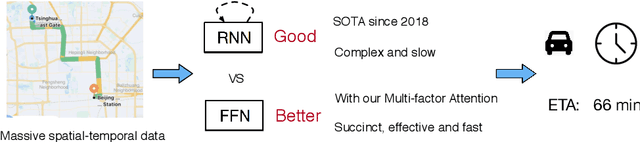

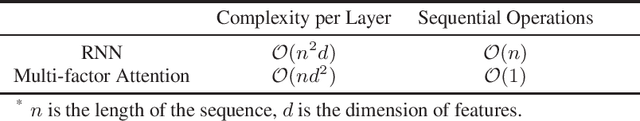

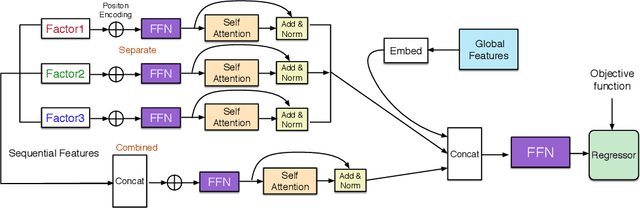

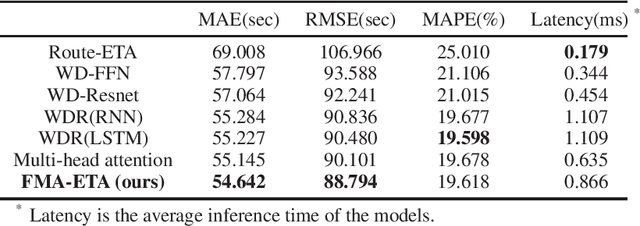

FMA-ETA: Estimating Travel Time Entirely Based on FFN With Attention

Jun 07, 2020

Abstract:Estimated time of arrival (ETA) is one of the most important services in intelligent transportation systems and has become a challenging spatial-temporal (ST) data mining task in recent years. Nowadays, deep learning based methods, specifically recurrent neural network (RNN) based ones, are adapted to model the ST patterns from massive data for ETA and have become the state of the art. However, RNNs suffer from slow training and inference speed, as their structure is unfriendly to parallel computing. To solve this problem, we propose a novel, brief and effective framework for ETA mainly based on feed-forward networks (FFN): FFN with Multi-factor self-Attention (FMA-ETA). The novel multi-factor self-attention mechanism is proposed to deal with different category features and aggregate the information purposefully. Extensive experimental results on a real-world vehicle travel dataset show that FMA-ETA is competitive with state-of-the-art methods in terms of prediction accuracy, with significantly better inference speed.
