Zhen Zhou

Let Experts Feel Uncertainty: A Multi-Expert Label Distribution Approach to Probabilistic Time Series Forecasting

Feb 04, 2026

Abstract:Time series forecasting in real-world applications requires both high predictive accuracy and interpretable uncertainty quantification. Traditional point prediction methods often fail to capture the inherent uncertainty in time series data, while existing probabilistic approaches struggle to balance computational efficiency with interpretability. We propose a novel Multi-Expert Learning Distributional Labels (LDL) framework that addresses these challenges through mixture-of-experts architectures with distributional learning capabilities. Our approach introduces two complementary methods: (1) Multi-Expert LDL, which employs multiple experts with different learned parameters to capture diverse temporal patterns, and (2) Pattern-Aware LDL-MoE, which explicitly decomposes time series into interpretable components (trend, seasonality, changepoints, volatility) through specialized sub-experts. Both frameworks extend traditional point prediction to distributional learning, enabling rich uncertainty quantification through Maximum Mean Discrepancy (MMD). We evaluate our methods on aggregated sales data derived from the M5 dataset, demonstrating superior performance compared to baseline approaches. The continuous Multi-Expert LDL achieves the best overall performance, while the Pattern-Aware LDL-MoE provides enhanced interpretability through component-wise analysis. Our frameworks successfully balance predictive accuracy with interpretability, making them suitable for real-world forecasting applications where both performance and actionable insights are crucial.
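To make the MMD term mentioned in this abstract concrete, here is a minimal sketch of a squared-MMD estimate between two one-dimensional samples. It assumes an RBF kernel and the biased (V-statistic) estimator; the function names and the `bandwidth` parameter are illustrative choices, not taken from the paper, whose exact kernel and loss formulation are not given here.

```python
import math


def rbf_kernel(x, y, bandwidth=1.0):
    """Gaussian (RBF) kernel between two scalars. The kernel choice is an assumption."""
    return math.exp(-((x - y) ** 2) / (2.0 * bandwidth ** 2))


def mmd_squared(xs, ys, bandwidth=1.0):
    """Biased (V-statistic) estimate of squared MMD between two 1-D samples."""

    def mean_kernel(a, b):
        # Average kernel value over all pairs drawn from a and b.
        total = sum(rbf_kernel(u, v, bandwidth) for u in a for v in b)
        return total / (len(a) * len(b))

    return mean_kernel(xs, xs) + mean_kernel(ys, ys) - 2.0 * mean_kernel(xs, ys)


# Hypothetical samples: draws from a predicted distribution vs. observed values.
predicted = [9.8, 10.1, 10.4, 9.9, 10.2]
observed = [12.0, 11.7, 12.3, 12.1, 11.9]
print(mmd_squared(predicted, predicted))  # identical samples: zero up to rounding
print(mmd_squared(predicted, observed))   # shifted samples: strictly positive
```

The estimate is zero when both samples coincide and grows as the two empirical distributions move apart, which is what makes it usable as a distribution-matching objective.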

Structure-constrained Language-informed Diffusion Model for Unpaired Low-dose Computed Tomography Angiography Reconstruction

Jan 28, 2026

Abstract:The application of iodinated contrast media (ICM) improves the sensitivity and specificity of computed tomography (CT) for a wide range of clinical indications. However, an overdose of ICM can cause problems such as kidney damage and life-threatening allergic reactions. Deep learning methods can generate normal-dose ICM CT images from low-dose ICM images, reducing the required dose while maintaining diagnostic power. However, existing methods struggle to achieve accurate enhancement with incompletely paired images, mainly because of the model's limited ability to recognize specific structures. To overcome this limitation, we propose a Structure-constrained Language-informed Diffusion Model (SLDM), a unified medical generation model that integrates structural synergy and spatial intelligence. First, the structural prior information of the image is extracted to constrain the model inference process, ensuring structural consistency during enhancement. Subsequently, a semantic supervision strategy with spatial intelligence is introduced, which integrates visual perception and spatial reasoning, prompting the model to achieve accurate enhancement. Finally, a subtraction angiography enhancement module is applied, which improves the contrast of the ICM agent region to a suitable interval for observation. Qualitative visual comparisons and quantitative results on several metrics demonstrate the effectiveness of our method in angiographic reconstruction for low-dose contrast medium CT angiography.

Hunyuan3D Studio: End-to-End AI Pipeline for Game-Ready 3D Asset Generation

Sep 16, 2025

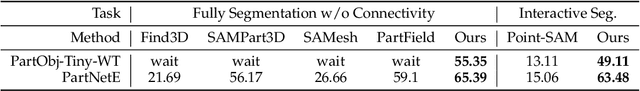

Abstract:The creation of high-quality 3D assets, a cornerstone of modern game development, has long been characterized by labor-intensive and specialized workflows. This paper presents Hunyuan3D Studio, an end-to-end AI-powered content creation platform designed to revolutionize the game production pipeline by automating and streamlining the generation of game-ready 3D assets. At its core, Hunyuan3D Studio integrates a suite of advanced neural modules (such as Part-level 3D Generation, Polygon Generation, Semantic UV, etc.) into a cohesive and user-friendly system. This unified framework allows for the rapid transformation of a single concept image or textual description into a fully-realized, production-quality 3D model complete with optimized geometry and high-fidelity PBR textures. We demonstrate that assets generated by Hunyuan3D Studio are not only visually compelling but also adhere to the stringent technical requirements of contemporary game engines, significantly reducing iteration time and lowering the barrier to entry for 3D content creation. By providing a seamless bridge from creative intent to technical asset, Hunyuan3D Studio represents a significant leap forward for AI-assisted workflows in game development and interactive media.

HAD: Hybrid Architecture Distillation Outperforms Teacher in Genomic Sequence Modeling

May 27, 2025

Abstract:Inspired by the great success of Masked Language Modeling (MLM) in the natural language domain, the paradigm of self-supervised pre-training and fine-tuning has also achieved remarkable progress in the field of DNA sequence modeling. However, previous methods often relied on massive pre-training data or large-scale base models with huge parameter counts, imposing a significant computational burden. To address this, many works attempted to use more compact models to achieve similar outcomes but still fell short by a considerable margin. In this work, we propose a Hybrid Architecture Distillation (HAD) approach, leveraging both distillation and reconstruction tasks for more efficient and effective pre-training. Specifically, we employ the NTv2-500M as the teacher model and devise a grouping masking strategy to align the feature embeddings of visible tokens while concurrently reconstructing the invisible tokens during MLM pre-training. To validate the effectiveness of our proposed method, we conducted comprehensive experiments on the Nucleotide Transformer Benchmark and Genomic Benchmark. Compared to models with similar parameter counts, our model achieved excellent performance. More surprisingly, on some sub-tasks it even surpassed the teacher model, nominally the distillation ceiling, which is more than 500× larger. Lastly, we utilize t-SNE for more intuitive visualization, which shows that our model can gain a sophisticated understanding of the intrinsic representation pattern in genomic sequences.

EPRecon: An Efficient Framework for Real-Time Panoptic 3D Reconstruction from Monocular Video

Sep 03, 2024

Abstract:Panoptic 3D reconstruction from a monocular video is a fundamental perceptual task in robotic scene understanding. However, existing efforts suffer from inefficiency in terms of inference speed and accuracy, limiting their practical applicability. We present EPRecon, an efficient real-time panoptic 3D reconstruction framework. Current volumetric-based reconstruction methods usually utilize multi-view depth map fusion to obtain scene depth priors, which is time-consuming and poses challenges to real-time scene reconstruction. To this end, we propose a lightweight module that directly estimates scene depth priors in a 3D volume by generating occupancy probabilities for all voxels, improving reconstruction quality. In addition, to infer richer panoptic features from occupied voxels, EPRecon extracts panoptic features from both voxel features and corresponding image features, obtaining more detailed and comprehensive instance-level semantic information and achieving more accurate segmentation results. Experimental results on the ScanNetV2 dataset demonstrate the superiority of EPRecon over current state-of-the-art methods in terms of both panoptic 3D reconstruction quality and real-time inference. Code is available at https://github.com/zhen6618/EPRecon.

Completely Occluded and Dense Object Instance Segmentation Using Box Prompt-Based Segmentation Foundation Models

Jan 16, 2024

Abstract:Completely occluded and dense object instance segmentation (IS) is an important and challenging task. Although current amodal IS methods can predict invisible regions of occluded objects, they struggle to directly predict completely occluded objects. For dense object IS, existing box-based methods are overly dependent on the performance of bounding box detection. In this paper, we propose CFNet, a coarse-to-fine IS framework for completely occluded and dense objects, which is based on box prompt-based segmentation foundation models (BSMs). Specifically, CFNet first detects oriented bounding boxes (OBBs) to distinguish instances and provide coarse localization information. Then, it predicts OBB prompt-related masks for fine segmentation. To predict completely occluded object instances, CFNet performs IS on occluders and utilizes prior geometric properties, which overcomes the difficulty of directly predicting completely occluded object instances. Furthermore, based on BSMs, CFNet reduces the dependence on bounding box detection performance, improving dense object IS performance. Moreover, we propose a novel OBB prompt encoder for BSMs. To make CFNet more lightweight, we perform knowledge distillation on it and introduce a Gaussian smoothing method for teacher targets. Experimental results demonstrate that CFNet achieves the best performance on both industrial and publicly available datasets.

Linear Gaussian Bounding Box Representation and Ring-Shaped Rotated Convolution for Oriented Object Detection

Nov 14, 2023

Abstract:In oriented object detection, current representations of oriented bounding boxes (OBBs) often suffer from the boundary discontinuity problem. Methods that design continuous regression losses do not fundamentally solve this problem. Although the Gaussian bounding box (GBB) representation avoids this problem, directly regressing GBB is susceptible to numerical instability. We propose linear GBB (LGBB), a novel OBB representation. By linearly transforming the elements of GBB, LGBB avoids the boundary discontinuity problem and has high numerical stability. In addition, existing convolution-based rotation-sensitive feature extraction methods only have local receptive fields, resulting in slow feature aggregation. We propose ring-shaped rotated convolution (RRC), which adaptively rotates feature maps to arbitrary orientations to extract rotation-sensitive features under a ring-shaped receptive field, rapidly aggregating features and contextual information. Experimental results demonstrate that LGBB and RRC achieve state-of-the-art performance. Furthermore, integrating LGBB and RRC into various models effectively improves detection accuracy.
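As a small illustration of why a Gaussian bounding box sidesteps boundary discontinuity, the sketch below converts an OBB `(cx, cy, w, h, theta)` into a 2-D Gaussian using the commonly used convention Σ = R · diag(w²/4, h²/4) · Rᵀ. This convention and the function name are assumptions for illustration. The specific linear transformation that defines LGBB is not stated in the abstract and is not reproduced here.

```python
import math


def obb_to_gaussian(cx, cy, w, h, theta):
    """Map an oriented box to (mean, covariance entries) of a 2-D Gaussian.

    Assumed convention: Sigma = R diag(w^2/4, h^2/4) R^T, with R the rotation
    by theta. Returns ((cx, cy), (a, b, c)) where Sigma = [[a, c], [c, b]].
    """
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    sw, sh = (w * w) / 4.0, (h * h) / 4.0
    a = sw * cos_t ** 2 + sh * sin_t ** 2
    b = sw * sin_t ** 2 + sh * cos_t ** 2
    c = (sw - sh) * cos_t * sin_t
    return (cx, cy), (a, b, c)


# The same physical box written two ways: swapping width and height while
# rotating by 90 degrees changes the OBB parameters abruptly, but the
# Gaussian parameters stay identical up to floating-point rounding.
box_a = obb_to_gaussian(0.0, 0.0, 4.0, 2.0, 0.3)
box_b = obb_to_gaussian(0.0, 0.0, 2.0, 4.0, 0.3 + math.pi / 2)
print(box_a)
print(box_b)
```

Because both parameterizations land on the same covariance, a regression target built from the Gaussian does not jump at the angular boundary.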

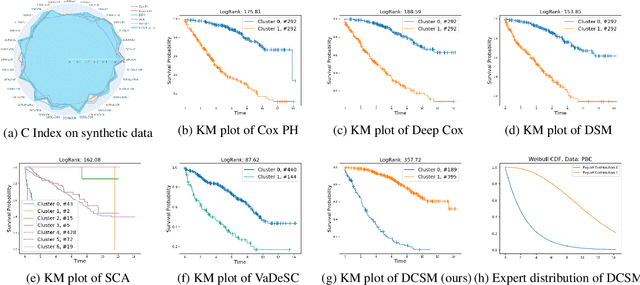

Deep Clustering Survival Machines with Interpretable Expert Distributions

Jan 27, 2023

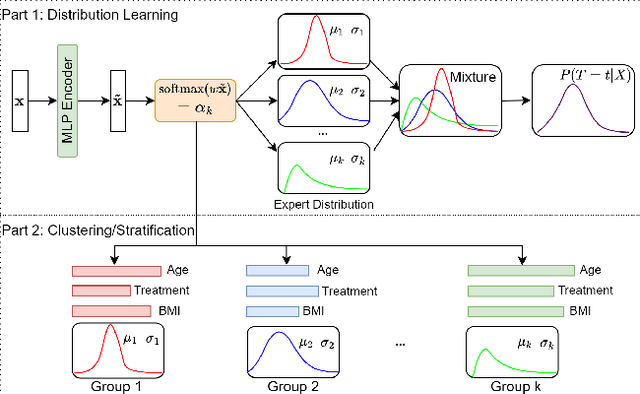

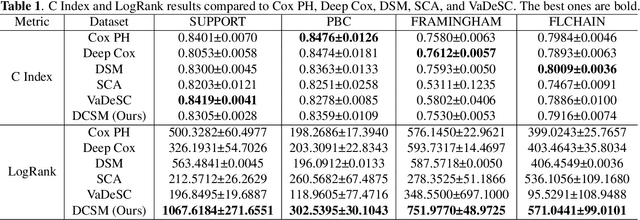

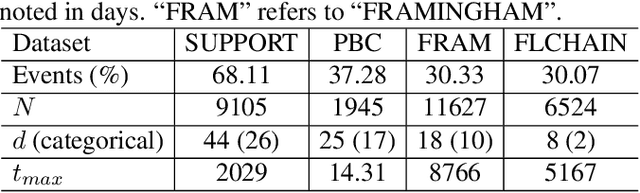

Abstract:Conventional survival analysis methods are typically ineffective at characterizing heterogeneity in the population, even though such information can assist predictive modeling. In this study, we propose a hybrid survival analysis method, referred to as deep clustering survival machines, that combines the discriminative and generative mechanisms. Similar to mixture models, we assume that the timing information of survival data is generatively described by a mixture of a certain number of parametric distributions, i.e., expert distributions. We discriminatively learn weights of the expert distributions for individual instances according to their features, such that each instance's survival information can be characterized by a weighted combination of the learned constant expert distributions. This method also facilitates interpretable subgrouping/clustering of all instances according to their associated expert distributions. Extensive experiments on both real and synthetic datasets have demonstrated that the method is capable of obtaining promising clustering results and competitive time-to-event prediction performance.
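The weighted-combination idea in this abstract can be sketched as follows. The sketch assumes Weibull expert distributions with fixed, shared parameters and softmax weights. The parameter values, the `EXPERTS` list, and the raw `logits` input (a stand-in for the output of a feature-based network) are all hypothetical and not taken from the paper.

```python
import math

# Hypothetical constant expert distributions, shared by all instances:
# each entry is (shape, scale) of a Weibull distribution.
EXPERTS = [(1.5, 10.0), (0.8, 40.0)]


def softmax(logits):
    """Numerically stable softmax over a list of scores."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def weibull_survival(t, shape, scale):
    """Weibull survival function S(t) = exp(-(t / scale) ** shape)."""
    return math.exp(-((t / scale) ** shape))


def mixture_survival(t, logits):
    """Instance-level survival: weighted sum of the shared expert curves."""
    weights = softmax(logits)
    return sum(w * weibull_survival(t, k, lam)
               for w, (k, lam) in zip(weights, EXPERTS))


def assign_cluster(logits):
    """Subgroup an instance by its highest-weighted expert."""
    weights = softmax(logits)
    return max(range(len(weights)), key=weights.__getitem__)


# Stand-in for per-instance scores produced from that instance's features.
instance_logits = [2.0, -1.0]
print(mixture_survival(5.0, instance_logits))
print(assign_cluster(instance_logits))
```

Only the weights vary per instance, so the experts themselves remain directly inspectable, and the dominant expert gives a natural cluster label.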

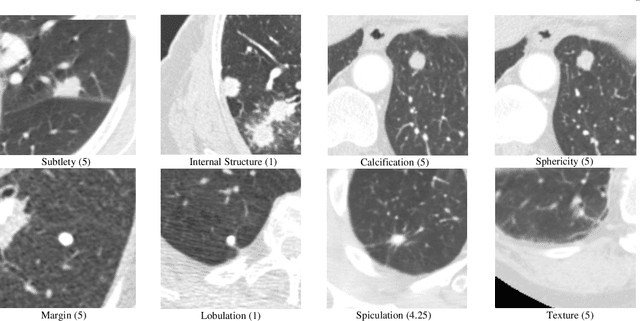

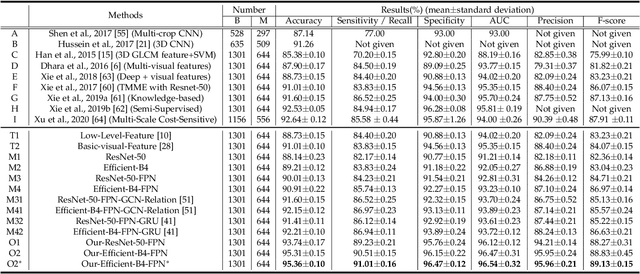

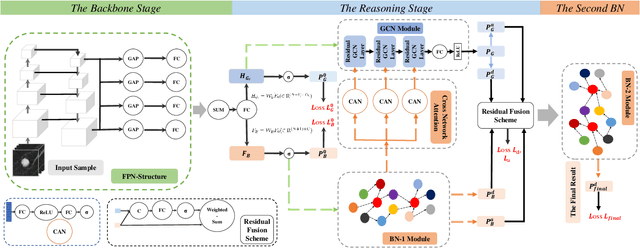

Diagnose Like a Radiologist: Hybrid Neuro-Probabilistic Reasoning for Attribute-Based Medical Image Diagnosis

Aug 19, 2022

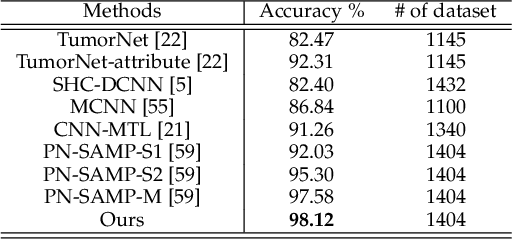

Abstract:During clinical practice, radiologists often use attributes, e.g. morphological and appearance characteristics of a lesion, to aid disease diagnosis. Effectively modeling attributes as well as all relationships involving attributes could boost the generalization ability and verifiability of medical image diagnosis algorithms. In this paper, we introduce a hybrid neuro-probabilistic reasoning algorithm for verifiable attribute-based medical image diagnosis. There are two parallel branches in our hybrid algorithm, a Bayesian network branch performing probabilistic causal relationship reasoning and a graph convolutional network branch performing more generic relational modeling and reasoning using a feature representation. Tight coupling between these two branches is achieved via a cross-network attention mechanism and the fusion of their classification results. We have successfully applied our hybrid reasoning algorithm to two challenging medical image diagnosis tasks. On the LIDC-IDRI benchmark dataset for benign-malignant classification of pulmonary nodules in CT images, our method achieves a new state-of-the-art accuracy of 95.36% and an AUC of 96.54%. Our method also achieves a 3.24% accuracy improvement on an in-house chest X-ray image dataset for tuberculosis diagnosis. Our ablation study indicates that our hybrid algorithm achieves a much better generalization performance than a pure neural network architecture under very limited training data.

Brain Network Construction and Classification Toolbox (BrainNetClass)

Jun 17, 2019

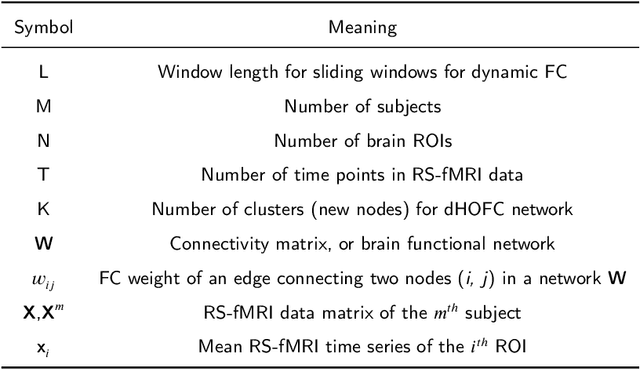

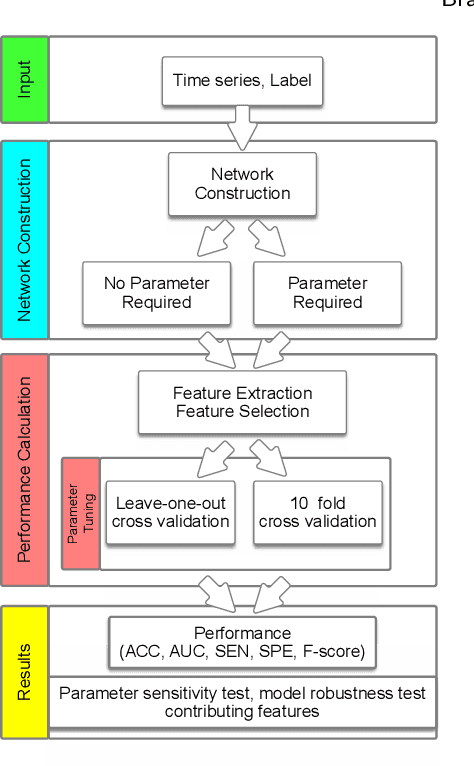

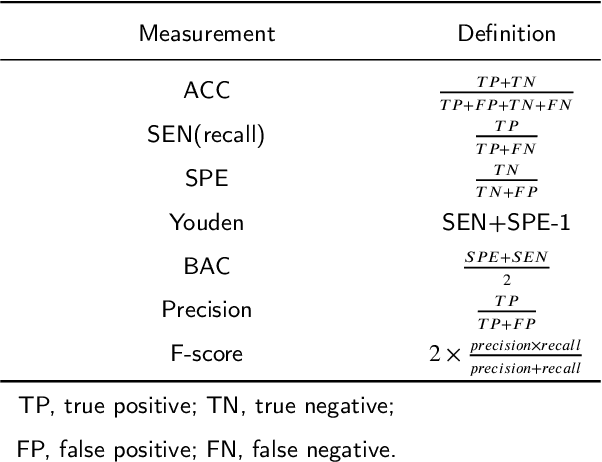

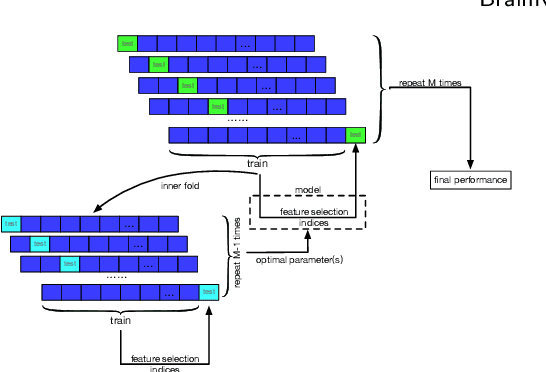

Abstract:Brain functional network analysis has become an increasingly used approach to understanding brain functions and diseases. Many network construction methods have been developed, yet the majority of studies still use static pairwise Pearson's correlation-based functional connectivity. The goal of this work is to introduce a toolbox, namely "Brain Network Construction and Classification" (BrainNetClass), to the field to promote more advanced brain network construction methods. It comprises various brain network construction methods, including some state-of-the-art methods that were recently developed to capture more complex interactions among brain regions, along with connectome feature extraction, reduction, and parameter optimization towards network-based individualized classification. BrainNetClass is a MATLAB-based, open-source, cross-platform toolbox with user-friendly graphical interfaces that lets cognitive and clinical neuroscientists perform rigorous computer-aided diagnosis with interpretable result presentations, even if they do not possess neuroimage computing and machine learning knowledge. We demonstrate the implementations of this toolbox on real resting-state functional MRI datasets. BrainNetClass (v1.0) can be downloaded from https://github.com/zzstefan/BrainNetClass.
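For reference, the baseline this abstract contrasts against, static pairwise Pearson's correlation-based functional connectivity, can be sketched in a few lines. The example is written in Python for illustration only; it does not use or reproduce the BrainNetClass MATLAB API, and the region time series are made-up values.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length series."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    norm_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    norm_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (norm_x * norm_y)


def functional_connectivity(region_series):
    """Static FC matrix: pairwise Pearson correlation over whole time series."""
    n = len(region_series)
    return [[pearson(region_series[i], region_series[j]) for j in range(n)]
            for i in range(n)]


# Made-up signals for three brain regions (one list per region).
signals = [
    [1.0, 2.0, 3.0, 4.0],
    [2.0, 4.0, 6.0, 8.0],   # perfectly correlated with region 0
    [4.0, 3.0, 2.0, 1.0],   # perfectly anti-correlated with region 0
]
for row in functional_connectivity(signals):
    print([round(v, 3) for v in row])
```

Each entry summarizes one pair of regions over the full scan, which is why this construction cannot express time-varying or higher-order interactions.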
