Yuan Qu

DOCR-Inspector: Fine-Grained and Automated Evaluation of Document Parsing with VLM

Dec 11, 2025

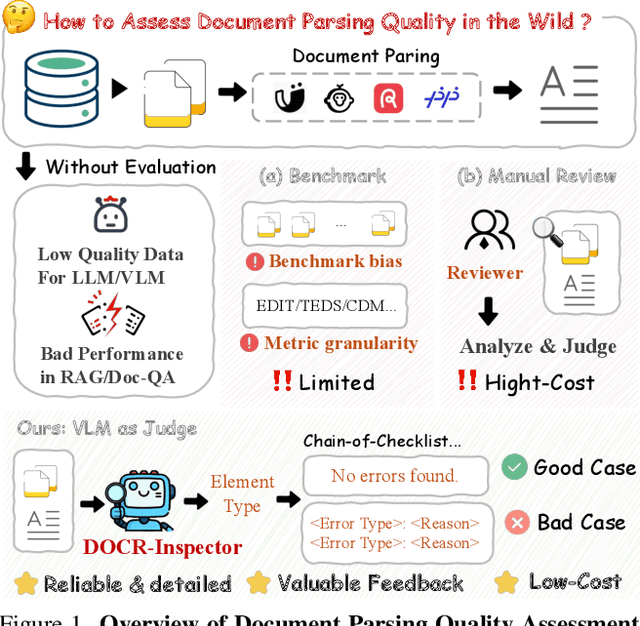

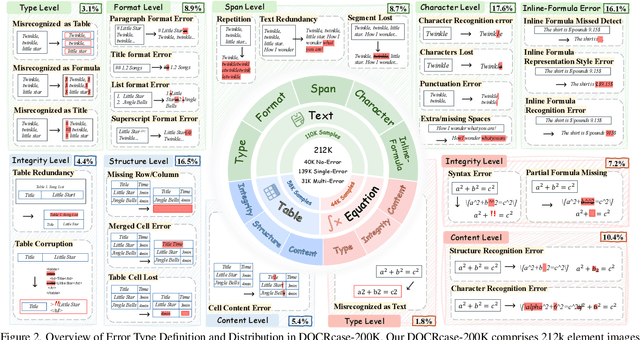

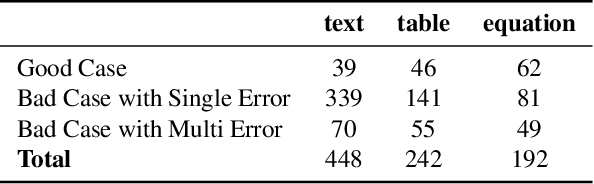

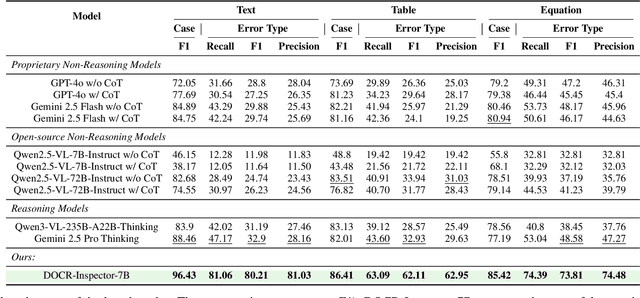

Abstract: Document parsing aims to transform unstructured PDF images into semi-structured data, facilitating the digitization and utilization of information in diverse domains. While vision language models (VLMs) have significantly advanced this task, achieving reliable, high-quality parsing in real-world scenarios remains challenging. Common practice is to select the top-performing model on standard benchmarks. However, these benchmarks may carry dataset-specific biases, leading to inconsistent model rankings and limited correlation with real-world performance. Moreover, benchmark metrics typically provide only overall scores, which can obscure distinct error patterns in the output. This raises a key challenge: how can we reliably and comprehensively assess document parsing quality in the wild? We address this problem with DOCR-Inspector, which formalizes document parsing assessment as fine-grained error detection and analysis. Leveraging VLM-as-a-Judge, DOCR-Inspector analyzes a document image and its parsed output, identifies all errors, assigns each to one of 28 predefined types, and produces a comprehensive quality assessment. To enable this capability, we construct DOCRcase-200K for training and propose the Chain-of-Checklist reasoning paradigm to model the hierarchical structure of parsing quality assessment. For empirical validation, we introduce DOCRcaseBench, a set of 882 real-world document parsing cases with manual annotations. On this benchmark, DOCR-Inspector-7B outperforms commercial models such as Gemini 2.5 Pro, as well as leading open-source models. Further experiments demonstrate that its quality assessments provide valuable guidance for refining parsing results, making DOCR-Inspector both a practical evaluator and a driver for advancing document parsing systems at scale. Model and code are released at: https://github.com/ZZZZZQT/DOCR-Inspector.

MinerU2.5: A Decoupled Vision-Language Model for Efficient High-Resolution Document Parsing

Sep 26, 2025

Abstract: We introduce MinerU2.5, a 1.2B-parameter document parsing vision-language model that achieves state-of-the-art recognition accuracy while maintaining exceptional computational efficiency. Our approach employs a coarse-to-fine, two-stage parsing strategy that decouples global layout analysis from local content recognition. In the first stage, the model performs efficient layout analysis on downsampled images to identify structural elements, circumventing the computational overhead of processing high-resolution inputs. In the second stage, guided by the global layout, it performs targeted content recognition on native-resolution crops extracted from the original image, preserving fine-grained details in dense text, complex formulas, and tables. To support this strategy, we developed a comprehensive data engine that generates diverse, large-scale training corpora for both pretraining and fine-tuning. Ultimately, MinerU2.5 demonstrates strong document parsing ability, achieving state-of-the-art performance on multiple benchmarks, surpassing both general-purpose and domain-specific models across various recognition tasks, while maintaining significantly lower computational overhead.
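
The coarse-to-fine strategy depends on mapping layout boxes found on a downsampled page back to the original pixels before cropping. A minimal Python sketch of that coordinate bookkeeping follows. The `Box` type, `thumbnail_scale`, `to_native_crop`, the 1024-pixel thumbnail limit and the 4-pixel padding are all illustrative assumptions, not MinerU2.5's actual code or settings, and the hard-coded stage-1 boxes stand in for a layout model's output.

```python
import math
from typing import NamedTuple


class Box(NamedTuple):
    """Axis-aligned box (x0, y0, x1, y1) in pixel coordinates."""
    x0: float
    y0: float
    x1: float
    y1: float


def thumbnail_scale(width: int, height: int, max_side: int) -> float:
    """Factor that shrinks the page so its longer side is at most max_side."""
    return min(1.0, max_side / max(width, height))


def to_native_crop(box: Box, scale: float, width: int, height: int, pad: int = 0) -> Box:
    """Map a box found on the thumbnail back to native-resolution pixels.

    Coordinates are divided by the scale, rounded outward (floor/ceil) so the
    crop never cuts into the region, padded, and clipped to the page.
    """
    return Box(
        max(0, math.floor(box.x0 / scale) - pad),
        max(0, math.floor(box.y0 / scale) - pad),
        min(width, math.ceil(box.x1 / scale) + pad),
        min(height, math.ceil(box.y1 / scale) + pad),
    )


# Stage 1 (stand-in): a layout model would see only the thumbnail and return boxes.
page_w, page_h = 2048, 4096
scale = thumbnail_scale(page_w, page_h, max_side=1024)
thumb_boxes = [Box(10, 20, 30.5, 40), Box(500, 1000, 512, 1024)]

# Stage 2: each box becomes a native-resolution crop for content recognition.
crops = [to_native_crop(b, scale, page_w, page_h, pad=4) for b in thumb_boxes]
print(crops)
```

Rounding outward (floor for the top-left corner, ceil for the bottom-right) is a deliberate choice in this sketch: a crop that is a pixel too large only adds margin, while one that is a pixel too small can clip a character or a formula subscript.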

OmniDocBench: Benchmarking Diverse PDF Document Parsing with Comprehensive Annotations

Dec 10, 2024

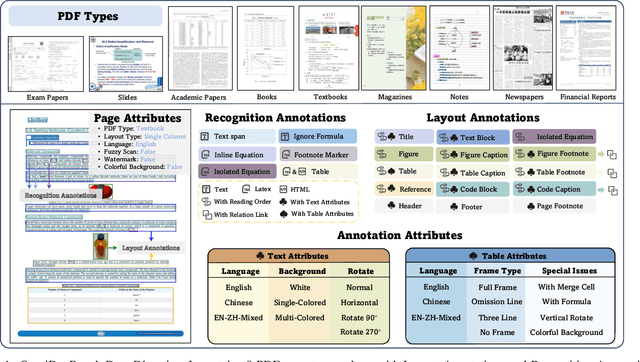

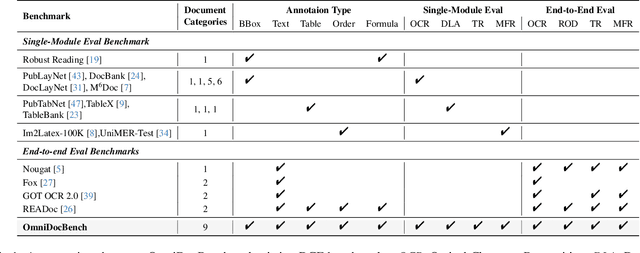

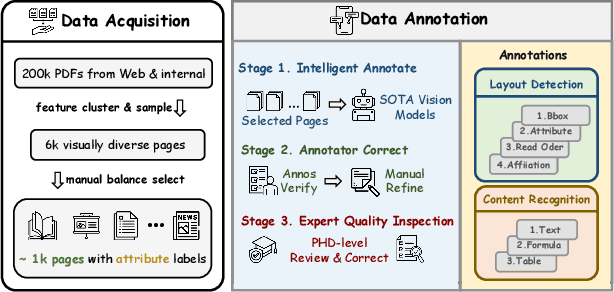

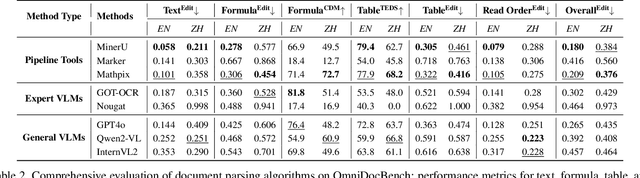

Abstract: Document content extraction is crucial in computer vision, especially for meeting the high-quality data needs of large language models (LLMs) and retrieval-augmented generation (RAG) technologies. However, current document parsing methods suffer from significant limitations in terms of diversity and comprehensive evaluation. To address these challenges, we introduce OmniDocBench, a novel multi-source benchmark designed to advance automated document content extraction. OmniDocBench includes a meticulously curated and annotated high-quality evaluation dataset comprising nine diverse document types, such as academic papers, textbooks, and slides. Our benchmark provides a flexible and comprehensive evaluation framework with 19 layout category labels and 14 attribute labels, enabling multi-level assessments across entire datasets, individual modules, or specific data types. Using OmniDocBench, we perform an exhaustive comparative analysis of existing modular pipelines and multimodal end-to-end methods, highlighting their limitations in handling document diversity and ensuring fair evaluation. OmniDocBench establishes a robust, diverse, and fair evaluation standard for the document content extraction field, offering crucial insights for future advancements and fostering the development of document parsing technologies. The code and dataset are available at https://github.com/opendatalab/OmniDocBench.
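
The abstract does not spell out the scoring rules behind its multi-level assessments. As a hedged illustration, the Python sketch below assumes a normalized edit distance between predicted and ground-truth text for each page (0 is a perfect match) and a hypothetical `doc_type` attribute per page, then averages the same per-page scores over the whole set and within each attribute value. The field names `pred`, `gt` and `doc_type` and the toy data are invented for the example.

```python
from collections import defaultdict
from statistics import mean


def levenshtein(a: str, b: str) -> int:
    """Edit distance with unit-cost insertions, deletions and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                # delete ca
                           cur[j - 1] + 1,             # insert cb
                           prev[j - 1] + (ca != cb)))  # substitute (free if equal)
        prev = cur
    return prev[-1]


def normalized_edit_distance(pred: str, gt: str) -> float:
    """Edit distance divided by the longer length: 0.0 is a perfect match."""
    if not pred and not gt:
        return 0.0
    return levenshtein(pred, gt) / max(len(pred), len(gt))


def multi_level_report(pages):
    """Average per-page scores over the whole set and within each doc_type."""
    overall, by_type = [], defaultdict(list)
    for page in pages:
        score = normalized_edit_distance(page["pred"], page["gt"])
        overall.append(score)
        by_type[page["doc_type"]].append(score)
    return mean(overall), {t: mean(s) for t, s in by_type.items()}


pages = [  # invented toy data
    {"doc_type": "slides", "pred": "kitten", "gt": "sitting"},
    {"doc_type": "textbook", "pred": "abc", "gt": "abc"},
    {"doc_type": "textbook", "pred": "abcd", "gt": "abce"},
]
overall, per_type = multi_level_report(pages)
print(round(overall, 3), {t: round(s, 3) for t, s in per_type.items()})
```

Scoring each page once and regrouping the same scores is what lets one evaluation run answer questions at several levels without recomputing anything.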

UMSPU: Universal Multi-Size Phase Unwrapping via Mutual Self-Distillation and Adaptive Boosting Ensemble Segmenters

Dec 07, 2024

Abstract: Spatial phase unwrapping is a key technique for extracting phase information to obtain 3D morphology and other features. Modern industrial measurement scenarios demand high precision, large image sizes, and high speed. However, conventional methods struggle with noise resistance and processing speed. Current deep learning methods are limited by receptive field size and sparse semantic information, making them ineffective for large images. To address this issue, we propose a mutual self-distillation (MSD) mechanism and adaptive boosting ensemble segmenters to construct a universal multi-size phase unwrapping network (UMSPU). MSD performs hierarchical attention refinement and achieves cross-layer collaborative learning through bidirectional distillation, ensuring fine-grained semantic representation across image sizes. The adaptive boosting ensemble segmenters combine weak segmenters with different receptive fields into a strong one, ensuring stable segmentation across spatial frequencies. Experimental results show that UMSPU overcomes image size limitations, achieving high precision across image sizes ranging from 256×256 to 2048×2048 (an 8-fold increase in side length). It also outperforms existing methods in speed, robustness, and generalization. Its practicality is further validated in structured light imaging and InSAR. We believe that UMSPU offers a universal solution for phase unwrapping, with broad potential for industrial applications.
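
Phase unwrapping recovers a continuous phase from values wrapped into [-π, π): the true phase equals the wrapped phase plus 2πk for an integer wrap count k. For context only, the Python sketch below shows the classical one-dimensional approach (Itoh's method), not UMSPU. It is exact only when the true phase changes by less than π between samples and the data are noise-free, which illustrates why noise is a weak point of conventional methods. It also recovers the per-sample integer k; treating k as a per-pixel class label is an assumption about how segmentation-based unwrapping networks are commonly framed, not a description of UMSPU's internals.

```python
import math


def wrap(phi: float) -> float:
    """Wrap a phase value into [-pi, pi)."""
    return (phi + math.pi) % (2 * math.pi) - math.pi


def unwrap_1d(wrapped):
    """Itoh's method: accumulate re-wrapped differences between neighbours.

    The result is defined up to a constant 2*pi*k fixed by the first sample.
    Correct only if the true phase changes by less than pi per sample and the
    samples are noise-free; noise can flip a difference by 2*pi, and that
    error then propagates to every later sample.
    """
    out = [wrapped[0]]
    for prev, cur in zip(wrapped, wrapped[1:]):
        out.append(out[-1] + wrap(cur - prev))
    return out


true_phase = [0.5 * k for k in range(30)]  # ramp up to 14.5 rad, step 0.5 < pi
wrapped = [wrap(p) for p in true_phase]
recovered = unwrap_1d(wrapped)

# Integer wrap count per sample: recovered = wrapped + 2*pi*k.
wrap_count = [round((u - w) / (2 * math.pi)) for u, w in zip(recovered, wrapped)]
print(wrap_count)
print(max(abs(u - t) for u, t in zip(recovered, true_phase)))
```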

MinerU: An Open-Source Solution for Precise Document Content Extraction

Sep 27, 2024

Abstract: Document content analysis has been a crucial research area in computer vision. Despite significant advancements in methods such as OCR, layout detection, and formula recognition, existing open-source solutions struggle to consistently deliver high-quality content extraction due to the diversity in document types and content. To address these challenges, we present MinerU, an open-source solution for high-precision document content extraction. MinerU leverages the sophisticated PDF-Extract-Kit models to extract content from diverse documents effectively and employs finely tuned preprocessing and postprocessing rules to ensure the accuracy of the final results. Experimental results demonstrate that MinerU consistently achieves high performance across various document types, significantly enhancing the quality and consistency of content extraction. The MinerU open-source project is available at https://github.com/opendatalab/MinerU.

InternLM2 Technical Report

Mar 26, 2024

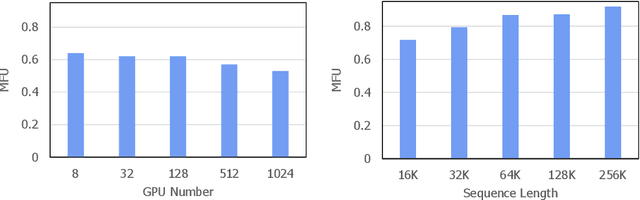

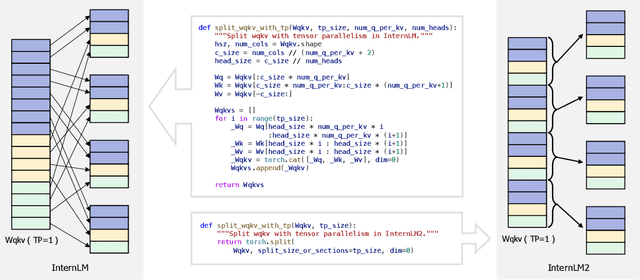

Abstract: The evolution of Large Language Models (LLMs) like ChatGPT and GPT-4 has sparked discussions on the advent of Artificial General Intelligence (AGI). However, replicating such advancements in open-source models has been challenging. This paper introduces InternLM2, an open-source LLM that, through innovative pre-training and optimization techniques, outperforms its predecessors in comprehensive evaluations across 6 dimensions and 30 benchmarks, in long-context modeling, and in open-ended subjective evaluations. The pre-training process of InternLM2 is meticulously detailed, highlighting the preparation of diverse data types including text, code, and long-context data. InternLM2 efficiently captures long-term dependencies: it is initially trained on 4k tokens before advancing to 32k tokens in the pre-training and fine-tuning stages, and it exhibits remarkable performance on the 200k "Needle-in-a-Haystack" test. InternLM2 is further aligned using Supervised Fine-Tuning (SFT) and a novel Conditional Online Reinforcement Learning from Human Feedback (COOL RLHF) strategy that addresses conflicting human preferences and reward hacking. By releasing InternLM2 models in different training stages and model sizes, we provide the community with insights into the model's evolution.
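
The "Needle-in-a-Haystack" test hides a short fact at a chosen depth inside a long distractor context and checks whether the model can retrieve it. A minimal construction sketch in Python follows. It counts filler sentences rather than tokens for simplicity, and the function names, filler text, and substring-match grading are illustrative assumptions rather than InternLM2's evaluation harness; a hard-coded reply stands in for the model's answer.

```python
def build_haystack(filler, needle, n_sentences, depth):
    """Return (context, index) with `needle` inserted at relative `depth`.

    depth=0.0 puts the needle first and depth=1.0 puts it last. Length is
    counted in filler sentences for simplicity; a real test counts tokens.
    """
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0 and 1")
    sentences = [filler[i % len(filler)] for i in range(n_sentences)]
    index = round(depth * n_sentences)
    sentences.insert(index, needle)
    return " ".join(sentences), index


def is_retrieved(model_answer, expected):
    """Crude grading: case-insensitive substring match."""
    return expected.lower() in model_answer.lower()


filler = ["The grass is green.", "The sky is blue.", "The sun is warm."]
needle = "The secret number is 7481."
context, idx = build_haystack(filler, needle, n_sentences=10, depth=0.5)
prompt = context + "\nQuestion: What is the secret number?"
# A model would answer `prompt`; a hard-coded reply stands in for it here.
print(idx, is_retrieved("The secret number is 7481.", "7481"))
```

Sweeping `depth` and context length over a grid and recording pass/fail per cell gives the familiar heat-map view of long-context retrieval.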

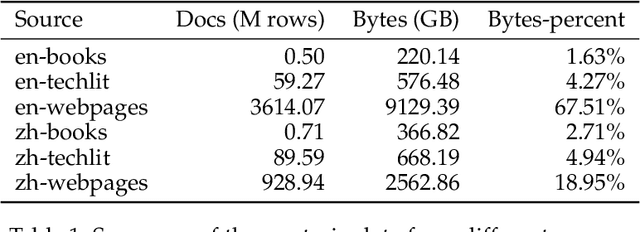

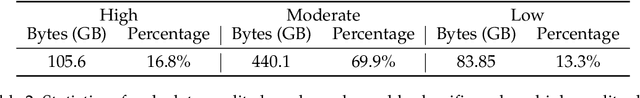

WanJuan-CC: A Safe and High-Quality Open-sourced English Webtext Dataset

Mar 12, 2024

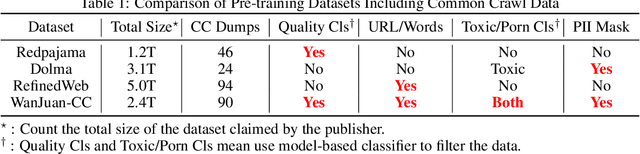

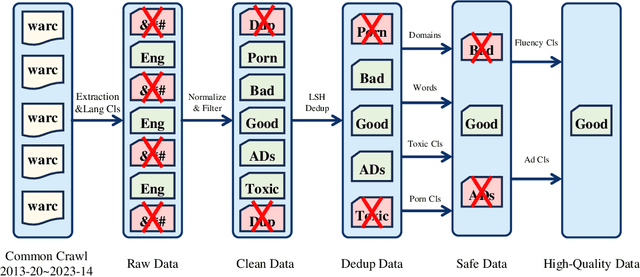

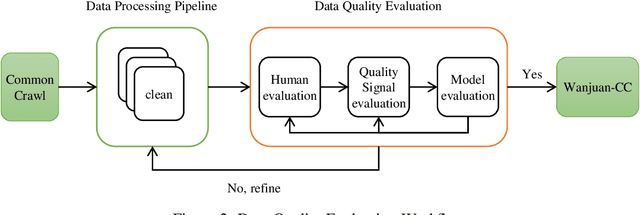

Abstract: This paper presents WanJuan-CC, a safe and high-quality open-sourced English webtext dataset derived from Common Crawl data. The study addresses the challenges of constructing large-scale pre-training datasets for language models, which require vast amounts of high-quality data. A comprehensive process was designed to handle Common Crawl data, including extraction, heuristic rule filtering, fuzzy deduplication, content safety filtering, and data quality filtering. From approximately 68 billion original English documents, we obtained 2.22T tokens of safe data and selected 1.0T tokens of high-quality data as part of WanJuan-CC. We have open-sourced 100B tokens from this dataset. The paper also provides statistical information related to data quality, enabling users to select appropriate data according to their needs. To evaluate the quality and utility of the dataset, we trained 1B-parameter and 3B-parameter models using WanJuan-CC and another dataset, RefinedWeb. Results show that WanJuan-CC performs better on validation datasets and downstream tasks.
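
The pipeline includes fuzzy deduplication, but the abstract does not name the algorithm. MinHash over character shingles is one widely used technique for near-duplicate detection and is shown below as an assumption, not as the paper's exact method. The shingle size of 5, the 64 salted BLAKE2b hash functions, the 0.8 similarity threshold and all function names are illustrative choices.

```python
import hashlib


def shingles(text, k=5):
    """Set of character k-grams of lower-cased, whitespace-normalised text."""
    t = " ".join(text.lower().split())
    return {t[i:i + k] for i in range(max(1, len(t) - k + 1))}


def minhash(shingle_set, num_perm=64):
    """MinHash signature: the minimum of each of num_perm seeded 64-bit hashes."""
    signature = []
    for seed in range(num_perm):
        salt = seed.to_bytes(8, "little")  # a different salt acts as a different hash function
        signature.append(min(
            int.from_bytes(hashlib.blake2b(s.encode(), digest_size=8, salt=salt).digest(), "little")
            for s in shingle_set
        ))
    return signature


def estimated_jaccard(sig_a, sig_b):
    """Fraction of matching slots, an unbiased estimate of Jaccard similarity."""
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)


def fuzzy_dedup(docs, threshold=0.8, num_perm=64):
    """Keep a document only if it is not a near-duplicate of any kept one.

    Pairwise comparison is O(n^2); web-scale pipelines add LSH banding instead.
    """
    kept, signatures = [], []
    for doc in docs:
        sig = minhash(shingles(doc), num_perm)
        if all(estimated_jaccard(sig, s) < threshold for s in signatures):
            kept.append(doc)
            signatures.append(sig)
    return kept


docs = [
    "The quick brown fox jumps over the lazy dog.",
    "The quick brown fox jumps over the lazy dog!",  # near-duplicate
    "Spatial phase unwrapping recovers continuous phase maps.",
]
print(fuzzy_dedup(docs))
```

Exact hashing would keep both fox sentences because a single character differs; comparing fixed-size signatures is what makes "almost the same" detectable cheaply.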

Quantification of cervical elasticity during pregnancy based on transvaginal ultrasound imaging and stress measurement

Mar 10, 2023

Abstract: Objective: Strain elastography and shear wave elastography are two commonly used methods to quantify cervical elasticity; however, they have limitations. Strain elastography is effective in showing tissue elasticity distribution in a single image, but the absence of stress information makes it difficult to compare results acquired in different imaging sessions. Shear wave elastography is effective in measuring shear wave speed (an intrinsic tissue property correlated with elasticity) in relatively homogeneous tissue, such as the liver. However, in the inhomogeneous tissue of the cervix, the shear wave speed measurement is less robust. To overcome these limitations, we develop a quantitative cervical elastography system by adding a stress sensor to an ultrasound imaging system. Methods: In an imaging session for quantitative cervical elastography, we use the transvaginal ultrasound imaging system to record B-mode images of the cervix showing its deformation and use the stress sensor to record the probe-surface stress simultaneously. We develop a correlation-based automatic feature tracking algorithm to quantify the deformation, from which the strain is computed. After each imaging session, we calibrate the stress sensor and transform its measurement to true stress. Applying a linear regression to the stress and strain, we obtain an approximation of the cervical Young's modulus. Results: We validate the accuracy and robustness of this elastography system using phantom experiments. Applying this system to pregnant participants, we observe significant softening of the cervix during pregnancy (p-value < 0.001), with the cervical Young's modulus decreasing by 3.95% per week. We estimate the geometric mean values of cervical Young's moduli during the first (11 to 13 weeks), second, and third trimesters to be 13.07 kPa, 7.59 kPa, and 4.40 kPa, respectively.
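
The Methods step of applying a linear regression to stress and strain amounts to taking the slope of the stress-strain line as the modulus, since stress = E × strain for a linear-elastic material. A minimal Python sketch follows, assuming ordinary least squares with an intercept (the abstract does not say whether the fit is forced through the origin) and using synthetic numbers; `fit_youngs_modulus` is a hypothetical name. The trimester values are reported as geometric means, which the standard-library `statistics.geometric_mean` computes.

```python
from statistics import geometric_mean


def fit_youngs_modulus(strain, stress_kpa):
    """Slope of an ordinary least-squares line of stress (kPa) on strain.

    For a linear-elastic material stress = E * strain, so the slope
    approximates Young's modulus E in kPa (strain is dimensionless).
    """
    n = len(strain)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_e = sum(strain) / n
    mean_s = sum(stress_kpa) / n
    s_xy = sum((e - mean_e) * (s - mean_s) for e, s in zip(strain, stress_kpa))
    s_xx = sum((e - mean_e) ** 2 for e in strain)
    if s_xx == 0:
        raise ValueError("strain must vary across samples")
    return s_xy / s_xx


# Synthetic session: 10 kPa modulus plus a small constant stress offset.
strain = [0.00, 0.02, 0.04, 0.06, 0.08]
stress = [10.0 * e + 0.1 for e in strain]
print(fit_youngs_modulus(strain, stress))

# Group summary across participants (synthetic moduli in kPa).
print(geometric_mean([8.0, 18.0]))
```

Fitting with an intercept lets a constant offset, such as residual sensor bias, land in the intercept instead of distorting the slope; a geometric mean suits moduli that vary multiplicatively between participants.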