Ying Lu

Stain-aware Domain Alignment for Imbalance Blood Cell Classification

Dec 04, 2024

Abstract: Blood cell identification is critical for hematological analysis, as it aids physicians in diagnosing various blood-related diseases. In real-world scenarios, blood cell image datasets often exhibit domain shift and data imbalance, both of which make accurate blood cell identification harder. To address these issues, we propose SADA, a novel blood cell classification method based on stain-aware domain alignment. The primary objective of this work is to mine domain-invariant features in the presence of domain shift and data imbalance. To this end, we propose a stain-based augmentation approach and a local alignment constraint to learn domain-invariant features. Furthermore, we propose a domain-invariant supervised contrastive learning strategy to capture discriminative features. We decouple training into two stages, domain-invariant feature learning and classification training, which alleviates the problem of data imbalance. Experimental results on four public blood cell datasets and a private real dataset collected from the Third Affiliated Hospital of Sun Yat-sen University demonstrate that SADA achieves a new state of the art, outperforming existing cutting-edge methods by a large margin. The source code is available at https://github.com/AnoK3111/SADA.

Domain-invariant Representation Learning via Segment Anything Model for Blood Cell Classification

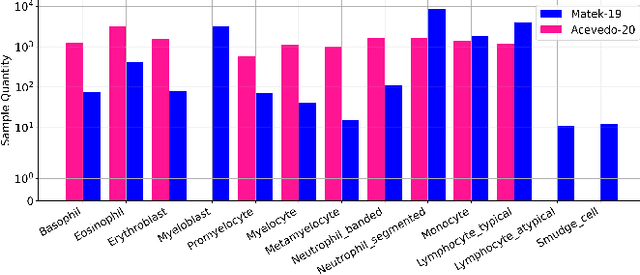

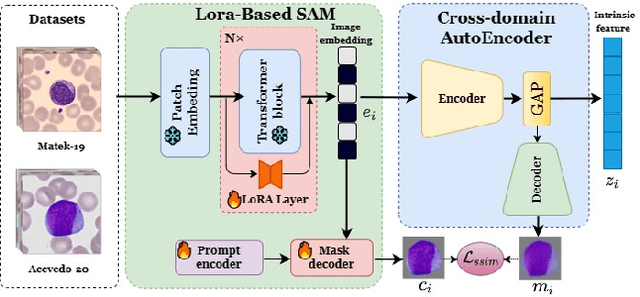

Aug 14, 2024

Abstract: Accurate classification of blood cells is of vital significance in the diagnosis of hematological disorders. However, in real-world scenarios, domain shifts caused by variability in laboratory procedures and settings lead to a rapid deterioration of a model's generalization performance. To address this issue, we propose a novel framework of domain-invariant representation learning (DoRL) via the Segment Anything Model (SAM) for blood cell classification. DoRL comprises two main components: a LoRA-based SAM (LoRA-SAM) and a cross-domain autoencoder (CAE). The advantage of DoRL is that it can extract domain-invariant representations from various blood cell datasets in an unsupervised manner. Specifically, we first leverage the large-scale foundation model SAM, fine-tuned with LoRA, to learn general image embeddings and segment blood cells. We then introduce the CAE to learn domain-invariant representations across datasets from different domains while mitigating image artifacts. To validate the effectiveness of the domain-invariant representations, we employ five widely used machine learning classifiers to construct blood cell classification models. Experimental results on two public blood cell datasets and a private real dataset demonstrate that DoRL achieves a new state-of-the-art cross-domain performance, surpassing existing methods by a significant margin. The source code is available at https://github.com/AnoK3111/DoRL.

Towards Cross-Domain Single Blood Cell Image Classification via Large-Scale LoRA-based Segment Anything Model

Aug 13, 2024

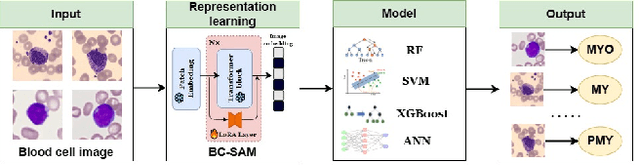

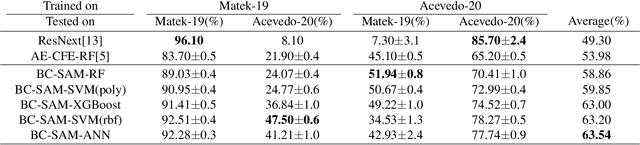

Abstract: Accurate classification of blood cells plays a vital role in hematological analysis, as it aids physicians in diagnosing various medical conditions. In this study, we present BC-SAM, a novel approach for classifying blood cell images. BC-SAM leverages the large-scale foundation model Segment Anything Model (SAM) and fine-tunes it with LoRA, allowing it to extract general image embeddings from blood cell images. To enhance the applicability of BC-SAM across different blood cell image datasets, we introduce an unsupervised cross-domain autoencoder that focuses on learning intrinsic features while suppressing artifacts in the images. To assess the performance of BC-SAM, we employ four widely used machine learning classifiers (Random Forest, Support Vector Machine, Artificial Neural Network, and XGBoost) to construct blood cell classification models and compare them against existing state-of-the-art methods. Experiments on two publicly available blood cell datasets (Matek-19 and Acevedo-20) demonstrate that BC-SAM achieves a new state-of-the-art result, surpassing the baseline methods by a significant margin. The source code of this paper is available at https://github.com/AnoK3111/BC-SAM.

Scalable Radar-based ITS: Self-localization and Occupancy Heat Map for Traffic Analysis

Apr 01, 2024

Abstract: 4D mmWave radar sensors are well suited for city-scale Intelligent Transportation Systems (ITS) given their long sensing range, weatherproof functionality, simple mechanical design, and low manufacturing cost. In this paper, we investigate radar-based ITS for scalable traffic analysis. Localizing these radar sensors at city scale is a fundamental task in ITS, and it requires more effort for mobile ITS setups. To address this task, we propose a self-localization approach that matches two descriptions of the "road": one from the geometry of the motion trajectories of cumulatively observed vehicles, and the other from an aerial laser scan. An ICP (iterative closest point) algorithm registers the motion trajectories to the road section of the laser scan to estimate the sensor pose. We evaluate the results and show that the approach outperforms other map-based radar localization methods, especially for orientation estimation. Beyond the localization result, we project radar sensor data onto the city-scale laser scan and generate a scalable occupancy heat map as a traffic analysis tool. This is demonstrated using two radar sensors monitoring an urban area in the real world.
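The registration step described in this abstract can be illustrated with a minimal 2D point-to-point ICP. This is only a sketch under stated assumptions, not the authors' implementation: the function names (`best_rigid_2d`, `transform`, `icp_2d`), the toy point sets, and the pose values are all hypothetical, and a real system would work with dense trajectory and laser-scan data rather than six hand-picked points.

```python
import math

def best_rigid_2d(src, dst):
    """Least-squares rotation angle and translation mapping paired src points onto dst."""
    n = len(src)
    cxs, cys = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    cxd, cyd = sum(p[0] for p in dst) / n, sum(p[1] for p in dst) / n
    s_cos = s_sin = 0.0
    for (xs, ys), (xd, yd) in zip(src, dst):
        ax, ay = xs - cxs, ys - cys          # centered source point
        bx, by = xd - cxd, yd - cyd          # centered destination point
        s_cos += ax * bx + ay * by
        s_sin += ax * by - ay * bx
    theta = math.atan2(s_sin, s_cos)
    c, s = math.cos(theta), math.sin(theta)
    # Translation aligns the rotated source centroid with the destination centroid.
    return theta, cxd - (c * cxs - s * cys), cyd - (s * cxs + c * cys)

def transform(points, theta, tx, ty):
    """Rotate points by theta and then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]

def icp_2d(source, target, iterations=20):
    """Estimate the pose (theta, tx, ty) that registers source onto target."""
    theta_total, tx_total, ty_total = 0.0, 0.0, 0.0
    current = list(source)
    for _ in range(iterations):
        # Match every current point to its nearest target point.
        matched = [min(target, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)
                   for p in current]
        theta, tx, ty = best_rigid_2d(current, matched)
        current = transform(current, theta, tx, ty)
        # Compose the incremental pose with the accumulated pose.
        c, s = math.cos(theta), math.sin(theta)
        tx_total, ty_total = (c * tx_total - s * ty_total + tx,
                              s * tx_total + c * ty_total + ty)
        theta_total += theta
    return theta_total, tx_total, ty_total

# Hypothetical data: an L-shaped "trajectory" in the sensor frame, and the same
# shape placed in the map frame by an assumed true pose.
trajectory = [(0.0, 0.0), (2.0, 0.0), (4.0, 0.0), (6.0, 0.0), (6.0, 2.0), (6.0, 4.0)]
true_pose = (0.05, 0.3, -0.2)
road_points = transform(trajectory, *true_pose)
estimated_pose = icp_2d(trajectory, road_points)
```

Nearest-neighbor matching only finds the right correspondences when the initial misalignment is small relative to the point spacing, as it is in this toy case; larger offsets would need a coarse initial pose.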

Opportunities and challenges in the application of large artificial intelligence models in radiology

Mar 24, 2024

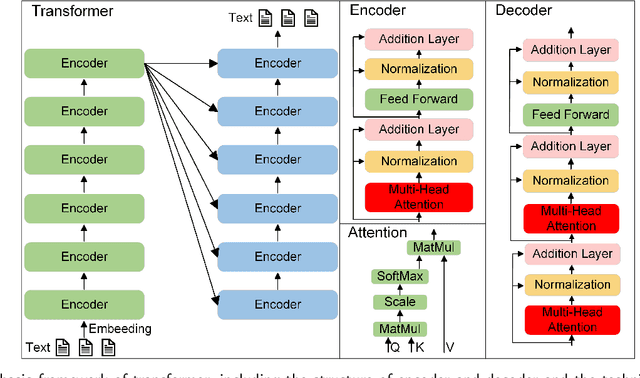

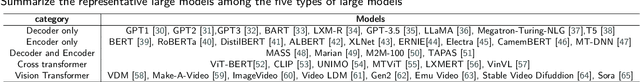

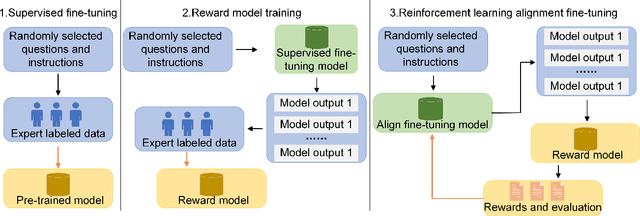

Abstract: Following the release of ChatGPT, research and development of large artificial intelligence (AI) models has surged worldwide. As people enjoy the convenience these models bring, more and more large models for specialized fields are being proposed, especially in radiological imaging. This article first introduces the development history of large models, their technical details and workflow, and the working principles of multimodal large models and of video-generation large models. Second, we summarize the latest research progress of large AI models in radiology education, radiology report generation, and unimodal and multimodal radiology applications. Finally, this paper summarizes some of the challenges facing large AI models in radiology, with the aim of better promoting rapid advancement in the field of radiography.

Developing A Fair Individualized Polysocial Risk Score (iPsRS) for Identifying Increased Social Risk of Hospitalizations in Patients with Type 2 Diabetes (T2D)

Sep 05, 2023

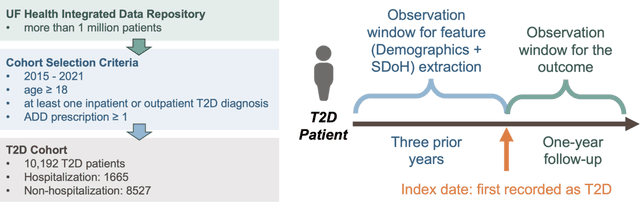

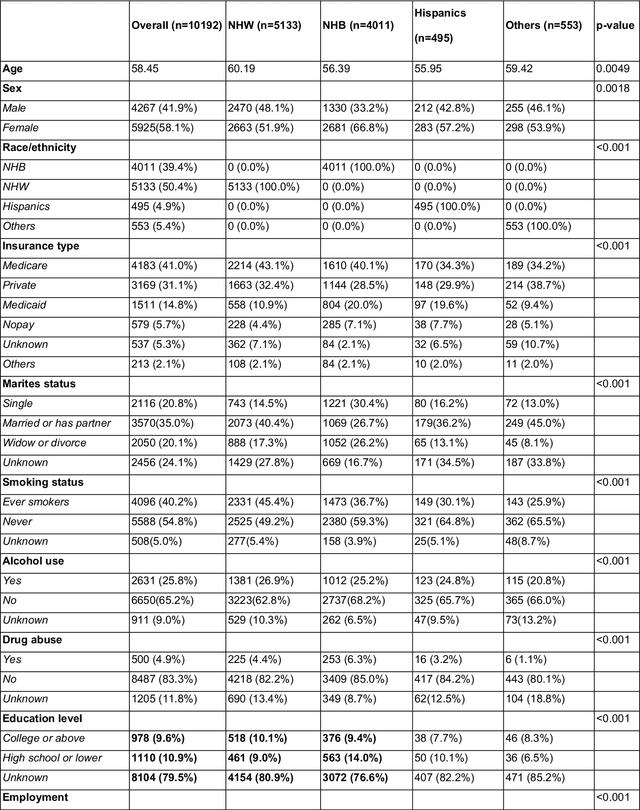

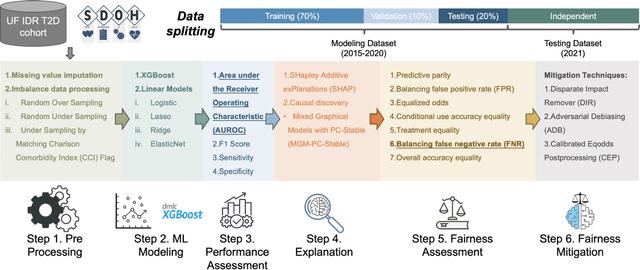

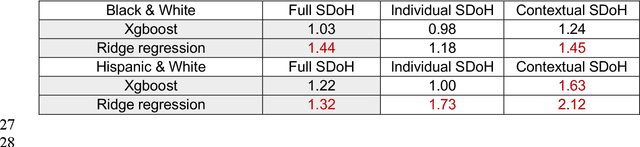

Abstract: Background: Racial and ethnic minority groups and individuals facing social disadvantages, which often stem from their social determinants of health (SDoH), bear a disproportionate burden of type 2 diabetes (T2D) and its complications. It is therefore crucial to implement effective social risk management strategies at the point of care. Objective: To develop an electronic health record (EHR)-based machine learning (ML) analytical pipeline to identify the unmet social needs associated with hospitalization risk in patients with T2D. Methods: We identified 10,192 T2D patients from EHR data (2012 to 2022) in the University of Florida Health Integrated Data Repository, including contextual SDoH (e.g., neighborhood deprivation) and individual-level SDoH (e.g., housing stability). We developed an EHR-based ML analytic pipeline, the individualized polysocial risk score (iPsRS), to identify high social risk associated with hospitalizations in T2D patients, along with explainable AI (XAI) techniques and fairness assessment and optimization. Results: Our iPsRS achieved a C statistic of 0.72 in predicting 1-year hospitalization after fairness optimization across racial-ethnic groups. The iPsRS showed excellent utility for capturing individuals at high hospitalization risk; the actual 1-year hospitalization rate in the top 5% of iPsRS was about 13 times that in the bottom decile. Conclusion: Our ML pipeline iPsRS can fairly and accurately screen for patients with T2D who have increased social risk leading to hospitalization.
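For readers unfamiliar with the C statistic reported above: for a binary outcome it is the probability that a randomly chosen patient who was hospitalized received a higher risk score than a randomly chosen patient who was not. The sketch below computes it by direct pair counting. The function name `c_statistic` and the six example scores and outcomes are hypothetical and are not taken from the study.

```python
def c_statistic(scores, outcomes):
    """Concordance for a binary outcome: share of (event, non-event) pairs in which
    the event case has the higher score. Tied scores count as half concordant."""
    events = [s for s, y in zip(scores, outcomes) if y == 1]
    non_events = [s for s, y in zip(scores, outcomes) if y == 0]
    if not events or not non_events:
        raise ValueError("need at least one event and one non-event")
    concordant = 0.0
    for e in events:
        for n in non_events:
            if e > n:
                concordant += 1.0
            elif e == n:
                concordant += 0.5
    return concordant / (len(events) * len(non_events))

# Hypothetical risk scores and 1-year hospitalization labels (1 = hospitalized).
example_scores = [0.9, 0.8, 0.35, 0.6, 0.2, 0.1]
example_outcomes = [1, 0, 1, 1, 0, 0]
example_c = c_statistic(example_scores, example_outcomes)
```

A value of 0.5 corresponds to random ranking and 1.0 to perfect ranking. The pairwise loop is quadratic in the number of patients, so a rank-based formula would be preferable for a cohort of this size.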

Persistent Ballistic Entanglement Spreading with Optimal Control in Quantum Spin Chains

Jul 21, 2023

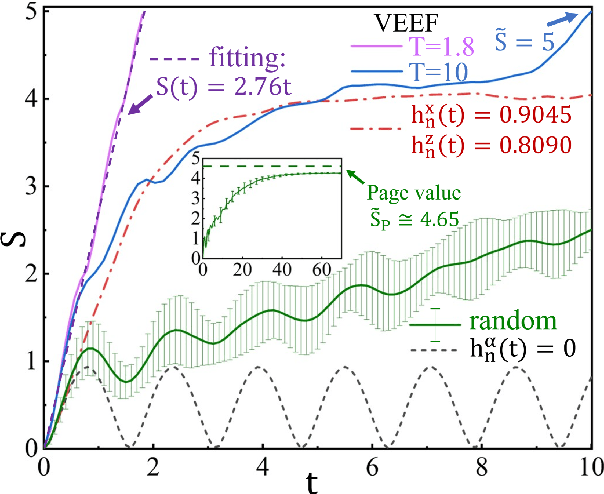

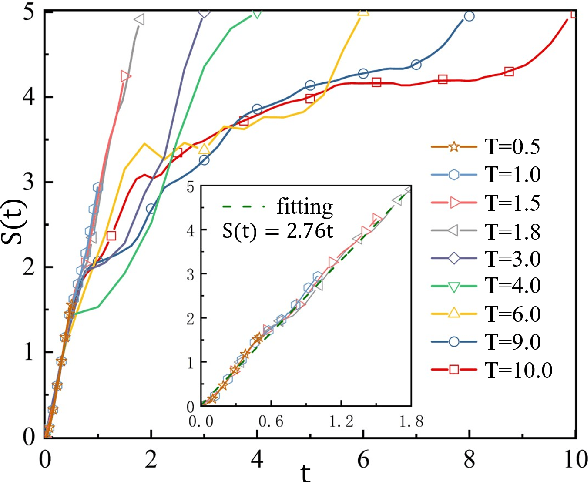

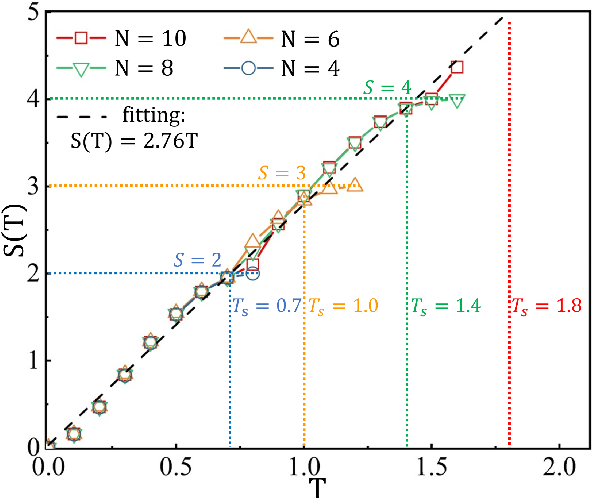

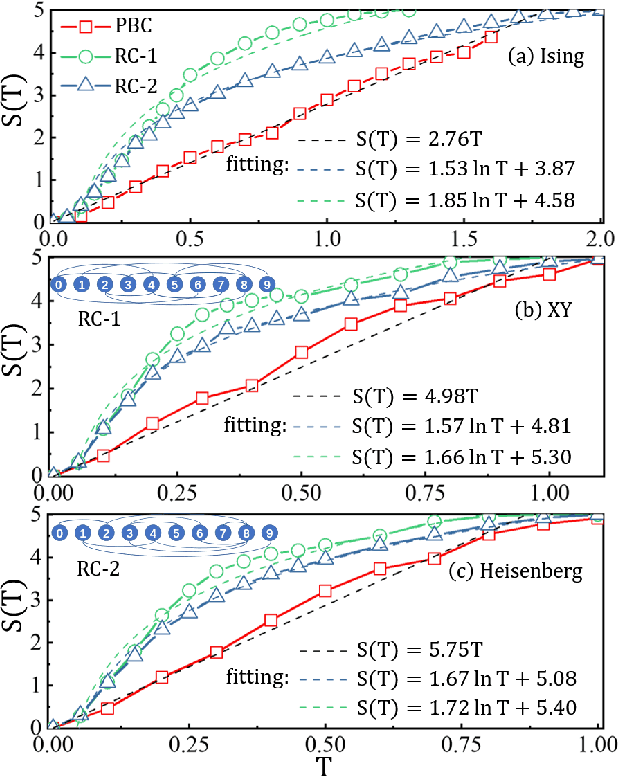

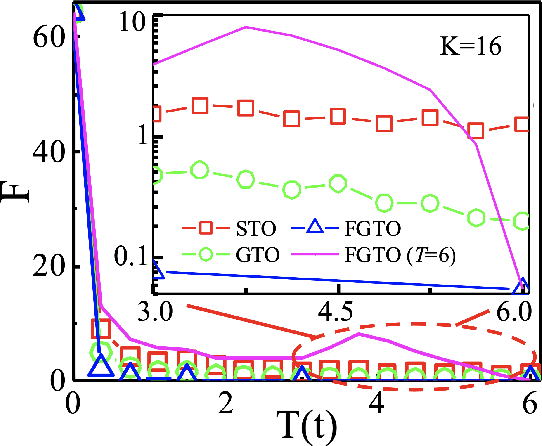

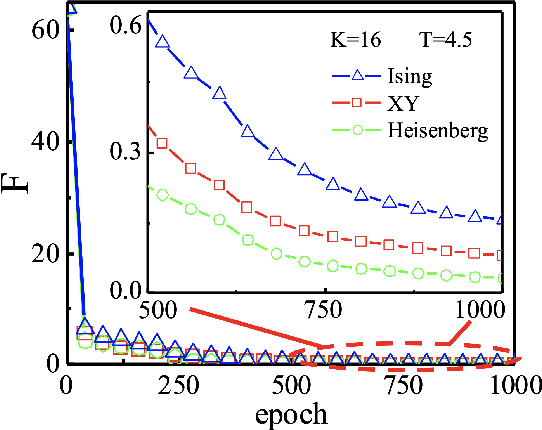

Abstract: Entanglement propagation provides a key route to understanding quantum many-body dynamics in and out of equilibrium. In this work, we show that the "variational entanglement-enhancing" field (VEEF) robustly induces a persistent ballistic spreading of entanglement in quantum spin chains. The VEEF is time dependent and is optimally controlled to maximize the bipartite entanglement entropy (EE) of the final state. The resulting linear growth persists until the EE reaches the genuine saturation $\tilde{S} = - \log_{2} 2^{-\frac{N}{2}}=\frac{N}{2}$, with $N$ the total number of spins. The EE satisfies $S(t) = v t$ for times $t \leq \frac{N}{2v}$, with $v$ the velocity. These results are in sharp contrast with the behavior without the VEEF, where the EE generally approaches a sub-saturation value known as the Page value $\tilde{S}_{P} =\tilde{S} - \frac{1}{2\ln{2}}$ in the long-time limit, and the entanglement growth deviates from linear before the Page value is reached. We explore how the velocity depends on the interactions and find $v \simeq 2.76$, $4.98$, and $5.75$ for spin chains with Ising, XY, and Heisenberg interactions, respectively. We further show that nonlinear growth of the EE emerges in the presence of long-range interactions.
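The formulas quoted in this abstract can be evaluated numerically to see how the saturation time depends on the interaction type. The sketch below is a direct transcription of those expressions. The function names and the chain length `n_spins = 20` are assumptions made for illustration, and holding the EE at $N/2$ after the saturation time is an assumption of the sketch, since the abstract only states the linear law up to $t = N/(2v)$.

```python
import math

def saturation_entropy(n_spins):
    """Genuine saturation S = -log2(2^(-N/2)) = N/2, in bits."""
    return n_spins / 2

def page_value(n_spins):
    """Page value S_P = S - 1/(2 ln 2), as written in the abstract."""
    return saturation_entropy(n_spins) - 1.0 / (2.0 * math.log(2.0))

def saturation_time(velocity, n_spins):
    """Time N/(2v) at which linear growth S(t) = v t reaches N/2."""
    return n_spins / (2.0 * velocity)

def ballistic_entropy(t, velocity, n_spins):
    """S(t) = v t up to the saturation time; held at N/2 afterwards (assumption)."""
    return min(velocity * t, saturation_entropy(n_spins))

# Velocities reported in the abstract; the chain length is a hypothetical choice.
velocities = {"Ising": 2.76, "XY": 4.98, "Heisenberg": 5.75}
n_spins = 20
saturation_times = {name: saturation_time(v, n_spins) for name, v in velocities.items()}
page_gap = saturation_entropy(n_spins) - page_value(n_spins)
```

Because the saturation time scales as $1/v$, the Heisenberg chain reaches $N/2$ soonest of the three, and the gap between the genuine saturation and the Page value is the same constant $1/(2\ln 2)$ for every $N$.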

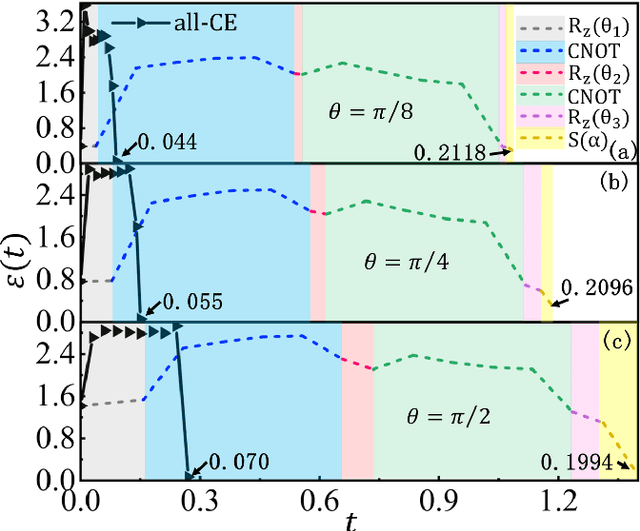

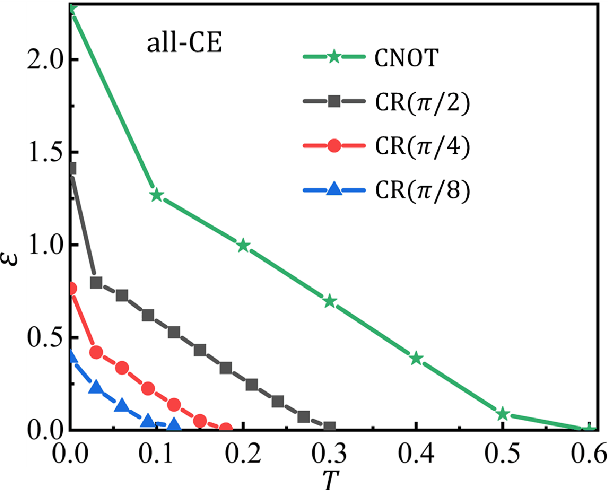

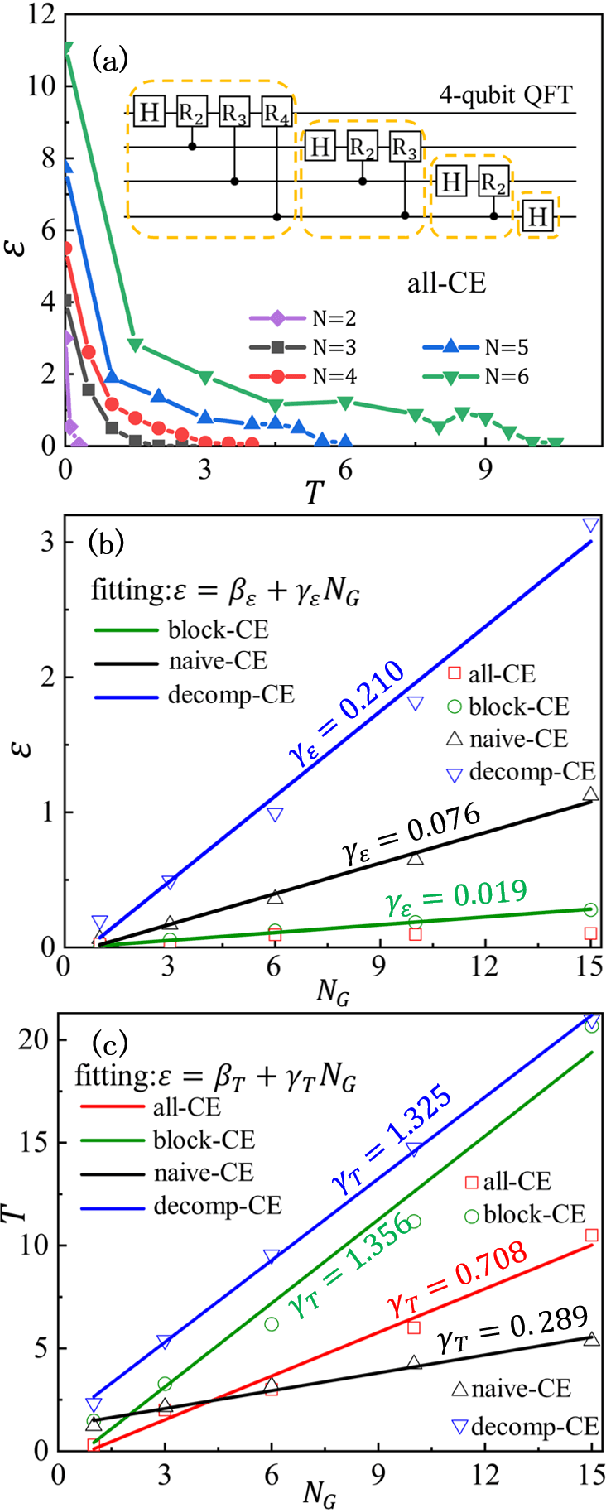

Circuit encapsulation for efficient quantum computing based on controlled many-body dynamics

Mar 29, 2022

Abstract: Controlling the time evolution of interacting spin systems is an important approach to implementing quantum computing. In contrast to approaches that compile circuits into a product of multiple elementary gates, we propose quantum circuit encapsulation (QCE), in which we encapsulate a circuit into different parts and optimize the magnetic fields so that the time evolution realizes the unitary transformation of each part. The QCE is shown to have well-controlled error and time cost, and it avoids error accumulation by aiming directly at the shortest path to the target unitary. We test four different encapsulation schemes for realizing multi-qubit quantum Fourier transformations by controlling the time evolution of the quantum Ising chain, and we demonstrate how the time costs and errors scale with the number of two-qubit controlled gates. The QCE provides an alternative compiling scheme that translates circuits into a physically executable form based on quantum many-body dynamics, where the key issue becomes choosing an encapsulation scheme that balances efficiency and flexibility.

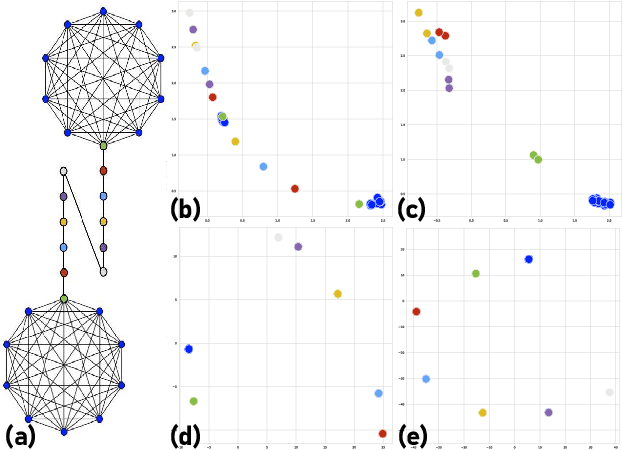

Embedding Node Structural Role Identity Using Stress Majorization

Sep 14, 2021

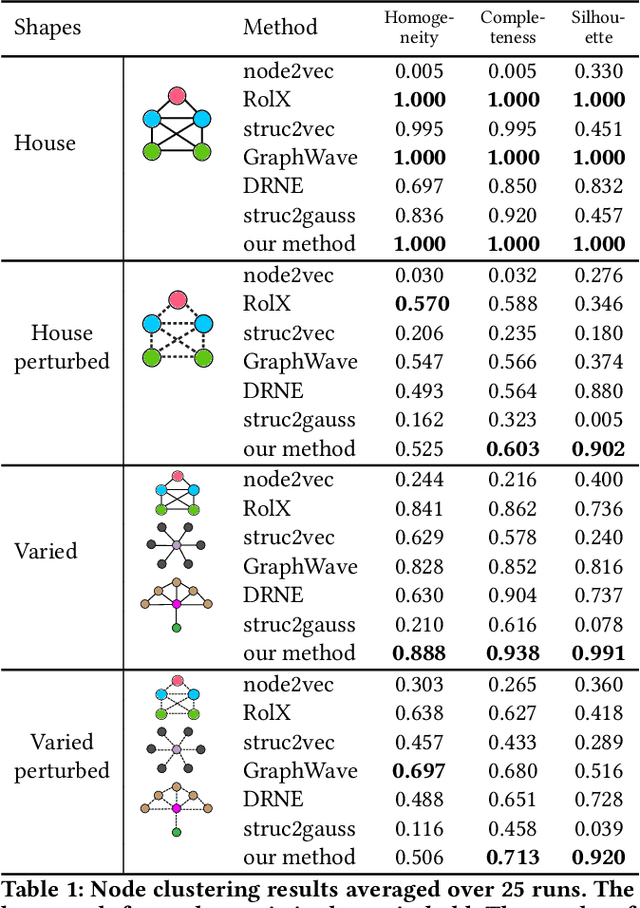

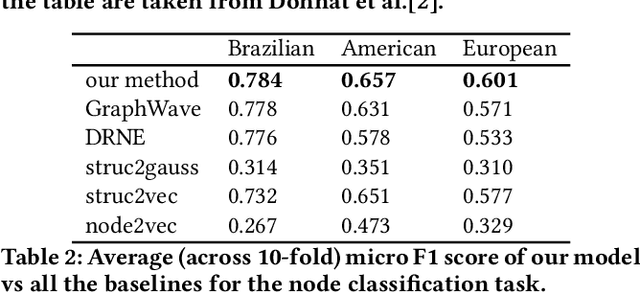

Abstract: Nodes in networks may have one or more functions that determine their role in the system. As opposed to local proximity, which captures the local context of nodes, the role identity captures the functional "role" that nodes play in a network, such as being the center of a group or the bridge between two groups. This means that nodes far apart in a network can have similar structural role identities. Several recent works have explored methods for embedding the roles of nodes in networks. However, these methods all rely on either approximating or indirectly modeling structural equivalence. In this paper, we present a novel and flexible framework that uses stress majorization to transform the high-dimensional role identities in networks directly (without approximation or indirect modeling) into a low-dimensional embedding space. Our method is also flexible in that it does not rely on specific structural similarity definitions. We evaluated our method on the tasks of node classification, clustering, and visualization, using three real-world and five synthetic networks. Our experiments show that our framework achieves better results than existing methods in learning node role representations.
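Stress majorization, the optimization technique named in this abstract, can be sketched as repeated Guttman transforms that never increase the squared mismatch between target dissimilarities and embedded distances. The example below is a generic unweighted version and not the paper's framework. The role features (two "hub" nodes and three "leaf" nodes), the Euclidean dissimilarity, the starting layout, and all function names are hypothetical.

```python
import math

def pairwise_distances(points):
    """Matrix of Euclidean distances between all pairs of points."""
    return [[math.dist(p, q) for q in points] for p in points]

def stress(points, target):
    """Sum over node pairs of (embedded distance - target dissimilarity)^2."""
    current = pairwise_distances(points)
    n = len(points)
    return sum((current[i][j] - target[i][j]) ** 2
               for i in range(n) for j in range(i + 1, n))

def guttman_update(points, target):
    """One unweighted Guttman transform: x_i <- (1/n) sum_j (t_ij / d_ij) (x_i - x_j)."""
    n, dim = len(points), len(points[0])
    current = pairwise_distances(points)
    updated = []
    for i in range(n):
        new_point = [0.0] * dim
        for j in range(n):
            if i == j or current[i][j] < 1e-12:
                continue  # coincident points contribute nothing (standard convention)
            ratio = target[i][j] / current[i][j]
            for k in range(dim):
                new_point[k] += ratio * (points[i][k] - points[j][k])
        updated.append([value / n for value in new_point])
    return updated

def stress_majorization(target, initial, iterations=100):
    """Run Guttman updates and record the stress after every step."""
    points = [list(p) for p in initial]
    history = [stress(points, target)]
    for _ in range(iterations):
        points = guttman_update(points, target)
        history.append(stress(points, target))
    return points, history

# Hypothetical role features: nodes 0-1 act as hubs, nodes 2-4 as leaves.
role_features = [[4.0, 1.0], [4.0, 1.0], [1.0, 4.0], [1.0, 4.0], [1.0, 4.0]]
role_dissimilarity = pairwise_distances(role_features)
start_layout = [[0.0, 0.0], [1.0, 1.0], [2.0, 1.0], [3.0, 0.0], [4.0, 1.0]]
embedding, stress_history = stress_majorization(role_dissimilarity, start_layout)
```

Nodes with the same role features have zero target dissimilarity, so they are pulled together in the embedding regardless of where they started, which mirrors the point that structurally similar nodes need not be close in the network.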

Preparation of Many-body Ground States by Time Evolution with Variational Microscopic Magnetic Fields and Incomplete Interactions

Jun 03, 2021

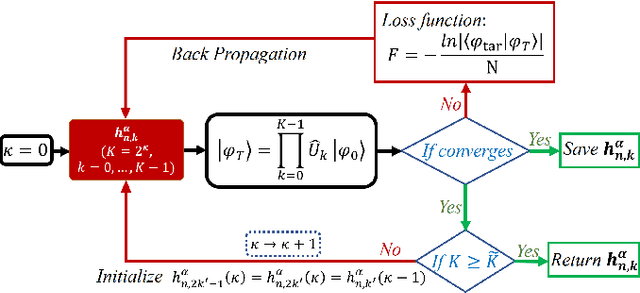

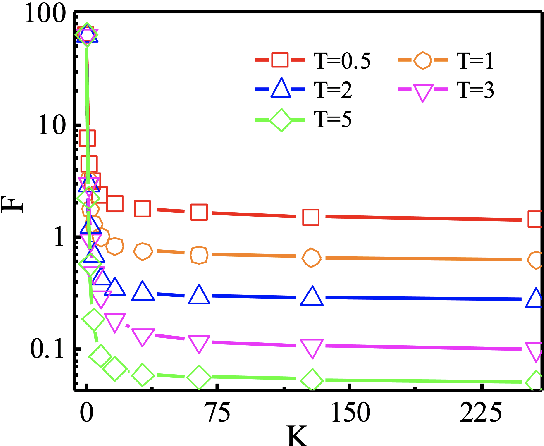

Abstract: State preparation is of fundamental importance in quantum physics. It can be realized by constructing a quantum circuit as a unitary that transforms the initial state into the target, or by implementing a quantum control protocol that evolves the system to the target state under a designed Hamiltonian. In this work, we study the latter for quantum many-body systems, using time evolution with fixed couplings and variational magnetic fields. Specifically, we consider preparing the ground states of Hamiltonians that contain certain interactions missing from the Hamiltonians used for the time evolution. We propose a method that optimizes the magnetic fields by "fine-graining" the discretization of time in order to gain high precision and stability. The back-propagation technique is used to obtain the gradients of the logarithmic fidelity with respect to the fields. Our method is tested on preparing the ground state of the Heisenberg chain by time evolution under XY and Ising interactions, and its performance surpasses two baseline methods that use local and global optimization strategies, respectively. Our work can be applied and generalized to other quantum models, such as those defined on higher-dimensional lattices. It suggests that optimizing the magnetic fields can reduce the complexity of the interactions required for implementing quantum control or other tasks in quantum information and computation.
