Jürgen Beyerer

Higher-Order Adversarial Patches for Real-Time Object Detectors

Jan 08, 2026

Abstract:Higher-order adversarial attacks can be seen as the direct result of a cat-and-mouse game -- an elaborate action involving constant pursuit, near captures, and repeated escapes. This idiom aptly describes the ongoing cycle of training adversarial attack patterns and hardening models through adversarial training. The following work investigates the impact of higher-order adversarial attacks on object detectors by successively training attack patterns and hardening object detectors with adversarial training. The YOLOv10 object detector is chosen as a representative, and adversarial patches are used in an evasion attack manner. Our results indicate that higher-order adversarial patches not only affect the object detector they were directly trained on but also generalize better than lower-order adversarial patches. Moreover, the results highlight that adversarial training alone is not sufficient to efficiently harden an object detector against this kind of adversarial attack. Code: https://github.com/JensBayer/HigherOrder

A Cross Branch Fusion-Based Contrastive Learning Framework for Point Cloud Self-supervised Learning

May 30, 2025

Abstract:Contrastive learning is an essential method in self-supervised learning. It primarily employs a multi-branch strategy to compare latent representations obtained from different branches and train the encoder. In the case of multi-modal input, diverse modalities of the same object are fed into distinct branches. When using single-modal data, the same input undergoes various augmentations before being fed into different branches. However, all existing contrastive learning frameworks have so far only performed contrastive operations on the learned features at the final loss end, with no information exchange between different branches prior to this stage. In this paper, for point cloud unsupervised learning without the use of extra training data, we propose a Contrastive Cross-branch Attention-based framework for Point cloud data (termed PoCCA), to learn rich 3D point cloud representations. By introducing sub-branches, PoCCA allows information exchange between different branches before the loss end. Experimental results demonstrate that in the case of using no extra training data, the representations learned with our self-supervised model achieve state-of-the-art performances when used for downstream tasks on point clouds.

6D Pose Estimation on Point Cloud Data through Prior Knowledge Integration: A Case Study in Autonomous Disassembly

May 30, 2025

Abstract:The accurate estimation of 6D pose remains a challenging task within the computer vision domain, even when utilizing 3D point cloud data. Conversely, in the manufacturing domain, instances arise where leveraging prior knowledge can yield advancements in this endeavor. This study focuses on the disassembly of starter motors to augment the engineering of product life cycles. A pivotal objective in this context involves the identification and 6D pose estimation of bolts affixed to the motors, facilitating automated disassembly within the manufacturing workflow. Complicating matters, the presence of occlusions and the limitations of single-view data acquisition, notably when motors are placed in a clamping system, obscure certain portions and render some bolts imperceptible. Consequently, the development of a comprehensive pipeline capable of acquiring complete bolt information is imperative to avoid oversight in bolt detection. In this paper, employing the task of bolt detection within the scope of our project as a pertinent use case, we introduce a meticulously devised pipeline. This multi-stage pipeline effectively captures the 6D information with regard to all bolts on the motor, thereby showcasing the effective utilization of prior knowledge in handling this challenging task. The proposed methodology not only contributes to the field of 6D pose estimation but also underscores the viability of integrating domain-specific insights to tackle complex problems in manufacturing and automation.

SAMBLE: Shape-Specific Point Cloud Sampling for an Optimal Trade-Off Between Local Detail and Global Uniformity

Apr 28, 2025

Abstract:Driven by the increasing demand for accurate and efficient representation of 3D data in various domains, point cloud sampling has emerged as a pivotal research topic in 3D computer vision. Recently, learning-to-sample methods have garnered growing interest from the community, particularly for their ability to be jointly trained with downstream tasks. However, previous learning-based sampling methods either lead to unrecognizable sampling patterns by generating a new point cloud or biased sampled results by focusing excessively on sharp edge details. Moreover, they all overlook the natural variations in point distribution across different shapes, applying a similar sampling strategy to all point clouds. In this paper, we propose a Sparse Attention Map and Bin-based Learning method (termed SAMBLE) to learn shape-specific sampling strategies for point cloud shapes. SAMBLE effectively achieves an improved balance between sampling edge points for local details and preserving uniformity in the global shape, resulting in superior performance across multiple common point cloud downstream tasks, even in scenarios with few-point sampling.

Decentralized Fusion of 3D Extended Object Tracking based on a B-Spline Shape Model

Apr 25, 2025

Abstract:Extended Object Tracking (EOT) exploits the high resolution of modern sensors for detailed environmental perception. Combined with decentralized fusion, it contributes to a more scalable and robust perception system. This paper investigates the decentralized fusion of 3D EOT using a B-spline curve based model. The spline curve is used to represent the side-view profile, which is then extruded with a width to form a 3D shape. We use covariance intersection (CI) for the decentralized fusion and discuss the challenge of applying it to EOT. We further evaluate the tracking result of the decentralized fusion with simulated and real datasets of traffic scenarios. We show that the CI-based fusion can significantly improve the tracking performance for sensors with unfavorable perspective.
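
For readers unfamiliar with covariance intersection, the following is a minimal sketch of the textbook CI rule for fusing two estimates whose cross-correlation is unknown. It is not taken from the paper: the function name `covariance_intersection`, the grid search over the weight, and the trace criterion are all illustrative assumptions, and the paper's handling of B-spline shape states is not reproduced here.

```python
import numpy as np

def covariance_intersection(x_a, P_a, x_b, P_b, num_weights=101):
    """Fuse two estimates (x_a, P_a) and (x_b, P_b) with standard CI.

    Hypothetical helper for illustration. The weight w is chosen by a
    simple grid search that minimizes the trace of the fused covariance.
    """
    info_a = np.linalg.inv(P_a)  # information matrix of estimate A
    info_b = np.linalg.inv(P_b)  # information matrix of estimate B
    best = None
    for w in np.linspace(0.0, 1.0, num_weights):
        # Convex combination in information space
        P = np.linalg.inv(w * info_a + (1.0 - w) * info_b)
        if best is None or np.trace(P) < best[0]:
            x = P @ (w * info_a @ x_a + (1.0 - w) * info_b @ x_b)
            best = (np.trace(P), x, P, w)
    _, x, P, w = best
    return x, P, w

# Two sensors, each accurate along a different axis
x_a, P_a = np.array([1.0, 0.0]), np.diag([1.0, 4.0])
x_b, P_b = np.array([0.0, 1.0]), np.diag([4.0, 1.0])
x_f, P_f, w = covariance_intersection(x_a, P_a, x_b, P_b)
print(w, x_f, np.trace(P_f))
```

In this symmetric toy case the search settles on an equal weighting, and the fused covariance has a smaller trace than either input.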

3D Extended Object Tracking based on Extruded B-Spline Side View Profiles

Mar 13, 2025

Abstract:Object tracking is an essential task for autonomous systems. With the advancement of 3D sensors, these systems can better perceive their surroundings using effective 3D Extended Object Tracking (EOT) methods. Based on the observation that common road users are symmetrical on the right and left sides in the traveling direction, we focus on the side view profile of the object. In order to leverage the developments in 2D EOT and balance the number of parameters of a shape model in the tracking algorithms, we propose a method for 3D EOT that describes the side view profile of the object with B-spline curves and forms an extrusion to obtain a 3D extent. The use of B-spline curves exploits their flexible representation power by allowing the control points to move freely. The algorithm is developed into an Extended Kalman Filter (EKF). For a thorough evaluation of this method, we use simulated traffic scenarios with different vehicle models and a real-world open dataset containing both radar and lidar data.

Partially Observable Gaussian Process Network and Doubly Stochastic Variational Inference

Feb 19, 2025

Abstract:To reduce the curse of dimensionality for Gaussian processes (GP), they can be decomposed into a Gaussian Process Network (GPN) of coupled subprocesses with lower dimensionality. In some cases, intermediate observations are available within the GPN. However, intermediate observations are often indirect, noisy, and incomplete in most real-world systems. This work introduces the Partially Observable Gaussian Process Network (POGPN) to model real-world process networks. We model a joint distribution of latent functions of subprocesses and make inferences using observations from all subprocesses. POGPN incorporates observation lenses (observation likelihoods) into the well-established inference method of deep Gaussian processes. We also introduce two training methods for POGPN to make inferences on the whole network using node observations. The application to benchmark problems demonstrates how incorporating partial observations during training and inference can improve the predictive performance of the overall network, offering a promising outlook for its practical application.

Traversing the Subspace of Adversarial Patches

Dec 02, 2024

Abstract:Despite ongoing research on the topic of adversarial examples in deep learning for computer vision, some fundamentals of the nature of these attacks remain unclear. As the manifold hypothesis posits, high-dimensional data tends to be part of a low-dimensional manifold. To verify the thesis with adversarial patches, this paper provides an analysis of a set of adversarial patches and investigates the reconstruction abilities of three different dimensionality reduction methods. Quantitatively, the performance of reconstructed patches in an attack setting is measured and the impact of sampled patches from the latent space during adversarial training is investigated. The evaluation is performed on two publicly available datasets for person detection. The results indicate that more sophisticated dimensionality reduction methods offer no advantages over a simple principal component analysis.
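
To make the idea of reconstructing patches through a principal component analysis concrete, here is a small sketch under stated assumptions. The helper name `pca_reconstruct`, the patch shape, and the random arrays standing in for adversarial patches are all hypothetical; the paper's actual patch set, preprocessing, and evaluation are not reproduced.

```python
import numpy as np

def pca_reconstruct(patches, k):
    """Project flattened patches onto the top-k principal components
    and map them back to patch space. Hypothetical illustration."""
    X = patches.reshape(len(patches), -1).astype(np.float64)
    mean = X.mean(axis=0)
    # Rows of Vt are the principal directions of the centered data
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    components = Vt[:k]
    codes = (X - mean) @ components.T    # latent coordinates, shape (n, k)
    recon = codes @ components + mean    # back-projection to pixel space
    return recon.reshape(patches.shape), codes

# Random stand-in data, NOT real adversarial patches
rng = np.random.default_rng(0)
patches = rng.random((20, 3, 8, 8))
for k in (2, 10, 19):
    recon, _ = pca_reconstruct(patches, k)
    print(k, float(np.mean((recon - patches) ** 2)))
```

The reconstruction error shrinks as more components are kept. Sampling new points in the `codes` space and back-projecting them is one simple way to draw patches from such a linear latent space.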

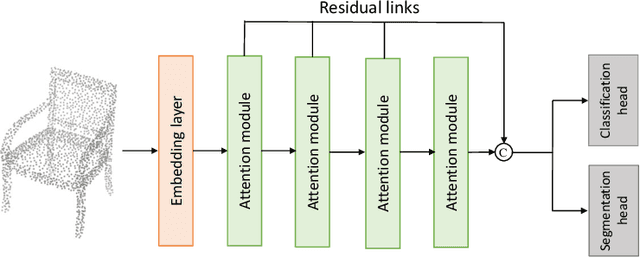

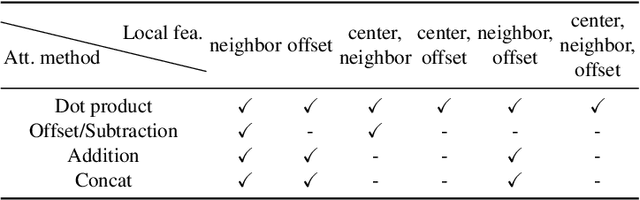

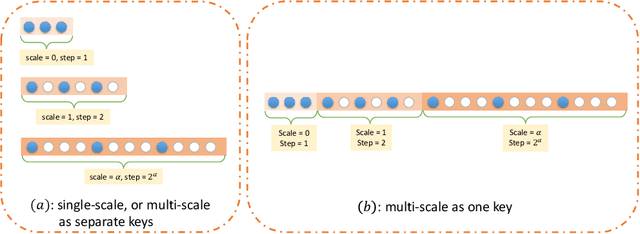

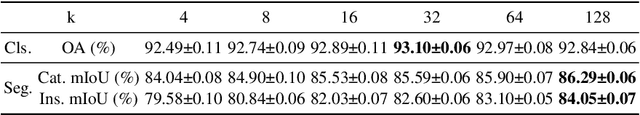

Rethinking Attention Module Design for Point Cloud Analysis

Jul 27, 2024

Abstract:In recent years, there have been significant advancements in applying attention mechanisms to point cloud analysis. However, attention module variants featured in various research papers often operate under diverse settings and tasks, incorporating potential training strategies. This heterogeneity poses challenges in establishing a fair comparison among these attention module variants. In this paper, we address this issue by rethinking and exploring attention module design within a consistent base framework and settings. Both global-based and local-based attention methods are studied, with a focus on the selection basis and scales of neighbors for local-based attention. Different combinations of aggregated local features and computation methods for attention scores are evaluated, ranging from the initial addition/concatenation-based approach to the widely adopted dot product-based method and the recently proposed vector attention technique. Various position encoding methods are also investigated. Our extensive experimental analysis reveals that there is no universally optimal design across diverse point cloud tasks. Instead, drawing from best practices, we propose tailored attention modules for specific tasks, leading to superior performance on point cloud classification and segmentation benchmarks.

Applying Extended Object Tracking for Self-Localization of Roadside Radar Sensors

Jul 03, 2024

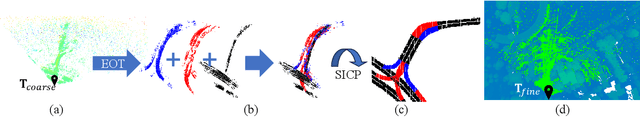

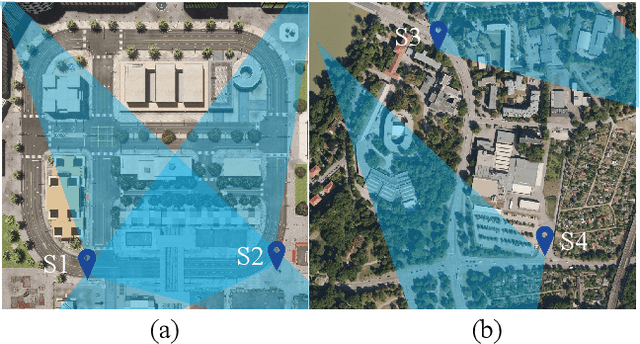

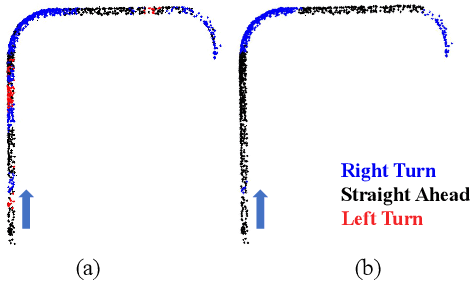

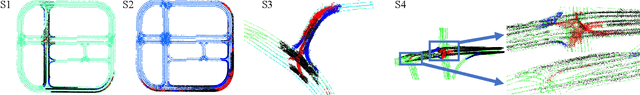

Abstract:Intelligent Transportation Systems (ITS) can benefit from roadside 4D mmWave radar sensors for large-scale traffic monitoring due to their weatherproof functionality, long sensing range and low manufacturing cost. However, the localization method using external measurement devices has limitations in urban environments. Furthermore, if the sensor mount shifts due to environmental influences, the change cannot be corrected when the measurement is performed only during installation. In this paper, we propose self-localization of roadside radar data using Extended Object Tracking (EOT). The method analyses both the tracked trajectories of the vehicles observed by the sensor and the aerial laser scan of city streets, assigns labels of driving behaviors such as "straight ahead", "left turn", "right turn" to trajectory sections and road segments, and performs the Semantic Iterative Closest Points (SICP) algorithm to register the point cloud. The method exploits the result from a downstream task -- object tracking -- for localization. We demonstrate high accuracy in the sub-meter range along with very low orientation error. The method also shows good data efficiency. The evaluation is done in both simulation and real-world tests.
