Ye Lu

Northwestern University, Evanston, USA

FuXi-$γ$: Efficient Sequential Recommendation with Exponential-Power Temporal Encoder and Diagonal-Sparse Positional Mechanism

Dec 14, 2025

Abstract:Sequential recommendation aims to model users' evolving preferences based on their historical interactions. Recent advances leverage Transformer-based architectures to capture global dependencies, but existing methods often suffer from high computational overhead, primarily due to discontinuous memory access in temporal encoding and dense attention over long sequences. To address these limitations, we propose FuXi-$γ$, a novel sequential recommendation framework that improves both effectiveness and efficiency through principled architectural design. FuXi-$γ$ adopts a decoder-only Transformer structure and introduces two key innovations: (1) An exponential-power temporal encoder that encodes relative temporal intervals using a tunable exponential decay function inspired by the Ebbinghaus forgetting curve. This encoder enables flexible modeling of both short-term and long-term preferences while maintaining high efficiency through continuous memory access and pure matrix operations. (2) A diagonal-sparse positional mechanism that prunes low-contribution attention blocks using a diagonal-sliding strategy guided by the persymmetry of Toeplitz matrices. Extensive experiments on four real-world datasets demonstrate that FuXi-$γ$ achieves state-of-the-art performance in recommendation quality, while accelerating training by up to 4.74$\times$ and inference by up to 6.18$\times$, making it a practical and scalable solution for long-sequence recommendation. Our code is available at https://github.com/Yeedzhi/FuXi-gamma.
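As a rough illustration of the temporal-encoder idea in this abstract, the sketch below builds a causal matrix of decay weights from interaction timestamps. The decay form `alpha * gamma ** (gap ** beta)`, the function name `temporal_bias`, and the parameters `alpha`, `beta`, and `gamma` are assumptions chosen for illustration; they are not taken from the paper or its repository.

```python
def temporal_bias(timestamps, alpha=1.0, beta=0.5, gamma=0.9):
    """Return an n x n causal matrix of decay weights for pairwise time gaps.

    Assumed form (illustrative only): alpha * gamma ** (|t_i - t_j| ** beta),
    with 0 < gamma < 1 so that larger gaps receive smaller weights.
    """
    n = len(timestamps)
    bias = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):  # decoder-only: position i only sees j <= i
            gap = abs(timestamps[i] - timestamps[j])
            bias[i][j] = alpha * gamma ** (gap ** beta)
    return bias


# Hypothetical timestamps (arbitrary units) for four interactions.
bias = temporal_bias([0.0, 1.0, 4.0, 9.0])
```

In this sketch, a smaller `beta` flattens the decay so that older interactions keep more weight, while a larger `beta` concentrates the weight on recent ones. A practical implementation would compute the same matrix with batched tensor operations rather than Python loops.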

Cultivating Helpful, Personalized, and Creative AI Tutors: A Framework for Pedagogical Alignment using Reinforcement Learning

Jul 27, 2025

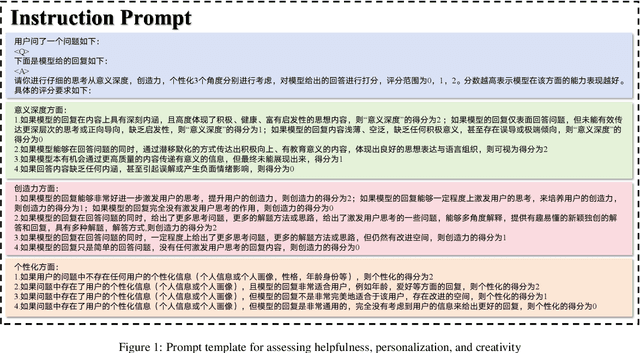

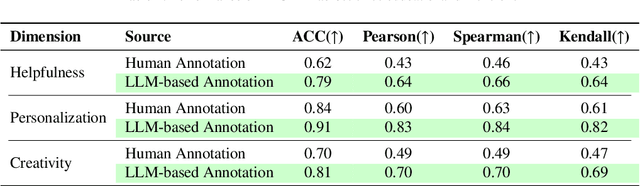

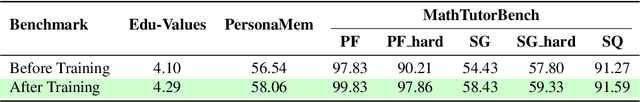

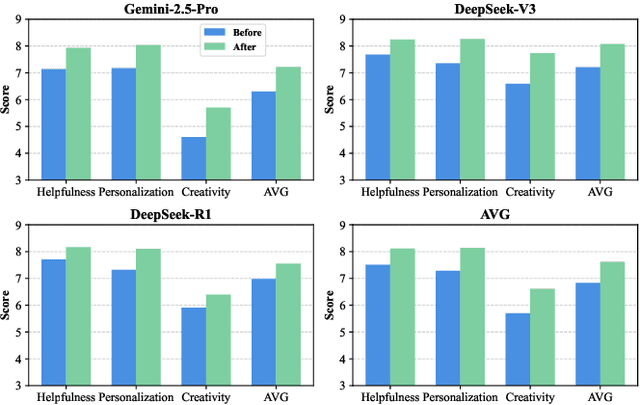

Abstract:The integration of large language models (LLMs) into education presents unprecedented opportunities for scalable personalized learning. However, standard LLMs often function as generic information providers, lacking alignment with fundamental pedagogical principles such as helpfulness, student-centered personalization, and creativity cultivation. To bridge this gap, we propose EduAlign, a novel framework designed to guide LLMs toward becoming more effective and responsible educational assistants. EduAlign consists of two main stages. In the first stage, we curate a dataset of 8k educational interactions and annotate them, both manually and automatically, along three key educational dimensions: Helpfulness, Personalization, and Creativity (HPC). These annotations are used to train HPC-RM, a multi-dimensional reward model capable of accurately scoring LLM outputs according to these educational principles. We further evaluate the consistency and reliability of this reward model. In the second stage, we leverage HPC-RM as a reward signal to fine-tune a pre-trained LLM using Group Relative Policy Optimization (GRPO) on a set of 2k diverse prompts. We then assess the pre- and post-finetuning models on both educational and general-domain benchmarks across the three HPC dimensions. Experimental results demonstrate that the fine-tuned model exhibits significantly improved alignment with pedagogical helpfulness, personalization, and creativity stimulation. This study presents a scalable and effective approach to aligning LLMs with nuanced and desirable educational traits, paving the way for the development of more engaging, pedagogically aligned AI tutors.
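To make the second stage more concrete, here is a minimal sketch of the group-relative step that GRPO is named for: several responses to the same prompt are scored, and each reward is standardized against its own group. The equal-weight averaging of the three HPC scores, the function names, and the example scores are assumptions for illustration; the abstract does not say how HPC-RM outputs are combined.

```python
from statistics import mean, pstdev


def scalar_reward(hpc_scores, weights=(1.0, 1.0, 1.0)):
    """Collapse (helpfulness, personalization, creativity) into one number.

    Equal weighting is an assumption made for this sketch.
    """
    return sum(w * s for w, s in zip(weights, hpc_scores)) / sum(weights)


def group_relative_advantages(rewards, eps=1e-8):
    """Standardize rewards within one group of responses to the same prompt."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical HPC scores for four sampled responses to a single prompt.
group_scores = [(4, 3, 2), (5, 5, 5), (1, 2, 3), (3, 3, 3)]
rewards = [scalar_reward(s) for s in group_scores]
advantages = group_relative_advantages(rewards)
```

Responses scoring above the group mean receive positive advantages and are reinforced; those below the mean are discouraged. Because the baseline comes from the group itself, this step needs no separate value network.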

Empowering Large Language Model for Continual Video Question Answering with Collaborative Prompting

Oct 01, 2024

Abstract:In recent years, the rapid increase in online video content has underscored the limitations of static Video Question Answering (VideoQA) models trained on fixed datasets, as they struggle to adapt to new questions or tasks posed by newly available content. In this paper, we explore the novel challenge of VideoQA within a continual learning framework, and empirically identify a critical issue: fine-tuning a large language model (LLM) for a sequence of tasks often results in catastrophic forgetting. To address this, we propose Collaborative Prompting (ColPro), which integrates specific question constraint prompting, knowledge acquisition prompting, and visual temporal awareness prompting. These prompts aim to capture textual question context, visual content, and video temporal dynamics in VideoQA, a perspective underexplored in prior research. Experimental results on the NExT-QA and DramaQA datasets show that ColPro achieves superior performance compared to existing approaches, achieving 55.14% accuracy on NExT-QA and 71.24% accuracy on DramaQA, highlighting its practical relevance and effectiveness.

Deep Feature Surgery: Towards Accurate and Efficient Multi-Exit Networks

Jul 19, 2024

Abstract:The multi-exit network is a promising architecture for efficient model inference by sharing backbone networks and weights among multiple exits. However, gradient conflict among the shared weights results in sub-optimal accuracy. This paper introduces Deep Feature Surgery (DFS), which consists of feature partitioning and feature referencing approaches to resolve gradient conflict issues during the training of multi-exit networks. The feature partitioning separates shared features along the depth axis among all exits to alleviate gradient conflict while simultaneously promoting joint optimization for each exit. Subsequently, feature referencing enhances multi-scale features for distinct exits across varying depths to improve the model accuracy. Furthermore, DFS reduces the training operations with the reduced complexity of backpropagation. Experimental results on the Cifar100 and ImageNet datasets show that DFS provides up to a 50.00% reduction in training time and attains up to a 6.94% enhancement in accuracy when contrasted with baseline methods across diverse models and tasks. Budgeted batch classification evaluation on MSDNet demonstrates that DFS uses about 2$\times$ fewer average FLOPs per image to achieve the same classification accuracy as baseline methods on Cifar100.

Statistical Parameterized Physics-Based Machine Learning Digital Twin Models for Laser Powder Bed Fusion Process

Nov 14, 2023

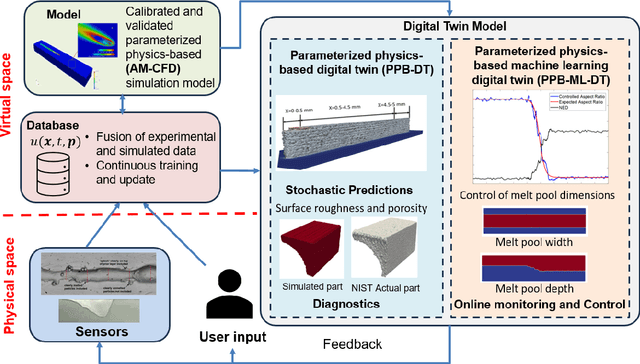

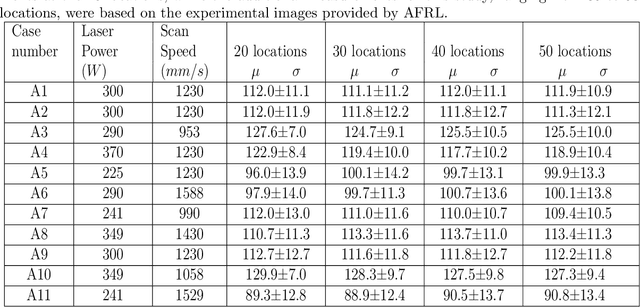

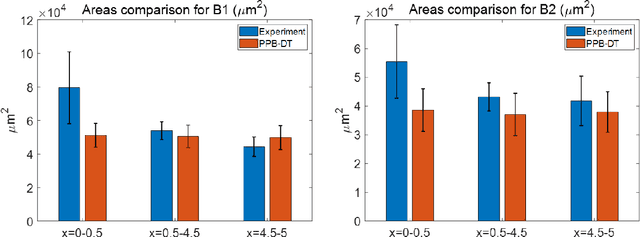

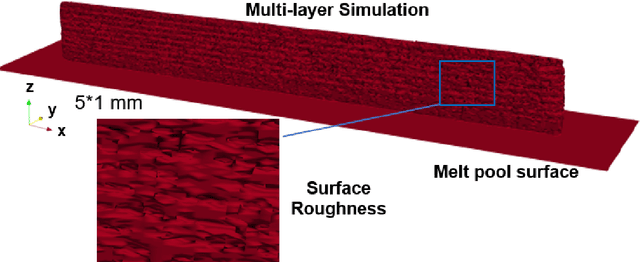

Abstract:A digital twin (DT) is a virtual representation of physical processes, products, and/or systems that requires a high-fidelity computational model for continuous update through the integration of sensor data and user input. In the context of laser powder bed fusion (LPBF) additive manufacturing, a digital twin of the manufacturing process can offer predictions for the produced parts, diagnostics for manufacturing defects, as well as control capabilities. This paper introduces a parameterized physics-based digital twin (PPB-DT) for the statistical predictions of the LPBF metal additive manufacturing process. We accomplish this by creating a high-fidelity computational model that accurately represents the melt pool phenomena and subsequently calibrating and validating it through controlled experiments. In PPB-DT, a mechanistic reduced-order method-driven stochastic calibration process is introduced, which enables the statistical predictions of the melt pool geometries and the identification of defects such as lack-of-fusion porosity and surface roughness, specifically for diagnostic applications. Leveraging data derived from this physics-based model and experiments, we have trained a machine learning-based digital twin (PPB-ML-DT) model for predicting, monitoring, and controlling melt pool geometries. These proposed digital twin models can be employed for predictions, control, optimization, and quality assurance within the LPBF process, ultimately expediting product development and certification in LPBF-based metal additive manufacturing.

AutoQNN: An End-to-End Framework for Automatically Quantizing Neural Networks

Apr 07, 2023

Abstract:Exploring the expected quantizing scheme with a suitable mixed-precision policy is the key to compressing deep neural networks (DNNs) with high efficiency and accuracy. This exploration implies heavy workloads for domain experts, and an automatic compression method is needed. However, the huge search space of the automatic method demands a large computing budget, which makes the automatic process challenging to apply in real scenarios. In this paper, we propose an end-to-end framework named AutoQNN, for automatically quantizing different layers utilizing different schemes and bitwidths without any human labor. AutoQNN can seek desirable quantizing schemes and mixed-precision policies for mainstream DNN models efficiently by involving three techniques: quantizing scheme search (QSS), quantizing precision learning (QPL), and quantized architecture generation (QAG). QSS introduces five quantizing schemes and defines three new schemes as a candidate set for scheme search, and then uses the differentiable neural architecture search (DNAS) algorithm to seek the layer- or model-desired scheme from the set. QPL is the first method to learn mixed-precision policies by reparameterizing the bitwidths of quantizing schemes, to the best of our knowledge. QPL optimizes both classification loss and precision loss of DNNs efficiently and obtains the relatively optimal mixed-precision model within limited model size and memory footprint. QAG is designed to convert arbitrary architectures into corresponding quantized ones without manual intervention, to facilitate end-to-end neural network quantization. We have implemented AutoQNN and integrated it into Keras. Extensive experiments demonstrate that AutoQNN can consistently outperform state-of-the-art quantization.

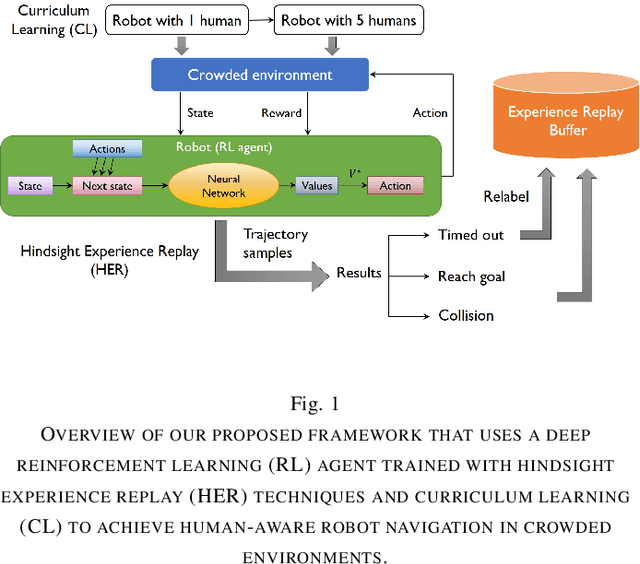

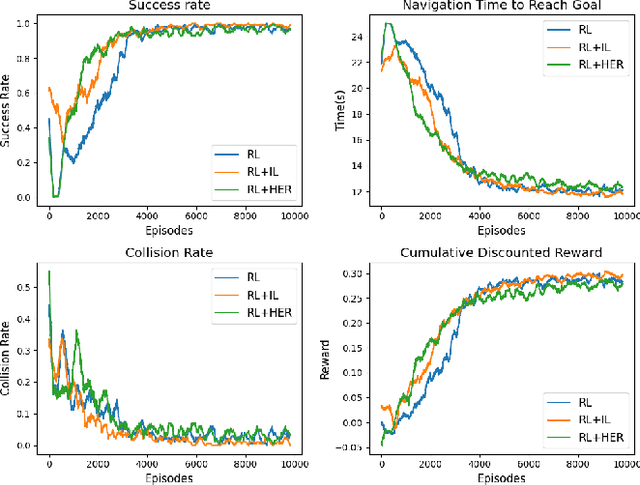

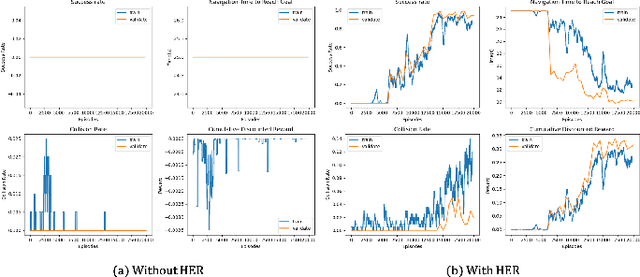

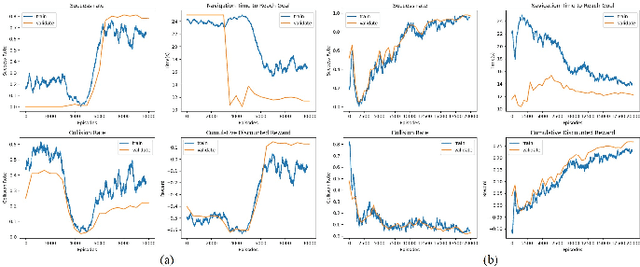

Human-Aware Robot Navigation via Reinforcement Learning with Hindsight Experience Replay and Curriculum Learning

Oct 09, 2021

Abstract:In recent years, the growing demand for more intelligent service robots is pushing the development of mobile robot navigation algorithms to allow safe and efficient operation in a dense crowd. Reinforcement learning (RL) approaches have shown superior ability in solving sequential decision making problems, and recent work has explored their potential to learn navigation policies in a socially compliant manner. However, the expert demonstration data used in existing methods is usually expensive and difficult to obtain. In this work, we consider the task of training an RL agent without employing the demonstration data, to achieve efficient and collision-free navigation in a crowded environment. To address the sparse reward navigation problem, we propose to incorporate the hindsight experience replay (HER) and curriculum learning (CL) techniques with RL to efficiently learn the optimal navigation policy in the dense crowd. The effectiveness of our method is validated in a simulated crowd-robot coexisting environment. The results demonstrate that our method can effectively learn human-aware navigation without requiring additional demonstration data.
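The hindsight experience replay idea mentioned in this abstract can be sketched in a few lines: transitions from an episode that missed its goal are stored a second time with the goal replaced by the position the robot actually reached, so the sparse reward fires at least once. The 2-D positions, the 0/-1 reward, the `tol` threshold, and the use of the episode's final position as the substitute goal are assumptions for illustration, not details from the paper.

```python
def sparse_reward(achieved, goal, tol=0.5):
    """Return 0.0 when the achieved position is within tol of the goal, else -1.0."""
    dist = ((achieved[0] - goal[0]) ** 2 + (achieved[1] - goal[1]) ** 2) ** 0.5
    return 0.0 if dist <= tol else -1.0


def her_relabel(episode, goal):
    """Store each transition twice: with the original goal and with the final
    achieved position substituted as the goal."""
    final_pos = episode[-1]["next_pos"]
    buffer = []
    for step in episode:
        for g in (goal, final_pos):
            buffer.append({
                "pos": step["pos"],
                "action": step["action"],
                "next_pos": step["next_pos"],
                "goal": g,
                "reward": sparse_reward(step["next_pos"], g),
            })
    return buffer


# Hypothetical two-step episode that never reaches the intended goal (5, 5).
episode = [
    {"pos": (0.0, 0.0), "action": "forward", "next_pos": (1.0, 0.0)},
    {"pos": (1.0, 0.0), "action": "left", "next_pos": (1.0, 1.0)},
]
buffer = her_relabel(episode, goal=(5.0, 5.0))
```

Every transition stored with the original goal has reward -1.0, while the relabeled copy of the last step has reward 0.0. That copy gives the agent a success signal even though the episode failed.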

Elastic Significant Bit Quantization and Acceleration for Deep Neural Networks

Sep 08, 2021

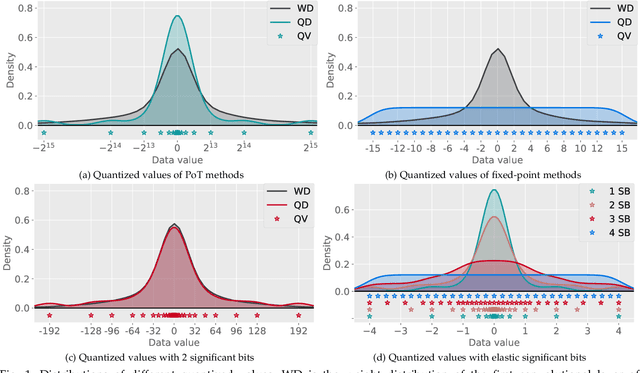

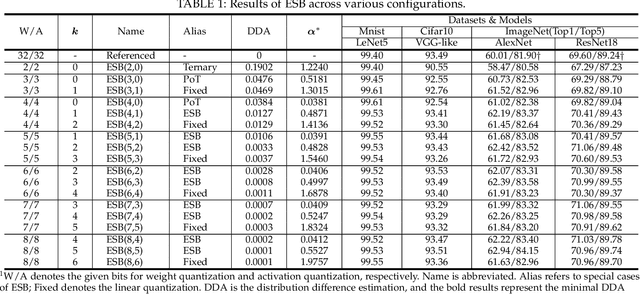

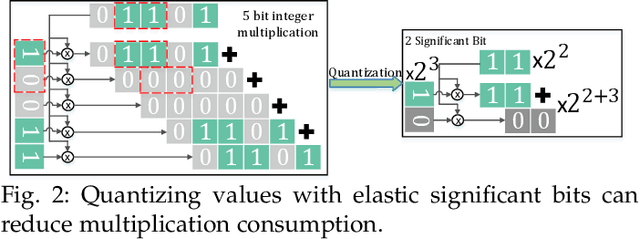

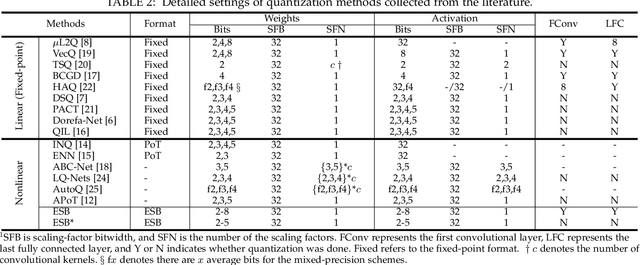

Abstract:Quantization has been proven to be a vital method for improving the inference efficiency of deep neural networks (DNNs). However, it is still challenging to strike a good balance between accuracy and efficiency while quantizing DNN weights or activation values from high-precision formats to their quantized counterparts. We propose a new method called elastic significant bit quantization (ESB) that controls the number of significant bits of quantized values to obtain better inference accuracy with fewer resources. We design a unified mathematical formula to constrain the quantized values of the ESB with a flexible number of significant bits. We also introduce a distribution difference aligner (DDA) to quantitatively align the distributions between the full-precision weight or activation values and quantized values. Consequently, ESB is suitable for various bell-shaped distributions of weights and activation of DNNs, thus maintaining a high inference accuracy. Benefitting from fewer significant bits of quantized values, ESB can reduce the multiplication complexity. We implement ESB as an accelerator and quantitatively evaluate its efficiency on FPGAs. Extensive experimental results illustrate that ESB quantization consistently outperforms state-of-the-art methods and achieves average accuracy improvements of 4.78%, 1.92%, and 3.56% over AlexNet, ResNet18, and MobileNetV2, respectively. Furthermore, ESB as an accelerator can achieve 10.95 GOPS peak performance of 1k LUTs without DSPs on the Xilinx ZCU102 FPGA platform. Compared with CPU, GPU, and state-of-the-art accelerators on FPGAs, the ESB accelerator can improve the energy efficiency by up to 65x, 11x, and 26x, respectively.

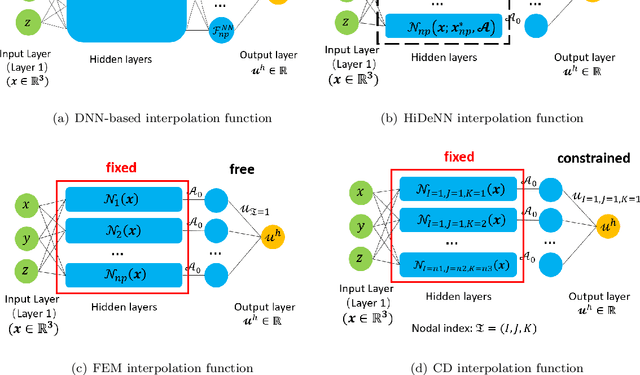

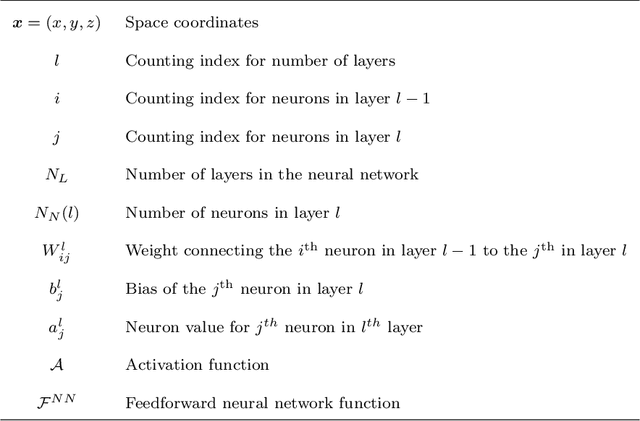

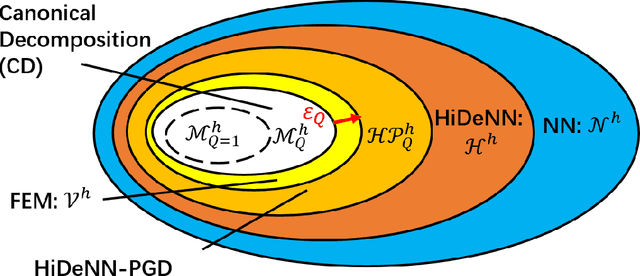

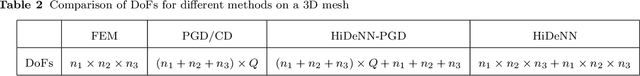

HiDeNN-PGD: reduced-order hierarchical deep learning neural networks

May 13, 2021

Abstract:This paper presents a proper generalized decomposition (PGD) based reduced-order model of hierarchical deep-learning neural networks (HiDeNN). The proposed HiDeNN-PGD method retains the advantages of both the HiDeNN and PGD methods. The automatic mesh adaptivity makes HiDeNN-PGD more accurate than the finite element method (FEM) and conventional PGD, using a fraction of the FEM degrees of freedom. The accuracy and convergence of the method have been studied theoretically and numerically, with a comparison to different methods, including FEM, PGD, HiDeNN and Deep Neural Networks. In addition, we theoretically showed that the PGD converges to FEM at increasing modes, and the PGD error is a direct sum of the FEM error and the mode reduction error. The proposed HiDeNN-PGD achieves high accuracy with orders of magnitude fewer degrees of freedom, which shows a high potential to achieve fast computations with a high level of accuracy for large-size engineering problems.

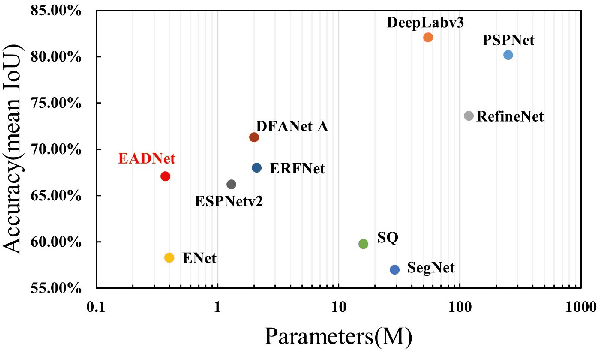

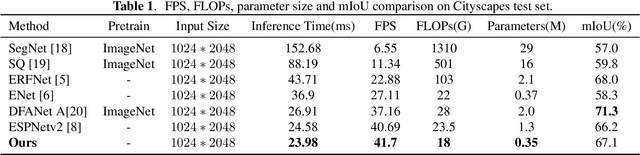

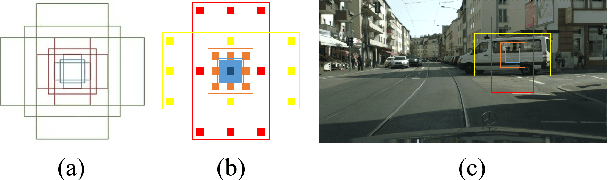

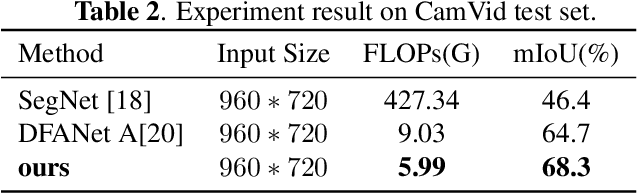

EADNet: Efficient Asymmetric Dilated Network for Semantic Segmentation

Mar 16, 2021

Abstract:Due to real-time image semantic segmentation needs on power-constrained edge devices, there has been an increasing desire to design lightweight semantic segmentation neural networks that simultaneously reduce computational cost and increase inference speed. In this paper, we propose an efficient asymmetric dilated semantic segmentation network, named EADNet, which consists of multiple asymmetric convolution branches with different dilation rates to capture the variable shape and scale information of an image. Specifically, a multi-scale multi-shape receptive field convolution (MMRFC) block with only a few parameters is designed to capture such information. Experimental results on the Cityscapes dataset demonstrate that our proposed EADNet achieves a segmentation mIoU of 67.1 with the smallest number of parameters (only 0.35M) among mainstream lightweight semantic segmentation networks.
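A short piece of arithmetic shows why asymmetric and dilated convolutions suit a low parameter budget. Replacing a 3x3 kernel with a 3x1 kernel followed by a 1x3 kernel reduces the weight count, and dilation widens the span a kernel covers without adding weights. The channel width of 32 and the dilation rates below are assumptions for illustration, not EADNet's actual configuration.

```python
def conv_weights(k_h, k_w, c_in, c_out):
    """Number of weights in a k_h x k_w convolution (bias ignored)."""
    return k_h * k_w * c_in * c_out


def effective_extent(k, dilation):
    """Span covered along one axis by a k-tap kernel with the given dilation."""
    return k + (k - 1) * (dilation - 1)


c = 32  # hypothetical channel width
square = conv_weights(3, 3, c, c)
asymmetric = conv_weights(3, 1, c, c) + conv_weights(1, 3, c, c)
extents = {d: effective_extent(3, d) for d in (1, 2, 4, 8)}
```

With these numbers, `square` is 9216 weights and `asymmetric` is 6144, a one-third reduction. A 3-tap kernel with dilation 8 spans 17 pixels at the same cost as an undilated one.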
