Yadong Mu

Columbia University

Learning from Next-Frame Prediction: Autoregressive Video Modeling Encodes Effective Representations

Dec 24, 2025

Abstract: Recent advances in pretraining general foundation models have significantly improved performance across diverse downstream tasks. While autoregressive (AR) generative models like GPT have revolutionized NLP, most visual generative pretraining methods still rely on BERT-style masked modeling, which often disregards the temporal information essential for video analysis. The few existing autoregressive visual pretraining methods suffer from issues such as inaccurate semantic localization and poor generation quality, leading to poor semantics. In this work, we propose NExT-Vid, a novel autoregressive visual generative pretraining framework that utilizes masked next-frame prediction to jointly model images and videos. NExT-Vid introduces a context-isolated autoregressive predictor to decouple semantic representation from target decoding, and a conditioned flow-matching decoder to enhance generation quality and diversity. Through context-isolated flow-matching pretraining, our approach achieves strong representations. Extensive experiments on large-scale pretrained models demonstrate that our proposed method consistently outperforms previous generative pretraining methods for visual representation learning via attentive probing in downstream classification.

PartCrafter: Structured 3D Mesh Generation via Compositional Latent Diffusion Transformers

Jun 05, 2025

Abstract: We introduce PartCrafter, the first structured 3D generative model that jointly synthesizes multiple semantically meaningful and geometrically distinct 3D meshes from a single RGB image. Unlike existing methods that either produce monolithic 3D shapes or follow two-stage pipelines, i.e., first segmenting an image and then reconstructing each segment, PartCrafter adopts a unified, compositional generation architecture that does not rely on pre-segmented inputs. Conditioned on a single image, it simultaneously denoises multiple 3D parts, enabling end-to-end part-aware generation of both individual objects and complex multi-object scenes. PartCrafter builds upon a pretrained 3D mesh diffusion transformer (DiT) trained on whole objects, inheriting the pretrained weights, encoder, and decoder, and introduces two key innovations: (1) A compositional latent space, where each 3D part is represented by a set of disentangled latent tokens; (2) A hierarchical attention mechanism that enables structured information flow both within individual parts and across all parts, ensuring global coherence while preserving part-level detail during generation. To support part-level supervision, we curate a new dataset by mining part-level annotations from large-scale 3D object datasets. Experiments show that PartCrafter outperforms existing approaches in generating decomposable 3D meshes, including parts that are not directly visible in input images, demonstrating the strength of part-aware generative priors for 3D understanding and synthesis. Code and training data will be released.

Weakly-Supervised Affordance Grounding Guided by Part-Level Semantic Priors

May 30, 2025

Abstract: In this work, we focus on the task of weakly supervised affordance grounding, where a model is trained to identify affordance regions on objects using human-object interaction images and egocentric object images without dense labels. Previous works are mostly built upon class activation maps, which are effective for semantic segmentation but may not be suitable for locating actions and functions. Leveraging recent advanced foundation models, we develop a supervised training pipeline based on pseudo labels. The pseudo labels are generated from an off-the-shelf part segmentation model, guided by a mapping from affordance to part names. Furthermore, we introduce three key enhancements to the baseline model: a label refining stage, a fine-grained feature alignment process, and a lightweight reasoning module. These techniques harness the semantic knowledge of static objects embedded in off-the-shelf foundation models to improve affordance learning, effectively bridging the gap between objects and actions. Extensive experiments demonstrate that the proposed model achieves a breakthrough improvement over existing methods. Our code is available at https://github.com/woyut/WSAG-PLSP .

BioMoDiffuse: Physics-Guided Biomechanical Diffusion for Controllable and Authentic Human Motion Synthesis

Mar 08, 2025

Abstract: Human motion generation holds significant promise in fields such as animation, film production, and robotics. However, existing methods often fail to produce physically plausible movements that adhere to biomechanical principles. While recent autoregressive and diffusion models have improved visual quality, they frequently overlook essential biodynamic features, such as muscle activation patterns and joint coordination, leading to motions that either violate physical laws or lack controllability. This paper introduces BioMoDiffuse, a novel biomechanics-aware diffusion framework that addresses these limitations. It features three key innovations: (1) A lightweight biodynamic network that integrates muscle electromyography (EMG) signals and kinematic features with acceleration constraints, (2) A physics-guided diffusion process that incorporates real-time biomechanical verification via modified Euler-Lagrange equations, and (3) A decoupled control mechanism that allows independent regulation of motion speed and semantic context. We also propose a set of comprehensive evaluation protocols that combines traditional metrics (FID, R-precision, etc.) with new biomechanical criteria (smoothness, foot sliding, floating, etc.). Our approach bridges the gap between data-driven motion synthesis and biomechanical authenticity, establishing new benchmarks for physically accurate motion generation.

OmniPhysGS: 3D Constitutive Gaussians for General Physics-Based Dynamics Generation

Jan 31, 2025

Abstract: Recently, significant advancements have been made in the reconstruction and generation of 3D assets, including static cases and those with physical interactions. To recover the physical properties of 3D assets, existing methods typically assume that all materials belong to a specific predefined category (e.g., elasticity). However, such assumptions ignore the complex composition of multiple heterogeneous objects in real scenarios and tend to render less physically plausible animation given a wider range of objects. We propose OmniPhysGS for synthesizing a physics-based 3D dynamic scene composed of more general objects. A key design of OmniPhysGS is treating each 3D asset as a collection of constitutive 3D Gaussians. For each Gaussian, its physical material is represented by an ensemble of 12 physical domain-expert sub-models (rubber, metal, honey, water, etc.), which greatly enhances the flexibility of the proposed model. In the implementation, we define a scene by user-specified prompts and supervise the estimation of material weighting factors via a pretrained video diffusion model. Comprehensive experiments demonstrate that OmniPhysGS achieves more general and realistic physical dynamics across a broader spectrum of materials, including elastic, viscoelastic, plastic, and fluid substances, as well as interactions between different materials. Our method surpasses existing methods by approximately 3% to 16% in metrics of visual quality and text alignment.

DiffSplat: Repurposing Image Diffusion Models for Scalable Gaussian Splat Generation

Jan 28, 2025

Abstract: Recent advancements in 3D content generation from text or a single image struggle with limited high-quality 3D datasets and inconsistency from 2D multi-view generation. We introduce DiffSplat, a novel 3D generative framework that natively generates 3D Gaussian splats by taming large-scale text-to-image diffusion models. It differs from previous 3D generative models by effectively utilizing web-scale 2D priors while maintaining 3D consistency in a unified model. To bootstrap the training, a lightweight reconstruction model is proposed to instantly produce multi-view Gaussian splat grids for scalable dataset curation. In conjunction with the regular diffusion loss on these grids, a 3D rendering loss is introduced to facilitate 3D coherence across arbitrary views. The compatibility with image diffusion models enables seamless adaptation of numerous image generation techniques to the 3D realm. Extensive experiments reveal the superiority of DiffSplat in text- and image-conditioned generation tasks and downstream applications. Thorough ablation studies validate the efficacy of each critical design choice and provide insights into the underlying mechanism.

Pyramidal Flow Matching for Efficient Video Generative Modeling

Oct 08, 2024

Abstract: Video generation requires modeling a vast spatiotemporal space, which demands significant computational resources and data usage. To reduce the complexity, the prevailing approaches employ a cascaded architecture to avoid direct training with full resolution. Despite reducing computational demands, the separate optimization of each sub-stage hinders knowledge sharing and sacrifices flexibility. This work introduces a unified pyramidal flow matching algorithm. It reinterprets the original denoising trajectory as a series of pyramid stages, where only the final stage operates at the full resolution, thereby enabling more efficient video generative modeling. Through our sophisticated design, the flows of different pyramid stages can be interlinked to maintain continuity. Moreover, we craft autoregressive video generation with a temporal pyramid to compress the full-resolution history. The entire framework can be optimized in an end-to-end manner and with a single unified Diffusion Transformer (DiT). Extensive experiments demonstrate that our method supports generating high-quality 5-second (up to 10-second) videos at 768p resolution and 24 FPS within 20.7k A100 GPU training hours. All code and models will be open-sourced at https://pyramid-flow.github.io.

Closed-loop Long-horizon Robotic Planning via Equilibrium Sequence Modeling

Oct 02, 2024

Abstract: In the endeavor to make autonomous robots take actions, task planning is a major challenge that requires translating high-level task descriptions into long-horizon action sequences. Despite recent advances in language model agents, they remain prone to planning errors and limited in their ability to plan ahead. To address these limitations in robotic planning, we advocate a self-refining scheme that iteratively refines a draft plan until an equilibrium is reached. Remarkably, this process can be optimized end-to-end from an analytical perspective without the need to curate additional verifiers or reward models, allowing us to train self-refining planners in a simple supervised learning fashion. Meanwhile, a nested equilibrium sequence modeling procedure is devised for efficient closed-loop planning that incorporates useful feedback from the environment (or an internal world model). Our method is evaluated on the VirtualHome-Env benchmark, showing advanced performance with better scaling for inference computation. Code is available at https://github.com/Singularity0104/equilibrium-planner.

Local Occupancy-Enhanced Object Grasping with Multiple Triplanar Projection

Jul 22, 2024

Abstract: This paper addresses the challenge of robotic grasping of general objects. Similar to prior research, the task reads a single-view 3D observation (i.e., point clouds) captured by a depth camera as input. Crucially, the success of object grasping highly demands a comprehensive understanding of the shape of objects within the scene. However, single-view observations often suffer from occlusions (including both self and inter-object occlusions), which lead to gaps in the point clouds, especially in complex cluttered scenes. This renders the perception of the object shape incomplete and frequently causes failures or inaccurate pose estimation during object grasping. In this paper, we tackle this issue with an effective albeit simple solution, namely completing grasping-related scene regions through local occupancy prediction. Following prior practice, the proposed model first proposes a number of the most likely grasp points in the scene. Around each grasp point, a module is designed to infer any voxel in its neighborhood to be either void or occupied by some object. Importantly, the occupancy map is inferred by fusing both local and global cues. We implement a multi-group tri-plane scheme for efficiently aggregating long-distance contextual information. The model further estimates 6-DoF grasp poses utilizing the local occupancy-enhanced object shape information and returns the top-ranked grasp proposal. Comprehensive experiments on both the large-scale GraspNet-1Billion benchmark and a real robotic arm demonstrate that the proposed method can effectively complete the unobserved parts in cluttered and occluded scenes. Benefiting from the occupancy-enhanced feature, our model clearly outstrips other competing methods under various performance metrics such as grasping average precision.

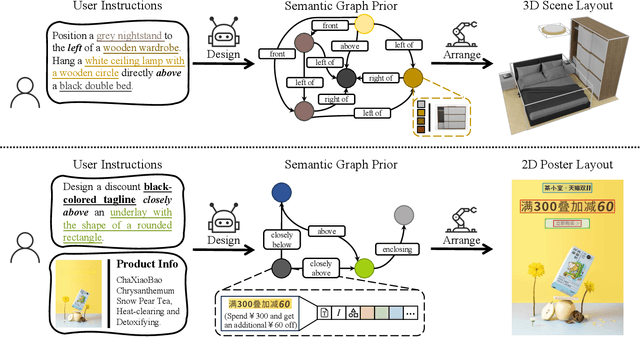

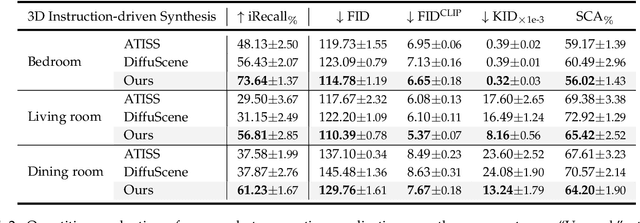

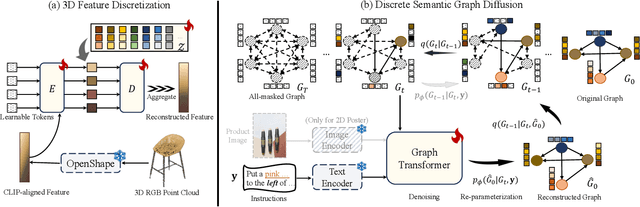

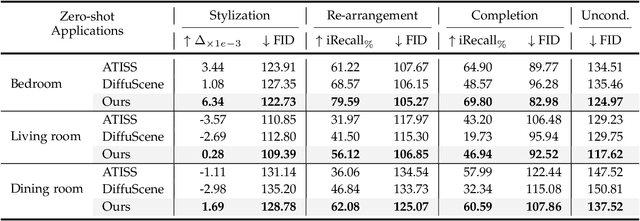

InstructLayout: Instruction-Driven 2D and 3D Layout Synthesis with Semantic Graph Prior

Jul 11, 2024

Abstract: Comprehending natural language instructions is a desirable property for both 2D and 3D layout synthesis systems. Existing methods implicitly model object joint distributions and express object relations, hindering generation's controllability. We introduce InstructLayout, a novel generative framework that integrates a semantic graph prior and a layout decoder to improve controllability and fidelity for 2D and 3D layout synthesis. The proposed semantic graph prior learns layout appearances and object distributions simultaneously, demonstrating versatility across various downstream tasks in a zero-shot manner. To facilitate the benchmarking for text-driven 2D and 3D scene synthesis, we respectively curate two high-quality datasets of layout-instruction pairs from public Internet resources with large language and multimodal models. Extensive experimental results reveal that the proposed method outperforms existing state-of-the-art approaches by a large margin in both 2D and 3D layout synthesis tasks. Thorough ablation studies confirm the efficacy of crucial design components.
