Xianyi Cheng

OPENTOUCH: Bringing Full-Hand Touch to Real-World Interaction

Dec 18, 2025

Abstract:The human hand is our primary interface to the physical world, yet egocentric perception rarely knows when, where, or how forcefully it makes contact. Robust wearable tactile sensors are scarce, and no existing in-the-wild datasets align first-person video with full-hand touch. To bridge the gap between visual perception and physical interaction, we present OpenTouch, the first in-the-wild egocentric full-hand tactile dataset, containing 5.1 hours of synchronized video-touch-pose data and 2,900 curated clips with detailed text annotations. Using OpenTouch, we introduce retrieval and classification benchmarks that probe how touch grounds perception and action. We show that tactile signals provide a compact yet powerful cue for grasp understanding, strengthen cross-modal alignment, and can be reliably retrieved from in-the-wild video queries. By releasing this annotated vision-touch-pose dataset and benchmark, we aim to advance multimodal egocentric perception, embodied learning, and contact-rich robotic manipulation.
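The abstract mentions retrieval benchmarks but does not state the metric. A common choice for paired cross-modal retrieval is Recall@k: the fraction of queries whose true partner ranks among the k most similar items. The sketch below assumes each video clip and its synchronized tactile window have already been embedded by some encoder (not specified here), so that row i of each array forms a pair; `recall_at_k`, `video_emb`, and `touch_emb` are illustrative names, not part of the OpenTouch release.

```python
import numpy as np


def recall_at_k(query_emb: np.ndarray, gallery_emb: np.ndarray, k: int) -> float:
    """Recall@k for paired cross-modal retrieval (hypothetical helper).

    Assumes row i of query_emb (e.g., a video clip) is paired with row i of
    gallery_emb (e.g., the tactile window recorded at the same time).
    """
    # L2-normalize rows so that the dot product equals cosine similarity
    q = query_emb / np.linalg.norm(query_emb, axis=1, keepdims=True)
    g = gallery_emb / np.linalg.norm(gallery_emb, axis=1, keepdims=True)
    sim = q @ g.T  # shape: (num_queries, num_gallery)
    # Indices of the k most similar gallery items for every query
    topk = np.argsort(-sim, axis=1)[:, :k]
    # A query is a hit if its own paired item appears among its top-k results
    hits = (topk == np.arange(len(q))[:, None]).any(axis=1)
    return float(hits.mean())


# Usage with random stand-in embeddings (no real encoder involved)
rng = np.random.default_rng(0)
video_emb = rng.normal(size=(100, 64))
touch_emb = video_emb + 0.1 * rng.normal(size=(100, 64))  # noisy "paired" embeddings
print(recall_at_k(video_emb, touch_emb, k=1), recall_at_k(video_emb, touch_emb, k=5))
```

Normalizing before the dot product keeps the ranking insensitive to embedding scale, which matters when the two modalities come from different encoders.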

Humanoid Locomotion and Manipulation: Current Progress and Challenges in Control, Planning, and Learning

Jan 03, 2025

Abstract:Humanoid robots have great potential to perform various human-level skills. These skills involve locomotion, manipulation, and cognitive capabilities. Driven by advances in machine learning and the strength of existing model-based approaches, these capabilities have progressed rapidly, but often separately. Therefore, a timely overview of current progress and future trends in this fast-evolving field is essential. This survey first summarizes the model-based planning and control that have been the backbone of humanoid robotics for the past three decades. We then explore emerging learning-based methods, with a focus on reinforcement learning and imitation learning that enhance the versatility of loco-manipulation skills. We examine the potential of integrating foundation models with humanoid embodiments, assessing the prospects for developing generalist humanoid agents. In addition, this survey covers emerging research on whole-body tactile sensing, which unlocks new humanoid skills involving physical interaction. The survey concludes with a discussion of the challenges and future trends.

Co-Designing Tools and Control Policies for Robust Manipulation

Sep 17, 2024

Abstract:Inherent robustness in manipulation is prevalent in biological systems and critical for robotic manipulation systems due to real-world uncertainties and disturbances. This robustness relies not only on robust control policies but also on the design characteristics of the end-effectors. This paper introduces a bi-level optimization approach to co-designing tools and control policies to achieve robust manipulation. The approach employs reinforcement learning for lower-level control policy learning and multi-task Bayesian optimization for upper-level design optimization. Diverging from prior approaches, we incorporate caging-based robustness metrics into both levels, ensuring manipulation robustness against disturbances and environmental variations. Our method is evaluated in four non-prehensile manipulation environments, demonstrating improvements in task success rate under disturbances and environment changes. A real-world experiment is also conducted to validate the framework's practical effectiveness.
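To make the bi-level structure concrete, here is a toy sketch. It is not the paper's method: an exhaustive grid search stands in for the reinforcement-learning lower level, plain random search stands in for multi-task Bayesian optimization at the upper level, and `task_score` is a hypothetical one-dimensional robustness metric (fraction of disturbances survived, minus a tool-size penalty) rather than the paper's caging-based metric. What it does show is the nesting: every candidate design is scored by the best policy found for that design.

```python
import random


def task_score(design: float, policy: float, disturbances: list[float]) -> float:
    """Hypothetical toy robustness metric: fraction of disturbances survived,
    minus a small penalty on tool size. A larger design tolerates larger errors."""
    survived = sum(1 for w in disturbances if abs(w - policy) <= design)
    return survived / len(disturbances) - 0.05 * design


def lower_level(design, disturbances, policy_grid):
    # Stand-in for RL: exhaustively pick the best policy parameter for this design
    return max(policy_grid, key=lambda p: task_score(design, p, disturbances))


def upper_level(design_candidates, disturbances, policy_grid):
    # Stand-in for Bayesian optimization: score each design with its own best policy
    best = None
    for d in design_candidates:
        p = lower_level(d, disturbances, policy_grid)
        s = task_score(d, p, disturbances)
        if best is None or s > best[2]:
            best = (d, p, s)
    return best  # (design, policy, score)


# Usage: random search over designs with an integer disturbance set and policy grid
rng = random.Random(0)
designs = [rng.uniform(0.0, 5.0) for _ in range(20)]
print(upper_level(designs, [-1, 0, 1], [-2, -1, 0, 1, 2]))
```

Because the inner loop re-optimizes the policy for each design, the outer loop compares designs on their best achievable robustness rather than under one fixed controller, which is the point of co-design.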

Caging in Motion: Characterizing Robustness in Manipulation through Energy Margin and Dynamic Caging Analysis

Apr 18, 2024

Abstract:To develop robust manipulation policies, quantifying robustness is essential. Evaluating robustness in general dexterous manipulation, nonetheless, poses significant challenges due to complex hybrid dynamics, the combinatorial explosion of possible contact interactions, global geometry, etc. This paper introduces "caging in motion", an approach for analyzing manipulation robustness through energy margins and caging-based analysis. Our method assesses manipulation robustness by measuring the energy margin to failure and extends traditional caging concepts for a global analysis of dynamic manipulation. This global analysis is facilitated by a kinodynamic planning framework that naturally integrates global geometry, contact changes, and robot compliance. We validate the effectiveness of our approach in simulation and real-world experiments across multiple dynamic manipulation scenarios, highlighting its potential to predict manipulation success and robustness.
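A simplified, quasi-static reading of "energy margin to failure" is the lowest energy barrier the object must climb to escape a cage. On a discretized energy map this is a minimax (bottleneck) path problem, solvable with a Dijkstra variant that tracks the peak energy along a path instead of its sum. The sketch below assumes a 2-D grid of potential energies and a caller-supplied `is_escape` test (both hypothetical); it omits the dynamics, contact changes, and compliance that the paper's kinodynamic planner handles.

```python
import heapq


def energy_margin(energy, start, is_escape):
    """Lowest energy barrier separating `start` from any escape cell (toy sketch).

    energy: 2-D list of potential energies over a discretized configuration space.
    Returns the minimum, over 4-connected paths to an escape cell, of the peak
    energy along the path minus the start energy; inf if no escape is reachable.
    """
    rows, cols = len(energy), len(energy[0])
    r0, c0 = start
    best = {start: energy[r0][c0]}  # lowest known peak energy needed to reach each cell
    heap = [(energy[r0][c0], start)]
    while heap:
        peak, (r, c) = heapq.heappop(heap)
        if peak > best[(r, c)]:
            continue  # stale heap entry
        if is_escape((r, c)):
            return peak - energy[r0][c0]
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols:
                cand = max(peak, energy[nr][nc])  # bottleneck cost, not a sum
                if cand < best.get((nr, nc), float("inf")):
                    best[(nr, nc)] = cand
                    heapq.heappush(heap, (cand, (nr, nc)))
    return float("inf")  # fully caged: no escape cell reachable


# Usage: a bowl-shaped energy map whose lowest rim cell has energy 4
bowl = [
    [5, 5, 5, 5, 5],
    [5, 3, 2, 3, 5],
    [5, 2, 0, 2, 5],
    [5, 3, 1, 3, 5],
    [5, 5, 4, 5, 5],
]


def on_border(cell):
    # Hypothetical failure condition: reaching the edge of the map means escape
    return cell[0] in (0, 4) or cell[1] in (0, 4)


print(energy_margin(bowl, (2, 2), on_border))  # 4
```

Popped peaks never decrease, so the first escape cell removed from the heap already carries the minimal barrier.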

WebArena: A Realistic Web Environment for Building Autonomous Agents

Jul 25, 2023

Abstract:With generative AI advances, the exciting potential for autonomous agents to manage daily tasks via natural language commands has emerged. However, current agents are primarily created and tested in simplified synthetic environments, substantially limiting real-world scenario representation. In this paper, we build an environment for agent command and control that is highly realistic and reproducible. Specifically, we focus on agents that perform tasks on websites, and we create an environment with fully functional websites from four common domains: e-commerce, social forum discussions, collaborative software development, and content management. Our environment is enriched with tools (e.g., a map) and external knowledge bases (e.g., user manuals) to encourage human-like task-solving. Building upon our environment, we release a set of benchmark tasks focusing on evaluating the functional correctness of task completions. The tasks in our benchmark are diverse, long-horizon, and are designed to emulate tasks that humans routinely perform on the internet. We design and implement several autonomous agents, integrating recent techniques such as reasoning before acting. The results demonstrate that solving complex tasks is challenging: our best GPT-4-based agent only achieves an end-to-end task success rate of 10.59%. These results highlight the need for further development of robust agents, that current state-of-the-art LMs are far from perfect performance in these real-life tasks, and that WebArena can be used to measure such progress. Our code, data, environment reproduction resources, and video demonstrations are publicly available at https://webarena.dev/.

Enhancing Dexterity in Robotic Manipulation via Hierarchical Contact Exploration

Jul 01, 2023

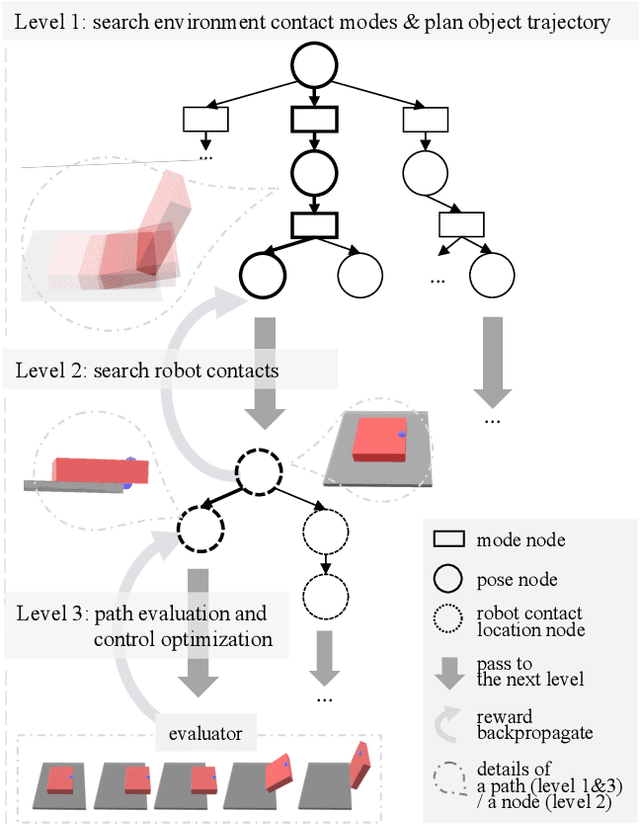

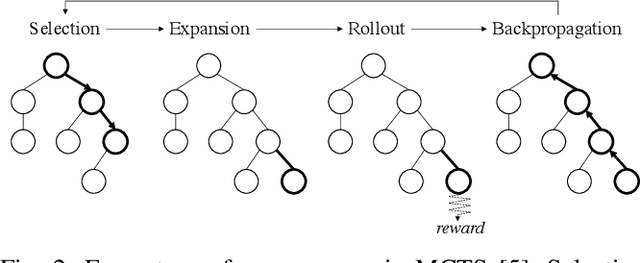

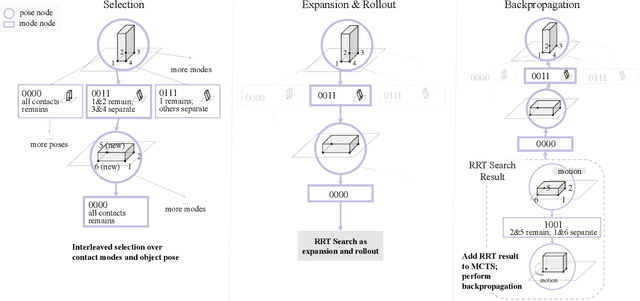

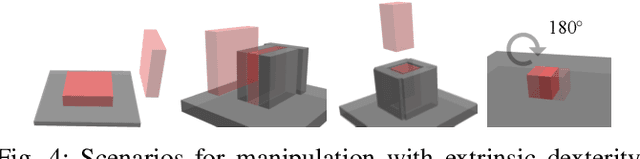

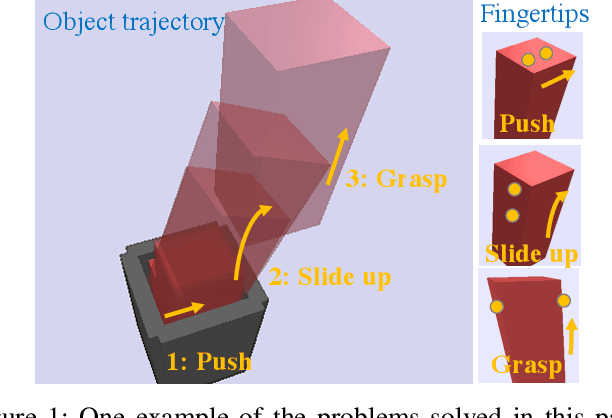

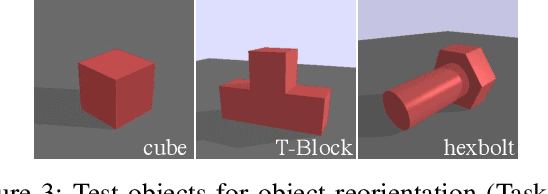

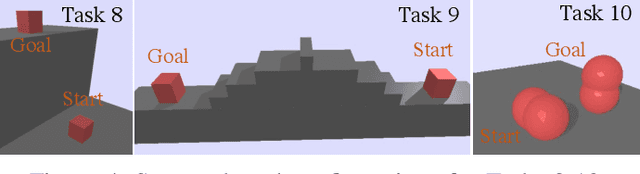

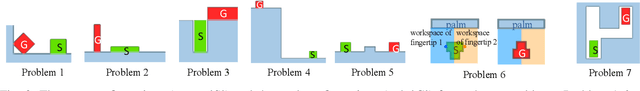

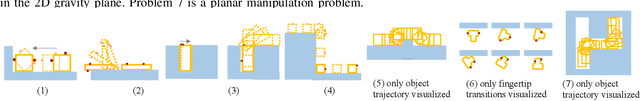

Abstract:We present a hierarchical planning framework for dexterous robotic manipulation (HiDex). This framework exploits in-hand and extrinsic dexterity by actively exploring contacts. It generates rigid-body motions and complex contact sequences. Our framework is based on Monte-Carlo Tree Search (MCTS) and has three levels: 1) planning object motions and environment contact modes; 2) planning robot contacts; 3) path evaluation and control optimization that passes the rewards to the upper levels. This framework offers two main advantages. First, it allows efficient global reasoning over the high-dimensional, complex space created by contacts. It solves a diverse set of manipulation tasks that require dexterity, both intrinsic (using the fingers) and extrinsic (also using the environment), mostly in seconds. Second, our framework allows the incorporation of expert knowledge and customizable setups in task mechanics and models. It requires only minor modifications to accommodate different scenarios and robots. Hence, it could provide a flexible and generalizable solution for various manipulation tasks. As examples, we analyze the results on 7 hand configurations and 15 scenarios. We demonstrate 8 of them on two robot platforms.
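HiDex builds on Monte-Carlo Tree Search. The generic MCTS loop with UCT selection (select, expand, simulate, back-propagate) looks roughly like the sketch below. It is a single-level illustration only: the action names, the depth-limited sequence state, and the `match_reward` function are hypothetical placeholders, whereas the paper's framework spans three levels and obtains rewards from path evaluation and control optimization.

```python
import math
import random


class Node:
    def __init__(self, state, parent=None):
        self.state = state      # tuple of actions chosen so far
        self.parent = parent
        self.children = {}      # action -> Node
        self.visits = 0
        self.value = 0.0        # sum of rewards backed up through this node


def uct_child(node, c):
    # UCT score: mean reward (exploitation) plus a bonus for rarely visited children
    return max(
        node.children.values(),
        key=lambda ch: ch.value / ch.visits + c * math.sqrt(math.log(node.visits) / ch.visits),
    )


def mcts(actions, depth, reward, iterations=2000, c=1.4, seed=0):
    """Return the most-visited first action after `iterations` rounds of UCT search."""
    rng = random.Random(seed)
    root = Node(())
    for _ in range(iterations):
        node = root
        # 1) Selection: descend while the node is non-terminal and fully expanded
        while len(node.state) < depth and len(node.children) == len(actions):
            node = uct_child(node, c)
        # 2) Expansion: add one untried action to a non-terminal node
        if len(node.state) < depth:
            a = rng.choice([a for a in actions if a not in node.children])
            node.children[a] = Node(node.state + (a,), node)
            node = node.children[a]
        # 3) Simulation: complete the sequence with uniformly random actions
        state = node.state
        while len(state) < depth:
            state += (rng.choice(actions),)
        r = reward(state)
        # 4) Backpropagation: update statistics from the new node up to the root
        while node is not None:
            node.visits += 1
            node.value += r
            node = node.parent
    return max(root.children, key=lambda a: root.children[a].visits)


# Usage with a hypothetical target sequence of primitives
target = ("pivot", "push", "grasp")


def match_reward(seq):
    # Fraction of positions that agree with the target sequence
    return sum(a == b for a, b in zip(seq, target)) / len(target)


print(mcts(["push", "pivot", "grasp"], depth=3, reward=match_reward))
```

Returning the most-visited action rather than the highest mean is the usual conservative choice, since visit counts are less sensitive to a few lucky rollouts.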

Learning Preconditions of Hybrid Force-Velocity Controllers for Contact-Rich Manipulation

Jun 25, 2022

Abstract:Robots need to manipulate objects in constrained environments like shelves and cabinets when assisting humans in everyday settings like homes and offices. These constraints make manipulation difficult by reducing grasp accessibility, so robots need to use non-prehensile strategies that leverage object-environment contacts to perform manipulation tasks. To tackle the challenge of planning and controlling contact-rich behaviors in such settings, this work uses Hybrid Force-Velocity Controllers (HFVCs) as the skill representation and plans skill sequences with learned preconditions. While HFVCs naturally enable robust and compliant contact-rich behaviors, solvers that synthesize them have traditionally relied on precise object models and closed-loop feedback on object pose, which are difficult to obtain in constrained environments due to occlusions. We first relax HFVCs' need for precise models and feedback with our HFVC synthesis framework, then learn a point-cloud-based precondition function to classify where HFVC executions will still be successful despite modeling inaccuracies. Finally, we use the learned precondition in a search-based task planner to complete contact-rich manipulation tasks in a shelf domain. Our method achieves a task success rate of 73.2%, outperforming the 51.5% achieved by a baseline without the learned precondition. While the precondition function is trained in simulation, it can also transfer to a real-world setup without further fine-tuning.
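The role of a learned precondition inside a search-based planner can be sketched as pruning: a skill is expanded from a state only if the precondition model predicts success above a threshold. In the sketch below, breadth-first search stands in for the paper's planner, the skill transitions are assumed known, and `precondition` is a hand-written hypothetical function in place of the learned point-cloud classifier; the 1-D integer states are likewise illustrative.

```python
from collections import deque


def plan(start, goal, skills, precondition, threshold=0.5, max_expansions=10_000):
    """Breadth-first search over skill sequences, pruned by a precondition model.

    skills: dict mapping skill name -> transition function (state -> next state)
    precondition: (state, skill name) -> predicted probability that the skill succeeds
    """
    frontier = deque([start])
    parent = {start: None}  # state -> (previous state, skill used to reach it)
    expansions = 0
    while frontier and expansions < max_expansions:
        s = frontier.popleft()
        expansions += 1
        if s == goal:
            seq = []
            while parent[s] is not None:
                s, name = parent[s]
                seq.append(name)
            return seq[::-1]
        for name, step in skills.items():
            if precondition(s, name) < threshold:
                continue  # skip skills the model predicts will fail from this state
            nxt = step(s)
            if nxt not in parent:
                parent[nxt] = (s, name)
                frontier.append(nxt)
    return None  # no plan found within the expansion budget


# Usage: hypothetical 1-D toy skills; "pull" is predicted to fail from odd states
skills = {"slide": lambda s: s + 1, "pull": lambda s: s + 2}


def toy_precondition(s, name):
    return 0.2 if (name == "pull" and s % 2 == 1) else 0.9


print(plan(0, 3, skills, toy_precondition))  # ['pull', 'slide']
```

With `threshold=0.0` nothing is pruned and the planner returns `['slide', 'pull']`, which relies on a "pull" from an odd state that the toy precondition flags as likely to fail.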

Contact Mode Guided Motion Planning for Dexterous Manipulation

May 30, 2021

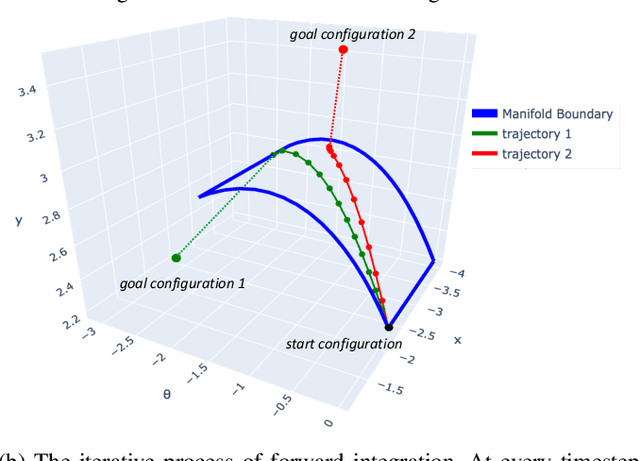

Abstract:Within the field of robotic manipulation, a central goal is to replicate the human ability to manipulate any object in any situation using a sequence of manipulation primitives such as grasping, pushing, inserting, sliding, etc. Conceptually, each manipulation primitive restricts the object and robot to move on a lower-dimensional manifold defined by the primitive's dynamic equations of motion. Likewise, a manipulation sequence represents a dynamically feasible trajectory that traverses multiple manifolds. To manipulate any object in any situation, robotic systems must include the ability to automatically synthesize manipulation primitives (manifolds) and sequence those primitives into a coherent plan (find a path across the manifolds). This paper investigates a principled approach for solving dexterous manipulation planning. This approach is based on rapidly-exploring random trees that use contact modes to guide tree expansion along primitive manifolds. This paper extends this algorithm from 2D domains to 3D domains. We validated our algorithm on a large collection of simulated 3D manipulation tasks. These tasks required our algorithm to sequence between 6 and 42 manipulation primitives (i.e., distinct contact modes). We believe this work represents an important step towards robotic manipulation capabilities which generalize across objects and environments.
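As a rough illustration of "contact modes guide tree expansion along primitive manifolds", the toy RRT below gives each hypothetical mode a 1-D manifold (motion along a single axis of the unit square). Each extension tries every mode and keeps the one that ends closest to the sampled target, so the resulting path is a sequence of mode segments. The modes here are simply axis constraints, not contact modes derived from contact mechanics, and `MODES` and `mode_guided_rrt` are illustrative names.

```python
import math
import random

# Hypothetical stand-ins for contact modes: each one confines motion to a single
# axis (a 1-D "manifold") of a 2-D unit-square configuration space.
MODES = {"slide_x": (1.0, 0.0), "slide_y": (0.0, 1.0)}


def extend(q, target, direction, step):
    """Move from q toward target, only along `direction`, by at most `step`."""
    dx, dy = direction
    proj = (target[0] - q[0]) * dx + (target[1] - q[1]) * dy
    t = max(-step, min(step, proj))
    return (q[0] + t * dx, q[1] + t * dy)


def mode_guided_rrt(start, goal, iters=2000, step=0.2, goal_bias=0.2, tol=1e-9, seed=0):
    rng = random.Random(seed)
    nodes, parent, mode = [start], [None], [None]
    for _ in range(iters):
        # Sample a target in the unit square, occasionally the goal itself
        target = goal if rng.random() < goal_bias else (rng.random(), rng.random())
        i = min(range(len(nodes)), key=lambda k: math.dist(nodes[k], target))
        # Try every mode and keep the extension that ends closest to the target
        name, q_new = min(
            ((m, extend(nodes[i], target, d, step)) for m, d in MODES.items()),
            key=lambda mq: math.dist(mq[1], target),
        )
        if q_new == nodes[i]:
            continue  # no progress possible along any mode
        nodes.append(q_new)
        parent.append(i)
        mode.append(name)
        if math.dist(q_new, goal) < tol:
            # Walk back to the root to recover the (mode, configuration) sequence
            path, j = [], len(nodes) - 1
            while j is not None:
                path.append((mode[j], nodes[j]))
                j = parent[j]
            return path[::-1]
    return None


# Usage: reaching the opposite corner requires switching between both modes
for m, q in mode_guided_rrt((0.0, 0.0), (1.0, 1.0)):
    print(m, q)
```

Each returned entry pairs the mode used on the incoming edge with the resulting configuration; the start carries `None`.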

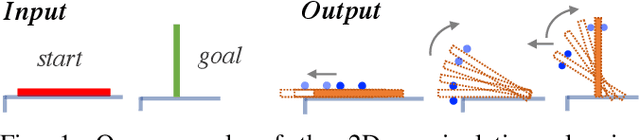

Contact Mode Guided Sampling-Based Planning for Quasistatic Dexterous Manipulation in 2D

Nov 03, 2020

Abstract:The discontinuities and multi-modality introduced by contacts make manipulation planning challenging. Many previous works avoid this problem by pre-designing a set of high-level motion primitives like grasping and pushing. However, such motion primitives are often not adequate to describe dexterous manipulation motions. In this work, we propose a method for automatic dexterous manipulation planning at a more primitive level. The key idea is to use contact modes to guide the search in a sampling-based planning framework. Our method can automatically generate contact transitions and motion trajectories under the quasistatic assumption. In the experiments, this method sometimes generates motions that are often pre-designed as motion primitives, as well as dexterous motions that are more task-specific.
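Part of what makes mode-guided search necessary is how quickly the candidate modes multiply. Under a naive labeling in which each planar contact is separating, sticking, or sliding in one of two tangential directions, n contacts yield 4^n combinations. The sketch below enumerates them with `itertools.product` and filters with an `is_feasible` callback; that callback is a hypothetical placeholder for the kinematic and quasistatic checks a planner would actually apply, and the abstract does not say how the paper enumerates modes.

```python
from itertools import product

# Naive per-contact labels for planar (2-D) contacts:
# separating, sticking, or sliding in one of two tangential directions.
PLANAR_CONTACT_MODES = ("separate", "stick", "slide_left", "slide_right")


def enumerate_contact_modes(num_contacts, is_feasible=lambda modes: True):
    """Yield every assignment of a mode to each contact that passes `is_feasible`.

    Naive enumeration produces 4**num_contacts candidates; `is_feasible` is a
    hypothetical stand-in for the checks a real planner would use to prune them.
    """
    for modes in product(PLANAR_CONTACT_MODES, repeat=num_contacts):
        if is_feasible(modes):
            yield modes


# Usage: two contacts, then keep only modes where the object is still touched somewhere
all_modes = list(enumerate_contact_modes(2))
touching = list(enumerate_contact_modes(2, lambda ms: any(m != "separate" for m in ms)))
print(len(all_modes), len(touching))  # 16 15
```

Using a generator keeps memory flat even when the candidate count is large, although the enumeration time still grows as 4^n.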