Shaohang Han

Exact Smooth Reformulations for Trajectory Optimization Under Signal Temporal Logic Specifications

Nov 10, 2025

Abstract:We study motion planning under Signal Temporal Logic (STL), a useful formalism for specifying spatial-temporal requirements. We pose STL synthesis as a trajectory optimization problem leveraging the STL robustness semantics. To obtain a differentiable problem without approximation error, we introduce an exact reformulation of the max and min operators. The resulting method is exact, smooth, and sound. We validate it in numerical simulations, demonstrating its practical performance.
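For readers unfamiliar with STL robustness semantics, the following is a minimal sketch of where the max and min operators come from. It only illustrates the standard quantitative semantics of "always" and "eventually" on a sampled signal; it is not the paper's exact smooth reformulation, and the signal values, the predicate `x > 1`, and the function names are assumptions made for illustration.

```python
def always(rho):
    """Robustness of 'always phi': the worst-case value of phi over time."""
    return min(rho)


def eventually(rho):
    """Robustness of 'eventually phi': the best-case value of phi over time."""
    return max(rho)


# Hypothetical sampled signal x(t) and predicate x > 1,
# whose robustness at each time step is x(t) - 1.
signal = [0.5, 1.5, 2.0, 3.0]
rho = [x - 1.0 for x in signal]

rho_always = always(rho)          # negative: the predicate is violated at t = 0
rho_eventually = eventually(rho)  # positive: the predicate holds at some time
```

Because `min` and `max` are non-smooth, a gradient-based trajectory optimizer cannot use these expressions directly, which is the gap the abstract's reformulation addresses.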

Risk-Aware Robot Control in Dynamic Environments Using Belief Control Barrier Functions

Apr 05, 2025

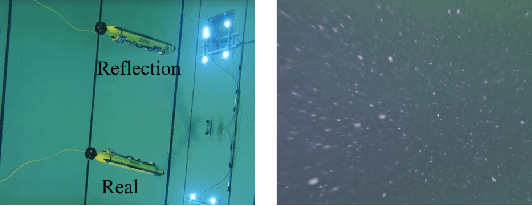

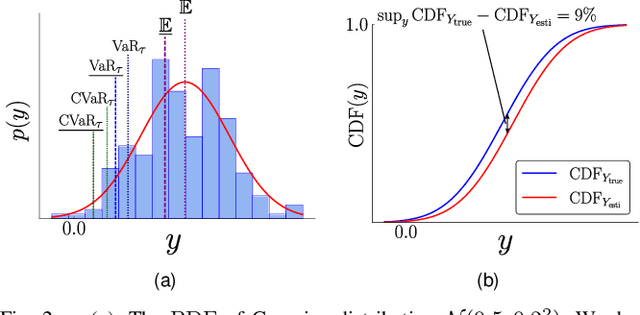

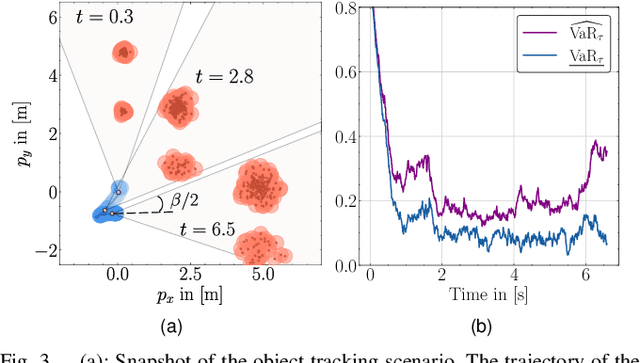

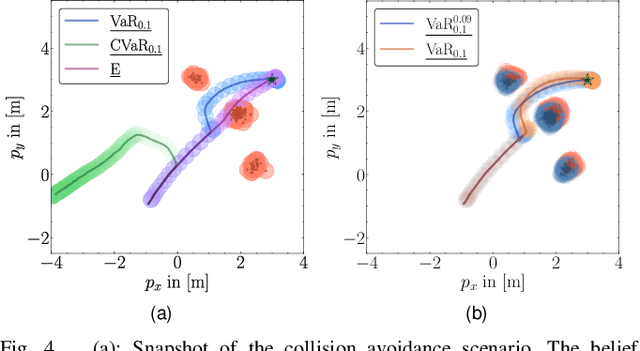

Abstract:Ensuring safety for autonomous robots operating in dynamic environments can be challenging due to factors such as unmodeled dynamics, noisy sensor measurements, and partial observability. To account for these limitations, it is common to maintain a belief distribution over the true state. This belief could be a non-parametric, sample-based representation to capture uncertainty more flexibly. In this paper, we propose a novel form of Belief Control Barrier Functions (BCBFs) specifically designed to ensure safety in dynamic environments under stochastic dynamics and a sample-based belief about the environment state. Our approach incorporates provable concentration bounds on tail risk measures into BCBFs, effectively addressing possible multimodal and skewed belief distributions represented by samples. Moreover, the proposed method demonstrates robustness against distributional shifts up to a predefined bound. We validate the effectiveness and real-time performance (approximately 1 kHz) of the proposed method through two simulated underwater robotic applications: object tracking and dynamic collision avoidance.
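As a rough illustration of a tail risk measure computed from a sample-based belief, the sketch below estimates Conditional Value-at-Risk (CVaR) by averaging the worst samples. The abstract does not name the specific risk measure or estimator, so the choice of CVaR, the function name `empirical_cvar`, and the sample values are assumptions; the paper's concentration bounds are not reproduced here.

```python
import math


def empirical_cvar(losses, alpha):
    """Average of the worst ceil((1 - alpha) * n) sampled losses.

    A simple tail-average estimator of CVaR at level alpha, assumed here
    purely for illustration.
    """
    n = len(losses)
    k = max(1, math.ceil((1.0 - alpha) * n))
    worst = sorted(losses, reverse=True)[:k]
    return sum(worst) / k


# Hypothetical loss samples, e.g. negated safety margins drawn from a belief.
samples = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0]
cvar_half = empirical_cvar(samples, alpha=0.5)
```

Unlike the sample mean, this estimate responds to heavy or skewed tails, which is why tail risk measures suit multimodal beliefs.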

Co-Designing Tools and Control Policies for Robust Manipulation

Sep 17, 2024

Abstract:Inherent robustness in manipulation is prevalent in biological systems and critical for robotic manipulation systems due to real-world uncertainties and disturbances. This robustness relies not only on robust control policies but also on the design characteristics of the end-effectors. This paper introduces a bi-level optimization approach to co-designing tools and control policies to achieve robust manipulation. The approach employs reinforcement learning for lower-level control policy learning and multi-task Bayesian optimization for upper-level design optimization. Diverging from prior approaches, we incorporate caging-based robustness metrics into both levels, ensuring manipulation robustness against disturbances and environmental variations. Our method is evaluated in four non-prehensile manipulation environments, demonstrating improvements in task success rate under disturbances and environment changes. A real-world experiment is also conducted to validate the framework's practical effectiveness.

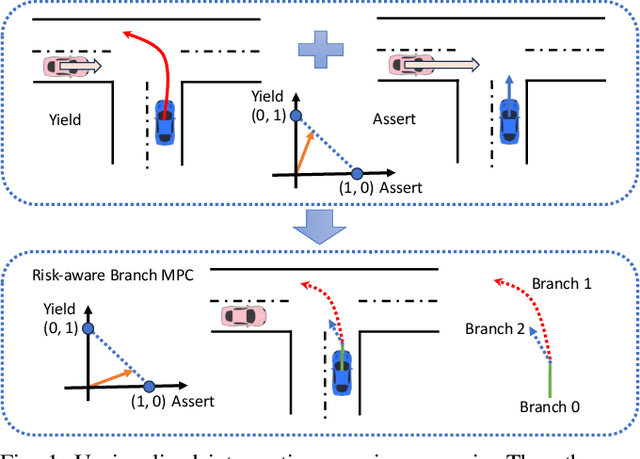

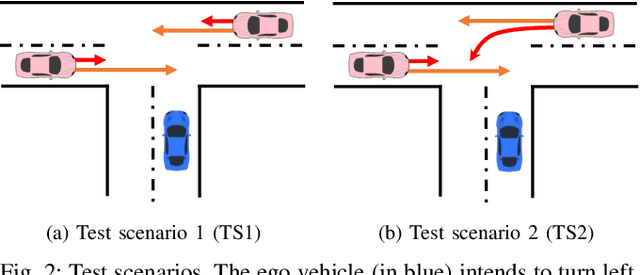

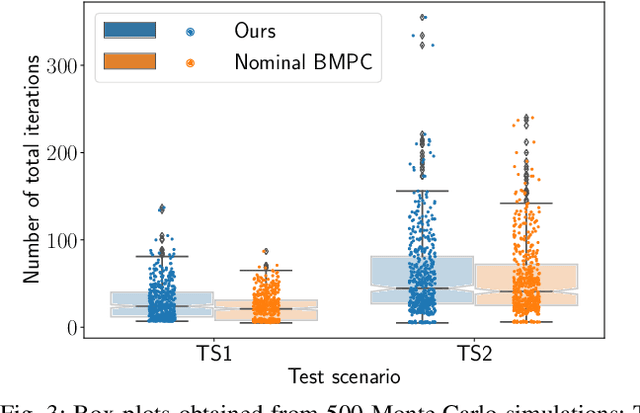

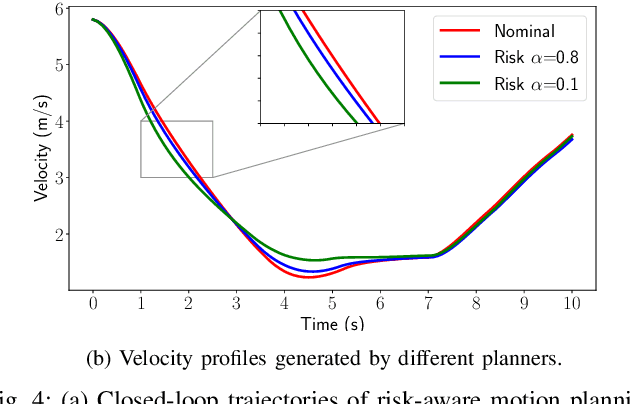

An Efficient Risk-aware Branch MPC for Automated Driving that is Robust to Uncertain Vehicle Behaviors

Mar 27, 2024

Abstract:One of the critical challenges in automated driving is ensuring safety of automated vehicles despite the unknown behavior of the other vehicles. Although motion prediction modules are able to generate a probability distribution associated with various behavior modes, their probabilistic estimates are often inaccurate, thus leading to a possibly unsafe trajectory. To overcome this challenge, we propose a risk-aware motion planning framework that appropriately accounts for the ambiguity in the estimated probability distribution. We formulate the risk-aware motion planning problem as a min-max optimization problem and develop an efficient iterative method by incorporating a regularization term in the probability update step. Via extensive numerical studies, we validate the convergence of our method and demonstrate its advantages compared to the state-of-the-art approaches.

An Efficient Game-Theoretic Planner for Automated Lane Merging with Multi-Modal Behavior Understanding

Dec 02, 2023

Abstract:In this paper, we propose a novel behavior planner that combines game theory with search-based planning for automated lane merging. Specifically, inspired by human drivers, we model the interaction between vehicles as a gap selection process. To overcome the challenge of multi-modal behavior exhibited by the surrounding vehicles, we formulate the trajectory selection as a matrix game and compute an equilibrium. Next, we validate our proposed planner in the high-fidelity simulator CARLA and demonstrate its effectiveness in handling interactions in dense traffic scenarios.
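To make the matrix-game idea concrete, here is a minimal sketch that enumerates pure-strategy Nash equilibria of a two-player cost game. The abstract does not specify the equilibrium concept or solver, so the pure-strategy choice, the function name, and the cost values are all assumptions for illustration only.

```python
def pure_nash_equilibria(cost_a, cost_b):
    """Return all (i, j) where neither player can lower its own cost
    by unilaterally switching its action."""
    rows, cols = len(cost_a), len(cost_a[0])
    equilibria = []
    for i in range(rows):
        for j in range(cols):
            # Player A (rows) cannot improve against column j.
            a_best = all(cost_a[i][j] <= cost_a[k][j] for k in range(rows))
            # Player B (columns) cannot improve against row i.
            b_best = all(cost_b[i][j] <= cost_b[i][l] for l in range(cols))
            if a_best and b_best:
                equilibria.append((i, j))
    return equilibria


# Hypothetical costs. Rows: ego merges ahead / merges behind.
# Columns: other vehicle yields / does not yield.
ego_cost = [[1, 10], [3, 2]]
other_cost = [[2, 10], [1, 3]]

equilibria = pure_nash_equilibria(ego_cost, other_cost)
```

With these made-up costs the only equilibrium is the pair in which the ego vehicle merges ahead and the other vehicle yields. In general a pure equilibrium may not exist or may not be unique, in which case a mixed-strategy solver would be needed.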

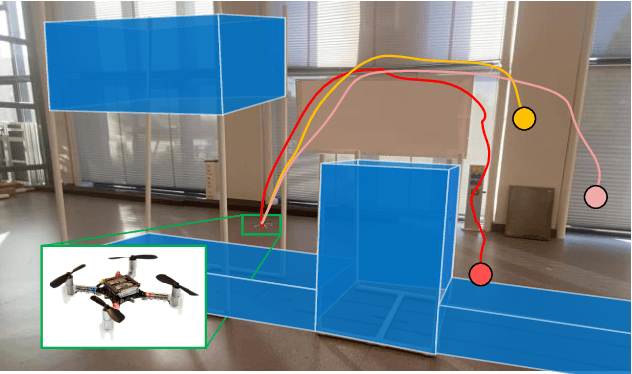

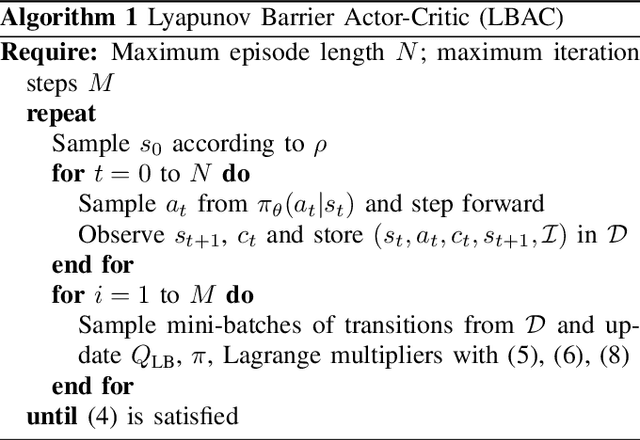

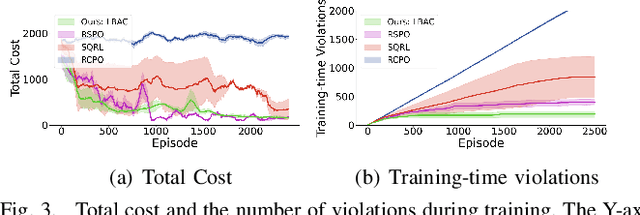

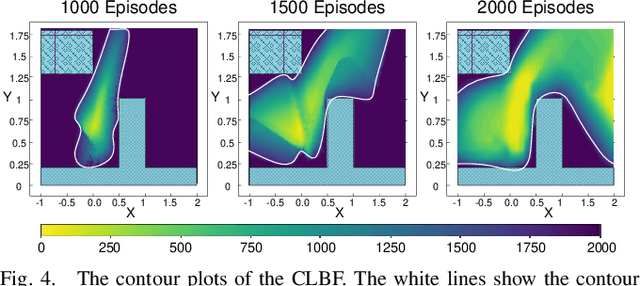

Reinforcement Learning for Safe Robot Control using Control Lyapunov Barrier Functions

May 16, 2023

Abstract:Reinforcement learning (RL) exhibits impressive performance when managing complicated control tasks for robots. However, its wide application to physical robots is limited by the absence of strong safety guarantees. To overcome this challenge, this paper explores the control Lyapunov barrier function (CLBF) to analyze the safety and reachability solely based on data without explicitly employing a dynamic model. We also propose the Lyapunov barrier actor-critic (LBAC), a model-free RL algorithm, to search for a controller that satisfies the data-based approximation of the safety and reachability conditions. The proposed approach is demonstrated through simulation and real-world robot control experiments, i.e., a 2D quadrotor navigation task. The experimental findings reveal this approach's effectiveness in reachability and safety, surpassing other model-free RL methods.
